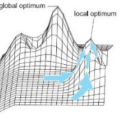

We propose a simple iterative (SI) algorithm for the maxcut problem that fully exploits an equivalent continuous formulation. It requires no rounding at all and has three advantages: all subproblems have explicit analytic solutions, the cut values are updated monotonically, and the iteration points converge to a local optimum in finitely many steps under an appropriate subgradient selection. Numerical experiments on G-set demonstrate the performance. In particular, the ratios between the best cut values achieved by SI and those achieved by some advanced combinatorial algorithms in [Ann. Oper. Res. 248 (2017) 365] are at least $0.986$, and they can be further improved to at least $0.997$ by a preliminary attempt to break out of local optima.
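To make the notions of "cut value", "monotone update" and "local optimum" concrete, here is a minimal sketch of a generic single-vertex-flip local search. It is not the SI algorithm from the abstract, which works on a continuous formulation; the function names `cut_value` and `one_flip_local_search` and the edge-list representation are assumptions made for illustration only.

```python
def cut_value(edges, side):
    """Total weight of the edges whose endpoints lie on opposite sides."""
    return sum(w for u, v, w in edges if side[u] != side[v])


def one_flip_local_search(n, edges, side):
    """Flip single vertices while a flip strictly increases the cut value.

    Illustrative baseline only (NOT the SI algorithm): every accepted flip
    raises the cut value, so the values are monotone and the loop stops at
    a partition that no single flip can improve.
    """
    side = list(side)
    improved = True
    while improved:
        improved = False
        for v in range(n):
            gain = 0
            for a, b, w in edges:
                if v in (a, b):
                    # Flipping v cuts an uncut incident edge (+w)
                    # and uncuts a cut incident edge (-w).
                    gain += w if side[a] == side[b] else -w
            if gain > 0:
                side[v] = 1 - side[v]
                improved = True
    return side


# Unit-weight 4-cycle, starting from the trivial partition.
edges = [(0, 1, 1), (1, 2, 1), (2, 3, 1), (3, 0, 1)]
partition = one_flip_local_search(4, edges, [0, 0, 0, 0])
print(partition, cut_value(edges, partition))
```

On this small bipartite example the search reaches the partition that cuts all four edges; on general graphs it may stop at a local optimum that is not globally optimal.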

Related content

Many convolutional neural networks (CNNs) rely on progressive downsampling of their feature maps to increase the network's receptive field and decrease computational cost. However, this comes at the price of losing granularity in the feature maps, limiting the ability to correctly understand images or recover fine detail in dense prediction tasks. To address this, common practice is to replace the last few downsampling operations in a CNN with dilated convolutions, which retains the feature map resolution without reducing the receptive field, albeit at increased computational cost. This makes it possible to trade off predictive performance against cost, depending on the output feature resolution. By either regularly downsampling or not downsampling the entire feature map, existing work implicitly treats all regions of the input image and subsequent feature maps as equally important, which generally does not hold. We propose an adaptive downsampling scheme that generalizes the above idea by processing informative regions at a higher resolution than less informative ones. In a variety of experiments, we demonstrate the versatility of our adaptive downsampling strategy and empirically show that it improves the cost-accuracy trade-off of various established CNNs.
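The claim that dilation preserves the receptive field while keeping resolution can be checked with the standard receptive-field recurrence. The sketch below is an illustration under assumed layer settings (three 3x3 convolutions); the helper `receptive_field` and the two configurations are hypothetical and not taken from the paper.

```python
def receptive_field(layers):
    """Return (receptive field, output stride) of a stack of conv layers.

    Each layer is a tuple (kernel_size, stride, dilation). The recurrence
    tracks `jump`, the distance in input pixels between adjacent outputs.
    """
    r, jump = 1, 1
    for kernel, stride, dilation in layers:
        r += (kernel - 1) * dilation * jump
        jump *= stride
    return r, jump


# Assumed toy networks of three 3x3 convolutions.
strided = [(3, 1, 1), (3, 2, 1), (3, 1, 1)]  # downsample in the second layer
dilated = [(3, 1, 1), (3, 1, 1), (3, 1, 2)]  # no downsampling, dilate the last layer

print(receptive_field(strided))  # (9, 2)
print(receptive_field(dilated))  # (9, 1)
```

Both stacks see a 9-pixel-wide window, but the dilated one keeps the output at full resolution (output stride 1 instead of 2), which is where the extra computational cost comes from.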

The Laplace approximation is a very useful tool in Bayesian inference; it asserts that the posterior behaves nearly like a Gaussian. \cite{SpLaplace2022} established some rather accurate finite sample results about the quality of the Laplace approximation in terms of the so-called effective dimension $p$ under the critical dimension constraint $p^{3} \ll n$. However, this condition can be too restrictive for many applications, such as the error-in-operator problem or deep neural networks. This paper addresses the question of whether the dimensionality condition can be relaxed and the accuracy of approximation can be improved if the target of estimation is low dimensional while the nuisance parameter is high or infinite dimensional. Under mild conditions, the marginal posterior can be approximated by a Gaussian mixture, and the accuracy of the approximation depends only on the target dimension. Under the condition $p^{2} \ll n$, or in some special situations such as semi-orthogonality, the Gaussian mixture can be replaced by a single Gaussian distribution, leading to a classical Laplace result. The second result greatly benefits from the recent advances in Gaussian comparison from \cite{GNSUl2017}. The results are illustrated and specified for the case of the error-in-operator model.

Learning-augmented algorithms have been attracting increasing interest, but have only recently been considered in the setting of explorable uncertainty where precise values of uncertain input elements can be obtained by a query and the goal is to minimize the number of queries needed to solve a problem. We study learning-augmented algorithms for sorting and hypergraph orientation under uncertainty, assuming access to untrusted predictions for the uncertain values. Our algorithms provide improved performance guarantees for accurate predictions while maintaining worst-case guarantees that are best possible without predictions. For hypergraph orientation, for any $\gamma \geq 2$, we give an algorithm that achieves a competitive ratio of $1+1/\gamma$ for correct predictions and $\gamma$ for arbitrarily wrong predictions. For sorting, we achieve an optimal solution for accurate predictions while still being $2$-competitive for arbitrarily wrong predictions. These tradeoffs are the best possible. We also consider different error metrics and show that the performance of our algorithms degrades smoothly with the prediction error in all the cases where this is possible.

D-Adaptation is an approach to automatically setting the learning rate which asymptotically achieves the optimal rate of convergence for minimizing convex Lipschitz functions, with no back-tracking or line searches, and no additional function value or gradient evaluations per step. Our approach is the first hyper-parameter free method for this class without additional multiplicative log factors in the convergence rate. We present extensive experiments for SGD and Adam variants of our method, where the method automatically matches hand-tuned learning rates across more than a dozen diverse machine learning problems, including large-scale vision and language problems. An open-source implementation is available.

Convolutional codes with a maximum distance profile attain the largest possible column distances for the maximum number of time instants and thus have outstanding error-correcting capability, especially for streaming applications. Explicit constructions of such codes are scarce in the literature. In particular, known constructions of convolutional codes with rate $k/n$ and a maximum distance profile require a field of size at least exponential in $n$ for general code parameters. At the same time, the only known lower bound on the field size is the trivial bound that is linear in $n$. In this paper, we show that a finite field of size $\Omega_L(n^{L-1})$ is necessary for constructing convolutional codes with rate $k/n$ and a maximum distance profile of length $L$. As a direct consequence, this rules out the possibility of constructing convolutional codes with a maximum distance profile of length $L \geq 3$ over a finite field of size $O(n)$. Additionally, we present an explicit construction of convolutional codes with rate $k/n$ and a maximum distance profile of length $L = 1$ over a finite field of size $O(n^{\min\{k,n-k\}})$, achieving a smaller field size than known constructions with the same profile length.

Group testing makes it possible to identify infected individuals in a population using fewer tests than individual testing. To achieve this, group testing algorithms commonly assume knowledge of the number of infected individuals; nonadaptive and several adaptive algorithms fall into this category. Some adaptive algorithms, like binary splitting, operate without this assumption, but require a number of stages that may scale linearly with the size of the population. In this paper we contribute a new algorithm, which we term diagonal group testing, that enables a balance between the number of tests and the number of stages used. Diagonal group testing, like binary splitting, does not require knowledge of the number of infected individuals, yet unlike binary splitting, it is order-optimal w.r.t. the expected number of tests it requires and is guaranteed to succeed in a small number of stages that scales at most logarithmically with the size of the population. Numerical evaluations, for diagonal group testing and a hybrid approach we propose, support our theoretical findings.
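For readers unfamiliar with the baseline mentioned above, the following is a minimal sketch of one common variant of adaptive binary splitting. It is not the diagonal group testing algorithm from the abstract, and the function name `binary_splitting`, the noiseless test model, and the choice to remove only the identified individual after each round are assumptions made for illustration.

```python
def binary_splitting(infected, n):
    """Identify all infected individuals among 0..n-1 with pooled tests.

    Illustrative variant (NOT diagonal group testing): repeatedly test the
    remaining pool and, while it is positive, halve it to isolate one
    infected individual. Tests are assumed noiseless. Returns the sorted
    list of identified individuals and the number of tests used.
    """
    infected = set(infected)
    tests = 0

    def test(group):
        # A pooled test is positive if the group contains any infected member.
        nonlocal tests
        tests += 1
        return any(i in infected for i in group)

    remaining = list(range(n))
    found = []
    while remaining and test(remaining):
        group = remaining
        while len(group) > 1:
            half = group[: len(group) // 2]
            # If the first half is negative, the positive must be in the
            # second half, so that half needs no separate test.
            group = half if test(half) else group[len(group) // 2:]
        found.append(group[0])
        remaining = [i for i in remaining if i != group[0]]
    return sorted(found), tests


found, tests = binary_splitting({3, 10}, 16)
print(found, tests)
```

The procedure never uses the number of infected individuals, but every test depends on the outcome of the previous one, which is why the number of sequential stages can grow large.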

Inspired by certain regularization techniques for linear inverse problems, in this work we investigate the convergence properties of the Levenberg-Marquardt method using singular scaling matrices. Under a completeness condition, we show that the method is well-defined and establish its local quadratic convergence under an error bound assumption. We also prove that the search directions are gradient-related, which allows us to show that limit points of the sequence generated by a line-search version of the method are stationary for the sum-of-squares function. The usefulness of the method is illustrated with some examples of parameter identification in heat conduction problems, for which specific singular scaling matrices can be used to improve the quality of approximate solutions.

We demonstrate the relevance of an algorithm called generalized iterative scaling (GIS) or simultaneous multiplicative algebraic reconstruction technique (SMART) and its rescaled block-iterative version (RBI-SMART) in the field of optimal transport (OT). Many OT problems can be tackled through the use of entropic regularization by solving the Schr\"odinger problem, which is an information projection problem, that is, with respect to the Kullback--Leibler divergence. Here we consider problems that have several affine constraints. It is well-known that cyclic information projections onto the individual affine sets converge to the solution. In practice, however, even these individual projections are not explicitly available in general. In this paper, we exchange them for one GIS iteration. If this is done for every affine set, we obtain RBI-SMART. We provide a convergence proof using an interpretation of these iterations as two-step affine projections in an equivalent problem. This is done in a slightly more general setting than RBI-SMART, since we use a mix of explicitly known information projections and GIS iterations. We proceed to specialize this algorithm to several OT applications. First, we find the measure that minimizes the regularized OT divergence to a given measure under moment constraints. Second and third, the proposed framework yields an algorithm for solving a regularized martingale OT problem, as well as a relaxed version of the barycentric weak OT problem. Finally, we show an approach from the literature for unbalanced OT problems.

Standard contrastive learning approaches usually require a large number of negatives for effective unsupervised learning and often exhibit slow convergence. We suspect this behavior is due to the suboptimal selection of negatives used for offering contrast to the positives. We counter this difficulty by taking inspiration from support vector machines (SVMs) to present max-margin contrastive learning (MMCL). Our approach selects negatives as the sparse support vectors obtained via a quadratic optimization problem, and contrastiveness is enforced by maximizing the decision margin. As SVM optimization can be computationally demanding, especially in an end-to-end setting, we present simplifications that alleviate the computational burden. We validate our approach on standard vision benchmark datasets, demonstrating better performance in unsupervised representation learning over state-of-the-art, while having better empirical convergence properties.

We advocate the use of implicit fields for learning generative models of shapes and introduce an implicit field decoder for shape generation, aimed at improving the visual quality of the generated shapes. An implicit field assigns a value to each point in 3D space, so that a shape can be extracted as an iso-surface. Our implicit field decoder is trained to perform this assignment by means of a binary classifier. Specifically, it takes a point coordinate, along with a feature vector encoding a shape, and outputs a value which indicates whether the point is outside the shape or not. By replacing conventional decoders with our decoder for representation learning and generative modeling of shapes, we demonstrate superior results for tasks such as shape autoencoding, generation, interpolation, and single-view 3D reconstruction, particularly in terms of visual quality.