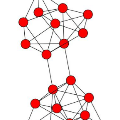

Virtualization technologies are the foundation of modern ICT infrastructure, enabling service providers to create dedicated virtual networks (VNs) that can support a wide range of smart city applications. These VNs continuously generate massive amounts of data, necessitating stringent reliability and security requirements. In virtualized network environments, however, multiple VNs may coexist on the same physical infrastructure and, if not properly isolated, may interfere with or provide unauthorized access to one another. The former causes performance degradation, while the latter compromises the security of VNs. Service assurance for infrastructure providers becomes significantly more complicated when a specific VN violates the isolation requirement. In an effort to address the isolation issue, this paper proposes isolation during virtual network embedding (VNE), the procedure of allocating VNs onto physical infrastructure. We define a simple abstracted concept of isolation levels to capture the variations in isolation requirements and then formulate isolation-aware VNE as an optimization problem with resource and isolation constraints. A deep reinforcement learning (DRL)-based VNE algorithm ISO-DRL_VNE, is proposed that considers resource and isolation constraints and is compared to the existing three state-of-the-art algorithms: NodeRank, Global Resource Capacity (GRC), and Mote-Carlo Tree Search (MCTS). Evaluation results show that the ISO-DRL_VNE algorithm outperforms others in acceptance ratio, long-term average revenue, and long-term average revenue-to-cost ratio by 6%, 13%, and 15%.

相關內容

Optical Wireless Communication networks (OWC) has emerged as a promising technology that enables high-speed and reliable communication bandwidth for a variety of applications. In this work, we investigated applying Random Linear Network Coding (RLNC) over NOMA-based OWC networks to improve the performance of the proposed high density indoor optical wireless network where users are divided into multicast groups, and each group contains users that slightly differ in their channel gains. Moreover, a fixed power allocation strategy is considered to manage interference among these groups and to avoid complexity. The performance of the proposed RLNC-NOMA scheme is evaluated in terms of average bit error rate and ergodic sum rate versus the power allocation ratio factor. The results show that the proposed scheme is more suitable for the considered network compared to the traditional NOMA and orthogonal transmission schemes.

Blockchain technology constitutes a paradigm shift in the way we conceive distributed architectures. A Blockchain system lets us build platforms where data are immutable and tamper-proof, with some constraints on the throughput and the amount of memory required to store the ledger. This paper aims to solve the issue of memory and performance requirements developing a multiple Blockchain architecture that mixes the benefits deriving from a public and a private Blockchain. This kind of approach enables small sensors - with memory and performance constraints - to join the network without worrying about the amount of data to store. The development is proposed following a context-aware approach, to make the architecture scalable and easy to use in different scenarios.

We present a deep learning method for composite and task-driven motion control for physically simulated characters. In contrast to existing data-driven approaches using reinforcement learning that imitate full-body motions, we learn decoupled motions for specific body parts from multiple reference motions simultaneously and directly by leveraging the use of multiple discriminators in a GAN-like setup. In this process, there is no need of any manual work to produce composite reference motions for learning. Instead, the control policy explores by itself how the composite motions can be combined automatically. We further account for multiple task-specific rewards and train a single, multi-objective control policy. To this end, we propose a novel framework for multi-objective learning that adaptively balances the learning of disparate motions from multiple sources and multiple goal-directed control objectives. In addition, as composite motions are typically augmentations of simpler behaviors, we introduce a sample-efficient method for training composite control policies in an incremental manner, where we reuse a pre-trained policy as the meta policy and train a cooperative policy that adapts the meta one for new composite tasks. We show the applicability of our approach on a variety of challenging multi-objective tasks involving both composite motion imitation and multiple goal-directed control.

The past few years have seen rapid progress in combining reinforcement learning (RL) with deep learning. Various breakthroughs ranging from games to robotics have spurred the interest in designing sophisticated RL algorithms and systems. However, the prevailing workflow in RL is to learn tabula rasa, which may incur computational inefficiency. This precludes continuous deployment of RL algorithms and potentially excludes researchers without large-scale computing resources. In many other areas of machine learning, the pretraining paradigm has shown to be effective in acquiring transferable knowledge, which can be utilized for a variety of downstream tasks. Recently, we saw a surge of interest in Pretraining for Deep RL with promising results. However, much of the research has been based on different experimental settings. Due to the nature of RL, pretraining in this field is faced with unique challenges and hence requires new design principles. In this survey, we seek to systematically review existing works in pretraining for deep reinforcement learning, provide a taxonomy of these methods, discuss each sub-field, and bring attention to open problems and future directions.

The development of autonomous agents which can interact with other agents to accomplish a given task is a core area of research in artificial intelligence and machine learning. Towards this goal, the Autonomous Agents Research Group develops novel machine learning algorithms for autonomous systems control, with a specific focus on deep reinforcement learning and multi-agent reinforcement learning. Research problems include scalable learning of coordinated agent policies and inter-agent communication; reasoning about the behaviours, goals, and composition of other agents from limited observations; and sample-efficient learning based on intrinsic motivation, curriculum learning, causal inference, and representation learning. This article provides a broad overview of the ongoing research portfolio of the group and discusses open problems for future directions.

Graph mining tasks arise from many different application domains, ranging from social networks, transportation, E-commerce, etc., which have been receiving great attention from the theoretical and algorithm design communities in recent years, and there has been some pioneering work using the hotly researched reinforcement learning (RL) techniques to address graph data mining tasks. However, these graph mining algorithms and RL models are dispersed in different research areas, which makes it hard to compare different algorithms with each other. In this survey, we provide a comprehensive overview of RL models and graph mining and generalize these algorithms to Graph Reinforcement Learning (GRL) as a unified formulation. We further discuss the applications of GRL methods across various domains and summarize the method description, open-source codes, and benchmark datasets of GRL methods. Finally, we propose possible important directions and challenges to be solved in the future. This is the latest work on a comprehensive survey of GRL literature, and this work provides a global view for researchers as well as a learning resource for researchers outside the domain. In addition, we create an online open-source for both interested researchers who want to enter this rapidly developing domain and experts who would like to compare GRL methods.

Data processing and analytics are fundamental and pervasive. Algorithms play a vital role in data processing and analytics where many algorithm designs have incorporated heuristics and general rules from human knowledge and experience to improve their effectiveness. Recently, reinforcement learning, deep reinforcement learning (DRL) in particular, is increasingly explored and exploited in many areas because it can learn better strategies in complicated environments it is interacting with than statically designed algorithms. Motivated by this trend, we provide a comprehensive review of recent works focusing on utilizing DRL to improve data processing and analytics. First, we present an introduction to key concepts, theories, and methods in DRL. Next, we discuss DRL deployment on database systems, facilitating data processing and analytics in various aspects, including data organization, scheduling, tuning, and indexing. Then, we survey the application of DRL in data processing and analytics, ranging from data preparation, natural language processing to healthcare, fintech, etc. Finally, we discuss important open challenges and future research directions of using DRL in data processing and analytics.

Reinforcement learning (RL) is a popular paradigm for addressing sequential decision tasks in which the agent has only limited environmental feedback. Despite many advances over the past three decades, learning in many domains still requires a large amount of interaction with the environment, which can be prohibitively expensive in realistic scenarios. To address this problem, transfer learning has been applied to reinforcement learning such that experience gained in one task can be leveraged when starting to learn the next, harder task. More recently, several lines of research have explored how tasks, or data samples themselves, can be sequenced into a curriculum for the purpose of learning a problem that may otherwise be too difficult to learn from scratch. In this article, we present a framework for curriculum learning (CL) in reinforcement learning, and use it to survey and classify existing CL methods in terms of their assumptions, capabilities, and goals. Finally, we use our framework to find open problems and suggest directions for future RL curriculum learning research.

Recently, deep multiagent reinforcement learning (MARL) has become a highly active research area as many real-world problems can be inherently viewed as multiagent systems. A particularly interesting and widely applicable class of problems is the partially observable cooperative multiagent setting, in which a team of agents learns to coordinate their behaviors conditioning on their private observations and commonly shared global reward signals. One natural solution is to resort to the centralized training and decentralized execution paradigm. During centralized training, one key challenge is the multiagent credit assignment: how to allocate the global rewards for individual agent policies for better coordination towards maximizing system-level's benefits. In this paper, we propose a new method called Q-value Path Decomposition (QPD) to decompose the system's global Q-values into individual agents' Q-values. Unlike previous works which restrict the representation relation of the individual Q-values and the global one, we leverage the integrated gradient attribution technique into deep MARL to directly decompose global Q-values along trajectory paths to assign credits for agents. We evaluate QPD on the challenging StarCraft II micromanagement tasks and show that QPD achieves the state-of-the-art performance in both homogeneous and heterogeneous multiagent scenarios compared with existing cooperative MARL algorithms.

Mining graph data has become a popular research topic in computer science and has been widely studied in both academia and industry given the increasing amount of network data in the recent years. However, the huge amount of network data has posed great challenges for efficient analysis. This motivates the advent of graph representation which maps the graph into a low-dimension vector space, keeping original graph structure and supporting graph inference. The investigation on efficient representation of a graph has profound theoretical significance and important realistic meaning, we therefore introduce some basic ideas in graph representation/network embedding as well as some representative models in this chapter.