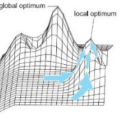

This paper introduces $K$-Tensors, a novel self-consistent clustering algorithm that groups positive semi-definite (PSD) matrices by their eigenstructures. Clustering PSD matrices is crucial across various fields, including computer and biomedical sciences. Traditional clustering methods, which often involve matrix vectorization, tend to overlook the inherent PSD characteristics, thereby discarding valuable shape and eigenstructural information. To preserve this shape and eigenstructural information, our approach incorporates a distance metric that respects the PSD nature of the data. We demonstrate that $K$-Tensors is not only self-consistent but also reliably converges to a local optimum. Through numerical studies, we further validate the algorithm's effectiveness and explore its properties in detail.

Related content

Compared to business-to-consumer (B2C) e-commerce systems, consumer-to-consumer (C2C) e-commerce platforms usually encounter the limited-stock problem: a product can be sold only once in a C2C system. This poses several unique challenges for click-through rate (CTR) prediction. Because each product (i.e., item) receives only a limited number of user interactions, the corresponding item embedding in the CTR model may not easily converge. As a result, conventional sequence-modeling approaches cannot effectively utilize user history information, since historical user behaviors contain a mixture of items with different stock volumes. In particular, the attention mechanism in a sequence model tends to assign higher scores to products with more accumulated user interactions, so limited-stock products are ignored and contribute less to the final output. To this end, we propose the Meta-Split Network (MSNet), which splits the user history sequence according to the stock volume of each product and adopts differentiated modeling approaches for the different sequences. For limited-stock products, a meta-learning approach is applied to address the non-convergence problem, which is achieved by designing meta scaling and shifting networks with ID and side information. In addition, traditional approaches can hardly update an item embedding once the product is consumed. Therefore, we propose an auxiliary loss that keeps the parameters updatable even when the product is no longer in distribution. To the best of our knowledge, this is the first solution addressing the recommendation of limited-stock products. Experimental results on the production dataset and online A/B testing demonstrate the effectiveness of our proposed method.

This study introduces the Hybrid Sequential Manipulation Planner (H-MaP), a novel approach that iteratively does motion planning using contact points and waypoints for complex sequential manipulation tasks in robotics. Combining optimization-based methods for generalizability and sampling-based methods for robustness, H-MaP enhances manipulation planning through active contact mode switches and enables interactions with auxiliary objects and tools. This framework, validated by a series of diverse physical manipulation tasks and real-robot experiments, offers a scalable and adaptable solution for complex real-world applications in robotic manipulation.

This paper presents a new approach to form-filling by reformulating the task as multimodal natural language Question Answering (QA). The reformulation is achieved by first translating the elements on the GUI form (text fields, buttons, icons, etc.) to natural language questions, where these questions capture the element's multimodal semantics. After a match is determined between the form element (Question) and the user utterance (Answer), the form element is filled through a pre-trained extractive QA system. By leveraging pre-trained QA models and not requiring form-specific training, this approach to form-filling is zero-shot. The paper also presents an approach to further refine the form-filling by using multi-task training to incorporate a potentially large number of successive tasks. Finally, the paper introduces a multimodal natural language form-filling dataset Multimodal Forms (mForms), as well as a multimodal extension of the popular ATIS dataset to support future research and experimentation. Results show the new approach not only maintains robust accuracy for sparse training conditions but achieves state-of-the-art F1 of 0.97 on ATIS with approximately 1/10th of the training data.

This paper explores the utilization of LLMs for data preprocessing (DP), a crucial step in the data mining pipeline that transforms raw data into a clean format conducive to easy processing. Whereas the use of LLMs has sparked interest in devising universal solutions to DP, recent initiatives in this domain typically rely on GPT APIs, raising inevitable data breach concerns. Unlike these approaches, we consider instruction-tuning local LLMs (7-13B models) as universal DP task solvers. We select a collection of datasets across four representative DP tasks and construct instruction-tuning data using serialization and knowledge injection techniques tailored to DP. As such, the instruction-tuned LLMs empower users to manually craft instructions for DP. Meanwhile, they can operate on a local, single, and low-priced GPU, ensuring data security and enabling further tuning. Our experiments show that our dataset constructed for DP instruction tuning, namely Jellyfish, effectively enhances LLMs' DP performance and barely compromises their abilities in NLP tasks. By tuning Mistral-7B and OpenOrca-Platypus2-13B with Jellyfish, the models become competitive with state-of-the-art DP methods and generalize strongly to unseen tasks. The models' performance rivals that of GPT series models, and the interpretation offers enhanced reasoning capabilities compared to GPT-3.5. The 7B and 13B Jellyfish models are available at Hugging Face: //huggingface.co/NECOUDBFM/Jellyfish-7B //huggingface.co/NECOUDBFM/Jellyfish-13B

This paper introduces a novel method leveraging bi-encoder-based detectors along with a comprehensive study comparing different out-of-distribution (OOD) detection methods in NLP using different feature extractors. The feature extraction stage employs popular methods such as Universal Sentence Encoder (USE), BERT, MPNET, and GLOVE to extract informative representations from textual data. The evaluation is conducted on several datasets, including CLINC150, ROSTD-Coarse, SNIPS, and YELLOW. Performance is assessed using metrics such as F1-Score, MCC, FPR@90, FPR@95, AUPR, and AUROC. The experimental results demonstrate that the proposed bi-encoder-based detectors outperform other methods, both those that require OOD labels in training and those that do not, across all datasets, showing great potential for OOD detection in NLP. The simplicity of the training process and the superior detection performance make them applicable to real-world scenarios. The presented methods and benchmarking metrics serve as a valuable resource for future research in OOD detection, enabling further advancements in this field. The code and implementation details can be found on our GitHub repository: //github.com/yellowmessenger/ood-detection.
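To make the FPR@90 and FPR@95 metrics named above concrete, the following is a minimal sketch of one common way to compute them. It is not taken from the paper or its repository: the function name `fpr_at_tpr` is hypothetical, and the conventions (a higher score means "more likely OOD", and OOD is the positive class) are assumptions, since the abstract does not state which convention is used.

```python
import math


def fpr_at_tpr(ood_scores, ind_scores, target_tpr=0.95):
    """False-positive rate at the threshold that reaches target_tpr.

    Assumptions (not from the paper): a higher score means "more likely
    OOD", and OOD is treated as the positive class.
    """
    if not ood_scores or not ind_scores:
        raise ValueError("both score lists must be non-empty")
    # Rank OOD scores from most to least confident.
    ranked = sorted(ood_scores, reverse=True)
    # Smallest number of top-ranked OOD samples that reaches the target TPR.
    k = max(math.ceil(target_tpr * len(ranked)), 1)
    threshold = ranked[k - 1]
    # In-distribution samples at or above the threshold are false positives.
    false_positives = sum(1 for s in ind_scores if s >= threshold)
    return false_positives / len(ind_scores)


if __name__ == "__main__":
    ood = [0.9, 0.8, 0.7, 0.6]
    ind = [0.1, 0.2, 0.65, 0.3]
    print(fpr_at_tpr(ood, ind, target_tpr=0.95))
```

Calling the same function with `target_tpr=0.90` gives FPR@90 under the same assumptions. A lower value is better: it means fewer in-distribution inputs are flagged once the detector is tuned to catch the target share of OOD inputs.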

Recently, deep learning-based Text-to-Speech (TTS) systems have achieved high-quality speech synthesis results. Recurrent neural networks (RNNs) have become a standard modeling technique for sequential data in TTS systems and are widely used. However, training a TTS model that includes RNN components requires powerful GPUs and takes a long time. In contrast, CNN-based sequence synthesis techniques, thanks to their high parallelism, can significantly reduce the parameters and training time of a TTS model while guaranteeing a certain level of performance, which alleviates the economic cost of training. In this paper, we propose a lightweight TTS system based on deep convolutional neural networks; it is an end-to-end TTS model trained in two stages and does not employ any recurrent units. Our model consists of two stages: Text2Spectrum and SSRN. The former encodes phonemes into a coarse mel spectrogram, and the latter synthesizes the complete spectrum from the coarse mel spectrogram. Meanwhile, to address the low-resource Mongolian problem, we improve the robustness of our model with a series of data augmentations, such as noise suppression, time warping, frequency masking, and time masking. Experiments show that, compared with mainstream TTS models, our model reduces training time and parameter count while ensuring the quality and naturalness of the synthesized speech. We validate our method on the NCMMSC2022-MTTSC Challenge dataset, where it significantly reduces training time while maintaining a certain accuracy.
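The frequency masking and time masking mentioned above can be illustrated with a small sketch. This is not the paper's implementation: the function name `mask_spectrogram`, its parameters, and the choice of one band per axis filled with a constant are all assumptions made for illustration, and the spectrogram is represented as a plain list of rows (one row per frequency bin).

```python
import random


def mask_spectrogram(spec, max_freq_width, max_time_width, rng=None, fill=0.0):
    """Return a copy of spec with one frequency band and one time band masked.

    spec is a list of rows, one row per frequency bin, one column per frame.
    Band widths are drawn uniformly from 0..max_*_width (an assumption).
    """
    rng = rng or random.Random()
    n_freq, n_time = len(spec), len(spec[0])
    # Pick the width and start of the masked frequency band.
    f_width = rng.randint(0, min(max_freq_width, n_freq))
    f_start = rng.randint(0, n_freq - f_width)
    # Pick the width and start of the masked time band.
    t_width = rng.randint(0, min(max_time_width, n_time))
    t_start = rng.randint(0, n_time - t_width)
    # Copy the rows so the input spectrogram is left untouched.
    out = [row[:] for row in spec]
    for f in range(n_freq):
        for t in range(n_time):
            in_freq_band = f_start <= f < f_start + f_width
            in_time_band = t_start <= t < t_start + t_width
            if in_freq_band or in_time_band:
                out[f][t] = fill
    return out


if __name__ == "__main__":
    mel = [[1.0] * 6 for _ in range(4)]  # toy 4-bin, 6-frame spectrogram
    for row in mask_spectrogram(mel, 1, 2, rng=random.Random(0)):
        print(row)
```

Applying a fresh random mask each time an utterance is drawn gives the model differently corrupted views of the same recording, which is the usual motivation for this kind of augmentation when training data is scarce.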

Deep learning on graphs has attracted significant interest recently. However, most of the works have focused on (semi-) supervised learning, resulting in shortcomings including heavy label reliance, poor generalization, and weak robustness. To address these issues, self-supervised learning (SSL), which extracts informative knowledge through well-designed pretext tasks without relying on manual labels, has become a promising and trending learning paradigm for graph data. Different from SSL on other domains like computer vision and natural language processing, SSL on graphs has an exclusive background, design ideas, and taxonomies. Under the umbrella of graph self-supervised learning, we present a timely and comprehensive review of the existing approaches which employ SSL techniques for graph data. We construct a unified framework that mathematically formalizes the paradigm of graph SSL. According to the objectives of pretext tasks, we divide these approaches into four categories: generation-based, auxiliary property-based, contrast-based, and hybrid approaches. We further summarize the applications of graph SSL across various research fields, along with the commonly used datasets, evaluation benchmarks, performance comparisons, and open-source code for graph SSL. Finally, we discuss the remaining challenges and potential future directions in this research field.

We consider an interesting problem, salient instance segmentation, in this paper. In addition to producing bounding boxes, our network also outputs high-quality instance-level segments. Taking into account the category-independent property of each target, we design a single-stage salient instance segmentation framework with a novel segmentation branch. Our new branch considers not only the local context inside each detection window but also its surrounding context, enabling us to distinguish the instances in the same scope even with obstruction. Our network is end-to-end trainable and runs at a fast speed (40 fps when processing an image with resolution 320x320). We evaluate our approach on a publicly available benchmark and show that it outperforms other alternative solutions. We also provide a thorough analysis of the design choices to help readers better understand the functions of each part of our network. The source code can be found at //github.com/RuochenFan/S4Net.

We present MMKG, a collection of three knowledge graphs that contain both numerical features and (links to) images for all entities as well as entity alignments between pairs of KGs. Therefore, multi-relational link prediction and entity matching communities can benefit from this resource. We believe this data set has the potential to facilitate the development of novel multi-modal learning approaches for knowledge graphs. We validate the utility of MMKG in the sameAs link prediction task with an extensive set of experiments. These experiments show that the task at hand benefits from learning of multiple feature types.

This paper describes a general framework for learning Higher-Order Network Embeddings (HONE) from graph data based on network motifs. The HONE framework is highly expressive and flexible with many interchangeable components. The experimental results demonstrate the effectiveness of learning higher-order network representations. In all cases, HONE outperforms recent embedding methods that are unable to capture higher-order structures with a mean relative gain in AUC of $19\%$ (and up to $75\%$ gain) across a wide variety of networks and embedding methods.
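As a rough illustration of what "higher-order" structure based on network motifs can mean, the sketch below builds a triangle-weighted adjacency matrix, where each edge is weighted by the number of triangles it takes part in. The abstract does not specify which motifs or matrix formulations HONE uses, so the triangle motif, the function name `triangle_motif_adjacency`, and the edge-list input format are all assumptions chosen for illustration.

```python
def triangle_motif_adjacency(edges, n):
    """Weight each undirected edge by the number of triangles containing it.

    edges is a list of (u, v) pairs over nodes 0..n-1. The triangle motif
    is an illustrative choice, not a detail confirmed by the abstract.
    """
    neighbors = [set() for _ in range(n)]
    for u, v in edges:
        if u != v:  # ignore self-loops
            neighbors[u].add(v)
            neighbors[v].add(u)
    weights = [[0] * n for _ in range(n)]
    for u in range(n):
        for v in neighbors[u]:
            # Each common neighbor of u and v closes one triangle on edge (u, v).
            weights[u][v] = len(neighbors[u] & neighbors[v])
    return weights


if __name__ == "__main__":
    # A triangle 0-1-2 with a pendant node 3 attached to node 2.
    toy_edges = [(0, 1), (1, 2), (0, 2), (2, 3)]
    for row in triangle_motif_adjacency(toy_edges, 4):
        print(row)
```

In the toy graph, the three triangle edges receive weight 1 while the pendant edge (2, 3) receives weight 0, so a matrix like this separates densely connected regions from tree-like ones in a way the plain adjacency matrix does not.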