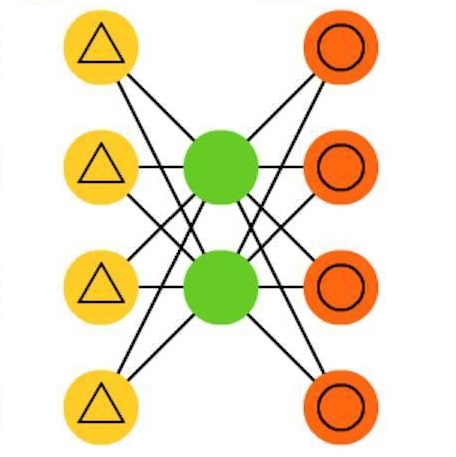

A deep autoencoder (DAE)-based structure for end-to-end communication over the two-user Z-interference channel (ZIC) with finite-alphabet inputs is designed in this paper. The proposed structure jointly optimizes the two encoder/decoder pairs and generates interference-aware constellations that dynamically adapt their shape to the interference intensity in order to minimize the bit error rate (BER). An in-phase/quadrature-phase (I/Q) power allocation layer is introduced in the DAE to guarantee an average power constraint and to enable the architecture to generate constellations with nonuniform shapes. This brings further gain compared to standard uniform constellations such as quadrature amplitude modulation. The proposed structure is then extended to work with imperfect channel state information (CSI). CSI imperfections due to both estimation and quantization errors are examined. The performance of the DAE-ZIC is compared with two baseline methods, i.e., standard and rotated constellations. The proposed structure significantly enhances the performance of the ZIC for both perfect and imperfect CSI. Simulation results show that the improvement is achieved in all interference regimes (weak, moderate, and strong) and consistently increases with the signal-to-noise ratio (SNR). For example, more than an order of magnitude BER reduction is obtained with respect to the most competitive conventional method at weak interference when the SNR exceeds 15 dB and two bits per symbol are transmitted. The improvements reach about two orders of magnitude when quantization error exists, indicating that the DAE-ZIC is more robust to interference than the conventional methods.
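
The abstract does not specify how the I/Q power allocation layer is built, so the following is only a minimal sketch of one way such a constraint could be enforced. It assumes a hypothetical split parameter `alpha` that assigns a fraction of the total average power to the in-phase component and the rest to the quadrature component; the function names and the QPSK starting points are illustrative, not taken from the paper.

```python
import math

def iq_power_normalize(points, alpha=0.5, total_power=1.0):
    """Rescale I and Q separately so that mean(I^2) = alpha * P and
    mean(Q^2) = (1 - alpha) * P. `alpha` is a hypothetical parameter.
    Assumes both components carry some energy; an all-zero component
    stays zero and the power target is then not met."""
    n = len(points)
    mean_i2 = sum(i * i for i, _ in points) / n
    mean_q2 = sum(q * q for _, q in points) / n
    scale_i = math.sqrt(alpha * total_power / mean_i2) if mean_i2 > 0 else 0.0
    scale_q = math.sqrt((1 - alpha) * total_power / mean_q2) if mean_q2 > 0 else 0.0
    return [(i * scale_i, q * scale_q) for i, q in points]

def average_power(points):
    """Average symbol energy of a constellation given as (I, Q) pairs."""
    return sum(i * i + q * q for i, q in points) / len(points)

# Start from a uniform QPSK constellation and skew the power toward I.
qpsk = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]
shaped = iq_power_normalize(qpsk, alpha=0.8)
```

With `alpha` different from 0.5 the constellation becomes rectangular rather than square, which illustrates how a nonuniform shape can still satisfy the same average power budget.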

Related content

Adapters, plug-in neural network modules with a small number of tunable parameters, have emerged as a parameter-efficient transfer learning technique for adapting pre-trained models to downstream tasks, especially in the natural language processing (NLP) and computer vision (CV) fields. Meanwhile, learning recommendation models directly from raw item modality features -- e.g., texts of NLP and images of CV -- can enable effective and transferable recommender systems (called TransRec). In view of this, a natural question arises: can adapter-based learning techniques achieve parameter-efficient TransRec with good performance? To this end, we perform empirical studies to address several key sub-questions. First, we ask whether adapter-based TransRec performs comparably to TransRec based on standard full-parameter fine-tuning, and whether this holds for recommendation with different item modalities, e.g., textual RS and visual RS. Second, if so, we benchmark existing adapters, which have been shown to be effective in NLP and CV tasks, on item recommendation tasks. Third, we carefully study several key factors for adapter-based TransRec, namely where and how to insert the adapters. Finally, we look at the effects of adapter-based TransRec when either scaling up its source training data or scaling down its target training data. Our paper provides key insights and practical guidance on unified & transferable recommendation -- a less studied recommendation scenario. We release our code and other materials at: //github.com/westlake-repl/Adapter4Rec/.

Entity alignment seeks identical entities in different knowledge graphs, which is a long-standing task in the database research. Recent work leverages deep learning to embed entities in vector space and align them via nearest neighbor search. Although embedding-based entity alignment has gained marked success in recent years, it lacks explanations for alignment decisions. In this paper, we present the first framework that can generate explanations for understanding and repairing embedding-based entity alignment results. Given an entity alignment pair produced by an embedding model, we first compare its neighbor entities and relations to build a matching subgraph as a local explanation. We then construct an alignment dependency graph to understand the pair from an abstract perspective. Finally, we repair the pair by resolving three types of alignment conflicts based on dependency graphs. Experiments on five datasets demonstrate the effectiveness and generalization of our framework in explaining and repairing embedding-based entity alignment results.
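
As a rough illustration of the embedding-based alignment step mentioned above (embedding entities and matching them by nearest neighbor search), here is a minimal sketch. The entity names, the two-dimensional vectors, and the choice of cosine similarity are all assumptions made for the example; the abstract does not state which embedding model or similarity measure is used.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def align(source, target):
    """Map each source entity to its most similar target entity."""
    return {
        name: max(target, key=lambda t: cosine(vec, target[t]))
        for name, vec in source.items()
    }

# Toy embeddings for two knowledge graphs (hypothetical values).
kg1 = {"Paris": [0.9, 0.1], "Berlin": [0.1, 0.9]}
kg2 = {"Paris_FR": [0.8, 0.2], "Berlin_DE": [0.2, 0.8]}
alignment = align(kg1, kg2)
```

The output is only a mapping of names; it says nothing about why a pair was chosen, which is the gap in explanation that the abstract describes.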

Requirements Satisfaction Assessment (RSA) evaluates whether the set of design elements linked to a single requirement provides sufficient coverage of that requirement -- typically meaning that all concepts in the requirement are addressed by at least one of the design elements. RSA is an important software engineering activity for systems with any form of hierarchical decomposition -- especially safety- or mission-critical ones. In previous studies, researchers used basic Information Retrieval (IR) models to decompose requirements and design elements into chunks, and then evaluated the extent to which chunks of design elements covered all chunks in the requirement. However, the results had low accuracy because many critical concepts that extend across the entire sentence were not well represented when the sentence was parsed into independent chunks. In this paper we leverage recent advances in natural language processing to deliver significantly more accurate results. We propose two major architectures, Satisfaction BERT (Sat-BERT) and Dual-Satisfaction BERT (DSat-BERT), along with their multitask learning variants, to improve satisfaction assessments. We perform RSA on five different datasets and compare results from our variants against the chunk-based legacy approach. All BERT-based models significantly outperformed the legacy baseline, and Sat-BERT delivered the best results, returning an average improvement of 124.75% in Mean Average Precision.
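
For readers unfamiliar with the reported metric, the sketch below shows one common way to compute Mean Average Precision and a relative improvement between two scores. It assumes that every relevant item appears somewhere in each ranked list and that the quoted percentage is a relative gain over the baseline; neither assumption is confirmed by the abstract, and the relevance lists are made up for illustration.

```python
def average_precision(ranked_relevance):
    """Average of precision@k over the ranks k that hold a relevant item.
    `ranked_relevance` is a list of booleans in ranked order."""
    hits = 0
    total = 0.0
    for rank, relevant in enumerate(ranked_relevance, start=1):
        if relevant:
            hits += 1
            total += hits / rank
    return total / hits if hits else 0.0

def mean_average_precision(queries):
    """Mean of the per-query average precision values."""
    return sum(average_precision(q) for q in queries) / len(queries)

def relative_improvement(new, old):
    """Percentage gain of `new` over `old` (assumed interpretation)."""
    return 100.0 * (new - old) / old

# Two hypothetical ranked result lists (True marks a relevant item).
example_map = mean_average_precision([[True, False, True], [False, True]])
```

Under the relative-gain reading, an improvement above 100% means the new score is more than double the baseline score.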

We numerically demonstrate a silicon add-drop microring-based reservoir computing scheme that combines parallel delayed inputs and wavelength division multiplexing. The scheme solves memory-demanding tasks like time-series prediction with good performance without requiring external optical feedback.

Much of the previous work towards digital agents for graphical user interfaces (GUIs) has relied on text-based representations (derived from HTML or other structured data sources), which are not always readily available. These input representations have often been coupled with custom, task-specific action spaces. This paper focuses on creating agents that interact with the digital world using the same conceptual interface that humans commonly use -- via pixel-based screenshots and a generic action space corresponding to keyboard and mouse actions. Building upon recent progress in pixel-based pretraining, we show, for the first time, that it is possible for such agents to outperform human crowdworkers on the MiniWob++ benchmark of GUI-based instruction following tasks.

We introduce a system that allows users of Ableton Live to create MIDI-clips by naming them with musical descriptions. Users can compose by typing the desired musical content directly in Ableton's clip view, which is then inserted by our integrated system. This allows users to stay in the flow of their creative process while quickly generating musical ideas. The system works by prompting ChatGPT to reply using one of several text-based musical formats, such as ABC notation, chord symbols, or drum tablature. This is an important step in integrating generative AI tools into pre-existing musical workflows, and could be valuable for content makers who prefer to express their creative vision through descriptive language. Code is available at //github.com/supersational/JAMMIN-GPT.

We present parallel proof-of-work with DAG-style voting, a novel proof-of-work cryptocurrency protocol that, compared to Bitcoin, provides better consistency guarantees, higher transaction throughput, lower transaction confirmation latency, and higher resilience against incentive attacks. The superior consistency guarantees follow from implementing parallel proof-of-work, a recent consensus scheme that enforces a configurable number of proof-of-work votes per block. Our work is inspired by another recent protocol, Tailstorm, which structures the individual votes as a tree and mitigates incentive attacks by discounting the mining rewards proportionally to the depth of the tree. We propose to structure the votes as a directed acyclic graph (DAG) instead of a tree. This allows for a more targeted punishment of offending miners and, as we show through a reinforcement-learning-based attack search, makes the protocol even more resilient to incentive attacks. An interesting by-product of our analysis is that parallel proof-of-work without reward discounting is less resilient to incentive attacks than Bitcoin in some realistic network scenarios.

Convolutional neural networks (CNNs) have shown dramatic improvements in single image super-resolution (SISR) by using large-scale external samples. Despite their remarkable performance based on the external dataset, they cannot exploit internal information within a specific image. Another problem is that they are applicable only to the specific data condition under which they were supervised. For instance, the low-resolution (LR) image should be a "bicubic" downsampled noise-free image from a high-resolution (HR) one. To address both issues, zero-shot super-resolution (ZSSR) has been proposed for flexible internal learning. However, it requires thousands of gradient updates, i.e., a long inference time. In this paper, we present Meta-Transfer Learning for Zero-Shot Super-Resolution (MZSR), which leverages ZSSR. Precisely, it is based on finding a generic initial parameter that is suitable for internal learning. Thus, we can exploit both external and internal information, where a single gradient update can yield quite considerable results (see Figure 1). With our method, the network can quickly adapt to a given image condition. In this respect, our method can be applied to a large spectrum of image conditions within a fast adaptation process.

The recent proliferation of knowledge graphs (KGs) coupled with incomplete or partial information, in the form of missing relations (links) between entities, has fueled a lot of research on knowledge base completion (also known as relation prediction). Several recent works suggest that convolutional neural network (CNN) based models generate richer and more expressive feature embeddings and hence also perform well on relation prediction. However, we observe that these KG embeddings treat triples independently and thus fail to cover the complex and hidden information that is implicit in the local neighborhood surrounding a triple. To this end, our paper proposes a novel attention-based feature embedding that captures both entity and relation features in any given entity's neighborhood. Additionally, we encapsulate relation clusters and multi-hop relations in our model. Our empirical study offers insights into the efficacy of our attention-based model, and we show marked performance gains in comparison to state-of-the-art methods on all datasets.

Image-to-image translation aims to learn the mapping between two visual domains. There are two main challenges for many applications: 1) the lack of aligned training pairs and 2) multiple possible outputs from a single input image. In this work, we present an approach based on disentangled representation for producing diverse outputs without paired training images. To achieve diversity, we propose to embed images into two spaces: a domain-invariant content space capturing shared information across domains and a domain-specific attribute space. Our model takes the encoded content features extracted from a given input and the attribute vectors sampled from the attribute space to produce diverse outputs at test time. To handle unpaired training data, we introduce a novel cross-cycle consistency loss based on disentangled representations. Qualitative results show that our model can generate diverse and realistic images on a wide range of tasks without paired training data. For quantitative comparisons, we measure realism with a user study and diversity with a perceptual distance metric. We apply the proposed model to domain adaptation and show competitive performance when compared to the state of the art on the MNIST-M and the LineMod datasets.