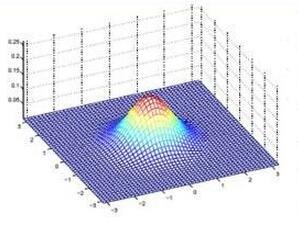

There is a need for new models for characterizing dependence in multivariate data. The multivariate Gaussian distribution is routinely used, but cannot characterize nonlinear relationships in the data. Most nonlinear extensions tend to be highly complex, for example requiring estimation of a nonlinear regression model in latent variables. In this article, we propose a relatively simple class of Ellipsoid-Gaussian multivariate distributions, which are derived from a Gaussian linear factor model whose latent variables follow a von Mises-Fisher distribution on a unit hypersphere. We show that the Ellipsoid-Gaussian distribution can flexibly model curved relationships among variables with lower-dimensional structures. Taking a Bayesian approach, we propose a hybrid of gradient-based geodesic Monte Carlo and adaptive Metropolis for posterior sampling. We derive basic properties and illustrate the utility of the Ellipsoid-Gaussian distribution on a variety of simulated and real data applications.
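The generative construction described in this abstract can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes a latent dimension of 2, where the von Mises-Fisher distribution on the unit circle reduces to the von Mises distribution on the angle, and the names `center`, `Lambda`, `sigma`, `mu_angle` and `kappa` are hypothetical values chosen only for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: n samples, p observed variables, k = 2 latent dimensions.
n, p, k = 500, 3, 2

# Hypothetical model parameters (not taken from the paper).
center = np.zeros(p)               # location of the ellipsoid
Lambda = rng.normal(size=(p, k))   # factor loading matrix
sigma = 0.05                       # isotropic Gaussian noise scale
mu_angle, kappa = 0.0, 2.0         # mean direction (as an angle) and concentration

# Latent factors on the unit circle: for k = 2 the von Mises-Fisher
# distribution is the von Mises distribution on the angle.
theta = rng.vonmises(mu_angle, kappa, size=n)
eta = np.column_stack([np.cos(theta), np.sin(theta)])

# Gaussian linear factor model: observation = center + loadings * latent + noise.
noise = sigma * rng.normal(size=(n, p))
x = center + eta @ Lambda.T + noise
```

Because `eta` lies on a circle, the noiseless part of `x` lies on an ellipse embedded in three dimensions, which is the kind of curved, lower-dimensional structure the abstract refers to.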

Related content

Truncated densities are probability density functions defined on truncated domains. They share the same parametric form as their non-truncated counterparts up to a normalizing constant. Since computing their normalizing constants is usually infeasible, Maximum Likelihood Estimation cannot easily be applied to estimate truncated density models. Score Matching (SM) is a powerful tool for fitting parameters using only unnormalized models. However, it cannot be directly applied here, because the boundary conditions used to derive a tractable SM objective are not satisfied by truncated densities. In this paper, we study parameter estimation for truncated probability densities using SM. The estimator minimizes a weighted Fisher divergence. The weight function is simply the shortest distance from a data point to the boundary of the domain. We show that this choice of weight function arises naturally from minimizing the Stein discrepancy as well as from upper-bounding the finite-sample estimation error. The usefulness of our method is demonstrated by numerical experiments and a study on the Chicago crime data set. We also show that the proposed density estimation can correct the outlier-trimming bias caused by aggressive outlier detection methods.
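A one-dimensional sketch may help make the weighted objective concrete. It is an illustration under stated assumptions, not the paper's implementation: the model is a unit-variance Gaussian with unknown mean `mu` truncated to $x \ge 0$, so the distance to the boundary is $g(x) = x$, and the weighted score matching objective is written in the standard integration-by-parts form $\mathbb{E}[\tfrac{1}{2} g\,\psi^2 + g\,\psi' + g'\,\psi]$ with $\psi(x) = \partial_x \log p(x) = -(x - \mu)$. The function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
true_mu = 0.5  # hypothetical ground-truth mean of the untruncated Gaussian


def sample_truncated_normal(mu, n, rng):
    """Rejection-sample n draws from N(mu, 1) restricted to x >= 0."""
    out = np.empty(0)
    while out.size < n:
        draw = rng.normal(mu, 1.0, size=2 * n)
        out = np.concatenate([out, draw[draw >= 0.0]])
    return out[:n]


x = sample_truncated_normal(true_mu, 200_000, rng)

# Weight function: shortest distance from each point to the boundary {0}.
g = x
g_prime = np.ones_like(x)


def objective(mu):
    """Empirical weighted score matching objective for the assumed model."""
    psi = -(x - mu)      # d/dx log p(x; mu); the normalizer drops out
    psi_prime = -1.0     # d^2/dx^2 log p(x; mu)
    return np.mean(0.5 * g * psi**2 + g * psi_prime + g_prime * psi)


# The objective is quadratic in mu, so its minimizer is available in closed form.
mu_hat = (np.mean(g * x) - np.mean(g_prime)) / np.mean(g)

# For comparison: the sample mean ignores truncation and is biased upward.
naive_mean = x.mean()
```

The estimate `mu_hat` never evaluates the normalizing constant of the truncated density, whereas the naive sample mean overshoots `true_mu` because all mass below zero has been removed.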

We introduce a family of pairwise stochastic gradient estimators for gradients of expectations, which are related to the log-derivative trick, but involve pairwise interactions between samples. The simplest example of our new estimator, dubbed the fundamental trick estimator, is shown to arise from either a) introducing and approximating an integral representation based on the fundamental theorem of calculus, or b) applying the reparameterisation trick to an implicit parameterisation under infinitesimal perturbation of the parameters. From the former perspective we generalise to a reproducing kernel Hilbert space representation, giving rise to a locality parameter in the pairwise interactions mentioned above, yielding our representer trick estimator. The resulting estimators are unbiased and shown to offer an independent component of useful information in comparison with the log-derivative estimator. We provide a novel theoretical analysis which further characterises the variance reduction afforded by the new techniques. Promising analytical and numerical examples confirm the theory and intuitions behind the new estimators.
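For reference, the baseline that this abstract compares against, the log-derivative (score function) estimator, can be sketched as below. This is not the pairwise estimator proposed in the paper, whose form the abstract does not specify. The example assumes $x \sim \mathcal{N}(\theta, 1)$ and a hypothetical test function $f(x) = x^2$, for which $\mathbb{E}[f(x)] = \theta^2 + 1$ and the exact gradient is $2\theta$.

```python
import numpy as np

rng = np.random.default_rng(2)

theta = 1.5    # hypothetical parameter value
n = 400_000    # number of Monte Carlo samples


def f(x):
    """Hypothetical integrand whose expectation is differentiated."""
    return x**2


# Draw samples from p(x; theta) = N(theta, 1).
x = rng.normal(theta, 1.0, size=n)

# Score of the sampling distribution: d/dtheta log N(x; theta, 1) = x - theta.
score = x - theta

# Log-derivative trick: grad E[f(x)] = E[f(x) * score].
grad_estimate = np.mean(f(x) * score)

# Closed-form gradient for this toy problem, used only as a check.
exact_grad = 2.0 * theta
```

Each sample contributes independently here; the estimators described in the abstract differ in that they combine information across pairs of samples.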

Safety is critical in autonomous robotic systems. A safe control law ensures forward invariance of a safe set (a subset of the state space). How to derive a safe control law from a control-affine analytical dynamic model has been studied extensively. However, in complex environments and tasks, it is challenging and time-consuming to obtain a principled analytical model of the system. In these situations, data-driven learning is widely used and the learned models are encoded in neural networks. How to formally derive a safe control law with Neural Network Dynamic Models (NNDM) remains unclear due to the lack of computationally tractable methods to deal with these black-box functions. In fact, even finding the control that minimizes an objective for NNDM without any safety constraint is still challenging. In this work, we propose MIND-SIS (Mixed Integer for Neural network Dynamic model with Safety Index Synthesis), the first method to derive safe control laws for NNDM. The method includes two parts: 1) SIS: an algorithm for the offline synthesis of the safety index (also called a barrier function), which uses evolutionary methods, and 2) MIND: an algorithm for online computation of the optimal and safe control signal, which solves a constrained optimization using a computationally efficient encoding of neural networks. It has been theoretically proved that MIND-SIS guarantees forward invariance and finite convergence, and it has been numerically validated that MIND-SIS achieves safe and optimal control of NNDM. In our experiments, the optimality gap is less than $10^{-8}$, and the safety constraint violation is $0$.

Covariance estimation for matrix-valued data has received increasing interest in applications. Unlike previous works that rely heavily on the matrix normal distribution assumption and require a fixed matrix size, we propose a class of distribution-free regularized covariance estimation methods for high-dimensional matrix data under a separability condition and a bandable covariance structure. Under these conditions, the original covariance matrix is decomposed into a Kronecker product of two small bandable covariance matrices representing the variability over the row and column directions. We formulate a unified framework for estimating bandable covariance, and introduce an efficient algorithm based on rank-one unconstrained Kronecker product approximation. The convergence rates of the proposed estimators are established, and the derived minimax lower bound shows that our proposed estimator is rate-optimal under certain divergence regimes of matrix size. We further introduce a class of robust covariance estimators and provide theoretical guarantees to deal with heavy-tailed data. We demonstrate the superior finite-sample performance of our methods using simulations and real applications from a gridded temperature anomalies dataset and an S&P 500 stock data analysis.
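The rank-one Kronecker product approximation mentioned in this abstract can be illustrated with the classical rearrangement approach (in the style of Van Loan and Pitsianis), assumed here as a stand-in because the abstract does not give the algorithm's details. The sketch omits the banding and regularization steps, and the helper names `random_covariance` and `nearest_kronecker` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
p, q = 3, 4  # hypothetical row and column dimensions of the matrix-valued data


def random_covariance(d, rng):
    """Build a well-conditioned d x d symmetric positive definite matrix."""
    m = rng.normal(size=(d, d))
    return m @ m.T + d * np.eye(d)


# A separable covariance: Kronecker product of row and column covariances.
A = random_covariance(p, rng)
B = random_covariance(q, rng)
Sigma = np.kron(A, B)


def nearest_kronecker(Sigma, p, q):
    """Best Frobenius-norm approximation of Sigma by a Kronecker product A_hat (x) B_hat."""
    # Rearrange so that Sigma = A (x) B becomes the rank-one matrix vec(A) vec(B)^T.
    blocks = Sigma.reshape(p, q, p, q)               # indices (i, k, j, l)
    R = blocks.transpose(0, 2, 1, 3).reshape(p * p, q * q)
    # The leading singular triple gives the best rank-one approximation of R.
    u, s, vt = np.linalg.svd(R, full_matrices=False)
    scale = np.sqrt(s[0])
    A_hat = scale * u[:, 0].reshape(p, p)
    B_hat = scale * vt[0].reshape(q, q)
    return A_hat, B_hat


A_hat, B_hat = nearest_kronecker(Sigma, p, q)
```

The two factors are identified only up to a scalar: `A_hat` may equal `c * A` and `B_hat` may equal `B / c` for some nonzero `c`, while their Kronecker product is unaffected.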

We study the problem of testing whether a function $f: \mathbb{R}^n \to \mathbb{R}$ is a polynomial of degree at most $d$ in the distribution-free testing model. Here, the distance between functions is measured with respect to an unknown distribution $\mathcal{D}$ over $\mathbb{R}^n$ from which we can draw samples. In contrast to previous work, we do not assume that $\mathcal{D}$ has finite support. We design a tester that, given query access to $f$ and sample access to $\mathcal{D}$, makes $(d/\varepsilon)^{O(1)}$ many queries to $f$, accepts with probability $1$ if $f$ is a polynomial of degree $d$, and rejects with probability at least $2/3$ if every degree-$d$ polynomial $P$ disagrees with $f$ on a set of mass at least $\varepsilon$ with respect to $\mathcal{D}$. Our result also holds under mild assumptions when we receive only a polynomial number of bits of precision for each query to $f$, or when $f$ can only be queried on rational points representable using a logarithmic number of bits. Along the way, we prove a new stability theorem for multivariate polynomials that may be of independent interest.

This paper presents new deterministic and distributed low-diameter decomposition algorithms for weighted graphs. In particular, we show that if one can efficiently compute approximate distances in a parallel or a distributed setting, one can also efficiently compute low-diameter decompositions. This consequently implies solutions to many fundamental distance-based problems using a polylogarithmic number of approximate distance computations. Our low-diameter decomposition generalizes and extends the line of work starting from [Rozhoň, Ghaffari STOC 2020] to weighted graphs in a very model-independent manner. Moreover, our clustering results have additional useful properties, including strong-diameter guarantees, separation properties, restricting cluster centers to specified terminals, and more. Applications include: (1) The first near-linear work and polylogarithmic depth randomized and deterministic parallel algorithm for low-stretch spanning trees (LSST) with polylogarithmic stretch. Previously, the best parallel LSST algorithm required $m \cdot n^{o(1)}$ work and $n^{o(1)}$ depth and was inherently randomized. No deterministic LSST algorithm with truly sub-quadratic work and sub-linear depth was known. (2) The first near-linear work and polylogarithmic depth deterministic algorithm for computing an $\ell_1$-embedding into polylogarithmic dimensional space with polylogarithmic distortion. The best prior deterministic algorithms for $\ell_1$-embeddings either require large polynomial work or are inherently sequential. Even when we apply our techniques to the classical problem of computing a ball-carving with strong-diameter $O(\log^2 n)$ in an unweighted graph, our new clustering algorithm still leads to an improvement in round complexity from $O(\log^{10} n)$ rounds [Chang, Ghaffari PODC 21] to $O(\log^{4} n)$.

This paper studies how well generative adversarial networks (GANs) learn probability distributions from finite samples. Our main results establish the convergence rates of GANs under a collection of integral probability metrics defined through Hölder classes, including the Wasserstein distance as a special case. We also show that GANs are able to adaptively learn data distributions that have low-dimensional structures or Hölder densities, when the network architectures are chosen properly. In particular, for distributions concentrated around a low-dimensional set, we show that the learning rates of GANs do not depend on the high ambient dimension, but on the lower intrinsic dimension. Our analysis is based on a new oracle inequality decomposing the estimation error into the generator and discriminator approximation error and the statistical error, which may be of independent interest.

As AI and machine learning become central to human life, their potential harms become more vivid. Given these drawbacks, a critical question one needs to address before using these data-driven technologies to make a decision is whether to trust their outcomes. Aligned with recent efforts on data-centric AI, this paper proposes a novel approach to address the trust question through the lens of data, by associating data sets with distrust quantification that specifies their scope of use for predicting future query points. The distrust values raise warning signals when a prediction based on a dataset is questionable and are valuable alongside other techniques for trustworthy AI. We propose novel algorithms for computing the distrust values in the neighborhood of a query point efficiently and effectively. Learning the necessary components of the measures from the data itself, our sub-linear algorithms scale to very large and multi-dimensional settings. Besides demonstrating the efficiency of our algorithms, our extensive experiments reflect a consistent correlation between distrust values and model performance. This underscores the message that when the distrust value of a query point is high, the prediction outcome should be discarded or at least not considered for critical decisions.

It is shown that, with two sets of indicators that separately load on two distinct factors, independent of one another conditional on the past, if at least one of the factors causally affects the other, then, in many settings, the process will converge to a factor model in which a single factor suffices to capture the covariance structure among the indicators. Factor analysis with one wave of data then cannot distinguish between factor models with a single factor and those with two factors that are causally related. Therefore, unless causal relations between factors can be ruled out a priori, alleged empirical evidence from one-wave factor analysis for a single factor still leaves open the possibilities of a single factor or of two factors that causally affect one another. The implications for interpreting the factor structure of psychological scales, such as self-report scales for anxiety and depression, or for happiness and purpose, are discussed. The results are further illustrated through simulations to gain insight into their practical implications in more realistic settings prior to the convergence of the processes. Some further generalizations to an arbitrary number of underlying factors are noted.

There are many important high-dimensional function classes that have fast agnostic learning algorithms when strong assumptions on the distribution of examples can be made, such as Gaussianity or uniformity over the domain. But how can one be sufficiently confident that the data indeed satisfies the distributional assumption, so that one can trust the output quality of the agnostic learning algorithm? We propose a model by which to systematically study the design of tester-learner pairs $(\mathcal{A},\mathcal{T})$, such that if the distribution on examples in the data passes the tester $\mathcal{T}$ then one can safely trust the output of the agnostic learner $\mathcal{A}$ on the data. To demonstrate the power of the model, we apply it to the classical problem of agnostically learning halfspaces under the standard Gaussian distribution and present a tester-learner pair with a combined run-time of $n^{\tilde{O}(1/\epsilon^4)}$. This qualitatively matches that of the best known ordinary agnostic learning algorithms for this task. In contrast, finite-sample Gaussian distribution testers do not exist for the $L_1$ and EMD distance measures. A key step in the analysis is a novel characterization of concentration and anti-concentration properties of a distribution whose low-degree moments approximately match those of a Gaussian. We also use tools from polynomial approximation theory. On the negative side, we show strong lower bounds on the combined run-times of tester-learner pairs for the problems of agnostically learning convex sets under the Gaussian distribution and for monotone Boolean functions under the uniform distribution over $\{0,1\}^n$. Through these lower bounds we exhibit natural problems where there is a dramatic gap between standard agnostic learning run-time and the run-time of the best tester-learner pair.