Principal component analysis and factor analysis are fundamental multivariate analysis methods. In this paper a unified framework to connect them is introduced. Under a general latent variable model, we present matrix optimization problems from the viewpoint of loss function minimization, and show that the two methods can be viewed as solutions to the optimization problems with specific loss functions. Specifically, principal component analysis can be derived from a broad class of loss functions including the L2 norm, while factor analysis corresponds to a modified L0 norm problem. Related problems are discussed, including algorithms, penalized maximum likelihood estimation under the latent variable model, and a principal component factor model. These results can lead to new tools of data analysis and research topics.
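The link between principal component analysis and the L2 (Frobenius) loss mentioned above can be illustrated numerically. The following is a minimal sketch, not the paper's framework: it assumes synthetic data and a hypothetical helper `pca_low_rank`, and checks the classical Eckart-Young fact that the rank-k PCA reconstruction attains a squared loss equal to the sum of the discarded squared singular values.

```python
import numpy as np

def pca_low_rank(X, k):
    """Rank-k PCA reconstruction of X (hypothetical helper for illustration)."""
    mean = X.mean(axis=0)
    Xc = X - mean                         # center each column
    U, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    loadings = Vt[:k].T                   # p x k matrix of principal directions
    scores = Xc @ loadings                # n x k matrix of component scores
    return scores @ loadings.T + mean, s

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5)) @ rng.normal(size=(5, 5))  # synthetic data (assumption)
k = 2
X_hat, s = pca_low_rank(X, k)

# Squared Frobenius (L2) loss of the PCA reconstruction.
pca_loss = np.sum((X - X_hat) ** 2)
# Eckart-Young: the minimum equals the sum of the discarded squared singular values.
tail = np.sum(s[k:] ** 2)

# Any other k-dimensional projection should do no better; try a random one.
Q, _ = np.linalg.qr(rng.normal(size=(5, k)))
Xc = X - X.mean(axis=0)
random_loss = np.sum((Xc - Xc @ Q @ Q.T) ** 2)

print(np.isclose(pca_loss, tail), random_loss >= pca_loss)
```

The comparison against a random orthonormal projection is only a spot check; the optimality over all rank-k approximations is the content of the Eckart-Young theorem.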

Related content

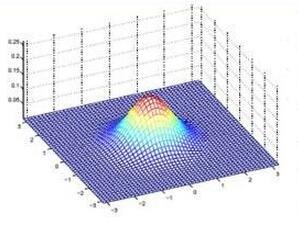

This paper presents a fitted space-time finite element method for solving a parabolic advection-diffusion problem with a nonstationary interface. The jumping diffusion coefficient gives rise to a discontinuity in the spatial gradient of the solution across the interface. We use the Banach-Necas-Babuska theorem to show the well-posedness of the continuous variational problem. A fully discrete finite-element based scheme is analyzed using the Galerkin method and unstructured fitted meshes. An optimal error estimate is established in a discrete energy norm under appropriate globally low but locally high regularity conditions. Some numerical results corroborate our theoretical results.

Neural radiance fields (NeRFs) are a deep learning technique that can generate novel views of 3D scenes using sparse 2D images from different viewing directions and camera poses. As an extension of conventional NeRFs to underwater environments, where light can be absorbed and scattered by water, SeaThru-NeRF was proposed to separate the clean appearance and geometric structure of an underwater scene from the effects of the scattering medium. Since the quality of the appearance and structure of underwater scenes is crucial for downstream tasks such as underwater infrastructure inspection, the reliability of the 3D reconstruction model should be considered and evaluated. Nonetheless, because uncertainty in the 3D reconstruction of underwater scenes under natural ambient illumination cannot yet be quantified, the practical deployment of NeRFs in unmanned autonomous underwater navigation is limited. To address this issue, we introduce a spatial perturbation field $D_\omega$ based on Bayes' rays in SeaThru-NeRF and perform a Laplace approximation to obtain a Gaussian distribution $\mathcal{N}(0,\Sigma)$ of the parameters $\omega$, where the diagonal elements of $\Sigma$ correspond to the uncertainty at each spatial location. We also employ a simple thresholding method to remove artifacts from the rendered results of underwater scenes. Numerical experiments are provided to demonstrate the effectiveness of this approach.

In this paper we discuss reduced order models for the approximation of parametric eigenvalue problems. In particular, we are interested in the presence of intersections or clusters of eigenvalues. The singularities originating from these phenomena make it hard to generalize in a straightforward way the well-known strategies normally used for standard PDEs. We investigate how the known results extend (or not) to higher order frequencies.

The novelty of the current work is precisely to propose a statistical procedure to combine estimates of the modal parameters provided by any set of Operational Modal Analysis (OMA) algorithms so as to avoid preference for a particular one and also to derive an approximate joint probability distribution of the modal parameters, from which engineering statistics of interest such as mean value and variance are readily provided. The effectiveness of the proposed strategy is assessed considering measured data from an actual centrifugal compressor. The statistics obtained for both forward and backward modal parameters are finally compared against modal parameters identified during standard stability verification testing (SVT) of centrifugal compressors prior to shipment, using classical Experimental Modal Analysis (EMA) algorithms. The current work demonstrates that combination of OMA algorithms can provide quite accurate estimates for both the modal parameters and the associated uncertainties with low computational costs.

Mediation analyses allow researchers to quantify the effect of an exposure variable on an outcome variable through a mediator variable. If a binary mediator variable is misclassified, the resulting analysis can be severely biased. Misclassification is especially difficult to deal with when it is differential and when there are no gold standard labels available. Previous work has addressed this problem using a sensitivity analysis framework or by assuming that misclassification rates are known. We leverage a variable related to the misclassification mechanism to recover unbiased parameter estimates without using gold standard labels. The proposed methods require the reasonable assumption that the sum of the sensitivity and specificity is greater than 1. Three correction methods are presented: (1) an ordinary least squares correction for Normal outcome models, (2) a multi-step predictive value weighting method, and (3) a seamless expectation-maximization algorithm. We apply our misclassification correction strategies to investigate the mediating role of gestational hypertension on the association between maternal age and pre-term birth.

3D instance segmentation is crucial for applications demanding comprehensive 3D scene understanding. In this paper, we introduce a novel method that simultaneously learns coefficients and prototypes. Employing an overcomplete sampling strategy, our method produces an overcomplete set of instance predictions, from which the optimal ones are selected through a Non-Maximum Suppression (NMS) algorithm during inference. The obtained prototypes are visualizable and interpretable. Our method demonstrates superior performance on S3DIS-blocks, consistently outperforming existing methods in mRec and mPrec. Moreover, it operates 32.9% faster than the state-of-the-art. Notably, with only 0.8% of the total inference time, our method exhibits an over 20-fold reduction in the variance of inference time compared to existing methods. These attributes render our method well-suited for practical applications requiring both rapid inference and high reliability.
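The selection step described above, in which an overcomplete set of instance predictions is pruned by Non-Maximum Suppression, can be sketched generically. The following is a plain greedy NMS over boolean point masks, not the paper's implementation; the function names, the IoU threshold of 0.5, and the toy masks are assumptions made for illustration.

```python
import numpy as np

def mask_iou(a, b):
    """Intersection over union of two boolean masks over the same points."""
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return inter / union if union > 0 else 0.0

def greedy_nms(masks, scores, iou_threshold=0.5):
    """Keep the highest-scoring masks, dropping any that overlap a kept mask too much."""
    order = np.argsort(scores)[::-1]      # indices sorted by descending score
    keep = []
    for i in order:
        if all(mask_iou(masks[i], masks[j]) <= iou_threshold for j in keep):
            keep.append(int(i))
    return keep

# Toy example on 10 points: masks 0 and 1 overlap heavily, mask 2 is disjoint.
points = np.arange(10)
masks = np.array([points < 5, points < 4, points >= 6])
scores = np.array([0.9, 0.8, 0.7])

kept = greedy_nms(masks, scores)
print(kept)  # -> [0, 2]
```

Mask 1 is suppressed because its IoU with the higher-scoring mask 0 is 4/5, which exceeds the threshold.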

Finding the optimal design of experiments in the Bayesian setting typically requires estimation and optimization of the expected information gain functional. This functional consists of one outer and one inner integral, separated by the logarithm function applied to the inner integral. When the mathematical model of the experiment contains both uncertainty about the parameters of interest and nuisance uncertainty (i.e., uncertainty about parameters that affect the model but are not themselves of interest to the experimenter), two inner integrals must be estimated. Thus, the already considerable computational effort required to determine good approximations of the expected information gain is increased further. The Laplace approximation has been applied successfully in the context of experimental design in various ways, and we propose two novel estimators featuring the Laplace approximation to alleviate the computational burden of both inner integrals considerably. The first estimator applies Laplace's method followed by a Laplace approximation, introducing a bias. The second estimator uses two Laplace approximations as importance sampling measures for Monte Carlo approximations of the inner integrals. Both estimators use Monte Carlo approximation for the remaining outer integral estimation. We provide four numerical examples demonstrating the applicability and effectiveness of our proposed estimators.
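The nested structure of the expected information gain (an outer integral, a logarithm, and an inner integral) can be made concrete with the standard double-loop Monte Carlo estimator. The sketch below is this baseline, not either of the Laplace-based estimators proposed in the paper. It assumes a toy model without nuisance parameters, $y = \theta + \varepsilon$ with $\theta \sim N(0,1)$ and $\varepsilon \sim N(0,\sigma^2)$, for which the exact value is $\tfrac{1}{2}\log(1 + 1/\sigma^2)$; all function names are hypothetical.

```python
import numpy as np

def log_normal_pdf(y, mean, sigma):
    """Log density of N(mean, sigma^2) evaluated at y."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - 0.5 * ((y - mean) / sigma) ** 2

def nested_mc_eig(sigma, n_outer=2000, n_inner=2000, seed=0):
    """Double-loop Monte Carlo estimate of the expected information gain."""
    rng = np.random.default_rng(seed)
    # Outer samples: draw theta from the prior, then y from the likelihood.
    theta = rng.normal(size=n_outer)
    y = theta + sigma * rng.normal(size=n_outer)
    log_lik = log_normal_pdf(y, theta, sigma)
    # Inner samples: fresh prior draws, shared across outer samples for simplicity.
    theta_inner = rng.normal(size=n_inner)
    ll = log_normal_pdf(y[:, None], theta_inner[None, :], sigma)
    # Log of the inner average (the evidence), computed stably via log-sum-exp.
    m = ll.max(axis=1, keepdims=True)
    log_evidence = m[:, 0] + np.log(np.mean(np.exp(ll - m), axis=1))
    # Outer average of log-likelihood minus log-evidence.
    return float(np.mean(log_lik - log_evidence))

sigma = 1.0
estimate = nested_mc_eig(sigma)
exact = 0.5 * np.log(1.0 + 1.0 / sigma**2)
print(estimate, exact)
```

The cost scales with the product of the outer and inner sample sizes, which is the computational burden the abstract refers to; with nuisance parameters a second inner average of the same kind would be needed.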

This paper delves into the equivalence problem of Smith forms for multivariate polynomial matrices. Generally speaking, multivariate ($n \geq 2$) polynomial matrices and their Smith forms may not be equivalent. However, under a certain specific condition, we derive a necessary and sufficient condition for their equivalence. Let $F\in K[x_1,\ldots,x_n]^{l\times m}$ be of rank $r$, and let $d_r(F)\in K[x_1]$ be the greatest common divisor of all the $r\times r$ minors of $F$, where $K$ is a field, $x_1,\ldots,x_n$ are variables and $1 \leq r \leq \min\{l,m\}$. Our key finding is that $F$ is equivalent to its Smith form if and only if all the $i\times i$ reduced minors of $F$ generate $K[x_1,\ldots,x_n]$ for $i=1,\ldots,r$.

Shape-restricted inferences have exhibited empirical success in various applications with survival data. However, certain works fall short in providing a rigorous theoretical justification and an easy-to-use variance estimator with a theoretical guarantee. Motivated by Deng et al. (2023), this paper delves into an additive and shape-restricted partially linear Cox model for right-censored data, where each additive component satisfies a specific shape restriction, encompassing monotonicity (increasing or decreasing) and convexity or concavity. We systematically investigate the consistencies and convergence rates of the shape-restricted maximum partial likelihood estimator (SMPLE) of all the underlying parameters. We further establish the asymptotic normality and semiparametric efficiency of the SMPLE for the linear covariate shift. To estimate the asymptotic variance, we propose a data-splitting variance estimation method that is versatile and broadly applicable. Our simulation results and an analysis of the Rotterdam Breast Cancer dataset demonstrate that the SMPLE has comparable performance with the maximum likelihood estimator under the Cox model when the Cox model is correct, and outperforms the latter and the method of Huang (1999) when the Cox model is violated or the hazard is nonsmooth. Meanwhile, the proposed variance estimation method usually leads to reliable interval estimates based on the SMPLE and its competitors.

In this paper we develop a novel neural network model for predicting the implied volatility surface. Prior financial domain knowledge is taken into account. A new activation function that incorporates the volatility smile is proposed, which is used for the hidden nodes that process the underlying asset price. In addition, financial conditions, such as the absence of arbitrage, the boundaries and the asymptotic slope, are embedded into the loss function. This is one of the very first studies that discuss a methodological framework that incorporates prior financial domain knowledge into neural network architecture design and model training. The proposed model outperforms the benchmarked models on option data for the S&P 500 index over 20 years. More importantly, the domain knowledge is satisfied empirically, showing that the model is consistent with the existing financial theories and conditions related to the implied volatility surface.