A numerical ADER-DG method with a local DG predictor for solving DAE systems has been developed, based on the formulation of ADER-DG methods with a local DG predictor for solving ODE and PDE systems. The basis functions were chosen as Lagrange interpolation polynomials with nodal points at the roots of the Radau polynomials, which differs from the classical formulations of the ADER-DG method, where the roots of Legendre polynomials are customarily used. It was shown that this choice of basis leads to A-stability and L1-stability when the DAE solver is used as an ODE solver. The ADER-DG method yields a highly accurate numerical solution even on very coarse grids, with a step larger than the main characteristic scale of variation of the solution. The local discrete time solution can be used as the numerical solution of the DAE system between grid nodes, thereby providing subgrid resolution even on very coarse grids. Classical test examples were solved with the developed ADER-DG method. As the index of the DAE system increases, the empirical convergence orders p decrease. An unexpected result was obtained for a stiff DAE system: the empirical convergence orders of the numerical solution obtained with the developed method turned out to be significantly higher than those expected for this method on stiff problems. It turns out that Lagrange interpolation polynomials with nodal points at the roots of the Radau polynomials are much better suited for solving stiff problems. Estimates showed that the computational costs of the ADER-DG method are approximately comparable to those of the implicit Runge-Kutta methods used to solve DAE systems. Methods for reducing the computational costs of the ADER-DG method were proposed.
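The nodal basis above can be made concrete with a short sketch. It assumes the right-Radau variant (nodes are the roots of $P_s(x) - P_{s-1}(x)$ on $[-1,1]$, which include $x = 1$, as in Radau IIA) mapped to a unit time step $[0,1]$; the abstract does not say which Radau variant is used, and the function names are hypothetical. The sketch builds the nodes and the Lagrange polynomials, which satisfy $\ell_j(\tau_i) = \delta_{ij}$.

```python
import numpy as np
from numpy.polynomial import legendre


def right_radau_nodes(s):
    """Roots of P_s - P_{s-1} on [-1, 1], mapped to [0, 1] (the endpoint 1 is a node)."""
    # Legendre-series coefficients of -P_{s-1}(x) + P_s(x)
    coeffs = np.zeros(s + 1)
    coeffs[s - 1] = -1.0
    coeffs[s] = 1.0
    roots = legendre.legroots(coeffs)
    # The roots are real; discard any round-off imaginary parts before mapping.
    x = np.sort(np.real(roots))
    return (x + 1.0) / 2.0


def lagrange_basis(nodes, j, t):
    """Value at t of the Lagrange polynomial equal to 1 at nodes[j] and 0 at the other nodes."""
    value = 1.0
    for m, xm in enumerate(nodes):
        if m != j:
            value *= (t - xm) / (nodes[j] - xm)
    return value


nodes = right_radau_nodes(3)
print(nodes)  # approx. [0.155051, 0.644949, 1.0], i.e. (4 -/+ sqrt(6))/10 and 1
```

Because the last node sits at the end of the step, the value of the local solution at $\tau = 1$ is read off directly from the last nodal coefficient.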

Related content

We propose a local discontinuous Galerkin (LDG) method for the fractional Korteweg-de Vries equation involving the fractional Laplacian with exponent $\alpha\in (1,2)$ in one and two space dimensions. By decomposing the fractional Laplacian into a first-order derivative and a fractional integral, we prove $L^2$-stability of the semi-discrete LDG scheme with suitable interface and boundary fluxes. We first derive an error estimate for a linear convection term and, building on this estimate, obtain an error estimate for a general nonlinear flux with order of convergence $\mathcal{O}(h^{k+1/2})$. Moreover, the stability and error analysis are extended to the multidimensional case. Numerical experiments demonstrate the efficiency of the scheme and exhibit an optimal order of convergence.
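Orders such as $\mathcal{O}(h^{k+1/2})$ are usually checked numerically through the observed (empirical) order $p = \log(e_1/e_2)/\log(h_1/h_2)$ between successive meshes. A minimal sketch follows; the error values in the usage lines are synthetic, generated from an assumed model $e = C h^{2.5}$ (i.e. $k = 2$, with $k$ presumably the polynomial degree), and are not results from the paper.

```python
import math


def observed_orders(mesh_sizes, errors):
    """Empirical convergence orders between consecutive (h, error) pairs."""
    if len(mesh_sizes) != len(errors) or len(errors) < 2:
        raise ValueError("need at least two matching (h, error) pairs")
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(mesh_sizes[i] / mesh_sizes[i + 1])
        for i in range(len(errors) - 1)
    ]


# Synthetic errors following e = 3 * h**2.5 (illustration only).
hs = [0.1, 0.05, 0.025, 0.0125]
errs = [3.0 * h ** 2.5 for h in hs]
print(observed_orders(hs, errs))  # each entry is 2.5 up to rounding
```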

Splitting methods are widely used for solving initial value problems (IVPs) because they break complicated evolutions into more manageable subproblems that can be solved efficiently and accurately. Traditionally, these methods are derived using analytic and algebraic techniques from numerical analysis, including truncated Taylor series and their Lie-algebraic analogue, the Baker--Campbell--Hausdorff formula. These tools enable the development of high-order numerical methods that are highly accurate for small timesteps. Moreover, these methods often (nearly) conserve important physical invariants, such as mass, unitarity, and energy. However, in many practical applications computational resources are limited. It is therefore crucial to identify methods that achieve the best accuracy within a fixed computational budget, which might require taking relatively large timesteps. In this regime, high-order methods derived with traditional techniques often exhibit large errors, since they are designed only to be asymptotically optimal. Machine learning techniques offer a potential solution, since they can be trained to solve a given IVP efficiently with fewer computational resources. However, they are often purely data-driven, come with limited convergence guarantees in the small-timestep regime, and do not necessarily conserve physical invariants. In this work, we propose a framework for finding machine-learned splitting methods that are computationally efficient for large timesteps and have provable convergence and conservation guarantees in the small-timestep limit. We demonstrate numerically that the learned methods, which by construction converge quadratically in the timestep size, can be significantly more efficient than established methods for the Schr\"{o}dinger equation if the computational budget is limited.
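For orientation, a representative classical splitting method, which we assume is among the established baselines, is Strang splitting (split-step Fourier) for a 1D linear Schrödinger equation $i u_t = -\tfrac12 u_{xx} + V(x)\,u$ on a periodic grid. The harmonic potential, grid, and initial data below are assumptions for illustration, and this is not the learned scheme from the paper. Each substep is an exact unitary flow, so the discrete mass is conserved, and the method is second order in the timestep.

```python
import numpy as np


def make_grid(n=256, length=20.0):
    """Periodic grid on [-length/2, length/2) and its angular wavenumbers."""
    dx = length / n
    x = -length / 2 + dx * np.arange(n)
    k = 2 * np.pi * np.fft.fftfreq(n, d=dx)
    return x, k, dx


def strang_step(u, dt, k, V):
    """One Strang step: half potential step, full kinetic step in Fourier space, half potential step."""
    half_potential = np.exp(-0.5j * dt * V)
    u = half_potential * u
    u = np.fft.ifft(np.exp(-0.5j * dt * k**2) * np.fft.fft(u))
    return half_potential * u


def evolve(u0, T, steps, k, V):
    dt = T / steps
    u = u0.astype(complex)
    for _ in range(steps):
        u = strang_step(u, dt, k, V)
    return u


def l2_norm(u, dx):
    return np.sqrt(np.sum(np.abs(u) ** 2) * dx)


x, k, dx = make_grid()
V = 0.5 * x**2                       # assumed harmonic potential
u0 = np.exp(-0.5 * (x - 1.0) ** 2)  # assumed displaced Gaussian initial data

u_ref = evolve(u0, 1.0, 2000, k, V)  # fine-step reference solution
e10 = l2_norm(evolve(u0, 1.0, 10, k, V) - u_ref, dx)
e20 = l2_norm(evolve(u0, 1.0, 20, k, V) - u_ref, dx)
print(np.log2(e10 / e20))  # observed order, close to 2
```

The step is also symmetric: a step with $-\Delta t$ exactly undoes a step with $\Delta t$, which is one reason Strang splitting is a common starting point for higher-order compositions.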

We consider the problem of sampling a multimodal distribution with a Markov chain given a small number of samples from the stationary measure. Although mixing can be arbitrarily slow, we show that if the Markov chain has a $k$th order spectral gap, initialization from a set of $\tilde O(k/\varepsilon^2)$ samples from the stationary distribution will, with high probability over the samples, efficiently generate a sample whose conditional law is $\varepsilon$-close in TV distance to the stationary measure. In particular, this applies to mixtures of $k$ distributions satisfying a Poincar\'e inequality, with faster convergence when they satisfy a log-Sobolev inequality. Our bounds are robust to perturbations of the Markov chain, and in particular apply to Langevin diffusion over $\mathbb R^d$ with score estimation error, as well as to Glauber dynamics combined with approximation error from pseudolikelihood estimation. This justifies the success of data-based initialization for score matching methods despite slow mixing for the data distribution, and improves and generalizes the results of Koehler and Vuong (2023), achieving linear rather than exponential dependence on $k$ and applying to arbitrary semigroups. As a consequence of our results, we show for the first time that a natural class of low-complexity Ising measures can be efficiently learned from samples.
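Data-based initialization can be illustrated on a toy bimodal target: run unadjusted Langevin dynamics started from the available samples instead of from a fixed point. A minimal sketch, assuming a 1D mixture $\tfrac12\mathcal N(-4,1)+\tfrac12\mathcal N(4,1)$ with its exact score $s(x) = -x + 4\tanh(4x)$; the step size, horizon, and function names are illustrative choices, not the paper's algorithm or constants. A chain started at a single point would rarely cross the low-density region between the modes on this horizon, whereas starting from data keeps both modes populated in roughly the right proportion.

```python
import numpy as np


def mixture_score(x):
    """Exact score d/dx log p(x) of 0.5*N(-4, 1) + 0.5*N(4, 1)."""
    return -x + 4.0 * np.tanh(4.0 * x)


def langevin_from_data(data, score, step=0.01, n_steps=500, rng=None):
    """Unadjusted Langevin algorithm, run in parallel from each data point."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(data, dtype=float)
    for _ in range(n_steps):
        x = x + step * score(x) + np.sqrt(2.0 * step) * rng.standard_normal(x.shape)
    return x


rng = np.random.default_rng(0)
n = 2000
# "Observed" samples from the stationary measure (here drawn directly from the mixture).
data = np.where(rng.random(n) < 0.5, -4.0, 4.0) + rng.standard_normal(n)
samples = langevin_from_data(data, mixture_score, rng=rng)
print(np.mean(samples > 0))  # fraction in the right-hand mode, close to 0.5
```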

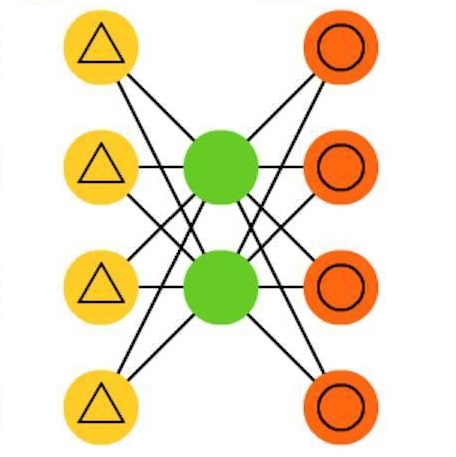

A number of models have been developed for information spread through networks, often for solving the Influence Maximization (IM) problem. IM is the task of choosing a fixed number of nodes to "seed" with information in order to maximize the spread of this information through the network, with applications in areas such as marketing and public health. Most methods for this problem rely heavily on the assumption that the strengths of connections between network members (edge weights) are known, which is often unrealistic. In this paper, we develop a likelihood-based approach to estimate edge weights from fully and partially observed information diffusion paths. We also introduce a broad class of information diffusion models, the general linear threshold (GLT) model, which generalizes the well-known linear threshold (LT) model by allowing arbitrary distributions of node activation thresholds. We then show that our weight estimator is consistent under the GLT model and some mild assumptions. For the special case of the standard LT model, we also present a much faster expectation-maximization approach for weight estimation. Finally, we prove that for GLT models, the IM problem can be solved by a natural greedy algorithm with standard optimality guarantees if all node threshold distributions have concave cumulative distribution functions. Extensive experiments on synthetic and real-world networks demonstrate that the flexibility in the choice of threshold distribution combined with the estimation of edge weights significantly improves the quality of IM solutions, spread prediction, and the estimates of the node activation probabilities.
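The GLT dynamics and the greedy seeding rule can be sketched as follows. Assumptions: edge weights are given (in the paper they would be estimated), each node's incoming weights sum to at most 1, node $v$ activates once the total weight from its active in-neighbours reaches its random threshold $\theta_v$, and expected spread is estimated by Monte Carlo. The standard LT model corresponds to $\theta_v \sim \mathrm{Uniform}(0,1]$; passing a different `threshold_sampler` (e.g. one drawing from a Beta distribution) gives another GLT instance. All names are hypothetical.

```python
import random


def simulate_spread(nodes, in_edges, seeds, threshold_sampler, rng):
    """One GLT cascade; returns the number of active nodes at the end."""
    thresholds = {v: threshold_sampler(rng) for v in nodes}
    active = set(seeds)
    changed = True
    while changed:  # activation is monotone, so the final set does not depend on update order
        changed = False
        for v in nodes:
            if v in active:
                continue
            weight = sum(w for u, w in in_edges.get(v, []) if u in active)
            if weight >= thresholds[v]:
                active.add(v)
                changed = True
    return len(active)


def expected_spread(nodes, in_edges, seeds, threshold_sampler, rng, runs=200):
    """Monte Carlo estimate of the expected number of activated nodes."""
    total = sum(simulate_spread(nodes, in_edges, seeds, threshold_sampler, rng) for _ in range(runs))
    return total / runs


def greedy_seeds(nodes, in_edges, budget, threshold_sampler, runs=200, seed=0):
    """Repeatedly add the node with the largest estimated expected spread."""
    rng = random.Random(seed)
    chosen = []
    for _ in range(budget):
        candidates = [v for v in nodes if v not in chosen]
        best = max(
            candidates,
            key=lambda v: expected_spread(nodes, in_edges, chosen + [v], threshold_sampler, rng, runs),
        )
        chosen.append(best)
    return chosen


def uniform_threshold(rng):
    """Standard LT threshold in (0, 1], so a node with no active in-neighbours stays inactive."""
    return 1.0 - rng.random()


# Usage: a star whose centre 0 influences every leaf with weight 1.
nodes = list(range(6))
in_edges = {leaf: [(0, 1.0)] for leaf in range(1, 6)}
print(greedy_seeds(nodes, in_edges, 1, uniform_threshold))  # [0]
```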

A permutation code is a nonlinear code whose codewords are permutations of a set of symbols. We consider permutation codes over the deletion channel under the symbol-invariant error model, meaning that the values of the symbols that are not deleted are unaffected by the deletion. In 1992, Levenshtein gave a construction of perfect single-deletion-correcting permutation codes that attain the maximum code size. Furthermore, he showed in the same paper that the set of all permutations of a given length can be partitioned into permutation codes so constructed. This construction relies on the binary Varshamov-Tenengolts codes. In this paper we give an independent and more direct proof of Levenshtein's result that does not depend on the Varshamov-Tenengolts codes. Using the new approach, we devise efficient encoding and decoding algorithms that correct one deletion.
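Under the symbol-invariant model the value of the deleted symbol is simply the one missing from $\{1,\dots,n\}$, so only its position has to be recovered. A minimal sketch of a Levenshtein-style code, assuming the construction maps a permutation to its binary descent signature $b$ and keeps the permutations whose signature lies in the Varshamov-Tenengolts class $\sum_i i\,b_i \equiv a \pmod n$. The decoder is a brute-force $O(n^2)$ search over insertion positions, not the efficient algorithm of the paper, and all names are hypothetical.

```python
from itertools import permutations


def vt_syndrome(perm):
    """Sum of the 1-based descent positions i (perm[i-1] > perm[i]), taken mod n."""
    n = len(perm)
    return sum(i for i in range(1, n) if perm[i - 1] > perm[i]) % n


def code(n, a):
    """All permutations of 1..n whose syndrome equals a."""
    return [p for p in permutations(range(1, n + 1)) if vt_syndrome(p) == a]


def decode(received, n, a):
    """Recover the codeword from a permutation with one symbol deleted."""
    missing = (set(range(1, n + 1)) - set(received)).pop()
    for pos in range(n):
        candidate = tuple(received[:pos]) + (missing,) + tuple(received[pos:])
        if vt_syndrome(candidate) == a:
            return candidate
    raise ValueError("no codeword is consistent with the received word")


print(code(3, 0))             # [(1, 2, 3), (3, 2, 1)]
print(decode((2, 1), 3, 0))   # (3, 2, 1)
```

Deleting one entry of a permutation deletes exactly one bit of its descent signature, which is why a binary single-deletion code on the signatures carries over to the permutations.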

This paper introduces filtered finite difference methods for numerically solving a dispersive evolution equation whose solutions are highly oscillatory in both space and time. We consider a semiclassically scaled nonlinear Schr\"odinger equation with highly oscillatory initial data in the form of a modulated plane wave. Unlike standard finite difference methods, the proposed methods do not need prohibitively fine grids to resolve the high-frequency oscillations in space and time. The approach taken here modifies traditional finite difference methods by incorporating appropriate filters. Specifically, we propose the filtered leapfrog and filtered Crank--Nicolson methods, both of which achieve second-order accuracy with time steps and mesh sizes that are not restricted in magnitude by the small semiclassical parameter. Furthermore, the filtered Crank--Nicolson method conserves both the discrete mass and a discrete energy. Numerical experiments illustrate the theoretical results.
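For reference, the unfiltered Crank--Nicolson baseline can be sketched for the linear part of a semiclassically scaled Schrödinger equation, $i\varepsilon u_t = -\tfrac{\varepsilon^2}{2} u_{xx}$, with periodic central differences and modulated plane-wave data $u_0 = a(x)\,e^{i\kappa x/\varepsilon}$. The filters, the nonlinearity, and all parameter values are omitted or assumed here. The point is only that the Crank--Nicolson step is a Cayley transform of a Hermitian matrix and therefore conserves the discrete mass up to rounding; this unfiltered scheme still needs a grid fine enough to resolve the $\varepsilon$-scale oscillation, which is what the filtered methods avoid.

```python
import numpy as np


def laplacian_periodic(n, dx):
    """Second-order central difference matrix for u_xx with periodic boundaries."""
    D2 = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D2[0, -1] = D2[-1, 0] = 1.0
    return D2 / dx**2


def crank_nicolson(u0, eps, dt, steps, dx):
    """Advance i*eps*u_t = -(eps**2 / 2) * u_xx with Crank-Nicolson steps."""
    n = u0.size
    H = -0.5 * eps**2 * laplacian_periodic(n, dx)  # real symmetric, hence Hermitian
    A = np.eye(n) + 0.5j * dt / eps * H
    B = np.eye(n) - 0.5j * dt / eps * H
    step = np.linalg.solve(A, B)  # one-step propagator A^{-1} B, formed once
    u = u0.astype(complex)
    for _ in range(steps):
        u = step @ u
    return u


eps, n = 0.05, 400
x = np.linspace(0.0, 1.0, n, endpoint=False)
dx = x[1] - x[0]
u0 = np.exp(-50 * (x - 0.5) ** 2) * np.exp(1j * 2 * np.pi * x / eps)  # modulated plane wave
u = crank_nicolson(u0, eps, dt=1e-3, steps=100, dx=dx)
print(np.sum(np.abs(u0) ** 2) * dx, np.sum(np.abs(u) ** 2) * dx)  # equal up to rounding
```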

We study a deterministic particle scheme to solve a scalar balance equation with nonlocal interaction and nonlinear mobility used to model congested dynamics. The main novelty with respect to "Radici-Stra [SIAM J. Math. Anal. 55.3 (2023)]" is the presence of a source term; this causes the solutions to no longer be probability measures, thus requiring a suitable adaptation of the numerical scheme and of the estimates leading to compactness.

Recently, general fractional calculus was introduced by Kochubei (2011) and Luchko (2021) as a further generalisation of fractional calculus, in which the derivative and integral operators admit arbitrary kernels. Since the kernel is no longer restricted, such a formalism is expected to have many applications in physics and engineering. We first extend the work of Al-Refai and Luchko (2023) on a finite interval to arbitrary orders. We then develop an efficient Petrov-Galerkin scheme by introducing Jacobi convolution polynomials as basis functions. A notable property of these basis functions is that the general fractional derivative of a Jacobi convolution polynomial is a shifted Jacobi polynomial. Thus, with suitable test functions the stiffness matrix is diagonal, which makes the implementation efficient. Furthermore, our method applies to arbitrary kernels, including that of the classical fractional operator, since it is a special case of the general fractional operator.
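Why the stiffness matrix becomes diagonal can be checked in the simplest Jacobi case. Assume, hypothetically, that the general fractional derivative maps the $n$-th trial function to the shifted Legendre polynomial $\tilde P_n(x) = P_n(2x-1)$ on $[0,1]$ (Jacobi with $\alpha=\beta=0$, weight 1) and that the test functions are $\tilde P_m$; the stiffness entries are then $\int_0^1 \tilde P_n \tilde P_m\,dx = \delta_{mn}/(2n+1)$ by orthogonality. The sketch evaluates these integrals with Gauss-Legendre quadrature; it does not construct the Jacobi convolution polynomials themselves, and for general Jacobi parameters the inner product would carry the corresponding Jacobi weight.

```python
import numpy as np
from numpy.polynomial import legendre


def shifted_legendre_gram(N, quad_points=64):
    """Matrix of int_0^1 P_n(2x-1) P_m(2x-1) dx for n, m = 0..N-1."""
    t, w = legendre.leggauss(quad_points)  # Gauss-Legendre nodes and weights on [-1, 1]
    # With x = (t + 1)/2 we have dx = dt/2 and P_n(2x - 1) = P_n(t).
    values = np.array([legendre.legval(t, np.eye(N)[n]) for n in range(N)])
    return (values * (w / 2.0)) @ values.T


S = shifted_legendre_gram(6)
print(np.round(S, 12))  # diagonal with entries 1/(2n+1)
```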

Moist thermodynamics is a fundamental driver of atmospheric dynamics across all scales, making accurate modeling of these processes essential for reliable weather forecasts and climate change projections. However, atmospheric models often make a variety of inconsistent approximations in representing moist thermodynamics. These inconsistencies can introduce spurious sources and sinks of energy, potentially compromising the integrity of the models. Here, we present a thermodynamically consistent and structure-preserving formulation of the moist compressible Euler equations. When discretised with a summation-by-parts method, our spatial discretisation conserves mass, water, entropy, and energy. These properties are achieved by discretising a skew-symmetric form of the moist compressible Euler equations, using entropy as a prognostic variable, and exploiting the summation-by-parts property of the discrete derivative operators. Additionally, we derive a discontinuous Galerkin spectral element method with energy- and tracer-variance-stable numerical fluxes, and verify our theoretical results experimentally through numerical simulations.
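The summation-by-parts property that the conservation argument relies on can be checked on the classical second-order SBP first-derivative operator $D = H^{-1}Q$, with $H = \Delta x\,\mathrm{diag}(1/2, 1, \dots, 1, 1/2)$ and $Q + Q^{\mathsf T} = \mathrm{diag}(-1, 0, \dots, 0, 1)$. This is a standard textbook operator used here only as an illustration; the paper's discontinuous Galerkin spectral element operators are different, and the function name is hypothetical. The identity checked below is the discrete analogue of $\int_0^1 (u v_x + u_x v)\,dx = uv\big|_0^1$.

```python
import numpy as np


def sbp_first_derivative(n, dx):
    """Return (H, Q, D) for the second-order SBP first-derivative operator on n grid points."""
    H = dx * np.eye(n)
    H[0, 0] = H[-1, -1] = 0.5 * dx
    Q = 0.5 * (np.eye(n, k=1) - np.eye(n, k=-1))  # central differences in the interior
    Q[0, 0], Q[-1, -1] = -0.5, 0.5                # one-sided closures at the boundaries
    D = np.linalg.solve(H, Q)
    return H, Q, D


n = 11
x = np.linspace(0.0, 1.0, n)
H, Q, D = sbp_first_derivative(n, x[1] - x[0])
u, v = np.sin(x), np.exp(x)
lhs = u @ H @ (D @ v) + (D @ u) @ H @ v
rhs = u[-1] * v[-1] - u[0] * v[0]
print(lhs, rhs)  # equal up to rounding
```

Since $HD = Q$, the left-hand side equals $u^{\mathsf T}(Q + Q^{\mathsf T})v$, so only boundary values survive, exactly as in integration by parts.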

The goal of explainable Artificial Intelligence (XAI) is to generate human-interpretable explanations, but there are no computationally precise theories of how humans interpret AI-generated explanations. The lack of theory means that XAI must be validated empirically, on a case-by-case basis, which prevents systematic theory-building in XAI. We propose a psychological theory of how humans draw conclusions from saliency maps, the most common form of XAI explanation, which for the first time allows precise prediction of explainee inference conditioned on explanation. Our theory posits that, in the absence of an explanation, humans expect the AI to make decisions similar to their own, and that they interpret an explanation by comparing it to the explanations they themselves would give. Comparison is formalized via Shepard's universal law of generalization in a similarity space, a classic theory from cognitive science. A pre-registered user study on AI image classifications with saliency map explanations demonstrates that our theory quantitatively matches participants' predictions of the AI.
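Shepard's law states that generalization decays exponentially with distance in a psychological similarity space, $g(d) = e^{-d/s}$. A minimal sketch of how such a comparison could become a prediction, assuming saliency maps are compared by Euclidean distance and that the explainee weighs each candidate label by the similarity between the AI's map and the map they would give themselves for that label. The distance, the normalization, and all names are illustrative assumptions rather than the paper's fitted model.

```python
import numpy as np


def shepard_similarity(map_a, map_b, scale=1.0):
    """Exponential generalization gradient exp(-d / scale), with d the Euclidean distance."""
    d = np.linalg.norm(np.ravel(map_a) - np.ravel(map_b))
    return float(np.exp(-d / scale))


def predicted_label_probs(ai_map, own_maps, scale=1.0):
    """own_maps: label -> saliency map the explainee would produce for that label (hypothetical)."""
    sims = {label: shepard_similarity(ai_map, m, scale) for label, m in own_maps.items()}
    total = sum(sims.values())
    return {label: s / total for label, s in sims.items()}


own = {"cat": np.array([[1.0, 0.0], [0.0, 0.0]]), "dog": np.array([[0.0, 0.0], [0.0, 1.0]])}
ai = np.array([[0.9, 0.1], [0.0, 0.0]])
print(predicted_label_probs(ai, own))  # "cat" receives the larger probability
```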