This paper introduces a structured, adaptive-length deep representation called Neural Eigenmap. Unlike prior spectral methods such as Laplacian Eigenmap that operate in a nonparametric manner, Neural Eigenmap leverages NeuralEF to parametrically model eigenfunctions using a neural network. We show that, when the eigenfunction is derived from positive relations in a data augmentation setup, applying NeuralEF results in an objective function that resembles those of popular self-supervised learning methods, with an additional symmetry-breaking property that leads to \emph{structured} representations where features are ordered by importance. We demonstrate using such representations as adaptive-length codes in image retrieval systems. By truncation according to feature importance, our method requires up to $16\times$ shorter representation length than leading self-supervised learning ones to achieve similar retrieval performance. We further apply our method to graph data and report strong results on a node representation learning benchmark with more than one million nodes.
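To make the adaptive-length idea concrete, here is a minimal sketch of retrieval with truncated codes. It assumes only what the abstract states, namely that features are ordered by importance, so keeping the first `k` entries gives a shorter code. The helper names (`truncate`, `cosine`, `retrieve`), the choice of cosine similarity, and the toy vectors are illustrative assumptions, not the paper's implementation.

```python
import math

def truncate(code, k):
    """Keep the first k features (assumed to be ordered by importance)."""
    return code[:k]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, database, k):
    """Rank database indices by cosine similarity on the first k features."""
    q = truncate(query, k)
    scores = [cosine(q, truncate(item, k)) for item in database]
    return sorted(range(len(database)), key=lambda i: scores[i], reverse=True)

# Toy codes (hypothetical): most of the signal sits in the leading features.
database = [
    [0.9, 0.1, 0.02, -0.01],
    [-0.8, 0.3, 0.01, 0.03],
    [0.85, -0.2, -0.03, 0.02],
]
query = [1.0, 0.0, 0.0, 0.0]

ranking_full = retrieve(query, database, k=4)   # full-length codes
ranking_short = retrieve(query, database, k=2)  # codes truncated to half length
```

In this toy setup the ranking is the same at both lengths, which is the behaviour the truncation argument relies on; real codes would need an empirical check of how far `k` can be reduced.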

Related content

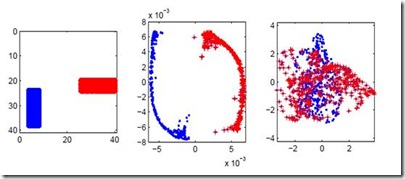

Kernel methods are applied to many problems in pattern recognition, including subspace clustering (SC). With them, problems that are nonlinear in the input data space become linear in a mapped high-dimensional feature space, so computationally tractable nonlinear algorithms are enabled through implicit mapping by virtue of the kernel trick. However, kernelization of linear algorithms is possible only if the square of the Frobenius norm of the error term is used in the related optimization problem. That, in turn, implies a normal distribution of the error, which is not appropriate for non-Gaussian errors such as gross sparse corruptions that are modeled by the $\ell_1$-norm. Herein, we propose what is, to the best of our knowledge, the first robust kernel sparse SC (RKSSC) algorithm for data with gross sparse corruptions. The concept can, in principle, be applied to other SC algorithms to achieve robustness to this type of corruption. We validated the proposed approach on two well-known datasets, with the linear robust SSC algorithm as a baseline model. According to the Wilcoxon test, the clustering performance obtained by the RKSSC algorithm is statistically significantly better than the corresponding performance obtained by the robust SSC algorithm. MATLAB code of the proposed RKSSC algorithm is posted on //github.com/ikopriva/RKSSC.

This paper discusses the development of synthetic cohomology in Homotopy Type Theory (HoTT), as well as its computer formalisation. The objectives of this paper are (1) to generalise previous work on integral cohomology in HoTT by the current authors and Brunerie (2022) to cohomology with arbitrary coefficients and (2) to provide the mathematical details of, as well as extend, results underpinning the computer formalisation of cohomology rings by the current authors and Lamiaux (2023). With respect to objective (1), we provide new direct definitions of the cohomology group operations and of the cup product, which, just as in (Brunerie et al., 2022), enable significant simplifications of many earlier proofs in synthetic cohomology theory. In particular, the new definition of the cup product allows us to give the first complete formalisation of the axioms needed to turn the cohomology groups into a graded commutative ring. We also establish that this cohomology theory satisfies the HoTT formulation of the Eilenberg-Steenrod axioms for cohomology and study the classical Mayer-Vietoris and Gysin sequences. With respect to objective (2), we characterise the cohomology groups and rings of various spaces, including the spheres, torus, Klein bottle, real/complex projective planes, and infinite real projective space. All results have been formalised in Cubical Agda and we obtain multiple new numbers, similar to the famous `Brunerie number', which can be used as benchmarks for computational implementations of HoTT. Some of these numbers are infeasible to compute in Cubical Agda and hence provide new computational challenges and open problems which are much easier to define than the original Brunerie number.

This paper introduces a novel ridgelet transform-based method for Poisson image denoising. Our work focuses on harnessing the Poisson noise's unique non-additive and signal-dependent properties, distinguishing it from Gaussian noise. The core of our approach is a new thresholding scheme informed by theoretical insights into the ridgelet coefficients of Poisson-distributed images and adaptive thresholding guided by Stein's method. We verify our theoretical model through numerical experiments and demonstrate the potential of ridgelet thresholding across assorted scenarios. Our findings represent a significant step in enhancing the understanding of Poisson noise and offer an effective denoising method for images corrupted with it.

In this paper, we propose a novel graph neural network-based recommendation model called KGLN, which leverages Knowledge Graph (KG) information to enhance the accuracy and effectiveness of personalized recommendations. We first use a single-layer neural network to merge individual node features in the graph, and then adjust the aggregation weights of neighboring entities by incorporating influence factors. The model evolves from a single layer to multiple layers through iteration, enabling entities to access extensive multi-order associated entity information. The final step involves integrating features of entities and users to produce a recommendation score. The model performance was evaluated by comparing its effects on various aggregation methods and influence factors. In tests on the MovieLens-1M and Book-Crossing datasets, KGLN improves the area under the ROC curve (AUC) by 0.3% to 5.9% and 1.1% to 8.2%, respectively, over existing benchmark methods such as LibFM, DeepFM, Wide&Deep, and RippleNet.

This study introduces the Lower Ricci Curvature (LRC), a novel, scalable, and scale-free discrete curvature designed to enhance community detection in networks. Addressing the computational challenges posed by existing curvature-based methods, LRC offers a streamlined approach with linear computational complexity, making it well-suited for large-scale network analysis. We further develop an LRC-based preprocessing method that effectively augments popular community detection algorithms. Through comprehensive simulations and applications on real-world datasets, including the NCAA football league network, the DBLP collaboration network, the Amazon product co-purchasing network, and the YouTube social network, we demonstrate the efficacy of our method in significantly improving the performance of various community detection algorithms.

This paper introduces a Korean legal judgment prediction (LJP) dataset for insurance disputes. Successful LJP models on insurance disputes can benefit insurance companies and their customers. It can save both sides' time and money by allowing them to predict how the result would come out if they proceed to the dispute mediation process. As is often the case with low-resource languages, there is a limitation on the amount of data available for this specific task. To mitigate this issue, we investigate how one can achieve a good performance despite the limitation in data. In our experiment, we demonstrate that Sentence Transformer Fine-tuning (SetFit, Tunstall et al., 2022) is a good alternative to standard fine-tuning when training data are limited. The models fine-tuned with the SetFit approach on our data show similar performance to the Korean LJP benchmark models (Hwang et al., 2022) despite the much smaller data size.

Technology ecosystems often undergo significant transformations as they mature. For example, telephony, the Internet, and PCs all started with a single provider, but in the United States each is now served by a competitive market that uses comprehensive and universal technology standards to provide compatibility. This white paper presents our view on how the cloud ecosystem, barely over fifteen years old, could evolve as it matures.

This paper shows that masked autoencoders (MAE) are scalable self-supervised learners for computer vision. Our MAE approach is simple: we mask random patches of the input image and reconstruct the missing pixels. It is based on two core designs. First, we develop an asymmetric encoder-decoder architecture, with an encoder that operates only on the visible subset of patches (without mask tokens), along with a lightweight decoder that reconstructs the original image from the latent representation and mask tokens. Second, we find that masking a high proportion of the input image, e.g., 75%, yields a nontrivial and meaningful self-supervisory task. Coupling these two designs enables us to train large models efficiently and effectively: we accelerate training (by 3x or more) and improve accuracy. Our scalable approach allows for learning high-capacity models that generalize well: e.g., a vanilla ViT-Huge model achieves the best accuracy (87.8%) among methods that use only ImageNet-1K data. Transfer performance in downstream tasks outperforms supervised pre-training and shows promising scaling behavior.
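The masking step described above can be sketched as follows. This is a minimal illustration that assumes uniform random sampling of patch indices without replacement; the function name `random_mask`, the 196-patch grid (a 14 x 14 layout), and the seed are hypothetical choices, not details taken from the abstract.

```python
import random

def random_mask(num_patches, mask_ratio=0.75, seed=None):
    """Split patch indices into visible and masked sets.

    Assumes uniform random sampling without replacement; only the visible
    indices would be passed to the encoder.
    """
    rng = random.Random(seed)
    num_masked = int(num_patches * mask_ratio)
    order = list(range(num_patches))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked

# Hypothetical example: 196 patches with the 75% ratio quoted in the abstract.
visible, masked = random_mask(196, mask_ratio=0.75, seed=0)
```

With a 75% ratio the encoder sees only a quarter of the patches (49 of 196 here), which is one plausible source of the training speed-up the abstract reports.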

This paper presents a new approach for assembling graph neural networks based on framelet transforms. The latter provides a multi-scale representation for graph-structured data. With the framelet system, we can decompose the graph feature into low-pass and high-pass frequencies as extracted features for network training, which then defines a framelet-based graph convolution. The framelet decomposition naturally induces a graph pooling strategy by aggregating the graph feature into low-pass and high-pass spectra, which considers both the feature values and geometry of the graph data and conserves the total information. The graph neural networks with the proposed framelet convolution and pooling achieve state-of-the-art performance in many types of node and graph prediction tasks. Moreover, we propose shrinkage as a new activation for the framelet convolution, which thresholds the high-frequency information at different scales. Compared to ReLU, shrinkage in framelet convolution improves the graph neural network model in terms of denoising and signal compression: noises in both node and structure can be significantly reduced by accurately cutting off the high-pass coefficients from framelet decomposition, and the signal can be compressed to less than half its original size with the prediction performance well preserved.
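As a rough illustration of shrinkage used as an activation, the sketch below applies soft-thresholding to high-pass coefficients and leaves low-pass coefficients untouched. Soft-thresholding is assumed here because it is the standard shrinkage rule in wavelet denoising; the abstract does not specify the exact rule or how thresholds are set per scale, so `soft_shrink`, `shrink_high_pass`, and the threshold value are hypothetical.

```python
def soft_shrink(x, threshold):
    """Soft-thresholding: zero out small values, pull large ones toward zero."""
    if x > threshold:
        return x - threshold
    if x < -threshold:
        return x + threshold
    return 0.0

def shrink_high_pass(low_pass, high_pass, threshold):
    """Keep low-pass coefficients; shrink high-pass ones (assumed design)."""
    return list(low_pass), [soft_shrink(c, threshold) for c in high_pass]

# Hypothetical coefficients: small high-pass values are treated as noise.
low, high = shrink_high_pass([2.0, -1.0], [1.5, -0.2, -2.0, 0.1], threshold=0.5)
```

Coefficients whose magnitude is below the threshold become exactly zero, which is what makes both the denoising and the compression effects described above possible.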

This paper proposes a method to modify traditional convolutional neural networks (CNNs) into interpretable CNNs, in order to clarify knowledge representations in high conv-layers of CNNs. In an interpretable CNN, each filter in a high conv-layer represents a certain object part. We do not need any annotations of object parts or textures to supervise the learning process. Instead, the interpretable CNN automatically assigns an object part to each filter in a high conv-layer during the learning process. Our method can be applied to different types of CNNs with different structures. The clear knowledge representation in an interpretable CNN can help people understand the logic inside a CNN, i.e., the patterns on which the CNN bases its decisions. Experiments showed that filters in an interpretable CNN were more semantically meaningful than those in traditional CNNs.