: Deep learning methodologies have been used to create applications that can cause threats to privacy, democracy and national security and could be used to further amplify malicious activities. One of those deep learning-powered applications in recent times is synthesized videos of famous personalities. According to Forbes, Generative Adversarial Networks(GANs) generated fake videos growing exponentially every year and the organization known as Deeptrace had estimated an increase of deepfakes by 84% from the year 2018 to 2019. They are used to generate and modify human faces, where most of the existing fake videos are of prurient non-consensual nature, of which its estimates to be around 96% and some carried out impersonating personalities for cyber crime. In this paper, available video datasets are identified and a pretrained model BlazeFace is used to detect faces, and a ResNet and Xception ensembled architectured neural network trained on the dataset to achieve the goal of detection of fake faces in videos. The model is optimized over a loss value and log loss values and evaluated over its F1 score. Over a sample of data, it is observed that focal loss provides better accuracy, F1 score and loss as the gamma of the focal loss becomes a hyper parameter. This provides a k-folded accuracy of around 91% at its peak in a training cycle with the real world accuracy subjected to change over time as the model decays.

相關內容

Traditional object detection answers two questions; "what" (what the object is?) and "where" (where the object is?). "what" part of the object detection can be fine-grained further i.e. "what type", "what shape" and "what material" etc. This results in the shifting of the object detection tasks to the object description paradigm. Describing an object provides additional detail that enables us to understand the characteristics and attributes of the object ("plastic boat" not just boat, "glass bottle" not just bottle). This additional information can implicitly be used to gain insight into unseen objects (e.g. unknown object is "metallic", "has wheels"), which is not possible in traditional object detection. In this paper, we present a new approach to simultaneously detect objects and infer their attributes, we call it Detect and Describe (DaD) framework. DaD is a deep learning-based approach that extends object detection to object attribute prediction as well. We train our model on aPascal train set and evaluate our approach on aPascal test set. We achieve 97.0% in Area Under the Receiver Operating Characteristic Curve (AUC) for object attributes prediction on aPascal test set. We also show qualitative results for object attribute prediction on unseen objects, which demonstrate the effectiveness of our approach for describing unknown objects.

In this paper we present a novel method for a naive agent to detect novel objects it encounters in an interaction. We train a reinforcement learning policy on a stacking task given a known object type, and then observe the results of the agent attempting to stack various other objects based on the same trained policy. By extracting embedding vectors from a convolutional neural net trained over the results of the aforementioned stacking play, we can determine the similarity of a given object to known object types, and determine if the given object is likely dissimilar enough to the known types to be considered a novel class of object. We present the results of this method on two datasets gathered using two different policies and demonstrate what information the agent needs to extract from its environment to make these novelty judgments.

AI-driven image generation has improved significantly in recent years. Generative adversarial networks (GANs), like StyleGAN, are able to generate high-quality realistic data and have artistic control over the output, as well. In this work, we present StyleT2F, a method of controlling the output of StyleGAN2 using text, in order to be able to generate a detailed human face from textual description. We utilize StyleGAN's latent space to manipulate different facial features and conditionally sample the required latent code, which embeds the facial features mentioned in the input text. Our method proves to capture the required features correctly and shows consistency between the input text and the output images. Moreover, our method guarantees disentanglement on manipulating a wide range of facial features that sufficiently describes a human face.

In recent years, malware detection has become an active research topic in the area of Internet of Things (IoT) security. The principle is to exploit knowledge from large quantities of continuously generated malware. Existing algorithms practice available malware features for IoT devices and lack real-time prediction behaviors. More research is thus required on malware detection to cope with real-time misclassification of the input IoT data. Motivated by this, in this paper we propose an adversarial self-supervised architecture for detecting malware in IoT networks, SETTI, considering samples of IoT network traffic that may not be labeled. In the SETTI architecture, we design three self-supervised attack techniques, namely Self-MDS, GSelf-MDS and ASelf-MDS. The Self-MDS method considers the IoT input data and the adversarial sample generation in real-time. The GSelf-MDS builds a generative adversarial network model to generate adversarial samples in the self-supervised structure. Finally, ASelf-MDS utilizes three well-known perturbation sample techniques to develop adversarial malware and inject it over the self-supervised architecture. Also, we apply a defence method to mitigate these attacks, namely adversarial self-supervised training to protect the malware detection architecture against injecting the malicious samples. To validate the attack and defence algorithms, we conduct experiments on two recent IoT datasets: IoT23 and NBIoT. Comparison of the results shows that in the IoT23 dataset, the Self-MDS method has the most damaging consequences from the attacker's point of view by reducing the accuracy rate from 98% to 74%. In the NBIoT dataset, the ASelf-MDS method is the most devastating algorithm that can plunge the accuracy rate from 98% to 77%.

Multi-camera vehicle tracking is one of the most complicated tasks in Computer Vision as it involves distinct tasks including Vehicle Detection, Tracking, and Re-identification. Despite the challenges, multi-camera vehicle tracking has immense potential in transportation applications including speed, volume, origin-destination (O-D), and routing data generation. Several recent works have addressed the multi-camera tracking problem. However, most of the effort has gone towards improving accuracy on high-quality benchmark datasets while disregarding lower camera resolutions, compression artifacts and the overwhelming amount of computational power and time needed to carry out this task on its edge and thus making it prohibitive for large-scale and real-time deployment. Therefore, in this work we shed light on practical issues that should be addressed for the design of a multi-camera tracking system to provide actionable and timely insights. Moreover, we propose a real-time city-scale multi-camera vehicle tracking system that compares favorably to computationally intensive alternatives and handles real-world, low-resolution CCTV instead of idealized and curated video streams. To show its effectiveness, in addition to integration into the Regional Integrated Transportation Information System (RITIS), we participated in the 2021 NVIDIA AI City multi-camera tracking challenge and our method is ranked among the top five performers on the public leaderboard.

Automatic fake news detection models are ostensibly based on logic, where the truth of a claim made in a headline can be determined by supporting or refuting evidence found in a resulting web query. These models are believed to be reasoning in some way; however, it has been shown that these same results, or better, can be achieved without considering the claim at all -- only the evidence. This implies that other signals are contained within the examined evidence, and could be based on manipulable factors such as emotion, sentiment, or part-of-speech (POS) frequencies, which are vulnerable to adversarial inputs. We neutralize some of these signals through multiple forms of both neural and non-neural pre-processing and style transfer, and find that this flattening of extraneous indicators can induce the models to actually require both claims and evidence to perform well. We conclude with the construction of a model using emotion vectors built off a lexicon and passed through an "emotional attention" mechanism to appropriately weight certain emotions. We provide quantifiable results that prove our hypothesis that manipulable features are being used for fact-checking.

Humans have a natural instinct to identify unknown object instances in their environments. The intrinsic curiosity about these unknown instances aids in learning about them, when the corresponding knowledge is eventually available. This motivates us to propose a novel computer vision problem called: `Open World Object Detection', where a model is tasked to: 1) identify objects that have not been introduced to it as `unknown', without explicit supervision to do so, and 2) incrementally learn these identified unknown categories without forgetting previously learned classes, when the corresponding labels are progressively received. We formulate the problem, introduce a strong evaluation protocol and provide a novel solution, which we call ORE: Open World Object Detector, based on contrastive clustering and energy based unknown identification. Our experimental evaluation and ablation studies analyze the efficacy of ORE in achieving Open World objectives. As an interesting by-product, we find that identifying and characterizing unknown instances helps to reduce confusion in an incremental object detection setting, where we achieve state-of-the-art performance, with no extra methodological effort. We hope that our work will attract further research into this newly identified, yet crucial research direction.

The considerable significance of Anomaly Detection (AD) problem has recently drawn the attention of many researchers. Consequently, the number of proposed methods in this research field has been increased steadily. AD strongly correlates with the important computer vision and image processing tasks such as image/video anomaly, irregularity and sudden event detection. More recently, Deep Neural Networks (DNNs) offer a high performance set of solutions, but at the expense of a heavy computational cost. However, there is a noticeable gap between the previously proposed methods and an applicable real-word approach. Regarding the raised concerns about AD as an ongoing challenging problem, notably in images and videos, the time has come to argue over the pitfalls and prospects of methods have attempted to deal with visual AD tasks. Hereupon, in this survey we intend to conduct an in-depth investigation into the images/videos deep learning based AD methods. We also discuss current challenges and future research directions thoroughly.

Detection and recognition of text in natural images are two main problems in the field of computer vision that have a wide variety of applications in analysis of sports videos, autonomous driving, industrial automation, to name a few. They face common challenging problems that are factors in how text is represented and affected by several environmental conditions. The current state-of-the-art scene text detection and/or recognition methods have exploited the witnessed advancement in deep learning architectures and reported a superior accuracy on benchmark datasets when tackling multi-resolution and multi-oriented text. However, there are still several remaining challenges affecting text in the wild images that cause existing methods to underperform due to there models are not able to generalize to unseen data and the insufficient labeled data. Thus, unlike previous surveys in this field, the objectives of this survey are as follows: first, offering the reader not only a review on the recent advancement in scene text detection and recognition, but also presenting the results of conducting extensive experiments using a unified evaluation framework that assesses pre-trained models of the selected methods on challenging cases, and applies the same evaluation criteria on these techniques. Second, identifying several existing challenges for detecting or recognizing text in the wild images, namely, in-plane-rotation, multi-oriented and multi-resolution text, perspective distortion, illumination reflection, partial occlusion, complex fonts, and special characters. Finally, the paper also presents insight into the potential research directions in this field to address some of the mentioned challenges that are still encountering scene text detection and recognition techniques.

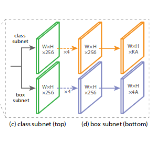

It is a common paradigm in object detection frameworks to treat all samples equally and target at maximizing the performance on average. In this work, we revisit this paradigm through a careful study on how different samples contribute to the overall performance measured in terms of mAP. Our study suggests that the samples in each mini-batch are neither independent nor equally important, and therefore a better classifier on average does not necessarily mean higher mAP. Motivated by this study, we propose the notion of Prime Samples, those that play a key role in driving the detection performance. We further develop a simple yet effective sampling and learning strategy called PrIme Sample Attention (PISA) that directs the focus of the training process towards such samples. Our experiments demonstrate that it is often more effective to focus on prime samples than hard samples when training a detector. Particularly, On the MSCOCO dataset, PISA outperforms the random sampling baseline and hard mining schemes, e.g. OHEM and Focal Loss, consistently by more than 1% on both single-stage and two-stage detectors, with a strong backbone ResNeXt-101.