As rapidly growing cyber-physical platforms, autonomous vehicles (AVs) face more security challenges as their capabilities continue to expand. In recent years, adversaries have actively targeted the perception sensors of autonomous vehicles with sophisticated attacks that are not easily detected by the vehicles' control systems. This work proposes an Anomaly Behavior Analysis approach to detect perception sensor attacks against an autonomous vehicle. The framework relies on temporal features extracted from a physics-based autonomous vehicle behavior model to capture the normal behavior of vehicular perception in autonomous driving. By combining model-based techniques and machine learning algorithms, the proposed framework distinguishes between normal and abnormal vehicular perception behavior. To demonstrate the application of the framework in practice, we performed a depth camera attack experiment on an autonomous vehicle testbed and generated an extensive dataset. We validated the effectiveness of the proposed framework using this real-world data and released the dataset for public access. To our knowledge, this dataset is the first of its kind and will serve as a valuable resource for the research community in evaluating intrusion detection techniques.

Related content

Matrix factorization (MF) is a simple collaborative filtering technique that achieves superior recommendation accuracy by decomposing the user-item rating matrix into user and item latent matrices. This approach relies on learning from user-item interactions, which may not effectively capture the underlying shared dependencies between users or items. Therefore, there is scope to explicitly capture shared dependencies to further improve recommendation accuracy and the interpretability of learning results by summarizing user-item interactions. Based on these insights, we propose "Hierarchical Matrix Factorization" (HMF), which incorporates clustering concepts to capture the hierarchy, where leaf nodes and other nodes correspond to users/items and clusters, respectively. Central to our approach is an additional decomposition, which we call hierarchical embeddings, of the user and item latent matrices (embeddings) into probabilistic connection matrices, which link the hierarchy, and a root cluster latent matrix. Thus, each node is represented by the weighted average of the embeddings of its parent clusters. The embeddings are differentiable, allowing simultaneous learning of interactions and clustering using a single gradient descent method. Furthermore, the obtained cluster-specific interactions naturally summarize user-item interactions and provide interpretability. Experimental results on rating and ranking predictions demonstrated the competitiveness of HMF over vanilla and hierarchical MF methods, especially its robustness to sparse interactions. Additionally, it was confirmed that the clustering integration of HMF has the potential for faster learning convergence and mitigation of overfitting compared to MF, and also provides interpretability through a cluster-centered case study.
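
The hierarchical-embedding idea in the abstract above can be illustrated with a minimal sketch. It assumes that each probabilistic connection matrix is obtained by a row-wise softmax over free logits and that the hierarchy has two levels; the function names, the logits, and the toy sizes are hypothetical and are not taken from the paper. Each node's embedding comes out as a weighted average of its parent clusters' embeddings, as the abstract describes.

```python
import math

def softmax(row):
    """Turn a row of logits into probabilities that sum to 1."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def hierarchical_embeddings(connection_logits, root_embeddings):
    """Compose embeddings from the root level down to the leaves.

    connection_logits is ordered from the leaf level to the root level
    (an assumption of this sketch). Every level is made row-stochastic,
    so each node is a weighted average of its parents' embeddings.
    """
    emb = root_embeddings
    for logits in reversed(connection_logits):
        probs = [softmax(r) for r in logits]
        emb = matmul(probs, emb)
    return emb

# Toy example: 3 users -> 2 clusters -> 2 root clusters (hypothetical values).
user_to_cluster = [[0.0, 0.0], [10.0, -10.0], [-10.0, 10.0]]
cluster_to_root = [[5.0, -5.0], [-5.0, 5.0]]
root = [[1.0, 0.0], [0.0, 1.0]]
user_emb = hierarchical_embeddings([user_to_cluster, cluster_to_root], root)
```

Item embeddings would follow the same construction with their own connection matrices. Because every step is a softmax followed by a matrix product, the whole mapping is differentiable, which is what allows interactions and clustering to be learned together by gradient descent.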

We define a symbolic execution framework QSE for quantum programs by integrating symbolic variables into quantum states and the outcomes of quantum measurements. The soundness theorem of QSE is proved. We further introduce symbolic stabilizer states, which facilitate the efficient analysis of quantum error correction programs. Within the QSE framework, we can use symbolic expressions to characterize the possible adversarial errors in quantum error correction, providing a significant improvement over existing methods that rely on sampling with simulators. We implement QSE with the support of symbolic stabilizer states in a prototype tool named QuantumSE.jl. With experiments on representative quantum error correction codes, including quantum repetition codes, Kitaev's toric codes, and quantum Tanner codes, we demonstrate the efficiency of QuantumSE.jl for debugging quantum error correction programs with over 1000 qubits. In addition, as a by-product of QSE, QuantumSE.jl's sampling functionality for stabilizer circuits also outperforms the state-of-the-art stabilizer simulator, Google's Stim, in the experiments.

The Universal Basic Computing Power (UBCP) initiative ensures global, free access to a set amount of computing power specifically for AI research and development (R&D). This initiative comprises three key elements. First, UBCP must be cost-free, with its usage limited to AI R&D and minimal additional conditions. Second, UBCP should continually incorporate state-of-the-art AI advancements, including efficiently distilled, compressed, and deployed training data, foundational models, benchmarks, and governance tools. Lastly, it is essential for UBCP to be universally accessible, ensuring convenience for all users. We urge major stakeholders in AI development (large platforms, open-source contributors, and policymakers) to prioritize the UBCP initiative.

Recently, graph neural networks (GNNs) have been widely used for document classification. However, most existing methods are based on static word co-occurrence graphs without sentence-level information, which poses three challenges: (1) word ambiguity, (2) word synonymity, and (3) dynamic contextual dependency. To address these challenges, we propose a novel GNN-based sparse structure learning model for inductive document classification. Specifically, a document-level graph is initially generated by a disjoint union of sentence-level word co-occurrence graphs. Our model collects a set of trainable edges connecting disjoint words between sentences and employs structure learning to sparsely select edges with dynamic contextual dependencies. Graphs with sparse structures can jointly exploit local and global contextual information in documents through GNNs. For inductive learning, the refined document graph is further fed into a general readout function for graph-level classification and optimization in an end-to-end manner. Extensive experiments on several real-world datasets demonstrate that the proposed model outperforms most state-of-the-art methods and reveal the necessity of learning sparse structures for each document.
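
The graph construction described above can be sketched as follows. This is only an illustration under stated assumptions: nodes are tagged with their sentence index so that the same word in two sentences stays two separate nodes, co-occurrence uses a sliding window of hypothetical size 2, and the candidate (trainable) edge set is taken to be every cross-sentence node pair. The abstract does not specify these details, and the function names are made up for the example.

```python
from itertools import combinations, product

def sentence_graph(tokens, sent_id, window=2):
    """Co-occurrence graph for one sentence; nodes are (sent_id, word)."""
    nodes = [(sent_id, w) for w in dict.fromkeys(tokens)]  # unique words, order kept
    edges = set()
    for i, w in enumerate(tokens):
        for v in tokens[i + 1:i + window]:  # words inside the sliding window
            if v != w:
                edges.add(frozenset({(sent_id, w), (sent_id, v)}))
    return nodes, edges

def document_graph(sentences, window=2):
    """Disjoint union of sentence graphs plus cross-sentence candidate edges."""
    per_sentence = [sentence_graph(s, i, window) for i, s in enumerate(sentences)]
    nodes = [n for ns, _ in per_sentence for n in ns]
    edges = set().union(*(es for _, es in per_sentence))
    # Assumption: every pair of nodes from two different sentences is a
    # candidate edge that a structure learner could later keep or drop.
    candidates = set()
    for (ns_a, _), (ns_b, _) in combinations(per_sentence, 2):
        for a, b in product(ns_a, ns_b):
            candidates.add(frozenset({a, b}))
    return nodes, edges, candidates

sentences = [
    ["the", "bank", "approved", "the", "loan"],
    ["the", "river", "bank", "flooded"],
]
nodes, edges, candidates = document_graph(sentences)
```

In this toy document, "bank" appears as two distinct nodes, one per sentence, which is how a disjoint union keeps different senses of a word apart until a cross-sentence edge is selected.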

Graph neural networks (GNNs) are widely used to learn powerful representations of graph-structured data. Recent work demonstrates that transferring knowledge from self-supervised tasks to downstream tasks can further improve graph representations. However, there is an inherent gap between self-supervised tasks and downstream tasks in terms of optimization objective and training data. Conventional pre-training methods may not be effective enough at knowledge transfer since they do not make any adaptation for downstream tasks. To solve such problems, we propose a new transfer learning paradigm on GNNs which can effectively leverage self-supervised tasks as auxiliary tasks to help the target task. Our methods adaptively select and combine different auxiliary tasks with the target task in the fine-tuning stage. We design an adaptive auxiliary loss weighting model to learn the weights of auxiliary tasks by quantifying the consistency between auxiliary tasks and the target task. In addition, we learn the weighting model through meta-learning. Our methods can be applied to various transfer learning approaches; they perform well not only in multi-task learning but also in pre-training and fine-tuning. Comprehensive experiments on multiple downstream tasks demonstrate that the proposed methods can effectively combine auxiliary tasks with the target task and significantly improve the performance compared to state-of-the-art methods.

Recent contrastive representation learning methods rely on estimating mutual information (MI) between multiple views of an underlying context. For example, we can derive multiple views of a given image by applying data augmentation, or we can split a sequence into views comprising the past and future of some step in the sequence. Contrastive lower bounds on MI are easy to optimize, but have a strong underestimation bias when estimating large amounts of MI. We propose decomposing the full MI estimation problem into a sum of smaller estimation problems by splitting one of the views into progressively more informed subviews and by applying the chain rule on MI between the decomposed views. This expression contains a sum of unconditional and conditional MI terms, each measuring modest chunks of the total MI, which facilitates approximation via contrastive bounds. To maximize the sum, we formulate a contrastive lower bound on the conditional MI which can be approximated efficiently. We refer to our general approach as Decomposed Estimation of Mutual Information (DEMI). We show that DEMI can capture a larger amount of MI than standard non-decomposed contrastive bounds in a synthetic setting, and that it learns better representations in a vision domain and for dialogue generation.
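
The decomposition above rests on the chain rule of mutual information, I(x; y1, y2) = I(x; y1) + I(x; y2 | y1). The sketch below checks this identity numerically on a small discrete joint distribution. It illustrates the identity only, not the DEMI estimator or its contrastive bounds; the probability table and the helper names are made up for the example.

```python
import math
from collections import defaultdict

def marginal(joint, keep):
    """Marginalize a joint pmf onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in keep)] += p
    return out

def mutual_information(joint, a, b, cond=()):
    """I(A; B | C) in nats; a, b, cond are tuples of variable indices."""
    p_abc = marginal(joint, a + b + cond)
    p_ac = marginal(joint, a + cond)
    p_bc = marginal(joint, b + cond)
    p_c = marginal(joint, cond)  # {(): 1.0} when there is no conditioning
    na, nb = len(a), len(b)
    total = 0.0
    for key, p in p_abc.items():
        if p == 0.0:
            continue
        ka, kb, kc = key[:na], key[na:na + nb], key[na + nb:]
        total += p * math.log(p * p_c[kc] / (p_ac[ka + kc] * p_bc[kb + kc]))
    return total

# Hypothetical joint pmf over (x, y1, y2), all binary.
joint = {
    (0, 0, 0): 0.30, (0, 0, 1): 0.10, (0, 1, 0): 0.05, (0, 1, 1): 0.05,
    (1, 0, 0): 0.05, (1, 0, 1): 0.05, (1, 1, 0): 0.10, (1, 1, 1): 0.30,
}
full = mutual_information(joint, (0,), (1, 2))        # I(x; y1, y2)
first = mutual_information(joint, (0,), (1,))         # I(x; y1)
second = mutual_information(joint, (0,), (2,), (1,))  # I(x; y2 | y1)
```

Here `full` equals `first + second` up to floating-point error, and each term on the right is no larger than the total, which is the sense in which each term measures a more modest chunk of MI.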

Relation prediction for knowledge graphs aims at predicting missing relationships between entities. Despite the importance of inductive relation prediction, most previous works are limited to a transductive setting and cannot process previously unseen entities. The recently proposed subgraph-based relation reasoning models provide alternatives that inductively predict links from the subgraph structure surrounding a candidate triplet. However, we observe that these methods often neglect the directed nature of the extracted subgraph and weaken the role of relation information in the subgraph modeling. As a result, they fail to effectively handle asymmetric/anti-symmetric triplets and produce insufficient embeddings for the target triplets. To this end, we introduce a Communicative Message Passing neural network for Inductive reLation rEasoning, CoMPILE, that reasons over local directed subgraph structures and has a strong inductive bias to process entity-independent semantic relations. In contrast to existing models, CoMPILE strengthens the message interactions between edges and entities through a communicative kernel and enables a sufficient flow of relation information. Moreover, we demonstrate that CoMPILE can naturally handle asymmetric/anti-symmetric relations, without explosively increasing the number of model parameters, by extracting the directed enclosing subgraphs. Extensive experiments show substantial performance gains in comparison to state-of-the-art methods on commonly used benchmark datasets with various inductive settings.

Graph neural networks (GNNs) are a popular class of machine learning models whose major advantage is their ability to incorporate a sparse and discrete dependency structure between data points. Unfortunately, GNNs can only be used when such a graph structure is available. In practice, however, real-world graphs are often noisy and incomplete or might not be available at all. With this work, we propose to jointly learn the graph structure and the parameters of graph convolutional networks (GCNs) by approximately solving a bilevel program that learns a discrete probability distribution on the edges of the graph. This allows one to apply GCNs not only in scenarios where the given graph is incomplete or corrupted but also in those where a graph is not available. We conduct a series of experiments that analyze the behavior of the proposed method and demonstrate that it outperforms related methods by a significant margin.
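
To make "a discrete probability distribution on the edges" concrete, the sketch below samples an undirected graph from a matrix of edge probabilities. It assumes each edge is an independent Bernoulli variable, which the abstract does not state explicitly, and it deliberately leaves out the bilevel optimization that would learn those probabilities. The function name and the probability values are hypothetical.

```python
import random

def sample_adjacency(edge_probs, rng):
    """Draw a symmetric 0/1 adjacency matrix, one Bernoulli trial per edge."""
    n = len(edge_probs)
    adj = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):  # upper triangle only, then mirror
            if rng.random() < edge_probs[i][j]:
                adj[i][j] = adj[j][i] = 1
    return adj

# Hypothetical edge probabilities for a 3-node graph; in a learned setting
# these would be the parameters optimized in the outer problem.
edge_probs = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
]
adj = sample_adjacency(edge_probs, random.Random(0))
```

With probabilities of exactly 0 or 1 the sample is deterministic; intermediate values would yield a different graph on each draw, and a GCN could then be trained on such samples.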

Multi-relation Question Answering is a challenging task because it requires elaborate analysis of questions and reasoning over multiple fact triples in a knowledge base. In this paper, we present a novel model called Interpretable Reasoning Network that employs an interpretable, hop-by-hop reasoning process for question answering. The model dynamically decides which part of an input question should be analyzed at each hop; predicts a relation that corresponds to the current parsed results; utilizes the predicted relation to update the question representation and the state of the reasoning process; and then drives the next-hop reasoning. Experiments show that our model yields state-of-the-art results on two datasets. More interestingly, the model can offer traceable and observable intermediate predictions for reasoning analysis and failure diagnosis, thereby allowing manual manipulation in predicting the final answer.

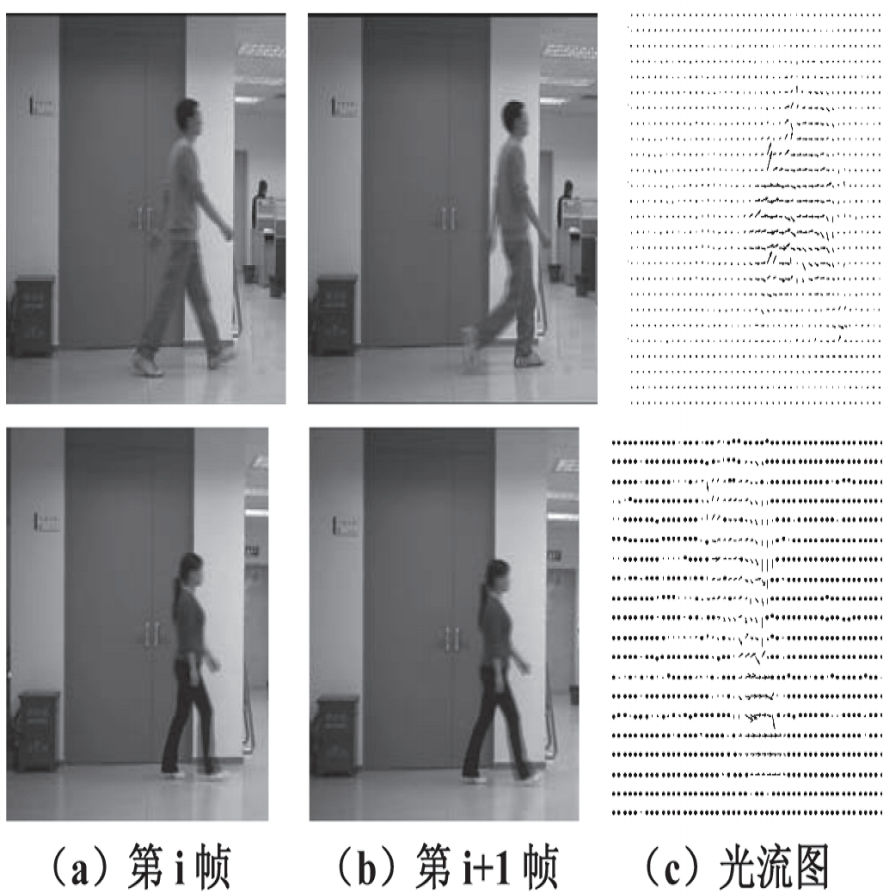

Detecting carried objects is one of the requirements for developing systems to reason about activities involving people and objects. We present an approach to detect carried objects from a single video frame with a novel method that incorporates features from multiple scales. Initially, a foreground mask in a video frame is segmented into multi-scale superpixels. Then the human-like regions in the segmented area are identified by matching a set of extracted features from superpixels against learned features in a codebook. A carried object probability map is generated using the complement of the matching probabilities of superpixels to human-like regions and background information. A group of superpixels with high carried object probability and strong edge support is then merged to obtain the shape of the carried object. We applied our method to two challenging datasets, and results show that our method is competitive with or better than the state of the art.