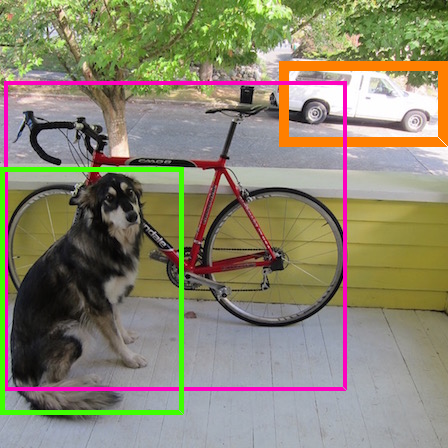

Object detection is a fundamental task in applications ranging from autonomous driving to intelligent security systems. However, recognition of a person can be hindered when their clothing is decorated with carefully designed graffiti patterns, causing object detection to fail. To achieve greater attack potential against unknown black-box models, adversarial patches capable of affecting the outputs of multiple object detection models are required. While ensemble models have proven effective, current research in the field of object detection typically focuses on the simple fusion of the outputs of all models, with limited attention given to developing general adversarial patches that function effectively in the physical world. In this paper, we introduce the concept of energy and treat the adversarial patch generation process as an optimization of the adversarial patches to minimize the total energy of the "person" category. Additionally, by adopting adversarial training, we construct a dynamically optimized ensemble model. During training, the weight parameters of the attacked target models are adjusted to find the balance point at which the generated adversarial patches can effectively attack all target models. We carried out six sets of comparative experiments and tested our algorithm on five mainstream object detection models. The adversarial patches generated by our algorithm can reduce the recognition accuracy of YOLOv2 and YOLOv3 to 13.19% and 29.20%, respectively. In addition, we conducted experiments to test the effectiveness of T-shirts covered with our adversarial patches in the physical world and showed that people wearing them can go unrecognized by the object detection model. Finally, leveraging the Grad-CAM tool, we explored the attack mechanism of adversarial patches from an energetic perspective.
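The idea of re-weighting the attacked models during training can be illustrated with a small sketch. The abstract does not give the update rule, so everything here is an assumption made for illustration: the per-model "person" energies are plain scalars supplied by the caller, and the functions `ensemble_energy` and `update_weights` (a multiplicative-weights step) are hypothetical names, not the authors' method.

```python
import math


def ensemble_energy(energies, weights):
    """Weighted sum of per-model 'person' energies (lower = stronger attack)."""
    return sum(w * e for w, e in zip(weights, energies))


def update_weights(weights, energies, step=1.0):
    """Shift weight toward models whose energy is still high.

    Assumed rule (not from the paper): multiply each weight by
    exp(step * energy), then renormalize so the weights sum to 1.
    """
    raw = [w * math.exp(step * e) for w, e in zip(weights, energies)]
    total = sum(raw)
    return [r / total for r in raw]


# Three hypothetical target models; the second one resists the patch most.
weights = [1 / 3, 1 / 3, 1 / 3]
energies = [0.2, 0.9, 0.5]
new_weights = update_weights(weights, energies)
```

Under this assumed rule, the model that the current patch attacks least well gets the largest weight in the next patch-optimization step, which is one way to look for a balance point across all target models.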

Related content

Trajectory prediction has garnered widespread attention in different fields, such as autonomous driving and robotic navigation. However, due to the significant variations in trajectory patterns across different scenarios, models trained in known environments often falter in unseen ones. To learn a generalized model that can directly handle unseen domains without requiring any model updating, we propose a novel meta-learning-based trajectory prediction method called MetaTra. This approach incorporates a Dual Trajectory Transformer (Dual-TT), which enables a thorough exploration of the individual intention and the interactions within group motion patterns in diverse scenarios. Building on this, we propose a meta-learning framework to simulate the generalization process between source and target domains. Furthermore, to enhance the stability of our prediction outcomes, we propose a Serial and Parallel Training (SPT) strategy along with a feature augmentation method named MetaMix. Experimental results on several real-world datasets confirm that MetaTra not only surpasses other state-of-the-art methods but also exhibits plug-and-play capabilities, particularly in the realm of domain generalization.

Combining LiDAR and camera data has shown potential in enhancing short-distance object detection in autonomous driving systems. Yet, the fusion encounters difficulties with long-distance detection due to the contrast between LiDAR's sparse data and the dense resolution of cameras. In addition, discrepancies between the two data representations further complicate fusion methods. We introduce AYDIV, a novel framework integrating a tri-phase alignment process specifically designed to enhance long-distance detection even amidst data discrepancies. AYDIV consists of the Global Contextual Fusion Alignment Transformer (GCFAT), which improves the extraction of camera features and provides a deeper understanding of large-scale patterns; the Sparse Fused Feature Attention (SFFA), which fine-tunes the fusion of LiDAR and camera details; and the Volumetric Grid Attention (VGA) for a comprehensive spatial data fusion. AYDIV's performance on the Waymo Open Dataset (WOD), with an improvement of 1.24% in mAPH value (L2 difficulty), and on the Argoverse2 Dataset, with an improvement of 7.40% in AP value, demonstrates its efficacy in comparison to other existing fusion-based methods. Our code is publicly available at //github.com/sanjay-810/AYDIV2

Manipulating deformable objects is a ubiquitous task in household environments, demanding adequate representation and accurate dynamics prediction due to the objects' infinite degrees of freedom. This work proposes DeformNet, which utilizes latent space modeling with a learned 3D representation model to tackle these challenges effectively. The proposed representation model combines a PointNet encoder and a conditional neural radiance field (NeRF), facilitating a thorough acquisition of object deformations and variations in lighting conditions. To model the complex dynamics, we employ a recurrent state-space model (RSSM) that accurately predicts the transformation of the latent representation over time. Extensive simulation experiments with diverse objectives demonstrate the generalization capabilities of DeformNet for various deformable object manipulation tasks, even in the presence of previously unseen goals. Finally, we deploy DeformNet on an actual UR5 robotic arm to demonstrate its capability in real-world scenarios.

Trajectory planning is a fundamental problem in robotics. It facilitates a wide range of applications in navigation and motion planning, control, and multi-agent coordination. Trajectory planning is a difficult problem due to its computational complexity and the complexity of real-world environments, with their uncertainty, non-linearity, and real-time requirements. The multi-agent trajectory planning problem adds another dimension of difficulty due to inter-agent interaction. Existing solutions are either search-based or optimization-based approaches with simplified assumptions about the environment, limited planning speed, and limited scalability in the number of agents. In this work, we make the first attempt to reformulate single-agent and multi-agent trajectory planning problems as query problems over an implicit neural representation of trajectories. We formulate such implicit representations as Neural Trajectory Models (NTM), which can be queried to generate nearly optimal trajectories in complex environments. We conduct experiments in simulation environments and demonstrate that NTM can solve single-agent and multi-agent trajectory planning problems. In the experiments, NTMs achieve (1) sub-millisecond planning time using GPUs, (2) avoidance of almost all environment collisions, (3) avoidance of almost all inter-agent collisions, and (4) generation of almost shortest paths. We also demonstrate that the same NTM framework can be used for trajectory correction and multi-trajectory conflict resolution, efficiently refining low-quality and conflicting multi-agent trajectories into nearly optimal solutions. (Open source code will be available at //github.com/laser2099/neural-trajectory-model)

Deep neural networks are vulnerable to adversarial examples, posing a threat to the models' applications and raising security concerns. An intriguing property of adversarial examples is their strong transferability. Several methods have been proposed to enhance transferability, including ensemble attacks which have demonstrated their efficacy. However, prior approaches simply average logits, probabilities, or losses for model ensembling, lacking a comprehensive analysis of how and why model ensembling significantly improves transferability. In this paper, we propose a similar targeted attack method named Similar Target (ST). By promoting cosine similarity between the gradients of each model, our method regularizes the optimization direction to simultaneously attack all surrogate models. This strategy has been proven to enhance generalization ability. Experimental results on ImageNet validate the effectiveness of our approach in improving adversarial transferability. Our method outperforms state-of-the-art attackers on 18 discriminative classifiers and adversarially trained models.
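As a rough illustration of the quantity this abstract refers to, the sketch below computes the mean pairwise cosine similarity between per-model gradient vectors. It is an assumption-laden toy: the gradients are given as plain Python lists, the function names `cosine_similarity` and `mean_pairwise_cosine` are hypothetical, and how the paper turns this quantity into a regularizer is not stated in the abstract.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def mean_pairwise_cosine(grads):
    """Average cosine similarity over all unordered pairs of gradient vectors."""
    pairs = [(i, j) for i in range(len(grads)) for j in range(i + 1, len(grads))]
    if not pairs:
        return 0.0
    return sum(cosine_similarity(grads[i], grads[j]) for i, j in pairs) / len(pairs)


# Hypothetical input gradients from three surrogate models.
grads = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
alignment = mean_pairwise_cosine(grads)
```

A value near 1 means the surrogate models agree on the update direction; a value near 0 or below means their gradients are unrelated or conflicting, which is the situation a similarity-promoting term would penalize.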

Community detection is a crucial task in network analysis that can be significantly improved by incorporating subject-level information, i.e., covariates. However, current methods often struggle with selecting tuning parameters and analyzing low-degree nodes. In this paper, we introduce a novel method that addresses these challenges by constructing network-adjusted covariates, which leverage the network connections and covariates with a unique weight to each node based on the node's degree. Spectral clustering on network-adjusted covariates yields an exact recovery of community labels under certain conditions, which is tuning-free and computationally efficient. We present novel theoretical results about the strong consistency of our method under degree-corrected stochastic blockmodels with covariates, even in the presence of mis-specification and sparse communities with bounded degrees. Additionally, we establish a general lower bound for the community detection problem when both network and covariates are present, and it shows our method is optimal up to a constant factor. Our method outperforms existing approaches in simulations and a LastFM app user network, and provides interpretable community structures in a statistics publication citation network where 30% of nodes are isolated.

The electronic design automation of analog circuits has been a longstanding challenge in the integrated circuit field due to the huge design space and complex design trade-offs among circuit specifications. In the past decades, intensive research efforts have mostly been devoted to automating transistor sizing for a given circuit topology. By recognizing the graph nature of circuits, this paper presents a Circuit Graph Neural Network (CktGNN) that simultaneously automates the circuit topology generation and device sizing based on the encoder-dependent optimization subroutines. Particularly, CktGNN encodes circuit graphs using a two-level GNN framework (of nested GNN) where circuits are represented as combinations of subgraphs in a known subgraph basis. In this way, it significantly improves design efficiency by reducing the number of subgraphs to perform message passing. Nonetheless, another critical roadblock to advancing learning-assisted circuit design automation is a lack of public benchmarks to perform canonical assessment and reproducible research. To tackle the challenge, we introduce Open Circuit Benchmark (OCB), an open-sourced dataset that contains 10K distinct operational amplifiers with carefully-extracted circuit specifications. OCB is also equipped with communicative circuit generation and evaluation capabilities such that it can help to generalize CktGNN to design various analog circuits by producing corresponding datasets. Experiments on OCB show the extraordinary advantages of CktGNN through representation-based optimization frameworks over other recent powerful GNN baselines and human experts' manual designs. Our work paves the way toward a learning-based open-sourced design automation for analog circuits. Our source code is available at //github.com/zehao-dong/CktGNN.

Resilience against stragglers is a critical element of prediction serving systems, tasked with executing inferences on input data for a pre-trained machine-learning model. In this paper, we propose NeRCC, a general straggler-resistant framework for approximate coded computing. NeRCC includes three layers: (1) encoding regression and sampling, which generates coded data points as a combination of original data points; (2) computing, in which a cluster of workers run inference on the coded data points; and (3) decoding regression and sampling, which approximately recovers the predictions of the original data points from the available predictions on the coded data points. We argue that the overall objective of the framework reveals an underlying interconnection between two regression models in the encoding and decoding layers. We propose a solution to the nested regressions problem by summarizing their dependence on two regularization terms that are jointly optimized. Our extensive experiments on different datasets and various machine learning models, including LeNet5, RepVGG, and Vision Transformer (ViT), demonstrate that NeRCC accurately approximates the original predictions in a wide range of stragglers, outperforming the state-of-the-art by up to 23%.

Autonomic computing investigates how systems can achieve (user) specified control outcomes on their own, without the intervention of a human operator. Autonomic computing fundamentals have been substantially influenced by those of control theory for closed and open-loop systems. In practice, complex systems may exhibit a number of concurrent and inter-dependent control loops. Despite research into autonomic models for managing computer resources, ranging from individual resources (e.g., web servers) to a resource ensemble (e.g., multiple resources within a data center), research into integrating Artificial Intelligence (AI) and Machine Learning (ML) to improve resource autonomy and performance at scale continues to be a fundamental challenge. The integration of AI/ML to achieve such autonomic and self-management of systems can be achieved at different levels of granularity, from full to human-in-the-loop automation. In this article, leading academics, researchers, practitioners, engineers, and scientists in the fields of cloud computing, AI/ML, and quantum computing join to discuss current research and potential future directions for these fields. Further, we discuss challenges and opportunities for leveraging AI and ML in next generation computing for emerging computing paradigms, including cloud, fog, edge, serverless and quantum computing environments.

Multi-modal fusion is a fundamental task for the perception of an autonomous driving system, which has recently intrigued many researchers. However, achieving good performance is not an easy task due to the noisy raw data, underutilized information, and the misalignment of multi-modal sensors. In this paper, we provide a literature review of the existing multi-modal-based methods for perception tasks in autonomous driving. We make a detailed analysis of over 50 papers that leverage perception sensors, including LiDAR and camera, to solve object detection and semantic segmentation tasks. Different from the traditional methodology for categorizing fusion models, we propose an innovative way that divides them into two major classes and four minor classes by a more reasonable taxonomy in the view of the fusion stage. Moreover, we dive deep into the current fusion methods, focusing on the remaining problems and opening up discussions on the potential research opportunities. In conclusion, what we expect to do in this paper is to present a new taxonomy of multi-modal fusion methods for the autonomous driving perception tasks and provoke thoughts on fusion-based techniques in the future.