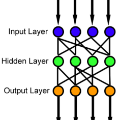

Several neural network approaches for solving differential equations employ trial solutions with a feedforward neural network. There are different ways to incorporate the trial solution in the construction; for instance, one may include it directly in the cost function. Used within the corresponding neural network, the trial solutions define the so-called neural form. Such neural forms are general, flexible tools for solving various differential equations. In this article we consider time-dependent initial value problems, which require the neural form framework to be set up adequately. The neural forms presented so far in the literature for this setting can be regarded as first-order polynomials. In this work we propose to extend the polynomial order of the neural forms. The novel collocation-type construction includes several feedforward neural networks, one for each order. Additionally, we propose fragmenting the computational domain into subdomains. The neural forms are solved on each subdomain, with the interfacing grid points overlapping in order to provide initial values over the whole fragmentation. We illustrate in experiments that combining higher-order collocation neural forms with domain fragmentation makes it possible to solve initial value problems over large domains with high accuracy and reliability.
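For orientation, the polynomial construction described above can be written out as follows. This is a sketch under assumed notation that does not appear in the abstract itself: $u_0 = u(t_0)$ denotes the initial value, $N_k(t, \mathbf{p}_k)$ the output of the $k$-th feedforward network with weights $\mathbf{p}_k$, and $m$ the polynomial order.

$$
\tilde{u}(t) = u_0 + N_1(t, \mathbf{p}_1)\,(t - t_0)
\qquad \text{(first-order neural form)}
$$

$$
\tilde{u}(t) = u_0 + \sum_{k=1}^{m} N_k(t, \mathbf{p}_k)\,(t - t_0)^k
\qquad \text{(neural form of order } m\text{)}
$$

Both forms satisfy $\tilde{u}(t_0) = u_0$ by construction, whatever the network weights are. Under domain fragmentation, one would presumably use the same form on each subdomain, with $t_0$ and $u_0$ replaced by the left grid point of that subdomain and the value computed there on the preceding subdomain.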

Related content

Formalisms based on temporal logics interpreted over finite strict linear orders, known in the literature as finite traces, have been used for temporal specification in automated planning, process modelling, (runtime) verification and synthesis of programs, as well as in knowledge representation and reasoning. In this paper, we focus on first-order temporal logic on finite traces. We first investigate preservation of equivalences and satisfiability of formulas between finite and infinite traces, by providing a set of semantic and syntactic conditions to guarantee when the distinction between reasoning in the two cases can be blurred. Moreover, we show that the satisfiability problem on finite traces for several decidable fragments of first-order temporal logic is ExpSpace-complete, as in the infinite trace case, while it decreases to NExpTime when finite traces bounded in the number of instants are considered. This leads also to new complexity results for temporal description logics over finite traces. Finally, we investigate applications to planning and verification, in particular by establishing connections with the notions of insensitivity to infiniteness and safety from the literature.

This work proposes an adaptive structure-preserving model order reduction method for finite-dimensional parametrized Hamiltonian systems modeling non-dissipative phenomena. To overcome the slowly decaying Kolmogorov width typical of transport problems, the full model is approximated on local reduced spaces that are adapted in time using dynamical low-rank approximation techniques. The reduced dynamics is prescribed by approximating the symplectic projection of the Hamiltonian vector field in the tangent space to the local reduced space. This ensures that the canonical symplectic structure of the Hamiltonian dynamics is preserved during the reduction. In addition, accurate approximations with low-rank reduced solutions are obtained by allowing the dimension of the reduced space to change during the time evolution. Whenever the quality of the reduced solution, assessed via an error indicator, is not satisfactory, the reduced basis is augmented in the parameter direction that is worst approximated by the current basis. Extensive numerical tests involving wave interactions, nonlinear transport problems, and the Vlasov equation demonstrate the superior stability properties and considerable runtime speedups of the proposed method as compared to global and traditional reduced basis approaches.

Markov chain Monte Carlo (MCMC) methods form one of the algorithmic foundations of high-dimensional Bayesian inverse problems. The recent development of likelihood-informed subspace (LIS) methods offers a viable route to designing efficient MCMC methods for exploring high-dimensional posterior distributions by exploiting the intrinsic low-dimensional structure of the underlying inverse problem. However, existing LIS methods and the associated performance analysis often assume that the prior distribution is Gaussian. This assumption is limiting for inverse problems that aim to promote sparsity in the parameter estimation, as heavy-tailed priors, e.g., the Laplace distribution or the elastic net commonly used in Bayesian LASSO, are often needed in this case. To overcome this limitation, we consider a prior normalization technique that transforms any non-Gaussian (e.g., heavy-tailed) prior into a standard Gaussian distribution, which makes it possible to implement LIS methods to accelerate MCMC sampling via such transformations. We also rigorously investigate the integration of such transformations with several MCMC methods for high-dimensional problems. Finally, we demonstrate various aspects of our theoretical claims on two nonlinear inverse problems.
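The idea of normalizing a heavy-tailed prior can be illustrated in one dimension. The sketch below assumes a zero-mean Laplace prior and uses the standard CDF-matching map (push the Gaussian variable through its CDF, then through the inverse Laplace CDF). The function names are hypothetical, and the abstract does not say that this is the exact transformation the authors use.

```python
import math
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard Gaussian reference distribution


def laplace_cdf(x, scale=1.0):
    """CDF of a zero-mean Laplace distribution with the given scale."""
    if x < 0.0:
        return 0.5 * math.exp(x / scale)
    return 1.0 - 0.5 * math.exp(-x / scale)


def laplace_inv_cdf(u, scale=1.0):
    """Inverse CDF of the same Laplace distribution, for 0 < u < 1."""
    if not 0.0 < u < 1.0:
        raise ValueError("u must lie strictly between 0 and 1")
    if u < 0.5:
        return scale * math.log(2.0 * u)
    return -scale * math.log(2.0 * (1.0 - u))


def gaussian_to_laplace(z, scale=1.0):
    """Map a standard Gaussian variable z to a Laplace-distributed x.

    For very large |z| the Gaussian CDF rounds to 0 or 1 in floating point,
    so this naive version is only suitable for moderate values of z.
    """
    return laplace_inv_cdf(_STD_NORMAL.cdf(z), scale)


def laplace_to_gaussian(x, scale=1.0):
    """Inverse map: send a Laplace-distributed x back to the Gaussian variable."""
    return _STD_NORMAL.inv_cdf(laplace_cdf(x, scale))
```

With such a map, a sampler can work in the Gaussian variable `z` and evaluate the likelihood at `gaussian_to_laplace(z)`, so the prior seen by the sampler is standard Gaussian.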

To overcome topological constraints and improve the expressiveness of normalizing flow architectures, Wu, Köhler and Noé introduced stochastic normalizing flows, which combine deterministic, learnable flow transformations with stochastic sampling methods. In this paper, we consider stochastic normalizing flows from a Markov chain point of view. In particular, we replace transition densities by general Markov kernels and establish proofs via Radon-Nikodym derivatives, which allows us to incorporate distributions without densities in a sound way. Further, we generalize the results for sampling from posterior distributions as required in inverse problems. The performance of the proposed conditional stochastic normalizing flow is demonstrated by numerical examples.

Data availability is one of the most important features in distributed storage systems, made possible by data replication. Nowadays data are generated rapidly, and developing efficient, scalable and reliable storage systems has become one of the major challenges for high performance computing. In this work, we develop a dynamic, robust and strongly consistent distributed storage implementation suitable for handling large objects (such as files). We do so by integrating an Adaptive, Reconfigurable, Atomic Storage framework, called ARES, with a distributed file system, called COBFS, which relies on a block fragmentation technique to handle large objects. With the addition of ARES, we also enable the use of an erasure-coded algorithm to further split our data and to potentially improve storage efficiency at the replica servers and operation latency. To put the practicality of our outcomes to the test, we conduct an in-depth experimental evaluation on the Emulab and AWS EC2 testbeds, illustrating the benefits of our approaches, as well as other interesting tradeoffs.

In this work, we present $\texttt{Volley Revolver}$, a novel matrix-encoding method that is particularly convenient for privacy-preserving neural networks to make predictions, and use it to implement a CNN for handwritten image classification. Based on this encoding method, we develop several additional operations for putting secure matrix multiplication over encrypted data matrices into practice. To multiply two matrices $A$ and $B$, the main idea, in its simple version, is to encrypt matrix $A$ and the transpose of matrix $B$ into two ciphertexts, respectively. Along with the additional operations, the homomorphic matrix multiplication $A \times B$ can be calculated efficiently over encrypted data matrices. For the convolution operation in a CNN, we develop a feasible and efficient evaluation strategy on the basis of the $\texttt{Volley Revolver}$ encoding method. We span each convolution kernel of the CNN in advance to a matrix space of the same size as the input image so as to generate several ciphertexts, each of which is later used together with the input image to calculate some part of the final convolution result. We accumulate all these partial convolution results and thus obtain the final convolution result.
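Why encoding the transpose of $B$ is convenient can be seen on unencrypted data. The sketch below is a plaintext analogue only: it involves no encryption, it is not the $\texttt{Volley Revolver}$ algorithm itself, and the function names are hypothetical. It shows that once $B^T$ is available, every entry of $A \times B$ is an elementwise product of two rows followed by a sum.

```python
def transpose(M):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul_with_transposed_rhs(A, B_t):
    """Compute A x B from A (n x k) and B_t = B^T (m x k).

    Entry (i, j) is the elementwise product of row i of A with row j of B_t,
    summed over the row. Both operands are therefore read row by row.
    """
    k = len(A[0])
    if any(len(row) != k for row in A) or any(len(row) != k for row in B_t):
        raise ValueError("rows of A and B^T must all have the same length")
    return [
        [sum(a * b for a, b in zip(row_a, row_bt)) for row_bt in B_t]
        for row_a in A
    ]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = matmul_with_transposed_rhs(A, transpose(B))  # [[19, 22], [43, 50]]
```

Elementwise products and sums over packed rows are the kind of operations that packed homomorphic encryption schemes typically expose, which is presumably what makes this layout attractive. The additional ciphertext operations mentioned in the abstract are not reproduced here.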

Casting nonlocal problems in variational form and discretizing them with the finite element (FE) method facilitates the use of nonlocal vector calculus to prove well-posedness, convergence, and stability of such schemes. Employing an FE method also facilitates meshing of complicated domain geometries and coupling with FE methods for local problems. However, nonlocal weak problems involve the computation of a double integral, which is computationally expensive and presents several challenges. In particular, the inner integral of the variational form associated with the stiffness matrix is defined over the intersections of FE mesh elements with a ball of radius $\delta$, where $\delta$ is the range of nonlocal interaction. Identifying and parameterizing these intersections is a nontrivial computational geometry problem. In this work, we propose a quadrature technique where the inner integration is performed using quadrature points distributed over the full ball, without regard for how it intersects elements, and weights are computed based on the generalized moving least squares method. Thus, as opposed to all previously employed methods, our technique does not require element-by-element integration and fully circumvents the computation of element-ball intersections. This paper considers one- and two-dimensional implementations of piecewise linear continuous FE approximations, focusing on the case where the element size $h$ and the nonlocal radius $\delta$ are proportional, as is typical of practical computations. When boundary conditions are treated carefully and the outer integral of the variational form is computed accurately, the proposed method is asymptotically compatible in the limit of $h \sim \delta \to 0$, featuring at least first-order convergence in $L^2$ for all dimensions, using both uniform and nonuniform grids.

We present a percolation inverse problem for diode networks: Given information about which pairs of nodes allow current to percolate from one to the other, can one construct a diode network consistent with the observed currents? We implement a divide-and-concur iterative projection method for solving the problem and demonstrate the supremacy of our method over an exhaustive approach for nontrivial instances of the problem. We find that the problem is most difficult when some but not all of the percolation data are hidden, and that the most difficult networks to reconstruct generally are those for which the currents are most sensitive to the addition or removal of a single diode.

Graph signals are signals with an irregular structure that can be described by a graph. Graph neural networks (GNNs) are information processing architectures tailored to these graph signals and made of stacked layers that compose graph convolutional filters with nonlinear activation functions. Graph convolutions endow GNNs with invariance to permutations of the graph nodes' labels. In this paper, we consider the design of trainable nonlinear activation functions that take into consideration the structure of the graph. This is accomplished by using graph median filters and graph max filters, which mimic linear graph convolutions and are shown to retain the permutation invariance of GNNs. We also discuss modifications to the backpropagation algorithm necessary to train local activation functions. The advantages of localized activation function architectures are demonstrated in four numerical experiments: source localization on synthetic graphs, authorship attribution of 19th century novels, movie recommender systems and scientific article classification. In all cases, localized activation functions are shown to improve model capacity.
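A minimal sketch of the two neighborhood operators named above, assuming a graph given as a dense adjacency matrix and a closed 1-hop neighborhood (each node together with its direct neighbors). The function names are hypothetical, and the trainable weights that the paper attaches to these operators are omitted.

```python
from statistics import median


def neighborhood(adj, node):
    """Closed 1-hop neighborhood: the node itself plus its adjacent nodes."""
    return [node] + [j for j, w in enumerate(adj[node]) if w and j != node]


def graph_median_filter(adj, x):
    """Replace each node's value by the median over its closed neighborhood."""
    return [median(x[j] for j in neighborhood(adj, i)) for i in range(len(x))]


def graph_max_filter(adj, x):
    """Replace each node's value by the maximum over its closed neighborhood."""
    return [max(x[j] for j in neighborhood(adj, i)) for i in range(len(x))]


# Path graph 0 - 1 - 2 with one signal value per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
x = [3.0, 1.0, 2.0]
med = graph_median_filter(adj, x)  # [2.0, 2.0, 1.5]
mx = graph_max_filter(adj, x)      # [3.0, 3.0, 2.0]
```

Because both operators depend only on the multiset of values in each neighborhood, relabeling the nodes simply relabels the output in the same way, which is the property the abstract refers to as permutation invariance.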

This paper addresses the problem of formally verifying desirable properties of neural networks, i.e., obtaining provable guarantees that neural networks satisfy specifications relating their inputs and outputs (robustness to bounded-norm adversarial perturbations, for example). Most previous work on this topic was limited in its applicability by the size of the network, the network architecture and the complexity of the properties to be verified. In contrast, our framework applies to a general class of activation functions and specifications on neural network inputs and outputs. We formulate verification as an optimization problem (seeking to find the largest violation of the specification) and solve a Lagrangian relaxation of the optimization problem to obtain an upper bound on the worst-case violation of the specification being verified. Our approach is anytime, i.e., it can be stopped at any time and a valid bound on the maximum violation can be obtained. We develop specialized verification algorithms with provable tightness guarantees under special assumptions and demonstrate the practical significance of our general verification approach on a variety of verification tasks.