We study the joint occurrence of large values of a Markov random field or undirected graphical model associated to a block graph. On such graphs, containing trees as special cases, we aim to generalize recent results for extremes of Markov trees. Every pair of nodes in a block graph is connected by a unique shortest path. These paths are shown to determine the limiting distribution of the properly rescaled random field given that a fixed variable exceeds a high threshold. When the sub-vectors induced by the blocks follow H\"usler-Reiss extreme value copulas, the global Markov property of the original field induces a particular structure on the parameter matrix of the limiting max-stable H\"usler-Reiss distribution. The multivariate Pareto version of the latter turns out to be an extremal graphical model according to the original block graph. Moreover, thanks to these algebraic relations, the parameters are still identifiable even if some variables are latent.

Related content

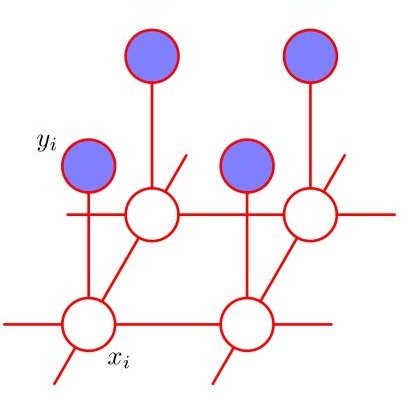

In high-dimensional regression, we attempt to estimate a parameter vector $\beta_0\in\mathbb{R}^p$ from $n\lesssim p$ observations $\{(y_i,x_i)\}_{i\leq n}$ where $x_i\in\mathbb{R}^p$ is a vector of predictors and $y_i$ is a response variable. A well-established approach uses convex regularizers to promote specific structures (e.g. sparsity) of the estimate $\widehat{\beta}$, while allowing for practical algorithms. Theoretical analysis implies that convex penalization schemes have nearly optimal estimation properties in certain settings. However, in general the gaps between statistically optimal estimation (with unbounded computational resources) and convex methods are poorly understood. We show that when the statistician has very simple structural information about the distribution of the entries of $\beta_0$, a large gap frequently exists between the best performance achieved by any convex regularizer satisfying a mild technical condition and either (i) the optimal statistical error or (ii) the statistical error achieved by optimal approximate message passing algorithms. Remarkably, a gap occurs at high enough signal-to-noise ratio if and only if the distribution of the coordinates of $\beta_0$ is not log-concave. These conclusions follow from an analysis of standard Gaussian designs. Our lower bounds are expected to be generally tight, and we prove tightness under certain conditions.

Super-resolution estimation is the problem of recovering a stream of spikes (point sources) from the noisy observation of a small number of its first trigonometric moments. The performance of super-resolution is recognized to be intimately related to the separation between the spikes to be recovered. A novel notion of stability of the Fisher information matrix (FIM) of the super-resolution problem is introduced, when the minimal eigenvalue of the FIM is not asymptotically vanishing. The regime where the minimal separation is inversely proportional to the number of acquired moments is considered. It is shown that there is a separation threshold above which the eigenvalues of the FIM can be bounded by a quantity that does not depend on the number of moments. The proof relies on characterizing the connection between the stability of the FIM and a generalization of the Beurling-Selberg box approximation problem.

How can we approximate sparse graphs and sequences of sparse graphs (with unbounded average degree)? We consider convergence in the first $k$ moments of the graph spectrum (equivalent to the numbers of closed $k$-walks) appropriately normalized. We introduce a simple, easy to sample, random graph model that captures the limiting spectra of many sequences of interest, including the sequence of hypercube graphs. The Random Overlapping Communities (ROC) model is specified by a distribution on pairs $(s,q)$, $s \in \mathbb{Z}_+, q \in (0,1]$. A graph on $n$ vertices with average degree $d$ is generated by repeatedly picking pairs $(s,q)$ from the distribution, adding an Erd\H{o}s-R\'{e}nyi random graph of edge density $q$ on a subset of vertices chosen by including each vertex with probability $s/n$, and repeating this process so that the expected degree is $d$. Our proof of convergence to a ROC random graph is based on the Stieltjes moment condition. We also show that the model is an effective approximation for individual graphs. For almost all possible triangle-to-edge and four-cycle-to-edge ratios, there exists a pair $(s,q)$ such that the ROC model with this single community type produces graphs with both desired ratios, a property that cannot be achieved by stochastic block models of bounded description size. Moreover, ROC graphs exhibit an inverse relationship between degree and clustering coefficient, a characteristic of many real-world networks.
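
The generation procedure described above can be sketched as follows for a single community type $(s,q)$. This is a minimal illustration, not the authors' implementation: the function name `sample_roc_graph` and the rule for choosing the number of communities are assumptions. The rule rests on the observation that one community adds roughly $s^2 q/n$ to each vertex's expected degree, so about $dn/(s^2 q)$ communities are needed to reach expected degree $d$.

```python
import random


def sample_roc_graph(n, d, s, q, seed=None):
    """Sample an edge set from a single-community-type ROC model (sketch).

    n: number of vertices, d: target expected degree,
    s: expected community size, q: edge density inside a community.
    """
    rng = random.Random(seed)
    # Assumption: each community contributes about s*s*q/n to a vertex's
    # expected degree, so about d*n/(s*s*q) communities give degree d.
    num_communities = max(1, round(d * n / (s * s * q)))
    edges = set()
    for _ in range(num_communities):
        # Include each vertex in the community independently with prob. s/n.
        members = [v for v in range(n) if rng.random() < s / n]
        # Add an Erdos-Renyi graph of density q on the chosen vertices.
        for i in range(len(members)):
            for j in range(i + 1, len(members)):
                if rng.random() < q:
                    edges.add((members[i], members[j]))
    return edges


if __name__ == "__main__":
    g = sample_roc_graph(n=200, d=4, s=10, q=0.5, seed=0)
    print("edges:", len(g), "average degree:", 2 * len(g) / 200)
```

Because overlapping communities can propose the same edge twice and the edge set stores it once, the realized average degree falls slightly below $d$ in this sketch.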

We consider the problem of high-dimensional Ising model selection using neighborhood-based least absolute shrinkage and selection operator (Lasso). It is rigorously proved that under some mild coherence conditions on the population covariance matrix of the Ising model, consistent model selection can be achieved with sample sizes $n=\Omega{(d^3\log{p})}$ for any tree-like graph in the paramagnetic phase, where $p$ is the number of variables and $d$ is the maximum node degree. The obtained sufficient conditions for consistent model selection with Lasso are the same in the scaling of the sample complexity as that of $\ell_1$-regularized logistic regression.

One widely used class of models is the exponential random graph (ERG) models, which form a comprehensive family that includes independent and dyadic edge models, Markov random graphs, and many other graph distributions, and which also allows the inclusion of covariates that can lead to a better fit of the model. Another increasingly popular class of models in statistical network analysis is stochastic block models (SBMs). They can be used for the purpose of grouping nodes into communities or discovering and analyzing a latent structure of a network. The stochastic block model is a generative model for random graphs that tends to produce graphs containing communities, that is, subsets of nodes characterized by being connected to each other. Many researchers from various areas have been using computational tools to fit these models without, however, analyzing their suitability for the network data they are studying. The complexity involved in the estimation process and in the goodness-of-fit verification methodologies for these models can make it difficult to assess their adequacy and to discard one model in favor of another. Clearly, results obtained through an inappropriate model can lead the researcher to very wrong conclusions about the phenomenon studied. The purpose of this work is to present a simple methodology, based on hypothesis tests, to verify whether there is a model specification error for these two models widely used in the literature to represent complex networks: the ERGM and the SBM. We believe that this tool can be very useful for those who want to use these models in a more careful way, verifying beforehand whether the models are suitable for the data under study.

This paper considers synchronous discrete-time dynamical systems on graphs based on the threshold model. It is well known that after a finite number of rounds these systems either reach a fixed point or enter a 2-cycle. The problem of finding the fixed points for this type of dynamical system is in general both NP-hard and #P-complete. In this paper we give a surprisingly simple graph-theoretic characterization of fixed points and 2-cycles for the class of finite trees. Thus, the class of trees is the first nontrivial graph class for which a complete characterization of fixed points exists. This characterization enables us to provide bounds for the total number of fixed points and pure 2-cycles. It also leads to an output-sensitive algorithm to efficiently generate these states.
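
The fixed-point versus 2-cycle dichotomy mentioned above can be observed by direct simulation. The sketch below is only an illustration under an assumed update rule (a vertex takes state 1 in the next round exactly when at least `thresholds[v]` of its neighbors are currently 1); the abstract does not fix the precise variant of the threshold model, and the function names `step` and `limit_behaviour` are hypothetical. It does not implement the paper's characterization or its output-sensitive algorithm.

```python
def step(adj, thresholds, state):
    """One synchronous round of the assumed threshold rule."""
    return tuple(
        1 if sum(state[u] for u in adj[v]) >= thresholds[v] else 0
        for v in range(len(adj))
    )


def limit_behaviour(adj, thresholds, state, max_rounds=10_000):
    """Iterate until a fixed point or a 2-cycle is reached.

    Returns ("fixed point", state) or ("2-cycle", (state_a, state_b)).
    """
    state = tuple(state)
    for _ in range(max_rounds):
        nxt = step(adj, thresholds, state)
        if nxt == state:
            return "fixed point", state
        if step(adj, thresholds, nxt) == state:
            return "2-cycle", (state, nxt)
        state = nxt
    raise RuntimeError("no fixed point or 2-cycle within max_rounds")


if __name__ == "__main__":
    # A path on three vertices 0 - 1 - 2 (a small tree), all thresholds 1.
    adj = [[1], [0, 2], [1]]
    thresholds = [1, 1, 1]
    print(limit_behaviour(adj, thresholds, (1, 1, 1)))
    print(limit_behaviour(adj, thresholds, (1, 0, 0)))
```

On this path, the all-ones state is a fixed point, while starting from `(1, 0, 0)` the system ends up alternating between `(0, 1, 0)` and `(1, 0, 1)`.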

Let $G$ be a large (simple, unlabeled) dense graph on $n$ vertices. Suppose that we only know, or can estimate, the empirical distribution of the number of subgraphs $F$ that each vertex in $G$ participates in, for some fixed small graph $F$. How many other graphs would look essentially the same to us, i.e., would have a similar local structure? In this paper, we derive upper and lower bounds on the number of graphs whose empirical distribution lies close (in the Kolmogorov-Smirnov distance) to that of $G$. Our bounds are given as solutions to a maximum entropy problem on random graphs of a fixed size $k$ that does not depend on $n$, under $d$ global density constraints. The bounds are asymptotically close, with a gap that vanishes with $d$ at a rate that depends on the concentration function of the center of the Kolmogorov-Smirnov ball.

Graph neural networks (GNNs) are typically applied to static graphs that are assumed to be known upfront. This static input structure is often informed purely by insight of the machine learning practitioner, and might not be optimal for the actual task the GNN is solving. In absence of reliable domain expertise, one might resort to inferring the latent graph structure, which is often difficult due to the vast search space of possible graphs. Here we introduce Pointer Graph Networks (PGNs) which augment sets or graphs with additional inferred edges for improved model generalisation ability. PGNs allow each node to dynamically point to another node, followed by message passing over these pointers. The sparsity of this adaptable graph structure makes learning tractable while still being sufficiently expressive to simulate complex algorithms. Critically, the pointing mechanism is directly supervised to model long-term sequences of operations on classical data structures, incorporating useful structural inductive biases from theoretical computer science. Qualitatively, we demonstrate that PGNs can learn parallelisable variants of pointer-based data structures, namely disjoint set unions and link/cut trees. PGNs generalise out-of-distribution to 5x larger test inputs on dynamic graph connectivity tasks, outperforming unrestricted GNNs and Deep Sets.

We advocate the use of implicit fields for learning generative models of shapes and introduce an implicit field decoder for shape generation, aimed at improving the visual quality of the generated shapes. An implicit field assigns a value to each point in 3D space, so that a shape can be extracted as an iso-surface. Our implicit field decoder is trained to perform this assignment by means of a binary classifier. Specifically, it takes a point coordinate, along with a feature vector encoding a shape, and outputs a value which indicates whether the point is outside the shape or not. By replacing conventional decoders by our decoder for representation learning and generative modeling of shapes, we demonstrate superior results for tasks such as shape autoencoding, generation, interpolation, and single-view 3D reconstruction, particularly in terms of visual quality.

This paper introduces the Hawkes skeleton and the Hawkes graph. These objects summarize the branching structure of a multivariate Hawkes point process in a compact, yet meaningful way. We demonstrate how graph-theoretic vocabulary (`ancestor sets', `parent sets', `connectivity', `walks', `walk weights', ...) is very convenient for the discussion of multivariate Hawkes processes. For example, we reformulate the classic eigenvalue-based subcriticality criterion of multitype branching processes in graph terms. Next to these more terminological contributions, we show how the graph view may be used for the specification and estimation of Hawkes models from large, multitype event streams. Based on earlier work, we give a nonparametric statistical procedure to estimate the Hawkes skeleton and the Hawkes graph from data. We show how the graph estimation may then be used for specifying and fitting parametric Hawkes models. Our estimation method avoids the a priori assumptions on the model from a straightforward MLE-approach and is numerically more flexible than the latter. Our method has two tuning parameters: one controlling numerical complexity, the other one controlling the sparseness of the estimated graph. A simulation study confirms that the presented procedure works as desired. We pay special attention to computational issues in the implementation. This makes our results applicable to high-dimensional event-stream data, such as dozens of event streams and thousands of events per component.
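
For orientation, the classic eigenvalue-based criterion referred to above states that a multitype branching process is subcritical when the spectral radius of its mean offspring matrix is below 1. The sketch below checks this numerically; it does not reproduce the paper's graph-based reformulation. The matrix name `branching`, its reading as "expected number of type-$j$ children per type-$i$ event", and both function names are assumptions made for illustration. The check uses the bound $\rho(A) \le \|A^k\|_\infty^{1/k}$, valid for every $k \ge 1$, so a value below 1 certifies subcriticality, whereas a value of 1 or more is inconclusive.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [
        [sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def spectral_radius_upper_bound(a, k=64):
    """Return ||A^k||_inf ** (1/k), an upper bound on the spectral radius."""
    power = a
    for _ in range(k - 1):
        power = mat_mul(power, a)
    # Infinity norm: the largest absolute row sum.
    norm = max(sum(abs(x) for x in row) for row in power)
    return norm ** (1.0 / k)


def certifies_subcritical(branching, k=64):
    """True if the bound proves spectral radius < 1; False is inconclusive."""
    return spectral_radius_upper_bound(branching, k) < 1.0


if __name__ == "__main__":
    # Hypothetical two-component branching matrix.
    branching = [[0.2, 0.3],
                 [0.1, 0.4]]
    print(spectral_radius_upper_bound(branching))
    print(certifies_subcritical(branching))
```

The bound tightens toward the spectral radius as `k` grows, at the cost of `k - 1` matrix multiplications.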