Accurate segmentation of interconnected line networks, such as grain boundaries in polycrystalline material microstructures, poses a significant challenge due to the fragmented masks produced by conventional computer vision algorithms, including convolutional neural networks. These algorithms struggle with thin masks, often necessitating intricate post-processing for effective contour closure and continuity. Addressing this issue, this paper introduces a fast, high-fidelity post-processing technique, leveraging domain knowledge about grain boundary connectivity and employing conditional random fields and perceptual grouping rules. This approach significantly enhances segmentation mask accuracy, achieving a 79% segment identification accuracy in validation with a U-Net model on electron microscopy images of a polycrystalline oxide. Additionally, a novel grain alignment metric is introduced, showing a 51% improvement in grain alignment, providing a more detailed assessment of segmentation performance for complex microstructures. This method not only enables rapid and accurate segmentation but also facilitates an unprecedented level of data analysis, significantly improving the statistical representation of grain boundary networks, making it suitable for a range of disciplines where precise segmentation of interconnected line networks is essential.

Related content

Self-Admitted Technical Debt (SATD) encompasses a wide array of sub-optimal design and implementation choices reported in software artefacts (e.g., code comments and commit messages) by developers themselves. Such reports have been central to the study of software maintenance and evolution over the last decades. However, they can also be deemed dreadful sources of information on potentially exploitable vulnerabilities and security flaws. This work investigates the security implications of SATD from a technical and developer-centred perspective. On the one hand, it analyses whether security pointers disclosed inside SATD sources can be used to characterise vulnerabilities in Open-Source Software (OSS) projects and repositories. On the other hand, it delves into developers' perspectives regarding the motivations behind this practice, its prevalence, and its potential negative consequences. We followed a mixed-methods approach consisting of (i) the analysis of a preexisting dataset containing 94,455 SATD instances and (ii) an online survey with 222 OSS practitioners. We gathered 201 SATD instances through the dataset analysis and mapped them to different Common Weakness Enumeration (CWE) identifiers. Overall, 25 different types of CWEs were spotted across commit messages, pull requests, code comments, and issue sections, of which 8 appear among MITRE's Top-25 most dangerous ones. The survey shows that software practitioners often place security pointers across SATD artefacts to promote a security culture among their peers and help them spot flaky code sections, among other motives. However, they also consider such a practice risky as it may facilitate vulnerability exploits. Our findings suggest that preserving the contextual integrity of security pointers disseminated across SATD artefacts is critical to safeguard both commercial and OSS solutions against zero-day attacks.

Beamforming is essential for compensating the severe path loss in millimeter-wave (mmWave) communications: large antenna arrays form narrow beams that deliver satisfactory received power. However, accurate beam alignment over such narrow beams with traditional beam selection approaches, which rely mainly on channel state information, typically imposes significant latency and computing overheads, which is often infeasible in highly dynamic scenarios such as vehicle-to-vehicle (V2V) communications. In contrast, utilizing out-of-band contextual information, such as vehicular position information, is a potential alternative to reduce such overheads. In this context, this paper presents a deep learning-based solution that uses vehicular position information to predict the optimal beams with sufficient mmWave received power, so that the best V2V line-of-sight links can be ensured proactively. Experimental evaluation on real-world measured mmWave sensing and communications datasets shows that the solution can achieve up to 84.58% of received power of link status on average, which confirms it as a promising solution for beamforming in 60 GHz mmWave-enabled V2V communications.

Deep neural networks have exhibited remarkable performance in a variety of computer vision fields, especially in semantic segmentation tasks. Their success is often attributed to multi-level feature fusion, which enables them to understand both global and local information from an image. However, we found that multi-level features from parallel branches are on different scales. The scale disequilibrium is a universal and unwanted flaw that leads to detrimental gradient descent, thereby degrading performance in semantic segmentation. We discover that scale disequilibrium is caused by bilinear upsampling, which is supported by both theoretical and empirical evidence. Based on this observation, we propose injecting scale equalizers to achieve scale equilibrium across multi-level features after bilinear upsampling. Our proposed scale equalizers are easy to implement, applicable to any architecture, hyperparameter-free, implementable without requiring extra computational cost, and guarantee scale equilibrium for any dataset. Experiments showed that adopting scale equalizers consistently improved the mIoU index across various target datasets, including ADE20K, PASCAL VOC 2012, and Cityscapes, as well as various decoder choices, including UPerHead, PSPHead, ASPPHead, SepASPPHead, and FCNHead.

In the current era of vast data and transparent machine learning, it is essential for techniques to operate at a large scale while providing a clear mathematical comprehension of the internal workings of the method. Although there already exist interpretable semi-parametric regression methods for large-scale applications that take into account non-linearity in the data, the complexity of the models is still often limited. One of the main challenges is the absence of interactions in these models, which are left out for the sake of better interpretability but also due to impractical computational costs. To overcome this limitation, we propose a new approach using a factorization method to derive a highly scalable higher-order tensor product spline model. Our method allows for the incorporation of all (higher-order) interactions of non-linear feature effects while having computational costs proportional to a model without interactions. We further develop a meaningful penalization scheme and examine the induced optimization problem. We conclude by evaluating the predictive and estimation performance of our method.

We solve the derandomized direct product testing question in the low acceptance regime, by constructing new high dimensional expanders that have no small connected covers. We show that our complexes have swap cocycle expansion, which allows us to deduce the agreement theorem by relying on previous work. Derandomized direct product testing, also known as agreement testing, is the following problem. Let $X$ be a family of $k$-element subsets of $[n]$ and let $\{f_s:s\to\Sigma\}_{s\in X}$ be an ensemble of local functions, each defined over a subset $s\subset [n]$. Suppose that we run the following so-called agreement test: choose a random pair of sets $s_1,s_2\in X$ that intersect on $\sqrt k$ elements, and accept if $f_{s_1},f_{s_2}$ agree on the elements in $s_1\cap s_2$. We denote the success probability of this test by $Agr(\{f_s\})$. Given that $Agr(\{f_s\})=\epsilon>0$, is there a global function $G:[n]\to\Sigma$ such that $f_s = G|_s$ for a non-negligible fraction of $s\in X$? We construct a family $X$ of $k$-subsets of $[n]$ such that $|X| = O(n)$ and such that it satisfies the low acceptance agreement theorem. Namely, $Agr(\{f_s\}) > \epsilon$ implies that there is a function $G:[n]\to\Sigma$ such that $\Pr_s[f_s\overset{0.99}{\approx} G|_s]\geq poly(\epsilon)$. A key idea is to replace the well-studied LSV complexes by symplectic high dimensional expanders (HDXs). The family $X$ is just the $k$-faces of the new symplectic HDXs. The latter serve our needs better since their fundamental group satisfies the congruence subgroup property, which implies that they lack small covers.
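
For intuition, the agreement test can be sketched on a tiny example. The snippet below is only an illustration under stated assumptions: it takes the family to be all k-subsets of a small ground set (not the sparse symplectic family constructed in the paper), it draws the pair uniformly from all pairs whose intersection has exactly `isqrt(k)` elements, and the helper names `agreement` and `restrict` are hypothetical.

```python
from itertools import combinations
from math import isqrt


def agreement(local_fns, overlap):
    """Exact acceptance probability of the agreement test.

    local_fns maps each set s (a frozenset) to a dict {element: symbol}.
    Assumption: the pair (s1, s2) is uniform over all unordered pairs of
    sets whose intersection has exactly `overlap` elements.
    """
    pairs = [
        (s1, s2)
        for s1, s2 in combinations(local_fns, 2)
        if len(s1 & s2) == overlap
    ]
    if not pairs:
        raise ValueError("no pair of sets has the requested overlap")
    accepted = sum(
        all(local_fns[s1][x] == local_fns[s2][x] for x in s1 & s2)
        for s1, s2 in pairs
    )
    return accepted / len(pairs)


def restrict(G, s):
    """Restriction G|_s of a global function G to the set s."""
    return {x: G[x] for x in s}


n, k = 6, 4
# Toy family: every k-subset of {0, ..., n-1} (an assumption for illustration).
X = [frozenset(c) for c in combinations(range(n), k)]

# Ensemble that comes from one global function G: every pair agrees.
G = {x: x % 2 for x in range(n)}
consistent = {s: restrict(G, s) for s in X}

# Same ensemble with one local function replaced by the flipped labelling.
bad = X[0]
corrupted = dict(consistent)
corrupted[bad] = {x: 1 - G[x] for x in bad}

print(agreement(consistent, isqrt(k)))
print(agreement(corrupted, isqrt(k)))
```

An ensemble obtained by restricting a single global function passes every test, while corrupting one local function lowers the acceptance probability. The agreement question asks for the converse direction: whether a noticeable acceptance probability forces most of the ensemble to be close to some global function.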

Common crowdsourcing systems average estimates of a latent quantity of interest provided by many crowdworkers to produce a group estimate. We develop a new approach -- predict-each-worker -- that leverages self-supervised learning and a novel aggregation scheme. This approach adapts weights assigned to crowdworkers based on estimates they provided for previous quantities. When skills vary across crowdworkers or their estimates correlate, the weighted sum offers a more accurate group estimate than the average. Existing algorithms such as expectation maximization can, at least in principle, produce similarly accurate group estimates. However, their computational requirements become onerous when complex models, such as neural networks, are required to express relationships among crowdworkers. Predict-each-worker accommodates such complexity as well as many other practical challenges. We analyze the efficacy of predict-each-worker through theoretical and computational studies. Among other things, we establish asymptotic optimality as the number of engagements per crowdworker grows.
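
The claim that a weighted sum can beat the plain average when skills vary can be illustrated with a small simulation. This is not the predict-each-worker algorithm; it is a minimal sketch that swaps in classical inverse-variance weighting, assuming exactly three workers with independent Gaussian noise. Noise variances are estimated from the workers' disagreement on previous quantities, without access to the true values. All function names and noise levels are hypothetical.

```python
import random
from statistics import fmean, pvariance


def estimate_noise_variances(history):
    """Estimate each worker's noise variance from past reports only.

    history[t][i] is worker i's estimate of past quantity t (three workers).
    Under independent noise, Var(y_i - y_j) = var_i + var_j, which gives
    three equations in the three unknown variances.
    """
    def diff_var(i, j):
        return pvariance([row[i] - row[j] for row in history])

    v01, v02, v12 = diff_var(0, 1), diff_var(0, 2), diff_var(1, 2)
    raw = [(v01 + v02 - v12) / 2, (v01 + v12 - v02) / 2, (v02 + v12 - v01) / 2]
    # Clip so that sampling error cannot produce a non-positive variance.
    return [max(v, 1e-6) for v in raw]


def weights_from_variances(variances):
    """Normalised inverse-variance weights."""
    inverse = [1.0 / v for v in variances]
    total = sum(inverse)
    return [w / total for w in inverse]


def simulate(noise_sd, n, rng):
    """Draw n latent quantities and one noisy report per worker for each."""
    truths = [rng.gauss(0.0, 1.0) for _ in range(n)]
    reports = [[t + rng.gauss(0.0, sd) for sd in noise_sd] for t in truths]
    return truths, reports


rng = random.Random(0)
noise_sd = [0.5, 1.0, 2.0]  # hypothetical skill levels

_, history = simulate(noise_sd, 2000, rng)      # previous quantities
truths, reports = simulate(noise_sd, 2000, rng)  # new quantities

weights = weights_from_variances(estimate_noise_variances(history))


def mse(estimates):
    return fmean([(e - t) ** 2 for e, t in zip(estimates, truths)])


mse_average = mse([fmean(r) for r in reports])
mse_weighted = mse([sum(w * y for w, y in zip(weights, r)) for r in reports])
print(weights, mse_average, mse_weighted)
```

The sketch ignores correlation between workers, which is one of the cases the abstract says the proposed method handles.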

Predictive multiplicity refers to the phenomenon in which classification tasks may admit multiple competing models that achieve almost-equally-optimal performance, yet generate conflicting outputs for individual samples. This presents significant concerns, as it can potentially result in systemic exclusion, inexplicable discrimination, and unfairness in practical applications. Measuring and mitigating predictive multiplicity, however, is computationally challenging due to the need to explore all such almost-equally-optimal models, known as the Rashomon set, in potentially huge hypothesis spaces. To address this challenge, we propose a novel framework that utilizes dropout techniques for exploring models in the Rashomon set. We provide rigorous theoretical derivations to connect the dropout parameters to properties of the Rashomon set, and empirically evaluate our framework through extensive experimentation. Numerical results show that our technique consistently outperforms baselines in terms of the effectiveness of predictive multiplicity metric estimation, with runtime speedups ranging from $20\times$ to $5000\times$. With efficient Rashomon set exploration and metric estimation, mitigation of predictive multiplicity is then achieved through dropout ensemble and model selection.
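
The idea of using dropout to sample near-optimal models can be sketched on a toy linear classifier. This is an illustration under assumptions, not the paper's procedure. It masks the weights of a fixed model at random and keeps the masked models whose error stays within `epsilon` of the base error. It then reports the fraction of samples on which some kept model disagrees with the base model (an ambiguity-style metric). All names, the dataset and the parameter values are hypothetical.

```python
import random


def predict(weights, x):
    """Binary prediction of a linear classifier without bias."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) >= 0 else 0


def error_rate(weights, data):
    """Fraction of (x, y) pairs the classifier gets wrong."""
    return sum(predict(weights, x) != y for x, y in data) / len(data)


def dropout_rashomon_sample(weights, data, epsilon, drop_prob, n_draws, rng):
    """Draw random weight masks and keep the models that stay near-optimal."""
    base_error = error_rate(weights, data)
    kept = []
    for _ in range(n_draws):
        # Each weight is zeroed independently with probability drop_prob.
        masked = [0.0 if rng.random() < drop_prob else w for w in weights]
        if error_rate(masked, data) <= base_error + epsilon:
            kept.append(masked)
    return kept


def ambiguity(models, base_weights, data):
    """Fraction of samples whose prediction is flipped by some kept model."""
    conflicted = sum(
        any(predict(m, x) != predict(base_weights, x) for m in models)
        for x, _ in data
    )
    return conflicted / len(data)


rng = random.Random(0)
base_weights = [2.0, -1.5, 0.1, 0.05, -0.1]  # hypothetical trained model

# Synthetic data labelled by the base model, so its own error is zero.
data = []
for _ in range(200):
    x = [rng.uniform(-1.0, 1.0) for _ in base_weights]
    data.append((x, predict(base_weights, x)))

epsilon = 0.05
models = dropout_rashomon_sample(
    base_weights, data, epsilon=epsilon, drop_prob=0.2, n_draws=100, rng=rng
)
print(len(models), ambiguity(models, base_weights, data))
```

Each draw costs one evaluation pass and no retraining. That is the intuition behind exploring the Rashomon set this way rather than training many separate models.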

Primal-dual methods have a natural application in Safe Reinforcement Learning (SRL), posed as a constrained policy optimization problem. In practice, however, applying primal-dual methods to SRL is challenging, due to the inter-dependency of the learning rate (LR) and Lagrangian multipliers (dual variables) each time an embedded unconstrained RL problem is solved. In this paper, we propose, analyze and evaluate adaptive primal-dual (APD) methods for SRL, where two adaptive LRs are adjusted to the Lagrangian multipliers so as to optimize the policy in each iteration. We theoretically establish the convergence, optimality and feasibility of the APD algorithm. Finally, we conduct numerical evaluation of the practical APD algorithm with four well-known environments in Bullet-Safety-Gym employing two state-of-the-art SRL algorithms: PPO-Lagrangian and DDPG-Lagrangian. All experiments show that the practical APD algorithm outperforms (or achieves performance comparable to) the constant LR cases and attains more stable training. Additionally, we substantiate the robustness of selecting the two adaptive LRs by empirical evidence.
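
As background, the basic primal-dual iteration with constant learning rates (the baseline the abstract compares against, not the APD method itself) can be sketched on a one-dimensional constrained problem. The toy objective, the step sizes and the function name `primal_dual` are assumptions chosen for illustration.

```python
def primal_dual(primal_lr=0.1, dual_lr=0.1, steps=2000):
    """Gradient descent-ascent on a toy constrained problem.

    Problem: minimise (x - 2)^2 subject to x <= 1.
    Lagrangian: L(x, lam) = (x - 2)^2 + lam * (x - 1), with lam >= 0.
    """
    x, lam = 0.0, 0.0
    for _ in range(steps):
        # Primal step: descend the Lagrangian in x. The gradient depends on
        # the current multiplier, so the effective step does too.
        grad_x = 2.0 * (x - 2.0) + lam
        x -= primal_lr * grad_x
        # Dual step: ascend in lam on the constraint violation, then project
        # back onto lam >= 0.
        lam = max(0.0, lam + dual_lr * (x - 1.0))
    return x, lam


x_star, lam_star = primal_dual()
print(x_star, lam_star)
```

The iterates approach the constrained optimum x = 1 with multiplier 2, the value at which the Lagrangian's gradient in x vanishes. Because the primal gradient contains the multiplier, a learning rate that suits one multiplier value may be too aggressive or too timid for another. This is the inter-dependency the abstract refers to.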

Knowledge graph embedding, which aims to represent entities and relations as low dimensional vectors (or matrices, tensors, etc.), has been shown to be a powerful technique for predicting missing links in knowledge graphs. Existing knowledge graph embedding models mainly focus on modeling relation patterns such as symmetry/antisymmetry, inversion, and composition. However, many existing approaches fail to model semantic hierarchies, which are common in real-world applications. To address this challenge, we propose a novel knowledge graph embedding model---namely, Hierarchy-Aware Knowledge Graph Embedding (HAKE)---which maps entities into the polar coordinate system. HAKE is inspired by the fact that concentric circles in the polar coordinate system can naturally reflect the hierarchy. Specifically, the radial coordinate aims to model entities at different levels of the hierarchy, and entities with smaller radii are expected to be at higher levels; the angular coordinate aims to distinguish entities at the same level of the hierarchy, and these entities are expected to have roughly the same radii but different angles. Experiments demonstrate that HAKE can effectively model the semantic hierarchies in knowledge graphs, and significantly outperforms existing state-of-the-art methods on benchmark datasets for the link prediction task.
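
The polar-coordinate intuition can be made concrete with a small sketch. The abstract does not state the score function, so the distance below is an assumption for illustration: a modulus term compares radii after scaling by the relation, and a phase term compares angles. The name `hake_style_distance`, the `phase_weight` parameter and the toy entities are hypothetical.

```python
import math


def hake_style_distance(head, relation, tail, phase_weight=0.5):
    """Distance between a translated head and a tail in polar coordinates.

    Each argument is a pair (modulus_vector, phase_vector).
    Assumed form: ||h_m * r_m - t_m||_2 + phase_weight * ||sin((h_p + r_p - t_p) / 2)||_1.
    """
    h_mod, h_phase = head
    r_mod, r_phase = relation
    t_mod, t_phase = tail
    # Radial part: the relation rescales the head's radius (its hierarchy level).
    modulus_term = math.sqrt(
        sum((hm * rm - tm) ** 2 for hm, rm, tm in zip(h_mod, r_mod, t_mod))
    )
    # Angular part: the relation rotates the head's angle; sin handles wrap-around.
    phase_term = sum(
        abs(math.sin((hp + rp - tp) / 2.0))
        for hp, rp, tp in zip(h_phase, r_phase, t_phase)
    )
    return modulus_term + phase_weight * phase_term


# Toy one-dimensional embeddings: smaller radius means higher in the hierarchy.
animal = ([1.0], [0.3])
dog = ([2.0], [0.3])
cat = ([2.0], [1.5])

has_subtype = ([2.0], [0.0])  # moves one level down: doubles the radius
same_level = ([1.0], [0.0])   # keeps the radius unchanged

d_down = hake_style_distance(animal, has_subtype, dog)  # correct direction
d_up = hake_style_distance(dog, has_subtype, animal)    # wrong direction
d_sibling = hake_style_distance(dog, same_level, cat)   # same radius, other angle
print(d_down, d_up, d_sibling)
```

In this toy setting the radial term alone separates the two directions of the hierarchical relation. Two entities on the same circle are told apart only by the angular term.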

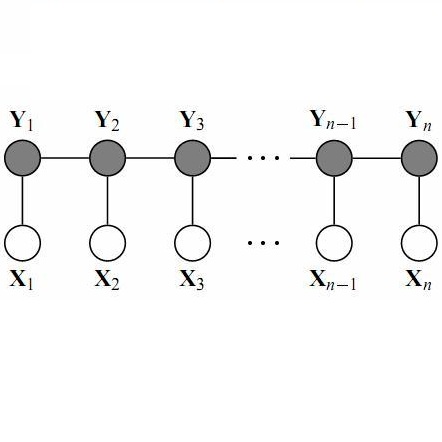

Learning latent representations of nodes in graphs is an important and ubiquitous task with widespread applications such as link prediction, node classification, and graph visualization. Previous methods on graph representation learning mainly focus on static graphs; however, many real-world graphs are dynamic and evolve over time. In this paper, we present Dynamic Self-Attention Network (DySAT), a novel neural architecture that operates on dynamic graphs and learns node representations that capture both structural properties and temporal evolutionary patterns. Specifically, DySAT computes node representations by jointly employing self-attention layers along two dimensions: structural neighborhood and temporal dynamics. We conduct link prediction experiments on two classes of graphs: communication networks and bipartite rating networks. Our experimental results show that DySAT has a significant performance gain over several different state-of-the-art graph embedding baselines.