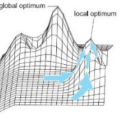

Correlation plays a critical role in the tracking field, especially in recent popular Siamese-based trackers. The correlation operation is a simple way to fuse features by measuring the similarity between the template and the search region. However, the correlation operation itself is a local linear matching process, which tends to lose semantic information and easily falls into local optima; this may be the bottleneck in designing high-accuracy tracking algorithms. Is there any better feature fusion method than correlation? To address this issue, inspired by the Transformer, this work presents a novel attention-based feature fusion network, which effectively combines the template and search region features solely using attention. Specifically, the proposed method includes an ego-context augment module based on self-attention and a cross-feature augment module based on cross-attention. Finally, we present a Transformer tracking method (named TransT) based on the Siamese-like feature extraction backbone, the designed attention-based fusion mechanism, and the classification and regression head. Experiments show that our TransT achieves very promising results on six challenging datasets, especially on the large-scale LaSOT, TrackingNet, and GOT-10k benchmarks. Our tracker runs at approximately 50 fps on a GPU. Code and models are available at https://github.com/chenxin-dlut/TransT.
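The two attention modules named in the abstract can be illustrated with a minimal sketch. It is not the TransT implementation: it assumes plain scaled dot-product attention with a residual connection, and it leaves out the learned projections, multi-head splitting, positional encodings, normalization, and feed-forward layers a real model would use. The function names `ego_context_augment` and `cross_feature_augment` are hypothetical, chosen only to mirror the module names in the abstract.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of feature vectors."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def add(a, b):
    """Element-wise sum of two equally shaped lists of vectors (residual path)."""
    return [[x + y for x, y in zip(r1, r2)] for r1, r2 in zip(a, b)]


def ego_context_augment(x):
    # Self-attention: each feature vector attends to all vectors of the same input.
    return add(x, attention(x, x, x))


def cross_feature_augment(x, other):
    # Cross-attention: queries come from x, keys and values from the other branch.
    return add(x, attention(x, other, other))


# Toy features (assumed values): 2 template vectors and 3 search-region vectors.
template = [[1.0, 0.0], [0.0, 1.0]]
search = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

fused = cross_feature_augment(ego_context_augment(search),
                              ego_context_augment(template))
```

Unlike correlation, the attention weights here are a non-linear (softmax) function of the similarities, and every search vector can draw on every template vector rather than on a local window.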

Related content

Recently, most Siamese-network-based trackers locate targets via object classification and bounding-box regression. Generally, they select the bounding box with the maximum classification confidence as the final prediction. This strategy may miss the right result because of the accuracy misalignment between classification and regression. In this paper, we propose a novel Siamese tracking algorithm called SiamRCR, which addresses this problem with a simple, light, and effective solution. It builds reciprocal links between the classification and regression branches, which can dynamically re-weight their losses for each positive sample. In addition, we add a localization branch to predict the localization accuracy, so that it can replace the regression assistance link during inference. This branch makes training and inference more consistent. Extensive experimental results demonstrate the effectiveness of SiamRCR and its superiority over state-of-the-art competitors on GOT-10k, LaSOT, TrackingNet, OTB-2015, VOT-2018 and VOT-2019. Moreover, our SiamRCR runs at 65 FPS, far above the real-time requirement.

Significant progress on the crowd counting problem has been achieved by integrating larger context into convolutional neural networks (CNNs). This indicates that global scene context is essential, despite the seemingly bottom-up nature of the problem. This may be explained by the fact that context knowledge can adapt and improve local feature extraction to a given scene. In this paper, we therefore investigate the role of global context for crowd counting. Specifically, a pure transformer is used to extract features with global information from overlapping image patches. Inspired by classification, we add a context token to the input sequence to facilitate information exchange with the tokens corresponding to image patches throughout the transformer layers. Because transformers do not explicitly model the tried-and-true channel-wise interactions, we propose a token-attention module (TAM) to recalibrate encoded features through channel-wise attention informed by the context token. Beyond that, the context token is also used to predict the total person count of the image through a regression-token module (RTM). Extensive experiments demonstrate that our method achieves state-of-the-art performance on various datasets, including ShanghaiTech, UCF-QNRF, JHU-CROWD++ and NWPU. On the large-scale JHU-CROWD++ dataset, our method improves over the previous best results by 26.9% and 29.9% in terms of MAE and MSE, respectively.

Recently, much work has tried to introduce transformers into computer vision tasks, with good results. Unlike classic convolutional networks, which extract features within a local receptive field, transformers can adaptively aggregate similar features from a global view using the self-attention mechanism. For object detection, the Feature Pyramid Network (FPN) introduces feature interaction across layers and demonstrates its importance. However, this interaction is still local, which leaves a lot of room for improvement. Since the transformer was originally designed for NLP tasks, directly switching its input from text to images incurs unaffordable computation and memory overhead. In this paper, we use a linearized attention function to overcome the above problems and build a novel architecture, named Content-Augmented Feature Pyramid Network (CA-FPN), which introduces a global content extraction module and deeply combines it with FPN through light linear transformers. Moreover, light transformers make it easier to apply the multi-head attention mechanism. Most importantly, our CA-FPN can be readily plugged into existing FPN-based models. Extensive experiments on the challenging COCO object detection dataset demonstrate that our CA-FPN significantly outperforms competitive baselines without bells and whistles. Code will be made publicly available.
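The abstract does not say which linearized attention function CA-FPN uses, so the sketch below shows one common formulation only as an illustration: a positive feature map (here `elu(x) + 1`, an assumption) replaces the softmax, and reordering the matrix products avoids building the n x n score matrix. All function names are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(row) for row in zip(*m)]


def feature_map(m):
    # elu(x) + 1: equals x + 1 for x > 0 and exp(x) otherwise, so it is always positive.
    return [[x + 1.0 if x > 0 else math.exp(x) for x in row] for row in m]


def quadratic_attention(q, k, v):
    """Reference version: builds the full n x n similarity matrix."""
    fq, fk = feature_map(q), feature_map(k)
    scores = matmul(fq, transpose(fk))            # n x n
    out = matmul(scores, v)
    return [[x / sum(s) for x in o] for o, s in zip(out, scores)]


def linear_attention(q, k, v):
    """Same result, but the keys and values are summarized first (d x d_v)."""
    fq, fk = feature_map(q), feature_map(k)
    kv = matmul(transpose(fk), v)                 # d x d_v, independent of n
    k_sum = [sum(col) for col in zip(*fk)]        # length d
    out = matmul(fq, kv)
    norms = [sum(a * b for a, b in zip(row, k_sum)) for row in fq]
    return [[x / z for x in o] for o, z in zip(out, norms)]


# Toy inputs (assumed values): n = 3 tokens, d = 2 channels.
q = [[0.5, -1.0], [1.0, 2.0], [-0.3, 0.2]]
k = [[0.1, 0.4], [-0.7, 1.5], [2.0, -0.2]]
v = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]

reference = quadratic_attention(q, k, v)
linearized = linear_attention(q, k, v)
```

Both functions compute the same output by associativity of matrix multiplication; the second one scales linearly with the number of tokens n, which matters when n is the number of image positions.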

In video object tracking, there exist rich temporal contexts among successive frames, which have been largely overlooked in existing trackers. In this work, we bridge the individual video frames and explore the temporal contexts across them via a transformer architecture for robust object tracking. Different from classic usage of the transformer in natural language processing tasks, we separate its encoder and decoder into two parallel branches and carefully design them within the Siamese-like tracking pipelines. The transformer encoder promotes the target templates via attention-based feature reinforcement, which benefits the high-quality tracking model generation. The transformer decoder propagates the tracking cues from previous templates to the current frame, which facilitates the object searching process. Our transformer-assisted tracking framework is neat and trained in an end-to-end manner. With the proposed transformer, a simple Siamese matching approach is able to outperform the current top-performing trackers. By combining our transformer with the recent discriminative tracking pipeline, our method sets several new state-of-the-art records on prevalent tracking benchmarks.

Triple extraction is an essential task in information extraction for natural language processing and knowledge graph construction. In this paper, we revisit the end-to-end triple extraction task for sequence generation. Since generative triple extraction may struggle to capture long-term dependencies and may generate unfaithful triples, we introduce a novel model, contrastive triple extraction with a generative transformer. Specifically, we introduce a single shared transformer module for encoder-decoder-based generation. To generate faithful results, we propose a novel triplet contrastive training objective. Moreover, we introduce two mechanisms to further improve model performance (i.e., batch-wise dynamic attention-masking and triple-wise calibration). Experimental results on three datasets (i.e., NYT, WebNLG, and MIE) show that our approach achieves better performance than the baselines.

Recently, we have seen rapid development of Deep Neural Network (DNN) based visual tracking solutions. Some trackers combine DNN-based solutions with Discriminative Correlation Filters (DCF) to extract semantic features and successfully deliver state-of-the-art tracking accuracy. However, these solutions are highly compute-intensive and require long processing times, so real-time performance cannot be guaranteed. To deliver both high accuracy and reliable real-time performance, we propose a novel tracker called SiamVGG. It combines a Convolutional Neural Network (CNN) backbone and a cross-correlation operator, and takes advantage of the features from exemplary images for more accurate object tracking. The architecture of SiamVGG is customized from VGG-16, with the parameters shared by both exemplary images and desired input video frames. We evaluate the proposed SiamVGG on the OTB-2013/50/100 and VOT 2015/2016/2017 datasets, where it achieves state-of-the-art accuracy while maintaining a decent real-time performance of 50 FPS on a GTX 1080Ti. Our design can achieve 2% higher Expected Average Overlap (EAO) compared to ECO and C-COT in the VOT2017 Challenge.
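The cross-correlation operator mentioned above can be sketched on a toy example. This is a single-channel illustration on hand-written numbers, not SiamVGG itself: in a real Siamese tracker both inputs would be multi-channel CNN feature maps produced by the shared backbone. The helper names `cross_correlate` and `argmax2d` are hypothetical.

```python
def cross_correlate(search, template):
    """Slide the template over the search map and record the dot product at each offset."""
    sh, sw = len(search), len(search[0])
    th, tw = len(template), len(template[0])
    response = []
    for i in range(sh - th + 1):
        row = []
        for j in range(sw - tw + 1):
            row.append(sum(search[i + di][j + dj] * template[di][dj]
                           for di in range(th) for dj in range(tw)))
        response.append(row)
    return response


def argmax2d(matrix):
    """Return the (row, column) of the largest entry."""
    _, i, j = max((v, i, j) for i, row in enumerate(matrix) for j, v in enumerate(row))
    return i, j


# Toy data (assumed values): the template pattern is placed at row 1, column 2.
template = [[1, 2],
            [3, 4]]
search = [[0, 0, 0, 0],
          [0, 0, 1, 2],
          [0, 0, 3, 4],
          [0, 0, 0, 0]]

response = cross_correlate(search, template)
peak = argmax2d(response)
```

The peak of the response map marks the offset where the search region best matches the template, which is the quantity a correlation-based Siamese tracker uses to localize the target.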

Although Transformer has achieved great successes on many NLP tasks, its heavy structure with fully-connected attention connections leads to dependencies on large training data. In this paper, we present Star-Transformer, a lightweight alternative by careful sparsification. To reduce model complexity, we replace the fully-connected structure with a star-shaped topology, in which every two non-adjacent nodes are connected through a shared relay node. Thus, complexity is reduced from quadratic to linear, while preserving capacity to capture both local composition and long-range dependency. The experiments on four tasks (22 datasets) show that Star-Transformer achieved significant improvements against the standard Transformer for the modestly sized datasets.
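The quadratic-to-linear claim can be checked by counting connections. The sketch below assumes a simple chain of adjacent nodes plus one relay node; whether the two ends of the sequence are also linked is a detail the abstract does not state. The functions `full_edges` and `star_edges` are hypothetical helpers, not part of any Star-Transformer code.

```python
def full_edges(n):
    """Fully connected attention: one connection per unordered pair of nodes."""
    return {(i, j) for i in range(n) for j in range(i + 1, n)}


def star_edges(n):
    """Star-shaped topology: adjacent nodes are linked, and every node links to a relay."""
    relay = n  # hypothetical index for the shared relay node
    edges = set()
    for i in range(n):
        edges.add((i, relay))        # connection to the relay node
        if i + 1 < n:
            edges.add((i, i + 1))    # connection to the next adjacent node
    return edges


for n in (10, 100, 1000):
    print(n, len(full_edges(n)), len(star_edges(n)))
```

Under these assumptions the fully connected count is n(n-1)/2 while the star-shaped count is 2n-1, and any two non-adjacent nodes are still two hops apart through the relay.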

Discrete correlation filter (DCF) based trackers have shown considerable success in visual object tracking. These trackers often make use of low- to mid-level features such as histograms of gradients (HoG) and mid-layer activations from convolutional neural networks (CNNs). We argue that adding semantically higher-level information to the tracked features may provide further robustness in challenging cases such as viewpoint changes. Deep salient object detection is one example of such high-level features, as it makes use of semantic information to highlight the important regions in the given scene. In this work, we propose an improvement over DCF-based trackers by combining saliency-based filter responses with those based on other features. This combination is performed with an adaptive weight on the saliency-based filter responses, which is automatically selected according to the temporal consistency of visual saliency. We show that our method consistently improves a baseline DCF-based tracker, especially in challenging cases, and outperforms the state of the art. Our improved tracker operates at 9.3 fps, introducing a small computational burden over the baseline, which operates at 11 fps.

Being intensively studied, visual object tracking has witnessed great advances in either speed (e.g., with correlation filters) or accuracy (e.g., with deep features). Real-time and high-accuracy tracking algorithms, however, remain scarce. In this paper we study the problem from a new perspective and present a novel parallel tracking and verifying (PTAV) framework, taking advantage of the ubiquity of multi-thread techniques and borrowing ideas from the success of parallel tracking and mapping in visual SLAM. The proposed PTAV framework is typically composed of two components, a (base) tracker T and a verifier V, working in parallel on two separate threads. The tracker T aims to provide super real-time tracking inference and is expected to perform well most of the time; by contrast, the verifier V validates the tracking results and corrects T when needed. The key innovation is that V does not work on every frame but only upon requests from T; in turn, T may adjust the tracking according to the feedback from V. With such collaboration, PTAV enjoys both the high efficiency provided by T and the strong discriminative power provided by V. Meanwhile, to adapt V to object appearance changes over time, we maintain a dynamic target template pool for adaptive verification, resulting in further performance improvements. In our extensive experiments on popular benchmarks including OTB2015, TC128, UAV20L and VOT2016, PTAV achieves the best tracking accuracy among all real-time trackers, and in fact even outperforms many deep learning based algorithms. Moreover, as a general framework, PTAV is very flexible, with great potential for future improvement and generalization.

Current convolutional neural network algorithms for video object tracking spend the same amount of computation on each object and video frame. However, it is harder to track an object in some frames than in others, due to the varying amount of clutter, scene complexity, amount of motion, and the object's distinctiveness against its background. We propose a depth-adaptive convolutional Siamese network that performs video tracking adaptively at multiple neural network depths. Parametric gating functions are trained to control the depth of the convolutional feature extractor by minimizing a joint loss of computational cost and tracking error. Our network achieves accuracy comparable to the state of the art on the VOT2016 benchmark. Furthermore, our adaptive depth computation achieves higher accuracy for a given computational cost than traditional fixed-structure neural networks. The presented framework extends to other tasks that use convolutional neural networks and enables trading speed for accuracy at runtime.