In this paper we address the importance and the impact of employing structure-preserving neural networks as surrogates for the analytical physics-based models typically employed to describe the rheology of non-Newtonian fluids in Stokes flows. In particular, we propose and test on real-world scenarios a novel strategy to build data-driven rheological models based on the use of Input-Output Convex Neural Networks (ICNNs), a special class of scalar-valued feedforward neural networks that are convex with respect to their inputs. Moreover, we show, through a detailed campaign of numerical experiments, that the use of ICNNs is of paramount importance to guarantee the well-posedness of the associated non-Newtonian Stokes differential problem. Finally, building upon a novel perturbation result for non-Newtonian Stokes problems, we study the impact of our data-driven ICNN-based rheological model on the accuracy of the finite element approximation.
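
As a rough illustration of what "convex with respect to their inputs" means in practice, the sketch below builds a tiny scalar-valued network using one common input-convex construction: ReLU activations, non-negative weights on every path that passes through a hidden layer, and unconstrained affine skip connections from the input. The class name `TinyICNN`, the layer sizes, and the random initialisation are assumptions made for illustration only; the abstract does not specify the architecture actually used.

```python
import random


def relu(v):
    return [max(0.0, t) for t in v]


def affine(W, x, b):
    # Computes W x + b for a list-of-rows matrix W.
    return [sum(w * t for w, t in zip(row, x)) + bi for row, bi in zip(W, b)]


def dot(a, b):
    return sum(s * t for s, t in zip(a, b))


class TinyICNN:
    """Two-hidden-layer scalar network that is convex in its input x.

    Convexity holds because ReLU is convex and nondecreasing, and the
    weights applied to hidden activations (Wz, wz) are non-negative.
    """

    def __init__(self, dim, hidden, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

        def vec(n):
            return [rng.gauss(0.0, 1.0) for _ in range(n)]

        self.W0, self.b0 = mat(hidden, dim), vec(hidden)
        # Hidden-to-hidden weights are forced to be non-negative.
        self.Wz = [[abs(w) for w in row] for row in mat(hidden, hidden)]
        # Skip connection from the input: unconstrained, since it is affine.
        self.Wx, self.b1 = mat(hidden, dim), vec(hidden)
        self.wz = [abs(w) for w in vec(hidden)]
        self.wx = vec(dim)

    def __call__(self, x):
        z1 = relu(affine(self.W0, x, self.b0))
        skip = affine(self.Wx, x, self.b1)
        hidden_part = affine(self.Wz, z1, [0.0] * len(z1))
        z2 = relu([h + s for h, s in zip(hidden_part, skip)])
        return dot(self.wz, z2) + dot(self.wx, x)
```

A quick sanity check is midpoint convexity: for any two inputs `a` and `b`, the value at their midpoint should not exceed the average of the values at `a` and `b`.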

Related content

With the growing prevalence of machine learning and artificial intelligence-based medical decision support systems, it is equally important to ensure that these systems provide patient outcomes in a fair and equitable fashion. This paper presents an innovative framework for detecting areas of algorithmic bias in medical-AI decision support systems. Our approach efficiently identifies potential biases in medical-AI models, specifically in the context of sepsis prediction, by employing the Classification and Regression Trees (CART) algorithm. We verify our methodology by conducting a series of synthetic data experiments, showcasing its ability to estimate areas of bias in controlled settings precisely. The effectiveness of the concept is further validated by experiments using electronic medical records from Grady Memorial Hospital in Atlanta, Georgia. These tests demonstrate the practical implementation of our strategy in a clinical environment, where it can function as a vital instrument for guaranteeing fairness and equity in AI-based medical decisions.

In this paper, we study three representations of lattices by means of a set with a binary relation of compatibility in the tradition of Ploščica. The standard representations of complete ortholattices and complete perfect Heyting algebras drop out as special cases of the first representation, while the second covers arbitrary complete lattices, as well as complete lattices equipped with a negation we call a protocomplementation. The third topological representation is a variant of that of Craig, Haviar, and Priestley. We then extend each of the three representations to lattices with a multiplicative unary modality; the representing structures, like so-called graph-based frames, add a second relation of accessibility interacting with compatibility. The three representations generalize possibility semantics for classical modal logics to non-classical modal logics, motivated by a recent application of modal orthologic to natural language semantics.

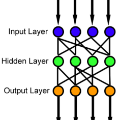

To investigate neural network parameters, it is easier to study the distribution of parameters than to study the parameters in each neuron. The ridgelet transform is a pseudo-inverse operator that maps a given function $f$ to the parameter distribution $\gamma$ so that a network $\mathtt{NN}[\gamma]$ reproduces $f$, i.e. $\mathtt{NN}[\gamma]=f$. For depth-2 fully-connected networks on a Euclidean space, the ridgelet transform is known in closed form, so we can describe how the parameters are distributed. However, for a variety of modern neural network architectures, the closed-form expression is not yet known. In this paper, we explain a systematic method using Fourier expressions to derive ridgelet transforms for a variety of modern networks such as networks on finite fields $\mathbb{F}_p$, group convolutional networks on abstract Hilbert space $\mathcal{H}$, fully-connected networks on noncompact symmetric spaces $G/K$, and pooling layers, or the $d$-plane ridgelet transform.

Operator-based neural network architectures such as DeepONets have emerged as a promising tool for the surrogate modeling of physical systems. In operator surrogate modeling, the training data is generally generated by solving the PDEs using techniques such as the Finite Element Method (FEM). The computationally intensive nature of data generation is one of the biggest bottlenecks in deploying these surrogate models for practical applications. In this study, we propose a novel methodology to alleviate the computational burden associated with training data generation for DeepONets. Unlike existing literature, the proposed framework for data generation does not use any partial differential equation integration strategy, thereby significantly reducing the computational cost associated with generating the training dataset for DeepONet. In the proposed strategy, first, the output field is generated randomly, satisfying the boundary conditions using Gaussian Process Regression (GPR). From the output field, the input source field can be calculated easily using finite difference techniques. The proposed methodology can be extended to other operator learning methods, making the approach widely applicable. To validate the proposed approach, we employ the heat equations as the model problem and develop the surrogate model for numerous boundary value problems.
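
The "output first, source second" idea can be sketched in one dimension. The example below assumes a steady heat equation of the form $-u'' = f$ on $[0, 1]$ with Dirichlet boundary values, an RBF kernel, and a random-Fourier-feature approximation of the Gaussian process prior; none of these choices comes from the abstract, and the function names are hypothetical. The random draw is conditioned on the two boundary values with the standard GP update, and the source is then recovered with a central finite difference stencil, so no PDE solve is needed.

```python
import math
import random


def rbf(x, y, length=0.3):
    # Squared-exponential kernel (an assumed choice of covariance).
    return math.exp(-0.5 * ((x - y) / length) ** 2)


def sample_prior(rng, length=0.3, n_features=200):
    """Approximate draw from a zero-mean RBF Gaussian process,
    built from random Fourier features."""
    ws = [rng.gauss(0.0, 1.0 / length) for _ in range(n_features)]
    bs = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    amps = [rng.gauss(0.0, 1.0) for _ in range(n_features)]
    scale = math.sqrt(2.0 / n_features)
    return lambda x: scale * sum(
        a * math.cos(w * x + b) for a, w, b in zip(amps, ws, bs)
    )


def random_field(n, left, right, rng, length=0.3):
    """Random field on n grid points of [0, 1] with u(0)=left, u(1)=right."""
    g = sample_prior(rng, length)
    # Condition the draw on the two boundary observations: subtract the
    # kernel-weighted boundary residuals (2x2 kernel system solved by hand).
    c = rbf(0.0, 1.0, length)
    r0, r1 = g(0.0) - left, g(1.0) - right
    a0 = (r0 - c * r1) / (1.0 - c * c)
    a1 = (r1 - c * r0) / (1.0 - c * c)
    xs = [i / (n - 1) for i in range(n)]
    us = [g(x) - rbf(x, 0.0, length) * a0 - rbf(x, 1.0, length) * a1 for x in xs]
    return xs, us


def source_from_field(us, h):
    """Interior values of f = -u'' using a central difference stencil."""
    return [
        -(us[i - 1] - 2.0 * us[i] + us[i + 1]) / (h * h)
        for i in range(1, len(us) - 1)
    ]
```

Each call to `random_field` followed by `source_from_field` yields one (source, solution) training pair; repeating with different seeds builds a dataset of such pairs.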

In recent years, the rapid development of high-precision map technology, combined with artificial intelligence, has opened new opportunities in the field of intelligent vehicles. High-precision map technology is an important prerequisite for intelligent vehicles to achieve autonomous driving. However, because research on high-precision map technology is lacking, it is difficult to use this technology effectively in intelligent vehicles. Researchers have therefore studied fast and effective algorithms that fuse large amounts of low-precision GPS trajectory data into high-precision GPS data and generate a small number of key data points to simplify the description of a GPS trajectory. From this work emerged the "crowdsourced update" model, in which a large number of social vehicles collect map data. Algorithms of this kind are significant for improving data accuracy, reducing measurement cost, and reducing data storage requirements. On this basis, this paper analyzes how crowdsourced maps are implemented, so that the information in a high-precision map can be improved according to actual conditions and high-precision maps can be applied appropriately to intelligent vehicles.

With the rising popularity of the internet and the widespread use of networks and information systems via the cloud and data centers, the privacy and security of individuals and organizations have become crucial. In this context, encryption brings together technologies that can fulfill these requirements by protecting public information exchanges. To achieve these aims, researchers have used a wide assortment of encryption algorithms to accommodate the varied requirements of this field, relying on hard mathematical problems to make the encrypted communication mechanism as difficult to break as possible, so as to preserve personal information while significantly reducing the possibility of attacks. However complex and distinct the requirements established by these various applications are, attempts to break the algorithms continue to occur, and systems for evaluating and verifying the cryptographic algorithms implemented continue to be necessary. The best approach to analyzing an encryption algorithm is to identify a practical and efficient technique to break it or to learn ways to detect and repair weak aspects in algorithms, which is known as cryptanalysis. Experts in cryptanalysis have discovered several methods for breaking a cipher, such as discovering a critical vulnerability in mathematical equations to derive the secret key or determining the plaintext from the ciphertext. There are various attacks against secure cryptographic algorithms in the literature, and the strategies and mathematical solutions widely employed empower cryptanalysts to demonstrate their findings, identify weaknesses, and diagnose maintenance failures in algorithms.

This paper revisits the classical concept of network modularity and its spectral relaxations used throughout graph data analysis. We formulate and study several modularity statistic variants for which we establish asymptotic distributional results in the large-network limit for networks exhibiting nodal community structure. Our work facilitates testing for network differences and can be used in conjunction with existing theoretical guarantees for stochastic blockmodel random graphs. Our results are enabled by recent advances in the study of low-rank truncations of large network adjacency matrices. We provide confirmatory simulation studies and real data analysis pertaining to the network neuroscience study of psychosis, specifically schizophrenia. Collectively, this paper contributes to the limited existing literature to date on statistical inference for modularity-based network analysis. Supplemental materials for this article are available online.
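
For reference, the classical (Newman-Girvan) modularity that the abstract takes as its starting point can be computed directly from an edge list and a community assignment as $Q = \frac{1}{2m}\sum_{i,j}\left(A_{ij} - \frac{k_i k_j}{2m}\right)\delta(c_i, c_j)$. The sketch below assumes a simple undirected graph with no self-loops or repeated edges; the function name and input layout are illustrative, and the variants and spectral relaxations studied in the paper are not reproduced here.

```python
def modularity(edges, community):
    """Classical modularity Q of a simple undirected graph.

    edges:     list of (u, v) pairs, each undirected edge listed once
    community: dict mapping every node to its community label
    """
    nodes = sorted(community)
    degree = {v: 0 for v in nodes}
    adjacent = set()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
        adjacent.add((u, v))
        adjacent.add((v, u))

    two_m = 2 * len(edges)
    total = 0.0
    for i in nodes:
        for j in nodes:
            if community[i] == community[j]:
                a_ij = 1.0 if (i, j) in adjacent else 0.0
                # Observed edge minus its expectation under the degree-preserving null model.
                total += a_ij - degree[i] * degree[j] / two_m
    return total / two_m
```

For two triangles joined by a single edge, with each triangle as its own community, this gives $Q = 5/14$; placing all nodes in one community gives $Q = 0$.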

To achieve near-zero training error in a classification problem, the layers of a feed-forward network have to disentangle the manifolds of data points with different labels, to facilitate the discrimination. However, excessive class separation can lead to overfitting since good generalisation requires learning invariant features, which involve some level of entanglement. We report on numerical experiments showing how the optimisation dynamics finds representations that balance these opposing tendencies with a non-monotonic trend. After a fast segregation phase, a slower rearrangement (conserved across data sets and architectures) increases the class entanglement. The training error at the inversion is stable under subsampling, and across network initialisations and optimisers, which characterises it as a property solely of the data structure and (very weakly) of the architecture. The inversion is the manifestation of tradeoffs elicited by well-defined and maximally stable elements of the training set, coined "stragglers", particularly influential for generalisation.

There is currently a focus on statistical methods which can use historical trial information to help accelerate the discovery, development and delivery of medicine. Bayesian methods can be constructed so that the borrowing is "dynamic" in the sense that the similarity of the data helps to determine how much information is used. In the time-to-event setting with one historical data set, a popular model for a range of baseline hazards is the piecewise exponential model where the time points are fixed and a borrowing structure is imposed on the model. Although convenient for implementation, this approach affects the borrowing capability of the model. We propose a Bayesian model which allows the time points to vary and a dependency to be placed between the baseline hazards. This serves to smooth the posterior baseline hazard, improving both model estimation and borrowing characteristics. We explore a variety of prior structures for the borrowing within our proposed model and assess their performance against established approaches. We demonstrate that this leads to improved type I error in the presence of prior data conflict and increased power. We have developed accompanying software which is freely available and enables easy implementation of the approach.
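
To make the baseline concrete, the sketch below evaluates the survival function of a piecewise exponential model with fixed time points, where the hazard is constant on each interval and $S(t) = \exp\left(-\sum_j \lambda_j \, \Delta_j(t)\right)$, with $\Delta_j(t)$ the time spent in interval $j$ up to $t$. The function name and the example values are hypothetical. This covers only the fixed-time-point model mentioned in the abstract, not the proposed Bayesian model with varying time points or its borrowing priors.

```python
import math


def survival(t, cuts, hazards):
    """Survival probability S(t) under a piecewise constant hazard.

    cuts:    increasing interior time points [c1, ..., ck]
    hazards: k + 1 rates, for [0, c1), [c1, c2), ..., [ck, infinity)
    """
    if len(hazards) != len(cuts) + 1:
        raise ValueError("need exactly one more hazard than cut points")
    edges = [0.0] + list(cuts) + [math.inf]
    cumulative = 0.0
    for rate, lo, hi in zip(hazards, edges[:-1], edges[1:]):
        if t <= lo:
            break
        # Add the hazard accumulated over the part of [lo, hi) before t.
        cumulative += rate * (min(t, hi) - lo)
    return math.exp(-cumulative)
```

With cut points at 1 and 2 and hazards 0.5, 1.0, 2.0, the cumulative hazard at $t = 1.5$ is $0.5 \cdot 1 + 1.0 \cdot 0.5 = 1$, so $S(1.5) = e^{-1}$.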

We hypothesize that due to the greedy nature of learning in multi-modal deep neural networks, these models tend to rely on just one modality while under-fitting the other modalities. Such behavior is counter-intuitive and hurts the models' generalization, as we observe empirically. To estimate the model's dependence on each modality, we compute the gain on the accuracy when the model has access to it in addition to another modality. We refer to this gain as the conditional utilization rate. In the experiments, we consistently observe an imbalance in conditional utilization rates between modalities, across multiple tasks and architectures. Since conditional utilization rate cannot be computed efficiently during training, we introduce a proxy for it based on the pace at which the model learns from each modality, which we refer to as the conditional learning speed. We propose an algorithm to balance the conditional learning speeds between modalities during training and demonstrate that it indeed addresses the issue of greedy learning. The proposed algorithm improves the model's generalization on three datasets: Colored MNIST, Princeton ModelNet40, and NVIDIA Dynamic Hand Gesture.
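
The conditional utilization rate described above can be illustrated with simple arithmetic. The sketch below takes the gain to be the plain accuracy difference between a model that sees both modalities and a model that sees only the other one; the abstract does not say whether the gain is normalised, so this is an assumption, and the function name and accuracy values are hypothetical.

```python
def conditional_utilization(acc_both, acc_a_only, acc_b_only):
    """Accuracy gain from adding each modality to the other.

    Returns (gain of adding A given B, gain of adding B given A),
    computed as plain accuracy differences (an assumed definition).
    """
    gain_a_given_b = acc_both - acc_b_only
    gain_b_given_a = acc_both - acc_a_only
    return gain_a_given_b, gain_b_given_a


# Hypothetical accuracies: both modalities 0.90, A alone 0.88, B alone 0.60.
u_a, u_b = conditional_utilization(0.90, 0.88, 0.60)
```

In this made-up case, adding A on top of B raises accuracy by about 0.30 while adding B on top of A raises it by only about 0.02, which is the kind of imbalance the abstract attributes to greedy learning.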