While the 5th generation (5G) of mobile networks has reached commercial deployment, the research community is exploring new functionalities for 6th generation (6G) networks, for example non-terrestrial networks (NTNs) via space/air nodes such as Unmanned Aerial Vehicles (UAVs), High Altitude Platforms (HAPs) or satellites. Specifically, satellite-based communication offers new opportunities for future wireless applications, such as providing connectivity to remote or otherwise unconnected areas, complementing terrestrial networks to reduce connection downtime, and increasing traffic efficiency in hot spot areas. In this context, an accurate characterization of the NTN channel is the first step towards proper protocol design. Along these lines, this paper provides an ns-3 implementation of the 3rd Generation Partnership Project (3GPP) channel and antenna models for NTN described in Technical Report 38.811. In particular, we extend the ns-3 code base with new modules to implement the attenuation of the signal in air/space due to atmospheric gases and scintillation, and new mobility and fading models to account for the Geocentric Cartesian coordinate system of satellites. Finally, we validate the accuracy of our ns-3 module via simulations against 3GPP calibration results.

Related content

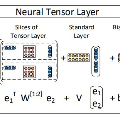

Machine Learning (ML) has recently become a rapidly growing field in Computer Science. As computer hardware engineers, we are enthusiastic about hardware implementations of popular software ML architectures to optimize their performance, reliability, and resource usage. In this project, we designed a highly configurable, real-time device for recognizing handwritten letters and digits using an Altera DE1 FPGA Kit. We followed various engineering standards, including the IEEE-754 32-bit Floating-Point Standard, the Video Graphics Array (VGA) display protocol, the Universal Asynchronous Receiver-Transmitter (UART) protocol, and the Inter-Integrated Circuit (I2C) protocol, to achieve the project goals. These significantly improved the compatibility, reusability, and ease of verification of our design. Following these standards, we designed a 32-bit floating-point (FP) instruction set architecture (ISA). We developed a 5-stage RISC processor in SystemVerilog to manage image processing, matrix multiplications, ML classifications, and user interfaces. Three different ML architectures were implemented and evaluated on our design: Linear Classification (LC), a 784-64-10 fully connected neural network (NN), and a LeNet-like Convolutional Neural Network (CNN) with ReLU activation layers and 36 classes (10 for the digits and 26 for the case-insensitive letters). Training was done with Python scripts, and the resulting kernels and weights were stored in hex files and loaded into the FPGA's SRAM units. Convolution, pooling, data management, and various other ML features were driven by firmware written in our custom assembly language. This paper documents the high-level design block diagrams, the interfaces between the SystemVerilog modules, implementation details of our software and firmware components, and further discussion of potential impacts.

The performance of distributed storage systems deployed on wide-area networks can be improved using weighted (majority) quorum systems instead of their regular variants due to the heterogeneous performance of the nodes. A significant limitation of weighted majority quorum systems lies in their dependence on static weights, which are inappropriate for systems subject to the dynamic nature of networked environments. To overcome this limitation, such quorum systems require mechanisms for reassigning weights over time according to the performance variations. We study the problem of node weight reassignment in asynchronous systems with a static set of servers and static fault threshold. We prove that solving such a problem is as hard as solving consensus, i.e., it cannot be implemented in asynchronous failure-prone distributed systems. This result is somewhat counter-intuitive, given the recent results showing that two related problems -- replica set reconfiguration and asset transfer -- can be solved in asynchronous systems. Inspired by these problems, we present two versions of the problem that contain restrictions on the weights of servers and the way they are reassigned. We propose a protocol to implement one of the restricted problems in asynchronous systems. As a case study, we construct a dynamic-weighted atomic storage based on such a protocol. We also discuss the relationship between weight reassignment and asset transfer problems and compare our dynamic-weighted atomic storage with reconfigurable atomic storage.
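
The weighted majority quorum idea described above can be illustrated with a minimal sketch. It only shows the static-weight quorum check, not the weight reassignment protocol proposed in the paper; the function name `is_weighted_quorum`, the server names, and the weights are hypothetical and chosen for illustration.

```python
from typing import Dict, Iterable


def is_weighted_quorum(weights: Dict[str, float], members: Iterable[str]) -> bool:
    """Return True if `members` hold strictly more than half of the total weight."""
    total = sum(weights.values())
    held = sum(weights[m] for m in set(members))
    return held > total / 2


# Hypothetical weights: the faster server s1 is given a larger weight.
weights = {"s1": 3.0, "s2": 1.0, "s3": 1.0, "s4": 1.0, "s5": 1.0}

# Two servers can form a quorum if they hold enough weight (4.0 of 7.0) ...
fast_pair = is_weighted_quorum(weights, ["s1", "s2"])
# ... while three light servers (3.0 of 7.0) cannot, although three of five
# would be a quorum in a regular (unweighted) majority system.
light_trio = is_weighted_quorum(weights, ["s2", "s3", "s4"])
```

Because every quorum holds more than half of the total weight, any two quorums must share at least one server, which is the intersection property that majority quorum systems rely on.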

Task and Motion Planning (TAMP) approaches are effective at planning long-horizon autonomous robot manipulation. However, because they require a planning model, it can be difficult to apply them to domains where the environment and its dynamics are not fully known. We propose to overcome these limitations by leveraging deep generative modeling, specifically diffusion models, to learn constraints and samplers that capture these difficult-to-engineer aspects of the planning model. These learned samplers are composed and combined within a TAMP solver in order to find action parameter values jointly that satisfy the constraints along a plan. To tractably make predictions for unseen objects in the environment, we define these samplers on low-dimensional learned latent embeddings of changing object state. We evaluate our approach in an articulated object manipulation domain and show how the combination of classical TAMP, generative learning, and latent embeddings enables long-horizon constraint-based reasoning.

The estimation of unknown parameters in simulations, also known as calibration, is crucial for practical management of epidemics and prediction of pandemic risk. A simple yet widely used approach is to estimate the parameters by minimizing the sum of the squared distances between actual observations and simulation outputs. It is shown in this paper that this method is inefficient, particularly when the epidemic models are developed based on certain simplifications of reality, also known as imperfect models, which are commonly used in practice. To address this issue, a new estimator is introduced that is asymptotically consistent, has a smaller estimation variance than the least squares estimator, and achieves semiparametric efficiency. Numerical studies are performed to examine the finite sample performance. The proposed method is applied to the analysis of the COVID-19 pandemic for 20 countries based on the SEIR (Susceptible-Exposed-Infectious-Recovered) model with both deterministic and stochastic simulations. The parameter estimates, including the basic reproduction number and the average incubation period, reveal the risk of disease outbreaks in each country and provide insights for the design of public health interventions.
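
As a rough illustration of the baseline least squares calibration mentioned above (not the new estimator the paper proposes), the sketch below fits the transmission rate of a simple deterministic SEIR simulation by grid search. The daily Euler-step discretization, the population size, the parameter values, and all function names are assumptions made for this example; the "observations" are synthetic.

```python
from typing import List, Sequence


def simulate_seir(beta: float, sigma: float, gamma: float, days: int,
                  n: float = 1_000_000.0, e0: float = 10.0) -> List[float]:
    """Deterministic SEIR with one Euler step per day; returns infectious counts."""
    s, e, i, r = n - e0, e0, 0.0, 0.0
    infectious = []
    for _ in range(days):
        new_exposed = beta * s * i / n   # S -> E
        new_infectious = sigma * e       # E -> I (1/sigma = mean incubation period)
        new_recovered = gamma * i        # I -> R
        s -= new_exposed
        e += new_exposed - new_infectious
        i += new_infectious - new_recovered
        r += new_recovered
        infectious.append(i)
    return infectious


def sum_squared_error(observed: Sequence[float], simulated: Sequence[float]) -> float:
    """Sum of squared distances between observations and simulation outputs."""
    return sum((o - s) ** 2 for o, s in zip(observed, simulated))


def calibrate_beta(observed: Sequence[float], candidates: Sequence[float],
                   sigma: float, gamma: float) -> float:
    """Pick the candidate beta whose simulation minimizes the squared error."""
    return min(
        candidates,
        key=lambda b: sum_squared_error(
            observed, simulate_seir(b, sigma, gamma, len(observed))
        ),
    )


# Synthetic observations generated with a known beta (illustrative values only).
sigma, gamma = 0.2, 0.1
observed = simulate_seir(0.5, sigma, gamma, days=60)
candidates = [round(0.30 + 0.05 * k, 2) for k in range(9)]  # 0.30 ... 0.70
beta_hat = calibrate_beta(observed, candidates, sigma, gamma)
r0_hat = beta_hat / gamma  # basic reproduction number of this simple SEIR model
```

Here the simulator is also the data-generating model, so least squares recovers the true value. The inefficiency discussed in the paper concerns the more realistic case where the simulator is an imperfect description of reality.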

The high altitude platform station (HAPS) concept has recently received notable attention from both industry and academia to support future wireless networks. A HAPS can be equipped with 5th generation (5G) and beyond technologies such as massive multiple-input multiple-output (MIMO) and reconfigurable intelligent surface (RIS). Hence, it is expected that HAPS will support numerous applications in both rural and urban areas. However, this comes at the expense of high energy consumption and thus shorter loitering time. To tackle this issue, we envision the use of a multi-mode HAPS that can adaptively switch between different modes so as to reduce energy consumption and extend the HAPS loitering time. These modes comprise a HAPS super macro base station (HAPS-SMBS) mode for enhanced computing, caching, and communication services, a HAPS relay station (HAPS-RS) mode for active communication, and a HAPS-RIS mode for passive communication. This multi-mode HAPS ensures that operations rely mostly on the passive communication payload, while switching to an energy-greedy active mode only when necessary. In this article, we begin with a brief review of HAPS features compared to other non-terrestrial systems, followed by an exposition of the different HAPS modes proposed. Subsequently, we illustrate the envisioned multi-mode HAPS, and discuss its benefits and challenges. Finally, we validate the multi-mode efficiency through a case study.

Adopting Convolutional Neural Networks (CNNs) in the daily routine of primary diagnosis requires not only near-perfect precision, but also a sufficient degree of generalization to data acquisition shifts and transparency. Existing CNN models act as black boxes, giving physicians no assurance that the model relies on important diagnostic features. Building on successful existing techniques such as multi-task learning, domain adversarial training and concept-based interpretability, this paper addresses the challenge of introducing diagnostic factors in the training objectives. Here we show that our architecture, by learning end-to-end an uncertainty-based weighting combination of multi-task and adversarial losses, is encouraged to focus on pathology features such as density and pleomorphism of nuclei, e.g. variations in size and appearance, while discarding misleading features such as staining differences. Our results on breast lymph node tissue show significantly improved generalization in the detection of tumorous tissue, with best average AUC 0.89 (0.01) against the baseline AUC 0.86 (0.005). By applying the interpretability technique of linearly probing intermediate representations, we also demonstrate that interpretable pathology features such as nuclei density are learned by the proposed CNN architecture, confirming the increased transparency of this model. This result is a starting point towards building interpretable multi-task architectures that are robust to data heterogeneity. Our code is available at //github.com/maragraziani/multitask_adversarial

Understanding human mobility patterns is important in applications as diverse as urban planning, public health, and political organizing. One rich source of data on human mobility is taxi ride data. Using the city of Chicago as a case study, we examine data from taxi rides in 2016 with the goal of understanding how neighborhoods are interconnected. This analysis will provide a sense of which neighborhoods individuals are using taxis to travel between, suggesting regions on which to focus new public transit development efforts. Additionally, this analysis will map traffic circulation patterns and provide an understanding of where in the city people are traveling from and where they are heading to - perhaps informing traffic or road pollution mitigation efforts. For the first application, representing the data as an undirected graph will suffice. Transit lines run in both directions, so simply knowing which neighborhoods have high rates of taxi travel between them provides an argument for placing public transit along those routes. However, in order to understand the flow of people throughout a city, we must make a distinction between the neighborhoods from which people are departing and the neighborhoods at which they are arriving - this requires methods that can deal with directed graphs. All code developed can be found at //github.com/Nikunj-Gupta/Spectral-Clustering-Directed-Graphs.
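
The distinction between the undirected and directed representations can be sketched as follows. This is only an illustration of how edge weights might be counted from trip records, not the authors' pipeline; the function names and the handful of trips are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

Trip = Tuple[str, str]  # (pickup neighborhood, dropoff neighborhood)


def directed_weights(trips: Iterable[Trip]) -> Counter:
    """Count trips per ordered (pickup, dropoff) pair: direction is kept."""
    return Counter((src, dst) for src, dst in trips)


def undirected_weights(trips: Iterable[Trip]) -> Counter:
    """Count trips per unordered neighborhood pair: direction is ignored."""
    return Counter(frozenset((src, dst)) for src, dst in trips)


# Hypothetical trip records, made up for illustration.
trips = [
    ("Loop", "Lakeview"),
    ("Loop", "Lakeview"),
    ("Lakeview", "Loop"),
    ("Loop", "Hyde Park"),
]

directed = directed_weights(trips)
undirected = undirected_weights(trips)

outbound = directed[("Loop", "Lakeview")]            # trips leaving the Loop
inbound = directed[("Lakeview", "Loop")]             # trips returning to the Loop
both_ways = undirected[frozenset(("Loop", "Lakeview"))]
```

The undirected count is enough to argue for a transit line between two neighborhoods, while the asymmetry between `outbound` and `inbound` is the flow information that only the directed graph retains.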

The vast amount of data generated by networks of sensors, wearables, and Internet of Things (IoT) devices underscores the need for advanced modeling techniques that leverage the spatio-temporal structure of data that remain decentralized because of edge computation requirements and licensing (data access) issues. While federated learning (FL) has emerged as a framework for model training without requiring direct data sharing and exchange, effectively modeling the complex spatio-temporal dependencies to improve forecasting capabilities remains an open problem. On the other hand, state-of-the-art spatio-temporal forecasting models assume unfettered access to the data, neglecting constraints on data sharing. To bridge this gap, we propose a federated spatio-temporal model -- Cross-Node Federated Graph Neural Network (CNFGNN) -- which explicitly encodes the underlying graph structure using a graph neural network (GNN)-based architecture under the constraint of cross-node federated learning, which requires that data in a network of nodes is generated locally on each node and remains decentralized. CNFGNN operates by disentangling the temporal dynamics modeling on devices and spatial dynamics on the server, utilizing alternating optimization to reduce the communication cost and facilitate computations on the edge devices. Experiments on the traffic flow forecasting task show that CNFGNN achieves the best forecasting performance in both transductive and inductive learning settings with no extra computation cost on edge devices, while incurring modest communication cost.

Reasoning with knowledge expressed in natural language and Knowledge Bases (KBs) is a major challenge for Artificial Intelligence, with applications in machine reading, dialogue, and question answering. General neural architectures that jointly learn representations and transformations of text are very data-inefficient, and it is hard to analyse their reasoning process. These issues are addressed by end-to-end differentiable reasoning systems such as Neural Theorem Provers (NTPs), although they can only be used with small-scale symbolic KBs. In this paper we first propose Greedy NTPs (GNTPs), an extension to NTPs addressing their complexity and scalability limitations, thus making them applicable to real-world datasets. This result is achieved by dynamically constructing the computation graph of NTPs and including only the most promising proof paths during inference, thus obtaining orders of magnitude more efficient models. Then, we propose a novel approach for jointly reasoning over KBs and textual mentions, by embedding logic facts and natural language sentences in a shared embedding space. We show that GNTPs perform on par with NTPs at a fraction of their cost while achieving competitive link prediction results on large datasets, providing explanations for predictions, and inducing interpretable models. Source code, datasets, and supplementary material are available online at //github.com/uclnlp/gntp.

We advocate the use of implicit fields for learning generative models of shapes and introduce an implicit field decoder for shape generation, aimed at improving the visual quality of the generated shapes. An implicit field assigns a value to each point in 3D space, so that a shape can be extracted as an iso-surface. Our implicit field decoder is trained to perform this assignment by means of a binary classifier. Specifically, it takes a point coordinate, along with a feature vector encoding a shape, and outputs a value which indicates whether the point is outside the shape or not. By replacing conventional decoders with our decoder for representation learning and generative modeling of shapes, we demonstrate superior results for tasks such as shape autoencoding, generation, interpolation, and single-view 3D reconstruction, particularly in terms of visual quality.
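
To make the notion of an implicit field concrete, here is a minimal sketch in which a hand-written analytic function stands in for the learned decoder. The "shape code" (a sphere center and radius), the sigmoid sharpness, and the 0.5 iso-level are assumptions for illustration; in the paper the mapping from point and feature vector to value is learned by a neural network.

```python
import math
from typing import Sequence, Tuple

ShapeCode = Tuple[float, float, float, float]  # hypothetical code: (cx, cy, cz, radius)


def implicit_field(point: Sequence[float], shape_code: ShapeCode,
                   sharpness: float = 10.0) -> float:
    """Map a 3D point and a shape code to a value in (0, 1).

    Values above 0.5 mean "inside", below 0.5 mean "outside", and the
    0.5 iso-surface is the boundary of the shape (here, a sphere).
    """
    cx, cy, cz, radius = shape_code
    distance = math.dist(point, (cx, cy, cz))
    return 1.0 / (1.0 + math.exp(-sharpness * (radius - distance)))


def is_inside(point: Sequence[float], shape_code: ShapeCode) -> bool:
    """Binary inside/outside decision obtained by thresholding the field."""
    return implicit_field(point, shape_code) > 0.5


unit_sphere: ShapeCode = (0.0, 0.0, 0.0, 1.0)
center_value = implicit_field((0.0, 0.0, 0.0), unit_sphere)
surface_value = implicit_field((1.0, 0.0, 0.0), unit_sphere)
far_value = implicit_field((2.0, 0.0, 0.0), unit_sphere)
```

Evaluating such a function on a dense grid of points and extracting the 0.5 level set (for example with a marching cubes routine) is how a surface would be recovered from the field.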