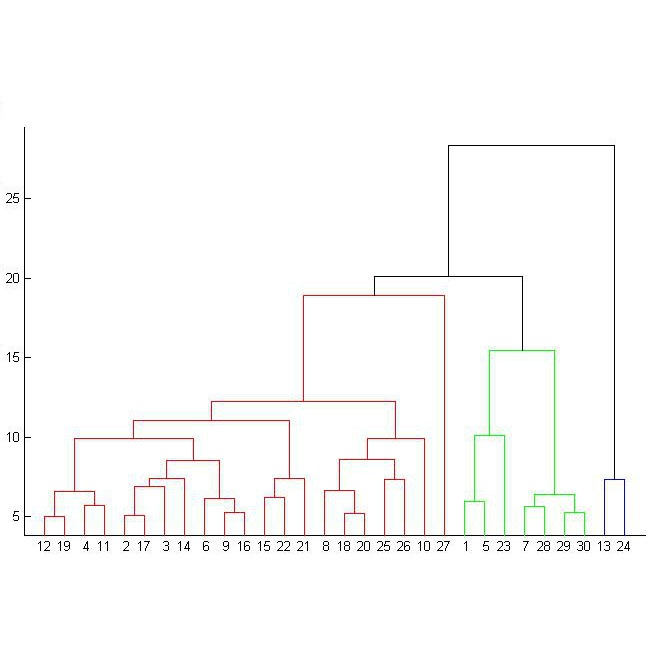

Motivated by the need to extract and summarize relevant information in short-sentence settings, such as satisfaction questionnaires, hotel reviews, and X/Twitter, we study the problem of clustering words in a hierarchical fashion. In particular, we focus on the problem of clustering with horizontal and vertical structural constraints. Horizontal constraints are typically cannot-link and must-link constraints among words, while vertical constraints are precedence constraints among cluster levels. We overcome state-of-the-art bottlenecks by formulating the problem in two steps. First, we pose it as a soft-constrained regularized least-squares problem that guides the result of a sequential graph coarsening algorithm towards the horizontal feasible set. Then, flat clusters are extracted from the resulting hierarchical tree by computing optimal cut heights based on the available constraints. We show that the resulting approach compares favorably with existing algorithms and is computationally light.

Related content

Federated unlearning has emerged as a promising paradigm to erase the client-level data effect without affecting the performance of collaborative learning models. However, the federated unlearning process often introduces extensive storage overhead and consumes substantial computational resources, thus hindering its implementation in practice. To address this issue, this paper proposes a scalable federated unlearning framework based on isolated sharding and coded computing. We first divide distributed clients into multiple isolated shards across stages to reduce the number of clients being affected. Then, to reduce the storage overhead of the central server, we develop a coded computing mechanism by compressing the model parameters across different shards. In addition, we provide the theoretical analysis of time efficiency and storage effectiveness for the isolated and coded sharding. Finally, extensive experiments on two typical learning tasks, i.e., classification and generation, demonstrate that our proposed framework can achieve better performance than three state-of-the-art frameworks in terms of accuracy, retraining time, storage overhead, and F1 scores for resisting membership inference attacks.

Coded distributed computing (CDC), proposed by Li et al., offers significant potential for reducing the communication load in MapReduce computing systems. In the setting of cascaded CDC consisting of $K$ nodes, $N$ input files, and $Q$ output functions, the objective is to compute each output function through $s\geq 1$ nodes with a computation load $r\geq 1$, enabling the application of coding techniques during the Shuffle phase to achieve the minimum communication load. However, a significant limitation of most existing cascaded CDC schemes is that they require splitting the original data into an exponentially growing number of input files and require an exponentially large number of output functions, which imposes stringent requirements for implementation. In this paper, we focus on the cascaded case of $K/s\in\mathbb{N}$, deliberately designing the strategy of data placement and output function assignment based on a grouping method, such that a low-complexity Shuffle strategy is achievable. The main advantages of the proposed scheme include: 1) the multicast gains equal $(r+s-1)(1-1/s)$ and $r+s-1$, the former approaching $r+s-1$ when $s$ is relatively large, and the communication load is close to, or even better than, that of the optimal state-of-the-art scheme proposed by Li et al.; 2) the proposed scheme requires significantly fewer input files and output functions; 3) all the operations are implemented over the minimum binary field $\mathbb{F}_2$ in a one-shot fashion. Finally, we derive a new converse bound for the cascaded CDC framework, under the given strategies of data placement and output function assignment. We demonstrate that the communication load of the proposed scheme is order optimal within a factor of $2$, and is also approximately optimal when $K$ is sufficiently large for a given $r$.
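
To make the two multicast-gain expressions in this abstract concrete, the following is a minimal arithmetic sketch. The function name `multicast_gains` is hypothetical, and the code only evaluates the two formulas quoted above; it does not implement the scheme itself.

```python
from fractions import Fraction


def multicast_gains(r: int, s: int) -> tuple[Fraction, Fraction]:
    """Evaluate the two gain expressions quoted in the abstract.

    Returns ((r+s-1)(1-1/s), r+s-1) as exact fractions.
    `r` is the computation load and `s` the number of nodes
    that compute each output function (both >= 1).
    """
    if r < 1 or s < 1:
        raise ValueError("r and s must both be at least 1")
    full = Fraction(r + s - 1)
    reduced = full * (1 - Fraction(1, s))
    return reduced, full


# The ratio of the two gains is exactly 1 - 1/s, so the gap
# between them shrinks as s grows.
for s in (2, 4, 16, 64):
    reduced, full = multicast_gains(r=2, s=s)
    print(s, reduced, full, float(reduced / full))
```

Since the ratio of the two expressions is $1-1/s$, the smaller gain is within a few percent of $r+s-1$ once $s$ is in the tens.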

Previous theoretical results pertaining to meta-learning on sequences build on contrived assumptions and are somewhat convoluted. We introduce new information-theoretic tools that lead to an elegant and very general decomposition of error into three components: irreducible error, meta-learning error, and intra-task error. These tools unify analyses across many meta-learning challenges. To illustrate, we apply them to establish new results about in-context learning with transformers. Our theoretical results characterize how error decays in both the number of training sequences and sequence lengths. Our results are very general; for example, they avoid contrived mixing time assumptions made by all prior results that establish decay of error with sequence length.

In the feature space, collapse between features causes critical problems in representation learning by leaving the features indistinguishable. Interpolation-based augmentation methods such as mixup have shown their effectiveness in relieving the collapse problem between different classes, called inter-class collapse. However, intra-class collapse, which arises in coarse-to-fine transfer learning, has not been discussed in the context of augmentation. To address both, we propose a better feature augmentation method, asymptotic midpoint mixup. The method generates augmented features by interpolation but gradually moves them toward the midpoint of inter-class feature pairs. As a result, the method induces two effects: 1) balancing the margin for all classes and 2) only moderately broadening the margin until it holds maximal confidence. We empirically analyze the collapse effects by measuring alignment and uniformity and by visualizing representations. Then, we validate the intra-class collapse effects in coarse-to-fine transfer learning and the inter-class collapse effects in imbalanced learning on long-tailed datasets. In both tasks, our method shows better performance than other augmentation methods.
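
The idea of interpolating features while gradually moving them toward the midpoint of a feature pair can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the linear schedule, the starting coefficient of 1.0, and the names `mixing_coefficient` and `mix_features` are all hypothetical, since the abstract does not specify how the coefficient evolves.

```python
def mixing_coefficient(step: int, total_steps: int, start: float = 1.0) -> float:
    """Hypothetical linear schedule moving the coefficient from `start` to 0.5.

    At 0.5 the mixed feature is the exact midpoint of the pair.
    """
    progress = min(max(step / total_steps, 0.0), 1.0)
    return start + (0.5 - start) * progress


def mix_features(f_a: list[float], f_b: list[float], lam: float) -> list[float]:
    """Standard mixup-style interpolation: lam * f_a + (1 - lam) * f_b."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(f_a, f_b)]


# Two toy features from different classes (assumed values).
feat_a, feat_b = [0.0, 2.0], [2.0, 0.0]
for step in (0, 5, 10):
    lam = mixing_coefficient(step, total_steps=10)
    print(step, lam, mix_features(feat_a, feat_b, lam))
```

With this assumed schedule the augmented feature starts at `feat_a` and ends at the midpoint of the pair, which is the behavior the abstract describes qualitatively.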

Formal contracts and assertions are effective methods to enhance software quality by enforcing preconditions, postconditions, and invariants. Previous research has demonstrated the value of contracts in traditional software development contexts. However, the adoption and impact of contracts in the context of mobile application development, particularly of Android applications, remain unexplored. To address this, we present the first large-scale empirical study on the presence and use of contracts in Android applications, written in Java or Kotlin. We consider different types of contract elements divided into five categories: conditional runtime exceptions, APIs, annotations, assertions, and other. We analyzed 2,390 Android applications from the F-Droid repository and processed more than 51,749 KLOC to determine 1) how and to what extent contracts are used, 2) how contract usage evolves, and 3) whether contracts are used safely in the context of program evolution and inheritance. Our findings include: 1) although most applications do not specify contracts, annotation-based approaches are the most popular among practitioners; 2) applications that use contracts continue to use them in later versions, but the number of methods increases at a higher rate than the number of contracts; and 3) there are many potentially unsafe specification changes when applications evolve and in subtyping relationships, which indicates a lack of specification stability. Our findings show that it would be desirable to have libraries that standardize contract specifications in Java and Kotlin, and tools that aid practitioners in writing stronger contracts and in detecting contract violations in the context of program evolution and inheritance.

Conventional recommendation methods have achieved notable advancements by harnessing collaborative or sequential information from user behavior. Recently, large language models (LLMs) have gained prominence for their capabilities in understanding and reasoning over textual semantics, and have found utility in various domains, including recommendation. Conventional recommendation methods and LLMs each have their strengths and weaknesses. While conventional methods excel at mining collaborative information and modeling sequential behavior, they struggle with data sparsity and the long-tail problem. LLMs, on the other hand, are proficient at utilizing rich textual contexts but face challenges in mining collaborative or sequential information. Despite their individual successes, there is a significant gap in leveraging their combined potential to enhance recommendation performance. In this paper, we introduce a general and model-agnostic framework known as \textbf{L}arge \textbf{la}nguage model with \textbf{m}utual augmentation and \textbf{a}daptive aggregation for \textbf{Rec}ommendation (\textbf{Llama4Rec}). Llama4Rec synergistically combines conventional and LLM-based recommendation models. Llama4Rec proposes data augmentation and prompt augmentation strategies tailored to enhance the conventional model and LLM respectively. An adaptive aggregation module is adopted to combine the predictions of both kinds of models to refine the final recommendation results. Empirical studies on three real-world datasets validate the superiority of Llama4Rec, demonstrating its consistent outperformance of baseline methods and significant improvements in recommendation performance.

Recent contrastive representation learning methods rely on estimating mutual information (MI) between multiple views of an underlying context. E.g., we can derive multiple views of a given image by applying data augmentation, or we can split a sequence into views comprising the past and future of some step in the sequence. Contrastive lower bounds on MI are easy to optimize, but have a strong underestimation bias when estimating large amounts of MI. We propose decomposing the full MI estimation problem into a sum of smaller estimation problems by splitting one of the views into progressively more informed subviews and by applying the chain rule on MI between the decomposed views. This expression contains a sum of unconditional and conditional MI terms, each measuring modest chunks of the total MI, which facilitates approximation via contrastive bounds. To maximize the sum, we formulate a contrastive lower bound on the conditional MI which can be approximated efficiently. We refer to our general approach as Decomposed Estimation of Mutual Information (DEMI). We show that DEMI can capture a larger amount of MI than standard non-decomposed contrastive bounds in a synthetic setting, and learns better representations in a vision domain and for dialogue generation.
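
The decomposition described above can be written out for the simplest case of one split. The symbols are assumptions for illustration: $x$ and $y$ denote the two views, and $y'$ denotes a less informed subview of $y$ (a deterministic function of $y$, so that $I(x; y, y') = I(x; y)$). The chain rule on MI then gives

$$
I(x; y) = I(x; y') + I(x; y \mid y'),
$$

an unconditional term plus a conditional term, each smaller than the total. With a sequence of progressively more informed subviews $y^{1}, \dots, y^{n} = y$, applying the same rule repeatedly yields

$$
I(x; y) = I(x; y^{1}) + \sum_{k=2}^{n} I\left(x; y^{k} \mid y^{k-1}\right).
$$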

Knowledge graph embedding, which aims to represent entities and relations as low dimensional vectors (or matrices, tensors, etc.), has been shown to be a powerful technique for predicting missing links in knowledge graphs. Existing knowledge graph embedding models mainly focus on modeling relation patterns such as symmetry/antisymmetry, inversion, and composition. However, many existing approaches fail to model semantic hierarchies, which are common in real-world applications. To address this challenge, we propose a novel knowledge graph embedding model---namely, Hierarchy-Aware Knowledge Graph Embedding (HAKE)---which maps entities into the polar coordinate system. HAKE is inspired by the fact that concentric circles in the polar coordinate system can naturally reflect the hierarchy. Specifically, the radial coordinate aims to model entities at different levels of the hierarchy, and entities with smaller radii are expected to be at higher levels; the angular coordinate aims to distinguish entities at the same level of the hierarchy, and these entities are expected to have roughly the same radii but different angles. Experiments demonstrate that HAKE can effectively model the semantic hierarchies in knowledge graphs, and significantly outperforms existing state-of-the-art methods on benchmark datasets for the link prediction task.
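
The geometric reading of the polar coordinate system described above can be illustrated with a toy sketch. It is not the HAKE model or its scoring function: the entity coordinates, the tolerance, and the names `to_cartesian` and `hierarchy_relation` are hypothetical. It only shows how a radius can encode the hierarchy level and an angle can separate entities on the same level.

```python
import math


def to_cartesian(radius: float, angle: float) -> tuple[float, float]:
    """Map a (radius, angle) pair to a point in the plane."""
    return (radius * math.cos(angle), radius * math.sin(angle))


def hierarchy_relation(a: tuple[float, float], b: tuple[float, float],
                       tol: float = 0.1) -> str:
    """Compare two (radius, angle) entities by radius only.

    Smaller radius is read as a higher level; radii within `tol`
    of each other are read as the same level.
    """
    if abs(a[0] - b[0]) <= tol:
        return "same level"
    return "first is higher" if a[0] < b[0] else "second is higher"


# Toy entities (assumed values): one parent and two siblings.
mammal = (0.5, 0.0)
dog = (1.0, 0.3)
cat = (1.0, 2.0)

print(hierarchy_relation(mammal, dog))  # radii differ: levels differ
print(hierarchy_relation(dog, cat))     # equal radii: told apart by angle only
print(to_cartesian(*dog), to_cartesian(*cat))
```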

Benefiting from the rapid development of deep learning techniques, salient object detection has recently achieved remarkable progress. However, two major challenges still hinder its application in embedded devices: low-resolution output and heavy model weight. To this end, this paper presents an accurate yet compact deep network for efficient salient object detection. More specifically, given a coarse saliency prediction in the deepest layer, we first employ residual learning to learn side-output residual features for saliency refinement, which can be achieved with very few convolutional parameters while maintaining accuracy. Secondly, we further propose reverse attention to guide such side-output residual learning in a top-down manner. By erasing the currently predicted salient regions from the side-output features, the network can eventually explore the missing object parts and details, which results in high resolution and accuracy. Experiments on six benchmark datasets demonstrate that the proposed approach compares favorably against state-of-the-art methods, with advantages in terms of simplicity, efficiency (45 FPS), and model size (81 MB).
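
The erasing step can be sketched in a few lines. This is a minimal illustration under assumptions, not the paper's network: it assumes the coarse prediction is a map of logits, that erasing means weighting each location by one minus the sigmoid of that logit, and that features are plain nested lists. The name `reverse_attention` is used here only for the sketch.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reverse_attention(features: list[list[list[float]]],
                      coarse_logits: list[list[float]]) -> list[list[list[float]]]:
    """Suppress already-predicted salient locations in every channel.

    features:      C channels, each an H x W grid of activations.
    coarse_logits: H x W grid of saliency logits from a deeper layer.
    Each location is scaled by 1 - sigmoid(logit), so confident
    salient regions are erased and the rest is kept.
    """
    weights = [[1.0 - sigmoid(v) for v in row] for row in coarse_logits]
    return [
        [[f * w for f, w in zip(f_row, w_row)]
         for f_row, w_row in zip(channel, weights)]
        for channel in features
    ]


# One channel, 1 x 2 grid: the second location is confidently salient.
features = [[[2.0, 2.0]]]
coarse_logits = [[0.0, 100.0]]
print(reverse_attention(features, coarse_logits))
```

In the toy call, the uncertain location keeps half of its activation while the confidently salient one is driven to nearly zero, so later refinement focuses on what the coarse prediction missed.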

Multi-relation Question Answering is a challenging task because it requires elaborate analysis of questions and reasoning over multiple fact triples in a knowledge base. In this paper, we present a novel model called Interpretable Reasoning Network that employs an interpretable, hop-by-hop reasoning process for question answering. The model dynamically decides which part of an input question should be analyzed at each hop; predicts a relation that corresponds to the current parsed results; utilizes the predicted relation to update the question representation and the state of the reasoning process; and then drives the next-hop reasoning. Experiments show that our model yields state-of-the-art results on two datasets. More interestingly, the model can offer traceable and observable intermediate predictions for reasoning analysis and failure diagnosis.