In exploratory factor analysis, model parameters are usually estimated by the maximum likelihood method. The maximum likelihood estimate is obtained by solving a complicated multivariate algebraic equation. Since this equation usually cannot be solved in closed form, the solution is typically computed with continuous optimization methods, such as Newton-Raphson methods. With this procedure, however, the solution inevitably depends on the estimation algorithm and the initial value, since the log-likelihood function is highly non-concave. In particular, the estimates of unique variances can be zero or negative, which are referred to as improper solutions; in this case, the maximum likelihood estimate can be severely unstable. To investigate the instability of the maximum likelihood estimate, we compute exact solutions to the multivariate algebraic equation by using algebraic computations. We provide a computationally efficient algorithm based on these algebraic computations, specifically optimized for maximum likelihood factor analysis. Specifically, we employ Gr\"obner bases and cylindrical decomposition, which are powerful tools for solving multivariate algebraic equations. Our proposed procedure produces all exact solutions to the algebraic equation; therefore, these solutions are independent of the initial value and the estimation algorithm. We conduct Monte Carlo simulations to investigate the characteristics of the maximum likelihood solutions.
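
The dependence on the initial value described above can be seen in a toy setting. The sketch below is only an illustration under stated assumptions: the objective $f(x) = -(x^2-1)^2$ is a hypothetical non-concave stand-in, not the factor analysis log-likelihood, and the helper name `newton_stationary_point` is invented for this example. Newton-Raphson applied to the stationarity equation $f'(x) = 0$ lands on a different solution depending on where it starts, whereas the algebraic equation itself has three exact roots, $-1$, $0$, and $1$.

```python
def newton_stationary_point(grad, hess, x0, tol=1e-12, max_iter=100):
    """Newton-Raphson iteration for a root of grad, i.e. a stationary point."""
    x = x0
    for _ in range(max_iter):
        step = grad(x) / hess(x)
        x -= step
        if abs(step) < tol:
            break
    return x

# Toy objective f(x) = -(x**2 - 1)**2 (an assumption for illustration only).
# It has two maximizers at x = -1 and x = 1 and a local minimizer at x = 0.
grad = lambda x: -4.0 * x * (x**2 - 1.0)   # f'(x)
hess = lambda x: -12.0 * x**2 + 4.0        # f''(x)

left = newton_stationary_point(grad, hess, x0=-2.0)
right = newton_stationary_point(grad, hess, x0=2.0)
middle = newton_stationary_point(grad, hess, x0=0.1)
print(left, right, middle)
```

Each run returns only one of the three roots, and the run started at 0.1 converges to a minimizer rather than a maximizer. An exact algebraic method that enumerates all roots avoids this dependence.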

Related content

Statistical tools that satisfy rigorous privacy guarantees are necessary for modern data analysis. It is well known that robustness against contamination is linked to differential privacy. Despite this fact, using multivariate medians for differentially private and robust multivariate location estimation has not been systematically studied. We develop novel finite-sample performance guarantees for differentially private multivariate depth-based medians, which are essentially sharp. Our results cover commonly used depth functions, such as the halfspace (or Tukey) depth, spatial depth, and the integrated dual depth. We show that under Cauchy marginals, the cost of heavy-tailed location estimation outweighs the cost of privacy. We demonstrate our results numerically using a Gaussian contamination model in dimensions up to d = 100, and compare them to a state-of-the-art private mean estimation algorithm. As a by-product of our investigation, we prove concentration inequalities for the output of the exponential mechanism about the maximizer of the population objective function. This bound applies to objective functions that satisfy a mild regularity condition.
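
As a rough illustration of the exponential mechanism mentioned above, the following sketch draws a private median in one dimension. It is a simplified assumption-laden example, not the multivariate depth-based estimator of the abstract: the rank-based utility, the candidate grid, the contamination at 50, and the function name `exponential_mechanism_median` are all hypothetical choices made here.

```python
import math
import random

def exponential_mechanism_median(data, candidates, epsilon, rng):
    """Sample a candidate with probability proportional to exp(epsilon * u / 2)."""
    n = len(data)
    # Utility: negative distance between the candidate's rank and n / 2.
    # Replacing one data point changes any rank by at most 1, so the
    # sensitivity of this utility is 1.
    utilities = [-abs(sum(x <= c for x in data) - n / 2) for c in candidates]
    top = max(utilities)  # subtract the maximum for numerical stability
    weights = [math.exp(epsilon * (u - top) / 2) for u in utilities]
    return rng.choices(candidates, weights=weights, k=1)[0]

rng = random.Random(0)
# 200 standard Gaussian points contaminated by 20 outliers at 50.
data = [rng.gauss(0.0, 1.0) for _ in range(200)] + [50.0] * 20
candidates = [i / 10 for i in range(-50, 51)]  # grid on [-5, 5]
private_median = exponential_mechanism_median(data, candidates, epsilon=1.0, rng=rng)
print(private_median)
```

Because the utility depends on ranks only, the outliers shift the output far less than they would shift a mean, which reflects the link between robustness and privacy noted in the abstract.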

The paper presents a new approach to evaluating the stability of approximate Riemann solvers based on the direct Lyapunov method. The methodology offers a detailed understanding of the origins of numerical shock instability in approximate Riemann solvers. The pressure perturbation feeding the density and transverse momentum perturbations is identified as the cause of the numerical shock instabilities in the complete approximate Riemann solvers, while the magnitude of the numerical shock instabilities is found to be proportional to the magnitude of the pressure perturbations. Based on these insights, a shock-stable HLLEM scheme is proposed. A set of numerical test cases is solved to show that the proposed scheme is free from the numerical shock instability problems of the original HLLEM scheme at high Mach numbers.

The present article aims to design and analyze efficient first-order strong schemes for a generalized A\"{i}t-Sahalia type model arising in mathematical finance and evolving in a positive domain $(0, \infty)$, which possesses a diffusion term with superlinear growth and a highly nonlinear drift that blows up at the origin. Such a complicated structure of the model unavoidably causes essential difficulties in the construction and convergence analysis of time discretizations. By incorporating implicitness in the term $\alpha_{-1} x^{-1}$ and a corrective mapping $\Phi_h$ in the recursion, we develop a novel class of explicit and unconditionally positivity-preserving (i.e., for any step-size $h>0$) Milstein-type schemes for the underlying model. In both non-critical and general critical cases, we introduce a novel approach to analyze mean-square error bounds of the novel schemes, without relying on a priori high-order moment bounds of the numerical approximations. The expected order-one mean-square convergence is attained for the proposed scheme. The above theoretical guarantee can be used to justify the optimal complexity of the Multilevel Monte Carlo method. Numerical experiments are finally provided to verify the theoretical findings.
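
For readers unfamiliar with Milstein-type schemes, the sketch below shows the classical explicit Milstein step on geometric Brownian motion. This is deliberately not the scheme of the abstract: it has no implicit treatment of $\alpha_{-1} x^{-1}$, no corrective mapping $\Phi_h$, and no positivity guarantee, and the test equation, parameter values, and function name `milstein_gbm` are assumptions chosen because the exact solution is known in closed form.

```python
import math
import random

def milstein_gbm(x0, mu, sigma, horizon, n_steps, rng):
    """Simulate dX = mu*X dt + sigma*X dW with the classical Milstein scheme.

    Returns the terminal Milstein value and the exact terminal value
    driven by the same Brownian increments.
    """
    h = horizon / n_steps
    x, w = x0, 0.0
    for _ in range(n_steps):
        dw = rng.gauss(0.0, math.sqrt(h))
        # Euler-Maruyama terms plus the Milstein correction
        # 0.5 * b(x) * b'(x) * (dW^2 - h), with b(x) = sigma*x, b'(x) = sigma.
        x += mu * x * h + sigma * x * dw + 0.5 * sigma**2 * x * (dw**2 - h)
        w += dw
    exact = x0 * math.exp((mu - 0.5 * sigma**2) * horizon + sigma * w)
    return x, exact

approx, exact = milstein_gbm(1.0, 0.05, 0.2, 1.0, 1000, random.Random(1))
print(abs(approx - exact))
```

Comparing the pathwise error for several step sizes $h$ is the usual way to check the order-one strong convergence that the abstract establishes for its own schemes.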

This paper makes two contributions to the field of text-based patent similarity. First, it compares the performance of different kinds of patent-specific pretrained embedding models, namely static word embeddings (such as word2vec and doc2vec models) and contextual word embeddings (such as transformer-based models), on the task of patent similarity calculation. Second, it specifically compares the performance of Sentence Transformers (SBERT) architectures with different training phases on the patent similarity task. To assess the models' performance, we use information about patent interferences, a phenomenon in which two or more patent claims belonging to different patent applications are proven to be overlapping by patent examiners. We therefore use these interference cases as a proxy for maximum similarity between two patents, treating them as ground truth to evaluate the performance of the different embedding models. Our results show, first, that Patent SBERT-adapt-ub, the domain adaptation of the pretrained Sentence Transformer architecture proposed in this research, outperforms the current state-of-the-art in patent similarity. Second, they show that, in some cases, the performance of large static models is still comparable to that of contextual ones when trained on extensive data; thus, we believe that the superior performance of contextual embeddings may not be related to the actual architecture but rather to the way the training phase is performed.
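
A minimal sketch of how embedding-based similarity can be scored is given below. It assumes cosine similarity as the comparison measure, which the abstract does not state, and the three 4-dimensional vectors are made-up stand-ins for model outputs; real embedding models produce vectors with hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical embeddings, invented for illustration only.
patent_a = [0.9, 0.1, 0.3, 0.0]
patent_b = [0.8, 0.2, 0.4, 0.1]  # stands in for a patent in interference with A
patent_c = [0.0, 0.9, 0.1, 0.7]  # stands in for an unrelated patent

sim_ab = cosine_similarity(patent_a, patent_b)
sim_ac = cosine_similarity(patent_a, patent_c)
print(sim_ab, sim_ac)
```

Under the evaluation idea of the abstract, a good embedding model should score interfering pairs, such as A and B here, higher than unrelated pairs.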

A procedure for asymptotic bias reduction of maximum likelihood estimates of generic estimands is developed. The estimator is realized as a plug-in estimator, where the parameter maximizes the penalized likelihood with a penalty function that satisfies a quasi-linear partial differential equation of the first order. The integration of the partial differential equation with the aid of differential geometry is discussed. Applications to generalized linear models, linear mixed-effects models, and a location-scale family are presented.

We propose to improve the convergence properties of the single-reference coupled cluster (CC) method through an augmented Lagrangian formalism. The conventional CC method changes a linear high-dimensional eigenvalue problem with exponential size into a problem of determining the roots of a nonlinear system of equations that has a manageable size. However, current numerical procedures for solving this system of equations to get the lowest eigenvalue suffer from two practical issues: First, solving the CC equations may not converge, and second, when converging, they may converge to other -- potentially unphysical -- states, which are stationary points of the CC energy expression. We show that both issues can be dealt with when a suitably defined energy is minimized in addition to solving the original CC equations. We further propose an augmented Lagrangian method for coupled cluster (alm-CC) to solve the resulting constrained optimization problem. We numerically investigate the proposed augmented Lagrangian formulation showing that the convergence towards the ground state is significantly more stable and that the optimization procedure is less susceptible to local minima. Furthermore, the computational cost of alm-CC is comparable to the conventional CC method.

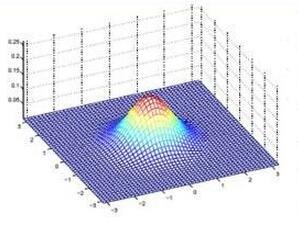

We address the problem of the best uniform approximation of a continuous function on a convex domain. The approximation is by linear combinations of a finite system of functions (not necessarily Chebyshev) under arbitrary linear constraints. By modifying the concept of alternance and the Remez iterative procedure, we present a method that proves efficient in numerical problems. A linear rate of convergence is proved under some favourable assumptions. Special attention is paid to systems of complex exponents, Gaussian functions, and lacunary algebraic and trigonometric polynomials. Applications to signal processing, linear ODEs, switching dynamical systems, and Markov-Bernstein type inequalities are considered.

Deep generative models aim to learn the underlying distribution of data and generate new samples. Despite the diversity of generative models and their high-quality generation performance in practice, most of them lack rigorous theoretical convergence proofs. In this work, we aim to establish some convergence results for OT-Flow, one of the deep generative models. First, by reformulating the framework of the OT-Flow model, we establish the $\Gamma$-convergence of the OT-Flow formulation to the corresponding optimal transport (OT) problem as the regularization parameter $\alpha$ goes to infinity. Second, since the loss function is approximated by the Monte Carlo method in training, we also establish the convergence of the discrete loss function to the continuous one as the sample number $N$ goes to infinity. Meanwhile, the approximation capability of the neural network provides an upper bound for the discrete loss function of the minimizers. The proofs in both aspects provide convincing assurances for OT-Flow.

In the search for highly efficient decoders for short LDPC codes approaching maximum likelihood performance, a relayed decoding strategy, which activates the ordered statistics decoding process upon failure of a neural min-sum decoder, is enhanced with three innovations. Firstly, soft information gathered at each step of the neural min-sum decoder is leveraged to forge a new reliability measure using a convolutional neural network. This measure aids in constructing the most reliable basis of ordered statistics decoding, bolstering the decoding process by excluding error-prone bits or concentrating them in a smaller area. Secondly, an adaptive ordered statistics decoding process is introduced, guided by a derived decoding path comprising prioritized blocks, each containing distinct test error patterns. The priority of these blocks is determined from the statistical data during the query phase. Furthermore, effective complexity management methods are devised by adjusting the decoding path's length or refining constraints on the involved blocks. Thirdly, a simple auxiliary criterion is introduced to reduce computational complexity by minimizing the number of candidate codewords before selecting the optimal estimate. Extensive experimental results and complexity analysis strongly support the proposed framework, demonstrating its advantages in terms of high throughput, low complexity, and independence from noise variance, in addition to superior decoding performance.

We introduce a 2-dimensional stochastic dominance (2DSD) index to characterize both strict and almost stochastic dominance. Based on this index, we derive an estimator for the minimum violation ratio (MVR), also known as the critical parameter, of the almost stochastic ordering condition between two variables. We determine the asymptotic properties of the empirical 2DSD index and MVR for the most frequently used stochastic orders. We also provide conditions under which the bootstrap estimators of these quantities are strongly consistent. As an application, we develop consistent bootstrap testing procedures for almost stochastic dominance. The performance of the tests is checked via simulations and the analysis of real data.
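
To make the idea of a violation ratio concrete, the sketch below computes an empirical first-order version from two samples: the share of the area between the empirical CDFs on which $X$ fails to dominate $Y$, that is, where $F_X > F_Y$. This is one common way to quantify almost stochastic dominance and is offered only as an assumption-based illustration; it is not the 2DSD index or the MVR estimator of the abstract, and the function name and sample values are hypothetical.

```python
from bisect import bisect_right

def violation_ratio(x, y):
    """Share of the area between empirical CDFs where X fails to dominate Y."""
    xs, ys = sorted(x), sorted(y)
    grid = sorted(set(xs) | set(ys))
    violated = total = 0.0
    for left, right in zip(grid, grid[1:]):
        # Both empirical CDFs are constant on [left, right).
        f_x = bisect_right(xs, left) / len(xs)
        f_y = bisect_right(ys, left) / len(ys)
        diff = (f_x - f_y) * (right - left)
        total += abs(diff)
        violated += max(diff, 0.0)  # F_X > F_Y violates dominance of X over Y
    return violated / total if total > 0 else 0.0

x = [1.0, 3.0, 4.0, 6.0]
y = [2.0, 2.5, 3.5, 5.0]
ratio = violation_ratio(x, y)
strict = violation_ratio([2.0, 3.0], [1.0, 2.0])
print(ratio, strict)
```

A ratio of zero corresponds to strict first-order dominance on the samples, while a small positive ratio indicates dominance that is violated only on a small part of the range.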