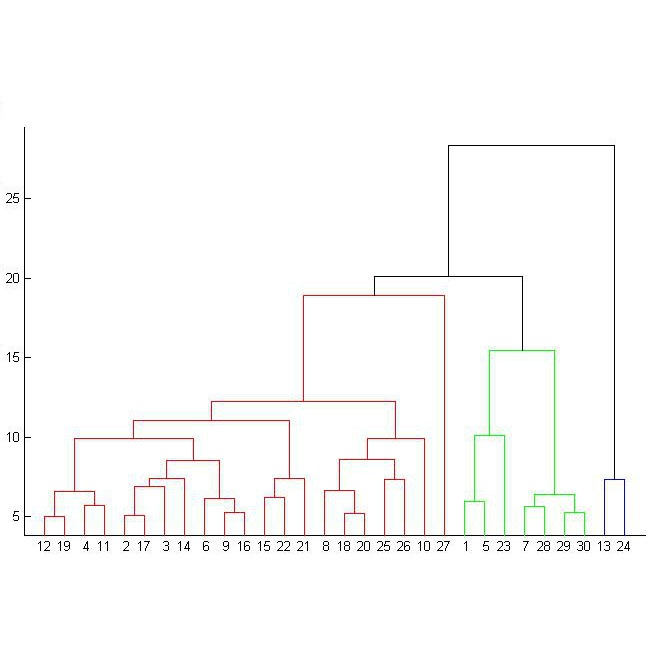

Movement-specific vehicle classification and counting at traffic intersections is a crucial component of various traffic management activities. With recent advancements in computer-vision-based techniques, cameras have emerged as a reliable data source for extracting vehicular trajectories from traffic scenes. However, classifying these trajectories by movement type is challenging, because the characteristics of motion trajectories obtained this way vary with camera calibration. Although some existing methods have addressed such classification tasks with decent accuracy, their performance relied significantly on the manual specification of several regions of interest. In this study, we propose an automated method for movement-specific classification (such as right-turn, left-turn, and through movements) of vision-based vehicle trajectories. Our classification framework identifies the different movement patterns observed in a traffic scene using an unsupervised hierarchical clustering technique. Thereafter, a similarity-based assignment strategy is adopted to assign incoming vehicle trajectories to the identified movement groups. A new similarity measure was designed to overcome the inherent shortcomings of vision-based trajectories. Experimental results demonstrate the effectiveness of the proposed classification approach and its ability to adapt to different traffic scenarios without any manual intervention.
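The two-stage idea described above (cluster the observed trajectories into movement groups, then assign each incoming trajectory to the most similar group) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not specify the new similarity measure, so a plain mean point-wise distance between arc-length-resampled trajectories stands in for it, and the function names, the average-linkage rule, and the `threshold` value are all hypothetical.

```python
import math

def resample(traj, n=8):
    """Resample a polyline of (x, y) points to n points evenly spaced by arc length."""
    cum = [0.0]  # cumulative arc length at each vertex
    for (x0, y0), (x1, y1) in zip(traj, traj[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    out, j = [], 0
    for i in range(n):
        t = cum[-1] * i / (n - 1)
        while j < len(traj) - 2 and cum[j + 1] < t:
            j += 1
        seg = cum[j + 1] - cum[j]
        a = 0.0 if seg == 0 else (t - cum[j]) / seg
        out.append((traj[j][0] + a * (traj[j + 1][0] - traj[j][0]),
                    traj[j][1] + a * (traj[j + 1][1] - traj[j][1])))
    return out

def distance(a, b, n=8):
    """Stand-in dissimilarity (an assumption): mean distance between resampled points."""
    ra, rb = resample(a, n), resample(b, n)
    return sum(math.hypot(p[0] - q[0], p[1] - q[1]) for p, q in zip(ra, rb)) / n

def cluster(trajs, threshold):
    """Agglomerative clustering with average linkage; stop merging above threshold."""
    clusters = [[i] for i in range(len(trajs))]
    dist = {(i, j): distance(trajs[i], trajs[j])
            for i in range(len(trajs)) for j in range(i + 1, len(trajs))}

    def linkage(c1, c2):
        return sum(dist[min(i, j), max(i, j)] for i in c1 for j in c2) / (len(c1) * len(c2))

    while len(clusters) > 1:
        d, a, b = min((linkage(clusters[a], clusters[b]), a, b)
                      for a in range(len(clusters))
                      for b in range(a + 1, len(clusters)))
        if d > threshold:
            break
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]
    return clusters

def assign(traj, trajs, clusters):
    """Return the index of the cluster with the smallest mean distance to traj."""
    scores = [sum(distance(traj, trajs[i]) for i in c) / len(c) for c in clusters]
    return scores.index(min(scores))

# Toy image-plane trajectories: two through, two right-turn, two left-turn.
trajs = [
    [(0, 0), (10, 0)], [(0, 1), (10, 1)],
    [(0, 0), (5, 0), (5, -5)], [(1, 0), (6, 0), (6, -5)],
    [(0, 0), (5, 0), (5, 5)], [(1, 0), (6, 0), (6, 5)],
]
clusters = cluster(trajs, threshold=1.5)
incoming = [(0, 0.5), (5, 0.5), (5, -4)]  # a new right-turn-like trajectory
group = assign(incoming, trajs, clusters)
```

On this toy data the three movement groups are recovered without any hand-drawn regions of interest, and the incoming trajectory lands in the group that contains the right-turn examples. In practice the merge threshold and the similarity measure would need to reflect the camera view.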

Related content

Security Orchestration, Automation, and Response (SOAR) platforms integrate and orchestrate a wide variety of security tools to accelerate the operational activities of a Security Operation Center (SOC). Integration of security tools in a SOAR platform is mostly done manually using APIs, plugins, and scripts. SOC teams need to navigate through the API calls of different security tools to find a suitable API to define or update an incident response action. Analyzing various types of API documentation with diverse API formats and presentation structures involves significant challenges, such as data availability, data heterogeneity, and semantic variation, for the automatic identification of security tool APIs specific to a particular task. Given that these challenges can negatively affect a SOC team's ability to handle security incidents effectively and efficiently, we consider it important to devise suitable automated support solutions to address them. We propose APIRO, a novel learning-based framework for automated security tool API Recommendation for security Orchestration, automation, and response. To mitigate the data availability constraint, APIRO enriches security tool API descriptions by applying a wide variety of data augmentation techniques. To learn the data heterogeneity of the security tools and the semantic variation in API descriptions, APIRO consists of an API-specific word embedding model and a Convolutional Neural Network (CNN) model that are used to predict the top 3 relevant APIs for a task. We experimentally demonstrate the effectiveness of APIRO in recommending APIs for different tasks using 3 security tools and 36 augmentation techniques. Our experimental results demonstrate the feasibility of APIRO, which achieves 91.9% Top-1 Accuracy.

Owing to their effective and flexible data acquisition, unmanned aerial vehicles (UAVs) have recently become a hotspot across the fields of computer vision (CV) and remote sensing (RS). Inspired by the recent success of deep learning (DL), many advanced object detection and tracking approaches have been widely applied to various UAV-related tasks, such as environmental monitoring, precision agriculture, and traffic management. This paper provides a comprehensive survey of the research progress and prospects of DL-based UAV object detection and tracking methods. More specifically, we first outline the challenges and statistics of existing methods, and provide solutions from the perspective of DL-based models in three research topics: object detection from the image, object detection from the video, and object tracking from the video. Open datasets related to UAV-dominated object detection and tracking are listed exhaustively, and four benchmark datasets are employed for performance evaluation using some state-of-the-art methods. Finally, prospects and considerations for future work are discussed and summarized. It is expected that this survey can provide researchers from the remote sensing field with an overview of DL-based UAV object detection and tracking methods, along with some thoughts on their further development.

Automated machine learning (AutoML) aims to find optimal machine learning solutions automatically for a given machine learning problem. It could relieve data scientists of the burden of laborious manual tuning and give domain experts without extensive machine learning experience access to off-the-shelf solutions. In this paper, we review the current developments of AutoML in three categories: automated feature engineering (AutoFE), automated model and hyperparameter learning (AutoMHL), and automated deep learning (AutoDL). State-of-the-art techniques adopted in the three categories are presented, including Bayesian optimization, reinforcement learning, evolutionary algorithms, and gradient-based approaches. We summarize popular AutoML frameworks and conclude with the current open challenges of AutoML.

Accurate detection and tracking of objects is vital for effective video understanding. In previous work, the two tasks have been combined in a way that tracking is based heavily on detection, but the detection benefits marginally from the tracking. To increase synergy, we propose to more tightly integrate the tasks by conditioning the object detection in the current frame on tracklets computed in prior frames. With this approach, the object detection results not only have high detection responses, but also improved coherence with the existing tracklets. This greater coherence leads to estimated object trajectories that are smoother and more stable than the jittered paths obtained without tracklet-conditioned detection. Over extensive experiments, this approach is shown to achieve state-of-the-art performance in terms of both detection and tracking accuracy, as well as noticeable improvements in tracking stability.

The ever-growing interest in the acquisition and development of unmanned aerial vehicles (UAVs), commonly known as drones, over the past few years has produced a very promising and effective technology. Because of their small size and fast deployment, UAVs have shown their effectiveness in collecting data over unreachable areas and restricted coverage zones. Moreover, their flexibly defined capacity enables them to collect information with a very high level of detail, leading to high-resolution images. UAVs have mainly served in military scenarios. However, in the last decade, they have been broadly adopted in civilian applications as well. The task of aerial surveillance and situation awareness is usually completed by integrating intelligence, surveillance, observation, and navigation systems, all interacting in the same operational framework. To build this capability, UAVs are well-suited tools that can be equipped with a wide variety of sensors, such as cameras or radars. Deep learning has been widely recognized as a prominent approach in different computer vision applications. Specifically, one-stage and two-stage object detectors are regarded as the two most important groups of Convolutional Neural Network based object detection methods. A one-stage object detector usually outperforms a two-stage object detector in speed; however, it normally trails two-stage object detectors in detection accuracy. In this study, the focal loss based RetinaNet, which works as a one-stage object detector, is used for UAV-based object detection so as to match the speed of regular one-stage detectors while also surpassing two-stage detectors in accuracy. State-of-the-art performance is shown on a UAV-captured image dataset, the Stanford Drone Dataset (SDD).
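The focal loss that RetinaNet relies on can be illustrated for a single binary prediction. The sketch below uses the standard published form, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t); the function name and the defaults `gamma=2.0` and `alpha=0.25` are assumptions taken from common practice, not values reported in this abstract.

```python
import math

def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class, strictly between 0 and 1
    y: ground-truth label, 1 (object) or 0 (background)
    gamma: focusing parameter; larger values down-weight easy examples more
    alpha: class-balancing weight applied to the positive class
    """
    p_t = p if y == 1 else 1.0 - p              # probability given to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # matching class weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)

# An easy, well-classified background example contributes far less
# than a hard, misclassified one.
easy = focal_loss(0.05, 0)  # background predicted as unlikely to be an object
hard = focal_loss(0.95, 0)  # background predicted as very likely an object
```

With `gamma=0` the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is why the loss is usually described as a reweighting that keeps the many easy background anchors of a one-stage detector from dominating training.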

Traditional multiple object tracking methods divide the task into two parts: affinity learning and data association. This separation requires defining a hand-crafted training goal in the affinity learning stage and a hand-crafted cost function in the data association stage, which prevents the tracking goals from being learned directly from the features. In this paper, we present a new multiple object tracking (MOT) framework with a data-driven association method, named Tracklet Association Tracker (TAT). The framework aims to unify feature learning and data association through a bi-level optimization formulation, so that the association results can be learned directly from features. To boost performance, we also adopt the popular hierarchical association and perform the necessary alignment and selection of raw detection responses. Our model trains over 20X faster than a similar approach and achieves state-of-the-art performance on both the MOT2016 and MOT2017 benchmarks.

Visual object tracking is an important computer vision problem with numerous real-world applications including human-computer interaction, autonomous vehicles, robotics, motion-based recognition, video indexing, surveillance and security. In this paper, we aim to extensively review the latest trends and advances in the tracking algorithms and evaluate the robustness of trackers in the presence of noise. The first part of this work comprises a comprehensive survey of recently proposed tracking algorithms. We broadly categorize trackers into correlation filter based trackers and the others as non-correlation filter trackers. Each category is further classified into various types of trackers based on the architecture of the tracking mechanism. In the second part of this work, we experimentally evaluate tracking algorithms for robustness in the presence of additive white Gaussian noise. Multiple levels of additive noise are added to the Object Tracking Benchmark (OTB) 2015, and the precision and success rates of the tracking algorithms are evaluated. Some algorithms suffered more performance degradation than others, which brings to light a previously unexplored aspect of the tracking algorithms. The relative rank of the algorithms based on their performance on benchmark datasets may change in the presence of noise. Our study concludes that no single tracker is able to achieve the same efficiency in the presence of noise as under noise-free conditions; thus, there is a need to include a parameter for robustness to noise when evaluating newly proposed tracking algorithms.
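The noise-robustness protocol described above, in which additive white Gaussian noise is applied to benchmark frames at several levels, can be sketched as follows. This is an assumption-laden illustration rather than the authors' code: frames are modeled as nested lists of 8-bit grayscale values, and the function name, the `seed` parameter, and the sigma levels are hypothetical.

```python
import random

def add_awgn(image, sigma, seed=None):
    """Return a copy of a grayscale image with zero-mean Gaussian noise added.

    image: list of rows, each a list of integer pixel values in [0, 255]
    sigma: standard deviation of the noise, in pixel-intensity units
    seed:  optional seed so that a noise level can be reproduced
    """
    rng = random.Random(seed)
    # Noise is drawn independently per pixel, then the result is rounded
    # and clipped back into the valid 8-bit range.
    return [[min(255, max(0, round(px + rng.gauss(0.0, sigma)))) for px in row]
            for row in image]

frame = [[0, 64, 128], [192, 255, 32]]
# Several noise levels, as one would sweep when re-running a tracker.
noisy_frames = {sigma: add_awgn(frame, sigma, seed=0) for sigma in (0, 5, 15, 30)}
```

Clipping to [0, 255] means the effective noise is no longer exactly Gaussian near the intensity limits, which is worth keeping in mind when comparing results across noise levels.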

In this paper, we present a new method for detecting road users in an urban environment which leads to an improvement in multiple object tracking. Our method takes as an input a foreground image and improves the object detection and segmentation. This new image can be used as an input to trackers that use foreground blobs from background subtraction. The first step is to create foreground images for all the frames in an urban video. Then, starting from the original blobs of the foreground image, we merge the blobs that are close to one another and that have similar optical flow. The next step is extracting the edges of the different objects to detect multiple objects that might be very close (and be merged in the same blob) and to adjust the size of the original blobs. At the same time, we use the optical flow to detect occlusion of objects that are moving in opposite directions. Finally, we make a decision on which information we keep in order to construct a new foreground image with blobs that can be used for tracking. The system is validated on four videos of an urban traffic dataset. Our method improves the recall and precision metrics for the object detection task compared to the vanilla background subtraction method and improves the CLEAR MOT metrics in the tracking tasks for most videos.

The field of Multi-Agent System (MAS) is an active area of research within Artificial Intelligence, with an increasingly important impact in industrial and other real-world applications. Within a MAS, autonomous agents interact to pursue personal interests and/or to achieve common objectives. Distributed Constraint Optimization Problems (DCOPs) have emerged as one of the prominent agent architectures to govern the agents' autonomous behavior, where both algorithms and communication models are driven by the structure of the specific problem. During the last decade, several extensions to the DCOP model have enabled them to support MAS in complex, real-time, and uncertain environments. This survey aims at providing an overview of the DCOP model, giving a classification of its multiple extensions and addressing both resolution methods and applications that find a natural mapping within each class of DCOPs. The proposed classification suggests several future perspectives for DCOP extensions, and identifies challenges in the design of efficient resolution algorithms, possibly through the adaptation of strategies from different areas.

A novel multi-atlas based image segmentation method is proposed by integrating a semi-supervised label propagation method and a supervised random forests method in a pattern recognition based label fusion framework. The semi-supervised label propagation method takes into consideration local and global image appearance of images to be segmented and segments the images by propagating reliable segmentation results obtained by the supervised random forests method. Particularly, the random forests method is used to train a regression model based on image patches of atlas images for each voxel of the images to be segmented. The regression model is used to obtain reliable segmentation results to guide the label propagation for the segmentation. The proposed method has been compared with state-of-the-art multi-atlas based image segmentation methods for segmenting the hippocampus in MR images. The experiment results have demonstrated that our method obtained superior segmentation performance.