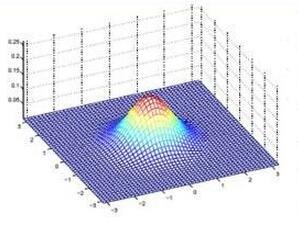

There is a need for new models for characterizing dependence in multivariate data. The multivariate Gaussian distribution is routinely used, but it cannot characterize nonlinear relationships in the data. Most nonlinear extensions are highly complex, for example involving estimation of a nonlinear regression model in latent variables. In this article, we propose a relatively simple class of Ellipsoid-Gaussian multivariate distributions, derived from a Gaussian linear factor model whose latent variables follow a von Mises-Fisher distribution on a unit hypersphere. We show that the Ellipsoid-Gaussian distribution can flexibly model curved relationships among variables with lower-dimensional structure. Taking a Bayesian approach, we propose a hybrid of gradient-based geodesic Monte Carlo and adaptive Metropolis for posterior sampling. We derive basic properties and illustrate the utility of the Ellipsoid-Gaussian distribution on a variety of simulated and real data applications. An accompanying R package is also available.
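The generative construction can be sketched in Python with NumPy. The sketch assumes the factor model takes the form x = c + Λη + ε, with η drawn from a von Mises-Fisher distribution vMF(μ, κ) on the unit sphere in R^k and ε ~ N(0, σ²I); the vMF draws use Wood's (1994) rejection sampler. The function names and parameter values are illustrative assumptions, not the interface of the accompanying R package.

```python
import numpy as np

def sample_vmf(mu, kappa, n, rng):
    """Draw n unit vectors from vMF(mu, kappa) on S^{p-1} (Wood, 1994)."""
    mu = np.asarray(mu, dtype=float)
    mu = mu / np.linalg.norm(mu)
    p = mu.size
    b = (-2.0 * kappa + np.sqrt(4.0 * kappa**2 + (p - 1.0) ** 2)) / (p - 1.0)
    x0 = (1.0 - b) / (1.0 + b)
    c = kappa * x0 + (p - 1.0) * np.log(1.0 - x0**2)
    out = np.empty((n, p))
    for i in range(n):
        # Rejection step for w = <eta, mu>, the cosine to the mean direction.
        while True:
            z = rng.beta((p - 1.0) / 2.0, (p - 1.0) / 2.0)
            w = (1.0 - (1.0 + b) * z) / (1.0 - (1.0 - b) * z)
            if kappa * w + (p - 1.0) * np.log(1.0 - x0 * w) - c >= np.log(rng.uniform()):
                break
        # Uniformly random unit direction orthogonal to mu.
        v = rng.standard_normal(p)
        v -= v.dot(mu) * mu
        v /= np.linalg.norm(v)
        out[i] = w * mu + np.sqrt(max(1.0 - w * w, 0.0)) * v
    return out

def sample_ellipsoid_gaussian(n, center, Lam, mu, kappa, sigma, rng):
    """Assumed model: x = center + Lam @ eta + N(0, sigma^2 I) noise."""
    eta = sample_vmf(mu, kappa, n, rng)              # (n, k) latent points on the sphere
    noise = sigma * rng.standard_normal((n, len(center)))
    return center + eta @ Lam.T + noise, eta         # (n, p) observations

rng = np.random.default_rng(0)
Lam = np.array([[3.0, 0.0], [0.0, 1.0], [1.0, 1.0]])  # maps a circle to an ellipse in R^3
x, eta = sample_ellipsoid_gaussian(500, np.zeros(3), Lam, np.array([1.0, 0.0]), 2.0, 0.1, rng)
```

Here the observations scatter around a tilted ellipse in R^3, and κ controls how concentrated the points are along the arc around the direction Λμ.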

Related content

Computational analysis with the finite element method requires geometrically accurate meshes. It is well known that high-order meshes can accurately capture curved surfaces with fewer degrees of freedom than low-order meshes. Existing techniques for high-order mesh generation typically output meshes with the same polynomial order for all elements. However, high-order elements away from curvilinear boundaries or interfaces increase the computational cost of the simulation without improving geometric accuracy. In prior work, we presented one such technique: it generates body-fitted uniform-order meshes by morphing a given mesh to align with the surface of interest, prescribed as the zero isocontour of a level-set function. We extend this method to generate mixed-order meshes in which curved surfaces of the domain are discretized with high-order elements, while low-order elements are used elsewhere. Numerical experiments demonstrate the robustness of the approach and show that it can generate mixed-order meshes that are much more efficient than high uniform-order meshes. The proposed approach is purely algebraic and extends to different element types (quadrilaterals, triangles, tetrahedra, hexahedra) in two and three dimensions.
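The mixed-order idea can be illustrated with a minimal sketch of the order-assignment step alone. It assumes a structured quadrilateral mesh on the unit square and a hypothetical rule that marks an element as high order when the level-set values at its corners change sign, i.e. when the zero isocontour passes through it. The mesh-morphing step of the authors' method is not reproduced, and the function name and the orders `p_low=1`, `p_high=4` are illustrative choices.

```python
import numpy as np

def assign_element_orders(n, level_set, p_low=1, p_high=4):
    """Polynomial order per element of an n x n quad mesh on [0, 1]^2.

    Hypothetical rule: elements whose corner level-set values change sign
    (cut by the zero isocontour) get p_high; all others get p_low.
    """
    xs = np.linspace(0.0, 1.0, n + 1)
    X, Y = np.meshgrid(xs, xs, indexing="ij")
    phi = level_set(X, Y)  # level-set values at mesh vertices, shape (n+1, n+1)
    # Stack the four corner values of every element: shape (4, n, n).
    corners = np.stack([phi[:-1, :-1], phi[1:, :-1], phi[:-1, 1:], phi[1:, 1:]])
    cut = (corners.min(axis=0) <= 0.0) & (corners.max(axis=0) >= 0.0)
    return np.where(cut, p_high, p_low)

# Zero isocontour: circle of radius 0.3 centred in the square.
circle = lambda x, y: np.hypot(x - 0.5, y - 0.5) - 0.3
orders = assign_element_orders(20, circle)
```

Only the ring of elements crossed by the circle carries order 4, which is where the extra degrees of freedom actually improve geometric accuracy.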

Faithfully summarizing the knowledge encoded by a deep neural network (DNN) into a few symbolic primitive patterns without losing much information is a core challenge in explainable AI. To this end, Ren et al. (2023c) derived a series of theorems proving that the inference score of a DNN can be explained as a small set of interactions between input variables. However, because such interactions lack generalization power, it is still hard to regard them as faithful primitive patterns encoded by the DNN. Therefore, given different DNNs trained for the same task, we develop a new method to extract interactions that are shared by these DNNs. Experiments show that the extracted interactions better reflect common knowledge shared by different DNNs.
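To make "interactions between input variables" concrete, the sketch below computes Harsanyi (AND) interactions, I(S) = Σ_{T⊆S} (-1)^{|S|-|T|} v(T), for toy score functions v over three input variables, and keeps the interactions that are salient in both models. The toy models and the threshold rule `tau` are hypothetical; the intersection of salient sets is a naive stand-in, not the extraction method proposed in the paper.

```python
from itertools import combinations

def all_subsets(items):
    """All subsets of items, as frozensets."""
    items = sorted(items)
    return [frozenset(c) for k in range(len(items) + 1) for c in combinations(items, k)]

def harsanyi(v, n):
    """Harsanyi dividend I(S) for every subset S of the n input variables."""
    return {S: sum((-1) ** (len(S) - len(T)) * v(T) for T in all_subsets(S))
            for S in all_subsets(range(n))}

def shared_interactions(v_a, v_b, n, tau):
    """Naive rule: keep interactions with |I(S)| > tau in both models."""
    I_a, I_b = harsanyi(v_a, n), harsanyi(v_b, n)
    return {S for S in I_a if abs(I_a[S]) > tau and abs(I_b[S]) > tau}

# Toy "models": v(T) is the score when only the variables in T are present.
v_a = lambda T: 2.0 * ({0, 1} <= T) + 1.0 * (2 in T)
v_b = lambda T: 2.0 * ({0, 1} <= T) + 1.5 * ({1, 2} <= T)
print(shared_interactions(v_a, v_b, 3, tau=0.5))  # {frozenset({0, 1})}
```

Both toy models encode the AND pattern {0, 1}, while each also has a model-specific interaction that the shared set filters out.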

It is challenging to handle infinite- or high-dimensional data when storage, transmission, or computational resources are constrained. In the simplest scenario, where the data consist of a noisy infinite-dimensional signal, we introduce the notion of local *effective dimension* (i.e., pertinent to the underlying signal), and formulate and study the problem of recovering it from noisy data. This problem is related to adaptive quantization, (lossy) data compression, and oracle signal estimation. We apply a Bayesian approach and study frequentist properties of the resulting posterior; a purely frequentist version of the results is also proposed. We derive upper and lower bounds for identifying the local effective dimension, which show that only so-called *one-sided inference* on the local effective dimension can be ensured, whereas *two-sided inference* is in general impossible. We establish the *minimal* conditions under which two-sided inference can be made. Finally, we consider the connection to the problem of smoothness estimation for some traditional smoothness scales (Sobolev scales).
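The abstract does not define the local effective dimension, so the sketch below uses one standard related notion as an assumption: in the Gaussian sequence model Y_i = θ_i + σξ_i, the oracle dimension is the cutoff I that minimizes the risk Σ_{i>I} θ_i² + Iσ² of the projection estimator keeping the first I coefficients. It illustrates the bias-variance trade-off behind an "effective dimension", not the paper's definition.

```python
import numpy as np

def oracle_dimension(theta, sigma):
    """Cutoff I minimizing sum_{i>I} theta_i^2 + I * sigma^2 (assumed notion)."""
    sq = np.asarray(theta, dtype=float) ** 2
    # tail[I] = squared bias from dropping coefficients I+1, ..., n (1-based).
    tail = np.concatenate([np.cumsum(sq[::-1])[::-1], [0.0]])
    # Variance term: each kept coefficient adds sigma^2.
    risk = tail + sigma**2 * np.arange(sq.size + 1)
    return int(np.argmin(risk))

print(oracle_dimension([1.0, 0.5, 0.2, 0.05, 0.01], sigma=0.1))  # 3
```

Coefficients smaller than the noise level σ cost more in variance than they save in bias, so they fall outside the effective dimension.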

Any interactive protocol between a pair of parties can be reliably simulated in the presence of noise with a multiplicative overhead on the number of rounds (Schulman 1996). The reciprocal of the best (least) overhead is called the interactive capacity of the noisy channel. In this work, we present lower bounds on the interactive capacity of the binary erasure channel. Our lower bound improves on the best known bound, due to Ben-Yishai et al. (2021), by roughly a factor of 1.75. The improvement comes from a tighter analysis of the correctness of the simulation protocol using error pattern analysis. More precisely, instead of using the well-known technique of bounding the least number of erasures needed to make the simulation fail, we identify specific erasure patterns that cause simulation failure and bound their probability. We remark that error pattern analysis can be useful for other problems involving stochastic noise, such as bounding the interactive capacity of other channels.
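A toy calculation shows why bounding specific patterns can be much tighter than counting erasures. Assume a hypothetical protocol that fails only when two consecutive transmissions over a binary erasure channel with erasure probability eps are erased. A count-based analysis must charge every outcome with at least two erasures, while a pattern-based analysis charges only outcomes containing an adjacent pair. The failure rule is invented for illustration and is not the simulation protocol analysed in the paper.

```python
from math import comb

def prob_at_least(n, t, eps):
    """P(at least t erasures among n transmissions): the count-based bound."""
    return 1.0 - sum(comb(n, k) * eps**k * (1 - eps) ** (n - k) for k in range(t))

def prob_adjacent_pair(n, eps):
    """P(some two consecutive transmissions are both erased), exact via DP."""
    # Probabilities that a prefix has no adjacent erased pair and ends in
    # a delivered (end0) or an erased (end1) transmission.
    end0, end1 = 1.0 - eps, eps
    for _ in range(n - 1):
        end0, end1 = (end0 + end1) * (1.0 - eps), end0 * eps
    return 1.0 - (end0 + end1)

n, eps = 100, 0.1
print(prob_at_least(n, 2, eps), prob_adjacent_pair(n, eps))
```

For 100 transmissions the count-based bound is nearly 1, whereas the probability of the actual failure pattern is substantially smaller.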

A new sparse semiparametric model is proposed that incorporates the influence of two functional random variables on a scalar response in a flexible and interpretable manner. One of the functional covariates is included through a single-index structure, while the other is included linearly through the high-dimensional vector formed by its discretised observations. For this model, two new algorithms are presented for selecting relevant variables in the linear part and estimating the model. Both procedures exploit the functional origin of the linear covariates. Finite-sample experiments demonstrate the scope of application of both algorithms: the first method is a fast algorithm that avoids, without loss of predictive ability, the considerable computational time required by standard variable selection methods to estimate this model, and the second algorithm completes the set of relevant linear covariates provided by the first, thus improving its predictive efficiency. Some asymptotic results theoretically support both procedures. A real data application demonstrates the applicability of the presented methodology from a predictive perspective, as well as in terms of the interpretability of outputs and low computational cost.
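To make the model structure concrete, the sketch below simulates data from a model of the assumed form y = m(∫θ(t)X(t)dt) + Σ_j β_j Z(t_j) + ε, where X enters through a single index and the discretised curve Z enters linearly with a sparse coefficient vector β. The curve generator, link function m, and sparsity pattern are hypothetical choices, and the sketch does not implement either of the proposed selection algorithms.

```python
import numpy as np

def simulate_model(n, grid, theta, beta, link, noise_sd, rng):
    """Simulate y = link(<theta, X>) + Z @ beta + noise on a uniform grid."""
    p = grid.size
    dt = grid[1] - grid[0]
    # Hypothetical smooth random curves: random combinations of three cosines.
    basis = np.stack([np.cos(np.pi * m * grid) for m in range(1, 4)])  # (3, p)
    X = rng.standard_normal((n, 3)) @ basis
    Z = rng.standard_normal((n, 3)) @ basis + 0.1 * rng.standard_normal((n, p))
    index = X @ theta * dt  # Riemann-sum approximation of the integral
    y = link(index) + Z @ beta + noise_sd * rng.standard_normal(n)
    return X, Z, y

rng = np.random.default_rng(1)
grid = np.linspace(0.0, 1.0, 100)
theta = np.sqrt(2.0) * np.sin(np.pi * grid)
beta = np.zeros(grid.size)
beta[[20, 70]] = [1.5, -2.0]  # only two relevant discretisation points
X, Z, y = simulate_model(200, grid, theta, beta, np.sin, 0.1, rng)
```

Because Z comes from smooth curves, neighbouring columns of Z are highly correlated, which is the kind of functional structure that a selection procedure can exploit.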

In this work, a Generalized Finite Difference (GFD) scheme is presented for effectively computing the numerical solution of a parabolic-elliptic system modelling a bacterial strain with density-suppressed motility. The GFD method is a meshless method known for its simplicity in solving nonlinear boundary value problems over irregular geometries. The paper first introduces the basic elements of the GFD method and then derives an explicit-implicit scheme. Convergence of the method is proven under a bound on the time step, and an algorithm is provided for its computational implementation. Finally, several examples compare the results obtained with a regular mesh and with an irregular cloud of points.
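A core GFD ingredient is approximating derivatives at a node from an irregular star of neighbouring points. The sketch below fits a second-order Taylor expansion by weighted least squares and returns weights for the Laplacian, so that Δu(x₀) ≈ w₀u₀ + Σ_i w_i u_i. The weighting function 1/d³ is a common choice in the GFD literature but is an assumption here, as are the function name and the sample star; the paper's explicit-implicit time scheme is not reproduced.

```python
import numpy as np

def gfd_laplacian_weights(center, neighbors):
    """Weights (w0, w) with Laplacian u(center) ~ w0*u0 + sum_i w[i]*u_i."""
    h = neighbors[:, 0] - center[0]
    k = neighbors[:, 1] - center[1]
    omega = 1.0 / np.hypot(h, k) ** 3  # assumed distance weighting
    # Taylor terms for the unknowns D = (u_x, u_y, u_xx, u_yy, u_xy).
    M = np.column_stack([h, k, h**2 / 2, k**2 / 2, h * k])
    # Weighted least squares: D = pinv(W M) @ W @ (u_i - u_0).
    P = np.linalg.pinv(omega[:, None] * M) * omega[None, :]
    lap = P[2] + P[3]  # rows for u_xx and u_yy
    return -lap.sum(), lap

center = np.array([0.3, 0.4])
star = np.array([[0.40, 0.40], [0.35, 0.50], [0.20, 0.45], [0.25, 0.30],
                 [0.40, 0.32], [0.30, 0.52], [0.18, 0.38], [0.38, 0.48]])
u = lambda x, y: 2 * x**2 + y**2 + x * y + x  # exact Laplacian is 6
w0, w = gfd_laplacian_weights(center, star)
lap_approx = w0 * u(*center) + w @ u(star[:, 0], star[:, 1])
```

For a quadratic function the Taylor expansion is exact, so the weighted least-squares fit recovers the Laplacian up to rounding error.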

Typical pipelines for generating model geometries in computational biomedicine start from images, in which the object is usually considered to be at rest, despite being in mechanical equilibrium under several forces. We refer to the computation of the stress-free geometry as the reference configuration problem, and in this work we extend this formulation to the theory of fully nonlinear poroelastic media. The main steps are (i) writing the equations in terms of the reference porosity and (ii) defining a time-dependent problem whose steady-state solution is the reference porosity. This problem can be computationally challenging, as it may require several hundred iterations to converge, so we propose the use of Anderson acceleration to speed up this procedure. Our evidence shows that this strategy can reduce the number of iterations by up to 80%. In addition, we note that a primal formulation of the nonlinear mass conservation equations is not consistent due to the presence of second-order derivatives of the displacement, an issue we address through suitable mixed formulations. All claims are validated through numerical simulations in both idealized and realistic scenarios.
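Anderson acceleration treats a slowly converging iteration x ← g(x) as a fixed-point problem and extrapolates from the last m iterates. The sketch below is a generic Anderson(m) scheme in the Walker-Ni form, compared against plain Picard iteration on a hypothetical linear contraction; the map g stands in for one step of the time-dependent reference-porosity problem, which is not reproduced here.

```python
import numpy as np

def picard(g, x0, tol=1e-10, max_iter=1000):
    """Plain fixed-point iteration x <- g(x)."""
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        gx = g(x)
        if np.linalg.norm(gx - x) < tol:
            return x, k
        x = gx
    return x, max_iter

def anderson(g, x0, m=5, tol=1e-10, max_iter=1000):
    """Anderson(m) acceleration: x_{k+1} = g(x_k) - dG @ gamma."""
    x = np.asarray(x0, dtype=float)
    G_hist, F_hist = [], []  # recent values of g(x) and residuals f = g(x) - x
    for k in range(max_iter):
        gx = g(x)
        f = gx - x
        if np.linalg.norm(f) < tol:
            return x, k
        G_hist.append(gx)
        F_hist.append(f)
        if len(F_hist) > m + 1:  # keep at most m differences
            G_hist.pop(0)
            F_hist.pop(0)
        if len(F_hist) == 1:
            x = gx
        else:
            dF = np.diff(np.array(F_hist), axis=0).T
            dG = np.diff(np.array(G_hist), axis=0).T
            # gamma minimizes ||f - dF @ gamma||_2.
            gamma = np.linalg.lstsq(dF, f, rcond=None)[0]
            x = gx - dG @ gamma
    return x, max_iter

A = np.diag([0.9, 0.5, -0.3])
b = np.ones(3)
g = lambda x: A @ x + b
x_star = np.linalg.solve(np.eye(3) - A, b)
x_a, it_a = anderson(g, np.zeros(3))
x_p, it_p = picard(g, np.zeros(3))
```

With contraction factor 0.9, Picard needs a couple of hundred iterations, while Anderson acceleration converges in a handful because on linear problems it behaves like a Krylov method.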

A common requirement in science is to store and share large sets of simulation data in an efficient, nested, flexible and human-readable way. Such datasets contain number counts and distributions, i.e. histograms and maps, of arbitrary dimension and variable type, e.g. floating-point number, integer or character string. Modern high-level programming languages such as Perl and Python have associative arrays, known as hashes and dictionaries, respectively, to fulfil this storage need. Low-level languages more commonly used for fast computational simulations, such as C and Fortran, lack this functionality. We present libcdict, a C dictionary library, to solve this problem. Libcdict provides C and Fortran application programming interfaces (APIs) to native dictionaries, called cdicts, as well as functions to load and save cdicts as JSON, which makes them easy to interpret in other software and languages such as Perl, Python and R.

Speech super-resolution (SR) is the task of restoring high-resolution speech from low-resolution input. Existing models employ simulated data and constrained experimental settings, which limit generalization to real-world SR. Predictive models are known to perform well in fixed experimental settings but can introduce artifacts in adverse conditions. Generative models, on the other hand, learn the distribution of the target data and are better able to perform well in unseen conditions. In this study, we propose a novel two-stage approach that combines the strengths of predictive and generative models. Specifically, we employ a diffusion-based model that is conditioned on the output of a predictive model. Our experiments demonstrate that the model significantly outperforms single-stage counterparts and existing strong baselines on benchmark SR datasets. Furthermore, we introduce a repainting technique during diffusion inference, enabling the proposed model to regenerate high-frequency components even in mismatched conditions. An additional contribution is the collection of real SR recordings, made with the same microphone at different native sampling rates, and an evaluation on them. We make this dataset freely accessible to accelerate progress towards real-world speech super-resolution.
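The general repainting idea, as in RePaint-style diffusion inpainting, can be sketched as a merge step applied after each reverse diffusion step. Frequency bins already present in the low-resolution input are overwritten with a forward-noised copy of the observed spectrum at the current noise level, x_known = √ᾱ·x₀ + √(1-ᾱ)·ε, while the remaining high-frequency bins keep the model's output. The sketch assumes real-valued spectrogram features of shape (frequency bins, frames) and DDPM-style noise levels ᾱ; the function names are hypothetical, and the paper's exact repainting procedure may differ.

```python
import numpy as np

def lowband_mask(n_freq, sr_in, sr_out):
    """True for STFT bins below the Nyquist frequency of the low-rate input."""
    freqs = np.linspace(0.0, sr_out / 2.0, n_freq)
    return freqs < sr_in / 2.0

def repaint_merge(x_gen_prev, x0_known, mask, alpha_bar_prev, rng):
    """Replace observed bins with the forward-noised observation at level alpha_bar_prev."""
    noise = rng.standard_normal(x0_known.shape)
    x_known_prev = np.sqrt(alpha_bar_prev) * x0_known + np.sqrt(1.0 - alpha_bar_prev) * noise
    # Broadcast the per-bin mask over time frames.
    return np.where(mask[:, None], x_known_prev, x_gen_prev)

rng = np.random.default_rng(0)
mask = lowband_mask(257, sr_in=8000, sr_out=16000)  # 8 kHz input, 16 kHz target
x0_known = rng.standard_normal((257, 50))           # placeholder observed features
x_gen_prev = rng.standard_normal((257, 50))         # placeholder model output
merged = repaint_merge(x_gen_prev, x0_known, mask, alpha_bar_prev=1.0, rng=rng)
```

At the final step (ᾱ = 1) the observed low band is copied exactly, so the diffusion model only has to synthesise the missing high-frequency bins.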

Inequality measures are quantitative measures that take values in the unit interval, with a value of zero characterizing perfect equality. Although originally proposed to measure economic inequality, they can be applied to several other situations in which one is interested in the mutual variability among a set of observations rather than in their deviations from the mean. While unidimensional measures of inequality, such as the Gini index, are widely known and employed, multidimensional measures, such as Lorenz Zonoids, are difficult to interpret and computationally expensive and, for these reasons, are not widely known. To overcome this problem, in this paper we propose a new scale-invariant multidimensional inequality index, based on the Fourier transform, which exhibits a number of interesting properties and is straightforward to calculate and interpret in the multidimensional case.
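The proposed Fourier-based index is not specified in this summary, so as a point of reference the sketch below computes the classical unidimensional Gini index from mean absolute pairwise differences, G = Σ_i Σ_j |x_i - x_j| / (2n²x̄). This makes explicit the idea of mutual variability rather than deviation from the mean. It assumes non-negative observations with a positive mean.

```python
import numpy as np

def gini(x):
    """Gini index via all pairwise absolute differences (O(n^2) memory)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    pairwise = np.abs(x[:, None] - x[None, :]).sum()
    return pairwise / (2.0 * n**2 * x.mean())

print(gini([1, 1, 1, 1]))  # 0.0: perfect equality
print(gini([0, 0, 0, 1]))  # 0.75: one unit holds everything
```

Multiplying all observations by a positive constant leaves the index unchanged, which is the scale-invariance property that the new multidimensional index also satisfies.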