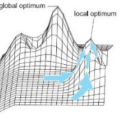

The $(1 + (\lambda,\lambda))$ genetic algorithm (GA) is a relatively recent evolutionary algorithm that also tries to profit from inferior solutions. Rigorous runtime analyses on unimodal fitness functions showed that it can indeed be faster than classical evolutionary algorithms, though on these simple problems the gains were only moderate. In this work, we conduct the first runtime analysis of this algorithm on a multimodal problem class, the jump functions benchmark. We show that with the right parameters, the $(1 + (\lambda,\lambda))$ GA optimizes any jump function with jump size $2 \le k \le n/4$ in expected time $O(n^{(k+1)/2} e^{O(k)} k^{-k/2})$, which significantly, and already for constant $k$, outperforms standard mutation-based algorithms with their $\Theta(n^k)$ runtime and standard crossover-based algorithms with their $\tilde{O}(n^{k-1})$ runtime guarantee. For the isolated problem of leaving the local optimum of jump functions, we determine provably optimal parameters that lead to a runtime of $(n/k)^{k/2} e^{\Theta(k)}$. This suggests some general advice on how to set the parameters of the $(1 + (\lambda,\lambda))$ GA, which might ease the further use of this algorithm.
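To make the setting concrete, the following is a minimal sketch of the jump benchmark and of a basic $(1 + (\lambda,\lambda))$ GA in its usual textbook form: a mutation phase that flips the same number $\ell \sim \mathrm{Bin}(n,p)$ of bits in each of $\lambda$ offspring, a biased uniform crossover phase with bias $c$ towards the mutation winner, and elitist selection. The function names, the tie-breaking rule, the evaluation budget, and the parameter values in the usage part are assumptions made for illustration; they are not the tuned parameters or the exact algorithm variant analyzed in the paper.

```python
import math
import random


def jump(x, k):
    """Jump function with gap size k on a bit list x (to be maximized)."""
    n = len(x)
    ones = sum(x)
    if ones <= n - k or ones == n:
        return k + ones
    # Inside the gap, fitness decreases as the number of ones grows.
    return n - ones


def one_plus_lambda_lambda_ga(n, k, lam, p, c, max_evals, rng):
    """Basic (1 + (lambda, lambda)) GA on jump_k; a sketch, not the paper's exact variant.

    p is the mutation rate, c the crossover bias, lam the offspring population size.
    Returns (best individual, its fitness, number of fitness evaluations used).
    """
    x = [rng.randint(0, 1) for _ in range(n)]
    fx = jump(x, k)
    evals = 1
    optimum = n + k
    while fx < optimum and evals < max_evals:
        # Mutation phase: all lam mutants flip the same number ell of distinct bits.
        ell = sum(rng.random() < p for _ in range(n))
        best_mut, best_mut_f = None, None
        for _ in range(lam):
            y = x[:]
            for i in rng.sample(range(n), ell):
                y[i] ^= 1
            fy = jump(y, k)
            evals += 1
            if best_mut is None or fy > best_mut_f:  # first best wins ties
                best_mut, best_mut_f = y, fy
        # Crossover phase: take each bit from the mutation winner with probability c.
        best_cross, best_cross_f = None, None
        for _ in range(lam):
            y = [m if rng.random() < c else a for a, m in zip(x, best_mut)]
            fy = jump(y, k)
            evals += 1
            if best_cross is None or fy > best_cross_f:
                best_cross, best_cross_f = y, fy
        # Elitist selection: accept the crossover winner if it is not worse.
        if best_cross_f >= fx:
            x, fx = best_cross, best_cross_f
    return x, fx, evals


if __name__ == "__main__":
    n, k = 20, 2
    rate = math.sqrt(k / n)  # illustrative choice for this sketch only
    x, fx, evals = one_plus_lambda_lambda_ga(n, k, 4, rate, rate, 10**6, random.Random(0))
    print("fitness", fx, "of", n + k, "after", evals, "evaluations")
```

Note that the local optimum (any string with exactly $n-k$ ones) has a higher fitness than every point in the gap, so the mutation winner is typically an inferior solution; the crossover phase is what allows the algorithm to still extract the useful bit flips from it.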

Related content

We prove a bound of $O( k (n+m)\log^{d-1})$ on the number of incidences between $n$ points and $m$ axis-parallel boxes in $\mathbb{R}^d$, if no $k$ boxes contain $k$ common points. That is, the incidence graph between the points and the boxes does not contain $K_{k,k}$ as a subgraph. This new bound improves over previous work by a factor of $\log^d n$, for $d > 2$. We also study other variants of the problem. For halfspaces, using shallow cuttings, we get a near-linear bound in two and three dimensions. Finally, we present a near-linear bound for the case of shapes in the plane with low union complexity (e.g., fat triangles).

In recent work (Maierhofer & Huybrechs, 2022, Adv. Comput. Math.), the authors showed that least-squares oversampling can improve the convergence properties of collocation methods for boundary integral equations involving operators of certain pseudo-differential form. The underlying principle is that the discrete method approximates a Bubnov-Galerkin method in a suitable sense. In the present work, we extend this analysis to the case when the integral operator is perturbed by a compact operator $\mathcal{K}$ which is continuous as a map on Sobolev spaces on the boundary, $\mathcal{K}:H^{p}\rightarrow H^{q}$ for all $p,q\in\mathbb{R}$. This study is complicated by the fact that both the test and trial functions in the discrete Bubnov-Galerkin orthogonality conditions are modified over the unperturbed setting. Our analysis guarantees that previous results concerning optimal convergence rates and sufficient rates of oversampling are preserved in the more general case. Indeed, for the first time, this analysis provides a complete explanation of the advantages of least-squares oversampled collocation for boundary integral formulations of the Laplace equation on arbitrary smooth Jordan curves in 2D. Our theoretical results are shown to be in very good agreement with numerical experiments.

We consider the problem of finding nearly optimal solutions of optimization problems with random objective functions. Two concrete problems we consider are (a) optimizing the Hamiltonian of a spherical or Ising $p$-spin glass model, and (b) finding a large independent set in a sparse Erd\H{o}s-R\'{e}nyi graph. The following families of algorithms are considered: (a) low-degree polynomials of the input; (b) low-depth Boolean circuits; (c) the Langevin dynamics algorithm. We show that these families of algorithms fail to produce nearly optimal solutions with high probability. For the case of Boolean circuits, our results improve the state-of-the-art bounds known in circuit complexity theory (although we consider the search problem as opposed to the decision problem). Our proof uses the fact that these models are known to exhibit a variant of the overlap gap property (OGP) of near-optimal solutions. Specifically, for both models, every two solutions whose objectives are above a certain threshold are either close or far from each other. The crux of our proof is that the classes of algorithms we consider exhibit a form of stability. We show by an interpolation argument that stable algorithms cannot overcome the OGP barrier. The stability of Langevin dynamics is an immediate consequence of the well-posedness of stochastic differential equations. The stability of low-degree polynomials and Boolean circuits is established using tools from Gaussian and Boolean analysis -- namely hypercontractivity and total influence, as well as a novel lower bound for random walks avoiding certain subsets. In the case of Boolean circuits, the result also makes use of Linal-Mansour-Nisan's classical theorem. Our techniques apply more broadly to low influence functions and may apply more generally.

We initiate the study of Boolean function analysis on high-dimensional expanders. We give a random-walk based definition of high-dimensional expansion, which coincides with the earlier definition in terms of two-sided link expanders. Using this definition, we describe an analog of the Fourier expansion and the Fourier levels of the Boolean hypercube for simplicial complexes. Our analog is a decomposition into approximate eigenspaces of random walks associated with the simplicial complexes. Our random-walk definition and the decomposition have the additional advantage that they extend to the more general setting of posets, encompassing both high-dimensional expanders and the Grassmann poset, which appears in recent work on the unique games conjecture. We then use this decomposition to extend the Friedgut-Kalai-Naor theorem to high-dimensional expanders. Our results demonstrate that a constant-degree high-dimensional expander can sometimes serve as a sparse model for the Boolean slice or hypercube, and quite possibly additional results from Boolean function analysis can be carried over to this sparse model. Therefore, this model can be viewed as a derandomization of the Boolean slice, containing only $|X(k-1)|=O(n)$ points in contrast to $\binom{n}{k}$ points in the $k$-slice (which consists of all $n$-bit strings with exactly $k$ ones).

Operating systems include many heuristic algorithms designed to improve overall storage performance and throughput. Because such heuristics cannot work well for all conditions and workloads, system designers resorted to exposing numerous tunable parameters to users, thus burdening users with continually optimizing their own storage systems and applications. Storage systems are usually responsible for most latency in I/O-heavy applications, so even a small latency improvement can be significant. Machine learning (ML) techniques promise to learn patterns, generalize from them, and enable optimal solutions that adapt to changing workloads. We propose that ML solutions become a first-class component in OSs and replace manual heuristics to optimize storage systems dynamically. In this paper, we describe our proposed ML architecture, called KML. We developed a prototype KML architecture and applied it to two case studies: optimizing readahead and NFS read-size values. Our experiments show that KML consumes less than 4KB of dynamic kernel memory, has a CPU overhead smaller than 0.2%, and yet can learn patterns and improve I/O throughput by as much as 2.3x and 15x in the two case studies, respectively, even for complex, never-seen-before, concurrently running mixed workloads on different storage devices.

For many applications, drones are required to operate entirely or partially autonomously. To fly completely or partially on their own, drones need access to location services to get navigation commands. While using the Global Positioning System (GPS) is an obvious choice, GPS is not always available, can be spoofed or jammed, and is highly error-prone in indoor and underground environments. Beacon-based ranging is one of the popular methods for localization, especially in indoor environments. In general, localization error in this class of methods is due to two factors: the ranging error and the error induced by the relative geometry between the beacons and the target object to localize. This paper proposes OPTILOD (Optimal Beacon Placement for High-Accuracy Indoor Localization of Drones), an optimization algorithm for the optimal placement of beacons deployed in three-dimensional indoor environments. OPTILOD leverages advances in Evolutionary Algorithms to compute the minimum number of beacons and their optimal placement to minimize the localization error. These problems belong to the Mixed Integer Programming (MIP) class and are both considered NP-Hard. Despite that, OPTILOD can provide multiple optimal beacon configurations that simultaneously minimize the localization error and the number of deployed beacons, and it does so in a time-efficient manner.

We study the optimal sample complexity of learning a Gaussian directed acyclic graph (DAG) from observational data. Our main result establishes the minimax optimal sample complexity for learning the structure of a linear Gaussian DAG model with equal variances to be $n\asymp q\log(d/q)$, where $q$ is the maximum number of parents and $d$ is the number of nodes. We further make comparisons with the classical problem of learning (undirected) Gaussian graphical models, showing that under the equal variance assumption, these two problems share the same optimal sample complexity. In other words, at least for Gaussian models with equal error variances, learning a directed graphical model is not more difficult than learning an undirected graphical model. Our results also extend to more general identification assumptions as well as subgaussian errors.

One of the central problems in machine learning is domain adaptation. Unlike past theoretical work, we consider a new model for subpopulation shift in the input or representation space. In this work, we propose a provably effective framework for domain adaptation based on label propagation. In our analysis, we use a simple but realistic "expansion" assumption, proposed in Wei et al. (2021). Using a teacher classifier trained on the source domain, our algorithm not only propagates to the target domain but also improves upon the teacher. By leveraging existing generalization bounds, we also obtain end-to-end finite-sample guarantees on the entire algorithm. In addition, we extend our theoretical framework to a more general setting of source-to-target transfer based on a third unlabeled dataset, which can be easily applied in various learning scenarios.

The problem of Approximate Nearest Neighbor (ANN) search is fundamental in computer science and has benefited from significant progress in the past couple of decades. However, most work has been devoted to pointsets, whereas complex shapes have not been sufficiently treated. Here, we focus on distance functions between discretized curves in Euclidean space: they appear in a wide range of applications, from road segments to time-series in general dimension. For $\ell_p$-products of Euclidean metrics, for any $p$, we design simple and efficient data structures for ANN, based on randomized projections, which are of independent interest. They serve to solve proximity problems under a notion of distance between discretized curves, which generalizes both discrete Fr\'echet and Dynamic Time Warping distances. These are the most popular and practical approaches to comparing such curves. We offer the first data structures and query algorithms for ANN with arbitrarily good approximation factor, at the expense of increasing space usage and preprocessing time over existing methods. The query time complexity of our algorithms is comparable to or significantly better than that of existing methods, and our algorithms are especially efficient when the length of the curves is bounded.
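For readers unfamiliar with the two curve distances named above, the sketch below computes both exactly with the standard dynamic program over monotone traversals of two point sequences. It only illustrates the distances themselves; it is not the ANN data structure from the abstract, and the helper names (`_traversal_dp`, `discrete_frechet`, `dtw`) are chosen here for illustration. The two distances differ only in how per-step point distances are aggregated along a traversal: by maximum for discrete Fr\'echet and by sum for Dynamic Time Warping.

```python
import math


def _traversal_dp(P, Q, combine):
    """Best cost over all monotone traversals of point sequences P and Q.

    combine(prev_cost, d) aggregates the cost so far with the current
    point-to-point Euclidean distance d; it must be monotone in prev_cost.
    """
    m, n = len(P), len(Q)
    dp = [[math.inf] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            d = math.dist(P[i], Q[j])
            if i == 0 and j == 0:
                dp[i][j] = d
                continue
            # A traversal reaches (i, j) by advancing on P, on Q, or on both.
            best_prev = min(
                dp[i - 1][j] if i > 0 else math.inf,
                dp[i][j - 1] if j > 0 else math.inf,
                dp[i - 1][j - 1] if i > 0 and j > 0 else math.inf,
            )
            dp[i][j] = combine(best_prev, d)
    return dp[m - 1][n - 1]


def discrete_frechet(P, Q):
    """Discrete Frechet distance: minimize the maximum step distance."""
    return _traversal_dp(P, Q, max)


def dtw(P, Q):
    """Dynamic Time Warping distance: minimize the sum of step distances."""
    return _traversal_dp(P, Q, lambda prev, d: prev + d)


if __name__ == "__main__":
    P = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    Q = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]
    print(discrete_frechet(P, Q), dtw(P, Q))
```

Both functions run in time proportional to the product of the two curve lengths, which is why exact pairwise comparison against a large database is costly and approximate nearest-neighbor structures are of interest.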

Image segmentation is an important component of many image understanding systems. It aims to group pixels in a spatially and perceptually coherent manner. Typically, these algorithms have a collection of parameters that control the degree of over-segmentation produced. It still remains a challenge to properly select such parameters for human-like perceptual grouping. In this work, we exploit the diversity of segments produced by different choices of parameters. We scan the segmentation parameter space and generate a collection of image segmentation hypotheses (from highly over-segmented to under-segmented). These are fed into a cost minimization framework that produces the final segmentation by selecting segments that: (1) better describe the natural contours of the image, and (2) are more stable and persistent among all the segmentation hypotheses. We compare our algorithm's performance with state-of-the-art algorithms, showing that we can achieve improved results. We also show that our framework is robust to the choice of segmentation kernel that produces the initial set of hypotheses.