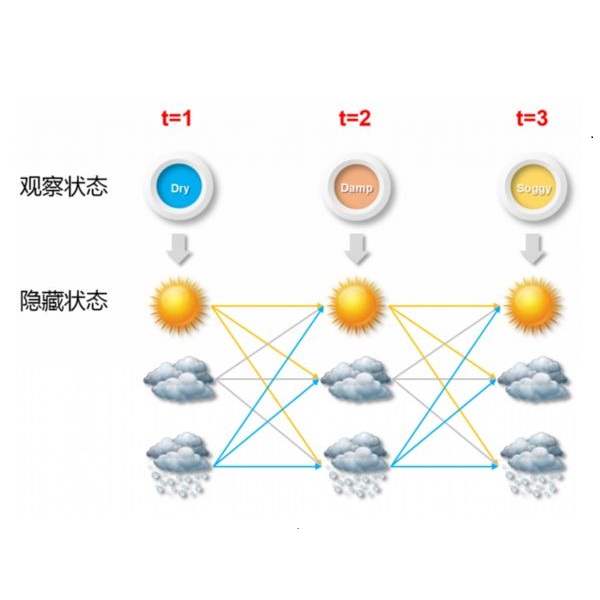

Hidden Markov models (HMMs) are commonly used for disease progression modeling when the true patient health state is not fully known. Since HMMs typically have multiple local optima, incorporating additional patient covariates can improve parameter estimation and predictive performance. To allow for this, we develop hidden Markov recurrent neural networks (HMRNNs), a special case of recurrent neural networks that combine neural networks' flexibility with HMMs' interpretability. The HMRNN can be reduced to a standard HMM, with an identical likelihood function and parameter interpretations, but can also be combined with other predictive neural networks that take patient information as input. The HMRNN estimates all parameters simultaneously via gradient descent. Using a dataset of Alzheimer's disease patients, we demonstrate how the HMRNN can combine an HMM with other predictive neural networks to improve disease forecasting and to offer a novel clinical interpretation compared with a standard HMM trained via expectation-maximization.
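The claim that an HMM likelihood can be computed by a recurrent network rests on the forward algorithm being a recurrence over time steps. The following is a minimal NumPy sketch of that recurrence for a discrete HMM. It is the textbook forward algorithm, not the authors' HMRNN implementation, and the names `pi`, `P`, `Psi`, and `hmm_log_likelihood` are assumptions chosen for illustration.

```python
import numpy as np

def hmm_log_likelihood(pi, P, Psi, obs):
    """Log-likelihood of an observation sequence under a discrete HMM.

    pi  : (K,)   initial state distribution (hypothetical name)
    P   : (K, K) transition matrix, P[i, j] = Pr(next = j | current = i)
    Psi : (K, C) emission matrix, Psi[i, c] = Pr(obs = c | state = i)
    obs : sequence of integer observation codes in [0, C)
    """
    # Joint probability of each hidden state and the first observation.
    alpha = pi * Psi[:, obs[0]]
    log_lik = 0.0
    for y in obs[1:]:
        # Rescale at every step to avoid numerical underflow,
        # accumulating the log of the scaling factors.
        scale = alpha.sum()
        log_lik += np.log(scale)
        # Recurrent update: propagate through the transition matrix,
        # then weight by the emission probability of the new observation.
        alpha = ((alpha / scale) @ P) * Psi[:, y]
    return log_lik + np.log(alpha.sum())
```

Because every step is differentiable in `pi`, `P`, and `Psi`, a quantity of this form could in principle be maximized by gradient descent alongside other network parameters, which is the kind of joint estimation the abstract describes.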

Related content

The essence of multivariate sequential learning is extracting dependencies in data. Data sets such as hourly medical records in intensive care units and multi-frequency phonetic time series often exhibit not only strong serial dependencies in the individual components (the "marginal" memory) but also non-negligible memories in the cross-sectional dependencies (the "joint" memory). Because of the multivariate complexity in the evolution of the joint distribution that underlies the data generating process, we take a data-driven approach and construct a novel recurrent network architecture, termed Memory-Gated Recurrent Networks (mGRN), with gates explicitly regulating two distinct types of memories: the marginal memory and the joint memory. Through a combination of comprehensive simulation studies and empirical experiments on a range of public datasets, we show that our proposed mGRN architecture consistently outperforms state-of-the-art architectures targeting multivariate time series.

In this paper, from a theoretical perspective, we study how powerful graph neural networks (GNNs) can be for learning approximation algorithms for combinatorial problems. To this end, we first establish a new class of GNNs that can solve a strictly wider variety of problems than existing GNNs. Then, we bridge the gap between GNN theory and the theory of distributed local algorithms to theoretically demonstrate that the most powerful GNN can learn approximation algorithms for the minimum dominating set problem and the minimum vertex cover problem with some approximation ratios, and that no GNN can achieve better ratios than these. This paper is the first to elucidate approximation ratios of GNNs for combinatorial problems. Furthermore, we prove that adding coloring or weak-coloring to each node feature improves these approximation ratios. This indicates that preprocessing and feature engineering theoretically strengthen model capabilities.

Deep neural networks and decision trees operate on largely separate paradigms; typically, the former performs representation learning with pre-specified architectures, while the latter is characterised by learning hierarchies over pre-specified features with data-driven architectures. We unite the two via adaptive neural trees (ANTs), a model that incorporates representation learning into edges, routing functions and leaf nodes of a decision tree, along with a backpropagation-based training algorithm that adaptively grows the architecture from primitive modules (e.g., convolutional layers). ANTs allow increased interpretability via hierarchical clustering, e.g., learning meaningful class associations, such as separating natural vs. man-made objects. We demonstrate this on classification and regression tasks, achieving over 99% and 90% accuracy on the MNIST and CIFAR-10 datasets, and outperforming standard neural networks, random forests and gradient boosted trees on the SARCOS dataset. Furthermore, ANT optimisation naturally adapts the architecture to the size and complexity of the training data.

Recurrent neural networks (RNNs) provide state-of-the-art performance in processing sequential data but are memory intensive to train, limiting the flexibility of RNN models which can be trained. Reversible RNNs, i.e. RNNs for which the hidden-to-hidden transition can be reversed, offer a path to reduce the memory requirements of training, as hidden states need not be stored and instead can be recomputed during backpropagation. We first show that perfectly reversible RNNs, which require no storage of the hidden activations, are fundamentally limited because they cannot forget information from their hidden state. We then provide a scheme for storing a small number of bits in order to allow perfect reversal with forgetting. Our method achieves comparable performance to traditional models while reducing the activation memory cost by a factor of 10 to 15. We extend our technique to attention-based sequence-to-sequence models, where it maintains performance while reducing activation memory cost by a factor of 5 to 10 in the encoder, and a factor of 10 to 15 in the decoder.
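To make the idea of a reversible hidden-to-hidden transition concrete, here is a small sketch using additive coupling, a generic reversible construction. It is not claimed to be the architecture of the paper above. The function names, the split of the state into `h1` and `h2`, and the weight shapes are all assumptions for illustration.

```python
import numpy as np

def forward_step(h1, h2, x, W1, W2):
    """One reversible transition using additive coupling (illustrative only).

    h1, h2 : (d,) two halves of the hidden state
    x      : (m,) input at this time step
    W1, W2 : (d, d + m) hypothetical weight matrices
    """
    h1_new = h1 + np.tanh(W1 @ np.concatenate([h2, x]))
    h2_new = h2 + np.tanh(W2 @ np.concatenate([h1_new, x]))
    return h1_new, h2_new

def reverse_step(h1_new, h2_new, x, W1, W2):
    """Recover the previous hidden state from the current one and the input."""
    # Undo the updates in the opposite order to forward_step.
    h2 = h2_new - np.tanh(W2 @ np.concatenate([h1_new, x]))
    h1 = h1_new - np.tanh(W1 @ np.concatenate([h2, x]))
    return h1, h2
```

Since the previous state can be recomputed from the current one, it does not have to be kept in memory for backpropagation. Note that this update only adds to the state and has no multiplicative decay term, which gives some intuition for the forgetting limitation the abstract mentions.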

Automatic neural architecture design has shown its potential in discovering powerful neural network architectures. Existing methods, whether based on reinforcement learning or evolutionary algorithms (EA), conduct architecture search in a discrete space, which is highly inefficient. In this paper, we propose a simple and efficient method for automatic neural architecture design based on continuous optimization. We call this new approach neural architecture optimization (NAO). There are three key components in our proposed approach: (1) An encoder embeds/maps neural network architectures into a continuous space. (2) A predictor takes the continuous representation of a network as input and predicts its accuracy. (3) A decoder maps a continuous representation of a network back to its architecture. The performance predictor and the encoder enable us to perform gradient-based optimization in the continuous space to find the embedding of a new architecture with potentially better accuracy. This better embedding is then decoded to a network by the decoder. Experiments show that the architecture discovered by our method is very competitive for the image classification task on CIFAR-10 and the language modeling task on PTB, outperforming or on par with the best results of previous architecture search methods with a significant reduction in computational resources. Specifically, we obtain a 2.07% test set error rate for the CIFAR-10 image classification task and a test set perplexity of 55.9 for the PTB language modeling task. The best discovered architectures on both tasks are successfully transferred to other tasks such as CIFAR-100 and WikiText-2.

The Conditional Random Field as a Recurrent Neural Network layer is a recently proposed algorithm meant to be placed on top of an existing Fully-Convolutional Neural Network to improve the quality of semantic segmentation. In this paper, we test whether this algorithm, which was shown to improve semantic segmentation for 2D RGB images, is able to improve segmentation quality for 3D multi-modal medical images. We developed an implementation of the algorithm which works for any number of spatial dimensions, input/output image channels, and reference image channels. As far as we know, this is the first publicly available implementation of this sort. We tested the algorithm with two distinct 3D medical imaging datasets and concluded that the performance differences observed were not statistically significant. Finally, in the discussion section of the paper, we examine why this technique transfers poorly from natural images to medical images.

Memory-based neural networks model temporal data by leveraging an ability to remember information for long periods. It is unclear, however, whether they also have an ability to perform complex relational reasoning with the information they remember. Here, we first confirm our intuitions that standard memory architectures may struggle at tasks that heavily involve an understanding of the ways in which entities are connected, i.e., tasks involving relational reasoning. We then address these deficits by using a new memory module, a *Relational Memory Core* (RMC), which employs multi-head dot product attention to allow memories to interact. Finally, we test the RMC on a suite of tasks that may profit from more capable relational reasoning across sequential information, and show large gains in RL domains (e.g. Mini PacMan), program evaluation, and language modeling, achieving state-of-the-art results on the WikiText-103, Project Gutenberg, and GigaWord datasets.
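As an illustration of how multi-head dot product attention lets memory slots interact, the sketch below applies scaled dot-product self-attention across the rows of a memory matrix. It covers only this one step and omits everything else a full memory module would need (input handling, gating, residual connections). The names `M`, `Wq`, `Wk`, `Wv`, and the shapes used are assumptions, not details taken from the paper.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(M, Wq, Wk, Wv):
    """Self-attention across memory slots (illustrative sketch).

    M          : (N, D) memory matrix with N slots
    Wq, Wk, Wv : (H, D, d) hypothetical per-head projection weights
    Returns the updated memory (N, H * d) and attention weights (H, N, N).
    """
    # Project every slot into per-head queries, keys, and values: (H, N, d).
    Q = np.einsum('nd,hde->hne', M, Wq)
    K = np.einsum('nd,hde->hne', M, Wk)
    V = np.einsum('nd,hde->hne', M, Wv)
    # Scaled dot products between every pair of slots, per head.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(Q.shape[-1])
    weights = softmax(scores, axis=-1)
    # Each slot becomes a weighted mix of all slots' values.
    heads = weights @ V
    # Concatenate the heads along the feature dimension.
    updated = heads.transpose(1, 0, 2).reshape(M.shape[0], -1)
    return updated, weights
```

Each row of `weights[h]` sums to one, so every slot's new content is a convex combination of value vectors from all slots, which is one way for stored items to exchange information.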

We propose a Bayesian convolutional neural network built upon Bayes by Backprop and elaborate how this known method can serve as the fundamental construct of our novel, reliable variational inference method for convolutional neural networks. First, we show how Bayes by Backprop can be applied to convolutional layers, where the weights in filters have probability distributions instead of point estimates; second, we show how our proposed framework, applied to various network architectures, achieves performance comparable to convolutional neural networks with point-estimate weights. In the past, Bayes by Backprop has been successfully utilised in feedforward and recurrent neural networks, but not in convolutional ones. This work extends the group of Bayesian neural networks so that it now encompasses all three aforementioned types of network architecture.
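The idea of filter weights having distributions instead of point estimates can be sketched with the reparameterization commonly used with Bayes by Backprop: each weight has a mean and a scale, and a sample is drawn as the mean plus scaled Gaussian noise. The sketch below assumes a Gaussian posterior with a softplus-parameterized standard deviation and, as a simplification, a standard normal prior with a closed-form KL term. The function names and the prior choice are assumptions, not details from the paper.

```python
import numpy as np

def sample_weights(mu, rho, rng):
    """Draw one weight sample w = mu + sigma * eps (illustrative sketch).

    mu, rho : arrays of the same shape, e.g. a convolution filter bank
    rng     : numpy.random.Generator
    """
    # Softplus keeps the standard deviation strictly positive.
    sigma = np.log1p(np.exp(rho))
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps, sigma

def kl_to_standard_normal(mu, sigma):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over all weights.

    Assumes a standard normal prior; other priors need a different term.
    """
    return 0.5 * np.sum(sigma**2 + mu**2 - 1.0 - 2.0 * np.log(sigma))
```

Because the noise `eps` is sampled independently of `mu` and `rho`, gradients of a loss evaluated at the sampled weights can flow back to both parameters, which is what makes training by backpropagation possible.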

In this work, we compare three different modeling approaches for the scores of soccer matches with regard to their predictive performance, based on all matches from the four previous FIFA World Cups (2002 to 2014): Poisson regression models, random forests and ranking methods. While the former two are based on the teams' covariate information, the latter method estimates ability parameters that best reflect the current strength of the teams. Within this comparison, the best-performing prediction methods on the training data turn out to be the ranking methods and the random forests. However, we show that by combining the random forest with the team ability parameters from the ranking methods as an additional covariate, we can improve the predictive power substantially. Finally, this combination of methods is chosen as the final model and, based on its estimates, the FIFA World Cup 2018 is simulated repeatedly and winning probabilities are obtained for all teams. The model slightly favors Spain over the defending champion Germany. Additionally, we provide survival probabilities for all teams at all tournament stages, as well as the most probable tournament outcome.

Partially inspired by successful applications of variational recurrent neural networks, we propose a novel variational recurrent neural machine translation (VRNMT) model in this paper. Unlike variational NMT, VRNMT introduces a series of latent random variables, instead of a single latent variable, to model the translation procedure of a sentence in a generative way. Specifically, the latent random variables are incorporated into the hidden states of the NMT decoder with elements from the variational autoencoder. In this way, these variables are recurrently generated, which enables them to further capture strong and complex dependencies among the output translations at different timesteps. To deal with the challenges of performing efficient posterior inference and large-scale training when incorporating latent variables, we build a neural posterior approximator and equip it with a reparameterization technique to estimate the variational lower bound. Experiments on Chinese-English and English-German translation tasks demonstrate that the proposed model achieves significant improvements over both the conventional and variational NMT models.