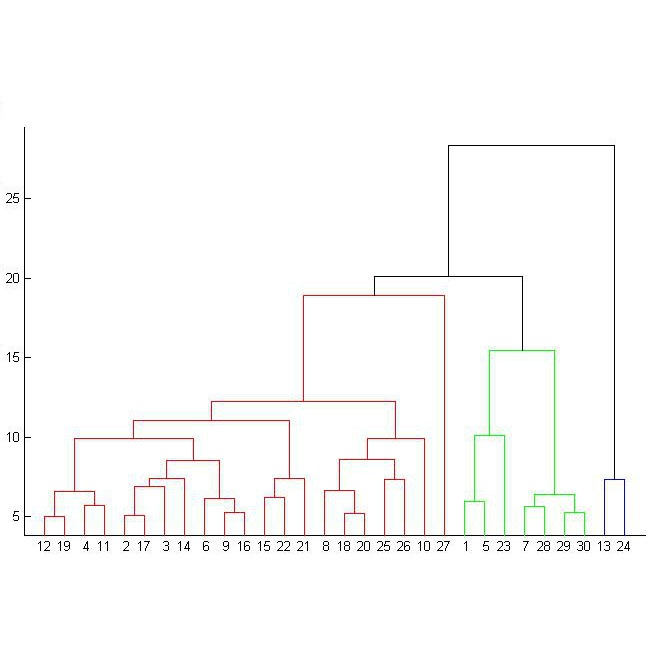

We propose a new generative hierarchical clustering model that learns a flexible tree-based posterior distribution over latent variables. The proposed Tree Variational Autoencoder (TreeVAE) hierarchically divides samples according to their intrinsic characteristics, shedding light on hidden structure in the data. It adapts its architecture to discover the optimal tree for encoding dependencies between latent variables. The proposed tree-based generative architecture permits lightweight conditional inference and improves generative performance by utilizing specialized leaf decoders. We show that TreeVAE uncovers underlying clusters in the data and finds meaningful hierarchical relations between the different groups on a variety of datasets, including real-world imaging data. We present empirically that TreeVAE provides a more competitive log-likelihood lower bound than the sequential counterparts. Finally, due to its generative nature, TreeVAE is able to generate new samples from the discovered clusters via conditional sampling.

相關內容

Mode connectivity is a phenomenon where trained models are connected by a path of low loss. We reframe this in the context of Information Geometry, where neural networks are studied as spaces of parameterized distributions with curved geometry. We hypothesize that shortest paths in these spaces, known as geodesics, correspond to mode-connecting paths in the loss landscape. We propose an algorithm to approximate geodesics and demonstrate that they achieve mode connectivity.

We propose an optimization algorithm for Variational Inference (VI) in complex models. Our approach relies on natural gradient updates where the variational space is a Riemann manifold. We develop an efficient algorithm for Gaussian Variational Inference that implicitly satisfies the positive definite constraint on the variational covariance matrix. Our Exact manifold Gaussian Variational Bayes (EMGVB) provides exact but simple update rules and is straightforward to implement. Due to its black-box nature, EMGVB stands as a ready-to-use solution for VI in complex models. Over five datasets, we empirically validate our feasible approach on different statistical, econometric, and deep learning models, discussing its performance with respect to baseline methods.

In problems such as variable selection and graph estimation, models are characterized by Boolean logical structure such as presence or absence of a variable or an edge. Consequently, false positive and false negative errors can be specified as the number of variables or edges that are incorrectly included/excluded in an estimated model. However, there are several other problems such as ranking, clustering, and causal inference in which the associated model classes do not admit transparent notions of false positive and false negative errors due to the lack of an underlying Boolean logical structure. In this paper, we present a generic approach to endow a collection of models with partial order structure, which leads to a hierarchical organization of model classes as well as natural analogs of false positive and false negative errors. We describe model selection procedures that provide false positive error control in our general setting and we illustrate their utility with numerical experiments.

Given an array A[1: n] of n elements drawn from an ordered set, the sorted range selection problem is to build a data structure that can be used to answer the following type of queries efficiently: Given a pair of indices i, j $ (1\le i\le j \le n)$, and a positive integer k, report the k smallest elements from the sub-array A[i: j] in order. Brodal et al. (Brodal, G.S., Fagerberg, R., Greve, M., and L{\'o}pez-Ortiz, A., Online sorted range reporting. Algorithms and Computation (2009) pp. 173--182) introduced the problem and gave an optimal solution. After O(n log n) time for preprocessing, the query time is O(k). The space used is O(n). In this paper, we propose the only other possible optimal trade-off for the problem. We present a linear space solution to the problem that takes O(k log k) time to answer a range selection query. The preprocessing time is O(n). Moreover, the proposed algorithm reports the output elements one by one in non-decreasing order. Our solution is simple and practical.

Deep Neural Networks (DNNs) deployed to the real world are regularly subject to out-of-distribution (OoD) data, various types of noise, and shifting conceptual objectives. This paper proposes a framework for adapting to data distribution drift modeled by benchmark Continual Learning datasets. We develop and evaluate a method of Continual Learning that leverages uncertainty quantification from Bayesian Inference to mitigate catastrophic forgetting. We expand on previous approaches by removing the need for Monte Carlo sampling of the model weights to sample the predictive distribution. We optimize a closed-form Evidence Lower Bound (ELBO) objective approximating the predictive distribution by propagating the first two moments of a distribution, i.e. mean and covariance, through all network layers. Catastrophic forgetting is mitigated by using the closed-form ELBO to approximate the Minimum Description Length (MDL) Principle, inherently penalizing changes in the model likelihood by minimizing the KL Divergence between the variational posterior for the current task and the previous task's variational posterior acting as the prior. Leveraging the approximation of the MDL principle, we aim to initially learn a sparse variational posterior and then minimize additional model complexity learned for subsequent tasks. Our approach is evaluated for the task incremental learning scenario using density propagated versions of fully-connected and convolutional neural networks across multiple sequential benchmark datasets with varying task sequence lengths. Ultimately, this procedure produces a minimally complex network over a series of tasks mitigating catastrophic forgetting.

In object detection, the cost of labeling is much high because it needs not only to confirm the categories of multiple objects in an image but also to accurately determine the bounding boxes of each object. Thus, integrating active learning into object detection will raise pretty positive significance. In this paper, we propose a classification committee for active deep object detection method by introducing a discrepancy mechanism of multiple classifiers for samples' selection when training object detectors. The model contains a main detector and a classification committee. The main detector denotes the target object detector trained from a labeled pool composed of the selected informative images. The role of the classification committee is to select the most informative images according to their uncertainty values from the view of classification, which is expected to focus more on the discrepancy and representative of instances. Specifically, they compute the uncertainty for a specified instance within the image by measuring its discrepancy output by the committee pre-trained via the proposed Maximum Classifiers Discrepancy Group Loss (MCDGL). The most informative images are finally determined by selecting the ones with many high-uncertainty instances. Besides, to mitigate the impact of interference instances, we design a Focus on Positive Instances Loss (FPIL) to make the committee the ability to automatically focus on the representative instances as well as precisely encode their discrepancies for the same instance. Experiments are conducted on Pascal VOC and COCO datasets versus some popular object detectors. And results show that our method outperforms the state-of-the-art active learning methods, which verifies the effectiveness of the proposed method.

Disentangled Representation Learning (DRL) aims to learn a model capable of identifying and disentangling the underlying factors hidden in the observable data in representation form. The process of separating underlying factors of variation into variables with semantic meaning benefits in learning explainable representations of data, which imitates the meaningful understanding process of humans when observing an object or relation. As a general learning strategy, DRL has demonstrated its power in improving the model explainability, controlability, robustness, as well as generalization capacity in a wide range of scenarios such as computer vision, natural language processing, data mining etc. In this article, we comprehensively review DRL from various aspects including motivations, definitions, methodologies, evaluations, applications and model designs. We discuss works on DRL based on two well-recognized definitions, i.e., Intuitive Definition and Group Theory Definition. We further categorize the methodologies for DRL into four groups, i.e., Traditional Statistical Approaches, Variational Auto-encoder Based Approaches, Generative Adversarial Networks Based Approaches, Hierarchical Approaches and Other Approaches. We also analyze principles to design different DRL models that may benefit different tasks in practical applications. Finally, we point out challenges in DRL as well as potential research directions deserving future investigations. We believe this work may provide insights for promoting the DRL research in the community.

Graph representation learning is to learn universal node representations that preserve both node attributes and structural information. The derived node representations can be used to serve various downstream tasks, such as node classification and node clustering. When a graph is heterogeneous, the problem becomes more challenging than the homogeneous graph node learning problem. Inspired by the emerging information theoretic-based learning algorithm, in this paper we propose an unsupervised graph neural network Heterogeneous Deep Graph Infomax (HDGI) for heterogeneous graph representation learning. We use the meta-path structure to analyze the connections involving semantics in heterogeneous graphs and utilize graph convolution module and semantic-level attention mechanism to capture local representations. By maximizing local-global mutual information, HDGI effectively learns high-level node representations that can be utilized in downstream graph-related tasks. Experiment results show that HDGI remarkably outperforms state-of-the-art unsupervised graph representation learning methods on both classification and clustering tasks. By feeding the learned representations into a parametric model, such as logistic regression, we even achieve comparable performance in node classification tasks when comparing with state-of-the-art supervised end-to-end GNN models.

We investigate a lattice-structured LSTM model for Chinese NER, which encodes a sequence of input characters as well as all potential words that match a lexicon. Compared with character-based methods, our model explicitly leverages word and word sequence information. Compared with word-based methods, lattice LSTM does not suffer from segmentation errors. Gated recurrent cells allow our model to choose the most relevant characters and words from a sentence for better NER results. Experiments on various datasets show that lattice LSTM outperforms both word-based and character-based LSTM baselines, achieving the best results.

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks in an encoder-decoder configuration. The best performing models also connect the encoder and decoder through an attention mechanism. We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. Our model achieves 28.4 BLEU on the WMT 2014 English-to-German translation task, improving over the existing best results, including ensembles by over 2 BLEU. On the WMT 2014 English-to-French translation task, our model establishes a new single-model state-of-the-art BLEU score of 41.8 after training for 3.5 days on eight GPUs, a small fraction of the training costs of the best models from the literature. We show that the Transformer generalizes well to other tasks by applying it successfully to English constituency parsing both with large and limited training data.