Decision-focused (DF) model-based reinforcement learning has recently been introduced as a powerful algorithm that can focus on learning the MDP dynamics that are most relevant for obtaining high returns. While this approach increases the agent's performance by directly optimizing the reward, it does so by learning less accurate dynamics from a maximum likelihood perspective. We demonstrate that when the reward function is defined by preferences over multiple objectives, the DF model may be sensitive to changes in the objective preferences. In this work, we develop the robust decision-focused (RDF) algorithm, which leverages the non-identifiability of DF solutions to learn models that maximize expected returns while also transferring to changes in the preference over multiple objectives. We demonstrate the effectiveness of RDF on two synthetic domains and two healthcare simulators, showing that it significantly improves the robustness of DF model learning to changes in the reward function without compromising training-time return.
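
To make the sensitivity to objective preferences concrete, here is a minimal sketch that assumes the reward is a linear, preference-weighted sum of per-objective rewards. The abstract does not state the scalarization, so this is an assumption, and the action names and numbers are hypothetical. It only shows how the best action can flip when the preference vector changes.

```python
def scalarize(objective_rewards, preference):
    """Combine per-objective rewards into one scalar (assumed linear weighting)."""
    return sum(w * r for w, r in zip(preference, objective_rewards))


def best_action(action_rewards, preference):
    """Pick the action with the highest preference-weighted reward."""
    return max(action_rewards, key=lambda a: scalarize(action_rewards[a], preference))


# Hypothetical per-action rewards as (efficacy, side-effect penalty).
action_rewards = {"treat": (1.0, -0.5), "wait": (0.2, 0.0)}

choice_efficacy_first = best_action(action_rewards, (0.9, 0.1))
choice_safety_first = best_action(action_rewards, (0.2, 0.8))
```

Under the first preference the sketch selects `treat`, and under the second it selects `wait`, so a model tuned only for one preference may miss dynamics that matter under the other.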

Related content

It is desirable in many multi-objective machine learning applications, such as multi-task learning with conflicting objectives and multi-objective reinforcement learning, to find a Pareto solution that matches a given preference of a decision maker. These problems are often large-scale and have gradient information available, but existing algorithms do not handle them well. To tackle this issue, this paper proposes a novel predict-and-correct framework for locating a Pareto solution that fits the preference of a decision maker. In the proposed framework, a constraint function is introduced into the search process to align the solution with a user-specific preference, and it can be optimized simultaneously with multiple objective functions. Experimental results show that our proposed method can efficiently find a particular Pareto solution requested by a decision maker for standard multi-objective benchmarks, multi-task learning, and multi-objective reinforcement learning problems with thousands of decision variables. Code is available at: //github.com/xzhang2523/pmgda. Our code is currently provided in the attached pgmda.rar file and will be open-sourced after publication.

Previous in-context learning (ICL) research has focused on tasks such as classification, machine translation, text2table, etc., while studies on whether ICL can improve human-like dialogue generation are scarce. Our work fills this gap by systematically investigating the ICL capabilities of large language models (LLMs) in persona-based dialogue generation, conducting extensive experiments on high-quality real human Chinese dialogue datasets. From experimental results, we draw three conclusions: 1) adjusting prompt instructions is the most direct, effective, and economical way to improve generation quality; 2) randomly retrieving demonstrations (demos) achieves the best results, possibly due to the greater diversity and the amount of effective information; counter-intuitively, retrieving demos with a context identical to the query performs the worst; 3) even when we destroy the multi-turn associations and single-turn semantics in the demos, increasing the number of demos still improves dialogue performance, proving that LLMs can learn from corrupted dialogue demos. Previous explanations of the ICL mechanism, such as $n$-gram induction head, cannot fully account for this phenomenon.

Is the tokenization preferred by humans also preferred by machine-learning (ML) models? This study examines the relation between the tokenization preferred by humans (appropriateness and readability) and the one preferred by ML models (performance on an NLP task). The question texts of a Japanese commonsense question-answering dataset are tokenized with six different tokenizers, and the performance of human annotators and ML models is compared. Furthermore, we analyze the relations among the answer performance of humans and ML models, the appropriateness of the tokenization for humans, and human response time to questions. This study provides quantitative evidence that the tokenizations preferred by humans and by ML models are not necessarily the same. The result also implies that existing methods using language models for tokenization could be a good compromise for both humans and ML models.

Federated learning (FL) has emerged as a prevalent distributed machine learning scheme that enables collaborative model training without aggregating raw data. Cloud service providers further embrace Federated Learning as a Service (FLaaS), allowing data analysts to execute their FL training pipelines over differentially-protected data. Due to the intrinsic properties of differential privacy, the enforced privacy level on data blocks can be viewed as a privacy budget that requires careful scheduling to cater to diverse training pipelines. Existing privacy budget scheduling studies prioritize either efficiency or fairness individually. In this paper, we propose DPBalance, a novel privacy budget scheduling mechanism that jointly optimizes both efficiency and fairness. We first develop a comprehensive utility function incorporating data analyst-level dominant shares and FL-specific performance metrics. A sequential allocation mechanism is then designed using the Lagrange multiplier method and effective greedy heuristics. We theoretically prove that DPBalance satisfies Pareto Efficiency, Sharing Incentive, Envy-Freeness, and Weak Strategy Proofness. We also theoretically prove the existence of a fairness-efficiency tradeoff in privacy budgeting. Extensive experiments demonstrate that DPBalance outperforms state-of-the-art solutions, achieving an average efficiency improvement of $1.44\times \sim 3.49 \times$, and an average fairness improvement of $1.37\times \sim 24.32 \times$.
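
As a rough illustration of the "dominant share" mentioned above: in dominant-resource-style fairness, an analyst's dominant share is usually taken to be the largest fraction of any single resource that they hold, here the privacy budget of a data block. The sketch below assumes that standard definition. The function name, block names, and numbers are hypothetical and are not taken from DPBalance.

```python
def dominant_share(allocated, capacity):
    """Largest fraction of any data block's privacy budget held by one analyst.

    allocated: dict mapping block name -> epsilon granted to the analyst
    capacity:  dict mapping block name -> total epsilon budget of that block
    (both layouts are assumptions made for this sketch)
    """
    return max(allocated.get(block, 0.0) / capacity[block] for block in capacity)


# Hypothetical example: two data blocks with total budgets 1.0 and 2.0.
capacity = {"block_a": 1.0, "block_b": 2.0}
analyst = {"block_a": 0.25, "block_b": 1.0}
share = dominant_share(analyst, capacity)
```

Here the analyst holds a quarter of `block_a` and half of `block_b`, so the dominant share is the larger of the two fractions.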

Operator learning, which aims to learn a mapping between function spaces, has received significant attention in recent years. Prior works have proposed deep neural networks (DNNs) for learning such a mapping, enabling the learning of solution operators of partial differential equations (PDEs). However, these works still struggle to learn dynamics that obey the laws of physics. This paper proposes Energy-consistent Neural Operators (ENOs), a general framework for learning solution operators of PDEs that follow the energy conservation or dissipation law from observed solution trajectories. We introduce a novel penalty function for training, inspired by the energy-based theory of physics, in which the energy functional is modeled by another DNN. This allows one to bias the outputs of the DNN-based solution operators toward energetic consistency without explicit PDEs. Experiments on multiple physical systems show that ENO outperforms existing DNN models in predicting solutions from data, especially in super-resolution settings.

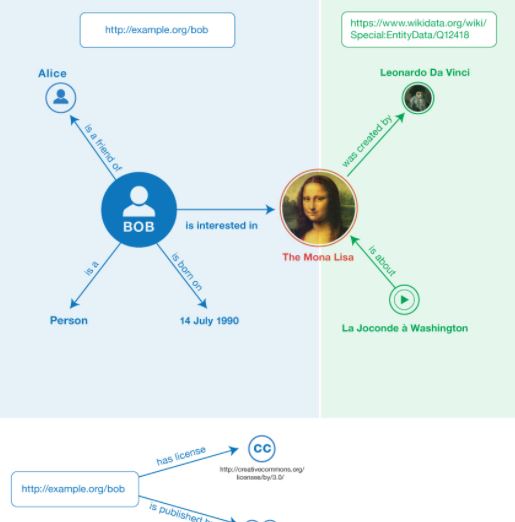

Link prediction on knowledge graphs (KGs) is a key research topic. Previous work mainly focused on binary relations, paying less attention to higher-arity relations although they are ubiquitous in real-world KGs. This paper considers link prediction on n-ary relational facts and proposes a graph-based approach to this task. The key to our approach is to represent the n-ary structure of a fact as a small heterogeneous graph, and to model this graph with edge-biased fully-connected attention. The fully-connected attention captures universal inter-vertex interactions, while edge-aware attentive biases specifically encode the graph structure and its heterogeneity. In this fashion, our approach fully models global and local dependencies in each n-ary fact, and hence can more effectively capture the associations therein. Extensive evaluation verifies the effectiveness and superiority of our approach. It performs substantially and consistently better than the current state of the art across a variety of n-ary relational benchmarks. Our code is publicly available.
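
The idea of edge-biased fully-connected attention can be sketched as follows. This is a simplified illustration, not the authors' implementation: it assumes a single attention head and a scalar bias per edge type added to each attention logit, and every name in it is hypothetical.

```python
import math


def edge_biased_attention(queries, keys, values, edge_type, edge_bias):
    """Fully-connected attention whose logits are shifted by a per-edge-type bias.

    queries, keys, values: lists of equal-length float vectors, one per vertex
    edge_type[i][j]:       type label of the edge from vertex i to vertex j
    edge_bias:             dict mapping edge type -> scalar bias (assumed form)
    """
    dim = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Scaled dot-product logit plus the bias of the edge connecting i and j.
        logits = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
            + edge_bias[edge_type[i][j]]
            for j, k in enumerate(keys)
        ]
        # Numerically stable softmax over all vertices (fully connected).
        top = max(logits)
        weights = [math.exp(logit - top) for logit in logits]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Attention-weighted sum of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs


# Hypothetical two-vertex example with zero queries, so only the bias matters.
zero_q = [[0.0, 0.0], [0.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [3.0, 2.0]]
uniform = edge_biased_attention(zero_q, keys, values, [[0, 0], [0, 0]], {0: 0.0})
biased = edge_biased_attention(zero_q, keys, values, [[0, 1], [1, 0]], {0: 0.0, 1: 50.0})
```

With no bias, each vertex attends uniformly to all vertices. With a large bias on one edge type, attention concentrates on the vertices reached through edges of that type, which is how the bias injects graph structure into otherwise fully-connected attention.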

There has recently been a surge of interest in developing a new class of deep learning (DL) architectures that integrate an explicit time dimension as a fundamental building block of learning and representation mechanisms. In turn, many recent results show that topological descriptors of the observed data, which encode information on the shape of the dataset in a topological space at different scales (that is, the persistent homology of the data), may contain important complementary information, improving both the performance and the robustness of DL. As a convergence of these two emerging ideas, we propose to enhance DL architectures with the most salient time-conditioned topological information of the data and introduce the concept of zigzag persistence into time-aware graph convolutional networks (GCNs). Zigzag persistence provides a systematic and mathematically rigorous framework to track the most important topological features of the observed data that tend to manifest themselves over time. To integrate the extracted time-conditioned topological descriptors into DL, we develop a new topological summary, the zigzag persistence image, and derive its theoretical stability guarantees. We validate the new GCNs with a time-aware zigzag topological layer (Z-GCNETs) in application to traffic forecasting and Ethereum blockchain price prediction. Our results indicate that Z-GCNET outperforms 13 state-of-the-art methods on 4 time series datasets.

Recently, ensembles have been applied to deep metric learning to yield state-of-the-art results. Deep metric learning aims to learn deep neural networks for feature embeddings whose distances satisfy given constraints. In deep metric learning, an ensemble takes the average of the distances learned by multiple learners. An important aspect of an ensemble is that the learners should be diverse in their feature embeddings. To this end, we propose an attention-based ensemble, which uses multiple attention masks so that each learner can attend to different parts of the object. We also propose a divergence loss, which encourages diversity among the learners. The proposed method is applied to the standard benchmarks of deep metric learning, and experimental results show that it outperforms the state-of-the-art methods by a significant margin on image retrieval tasks.
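
The two ingredients described above, averaging distances across learners and penalizing learners that embed the same input too similarly, can be sketched as below. The abstract does not give the exact loss, so the hinge on squared Euclidean distance and the `margin` parameter are assumptions made for illustration, and all names are hypothetical.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two embedding vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def ensemble_distance(embeddings_x, embeddings_y):
    """Average, over learners, of each learner's distance between inputs x and y."""
    pairs = list(zip(embeddings_x, embeddings_y))
    return sum(squared_distance(ex, ey) for ex, ey in pairs) / len(pairs)


def divergence_loss(embeddings, margin=1.0):
    """Hinge penalty on learner pairs whose embeddings of one input are too close.

    embeddings: one embedding of the same input per learner (assumed layout)
    """
    penalties = []
    for p in range(len(embeddings)):
        for q in range(p + 1, len(embeddings)):
            gap = squared_distance(embeddings[p], embeddings[q])
            penalties.append(max(0.0, margin - gap))
    # With fewer than two learners there is nothing to diversify.
    return sum(penalties) / len(penalties) if penalties else 0.0


# Hypothetical embeddings of one image from two learners.
collapsed = divergence_loss([[1.0, 0.0], [1.0, 0.0]])  # identical learners
diverse = divergence_loss([[1.0, 0.0], [0.0, 1.0]])    # well-separated learners
```

In this sketch, identical learners incur the full margin as a penalty, while learners whose embeddings are at least `margin` apart in squared distance incur none.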

Deep learning (DL) based semantic segmentation methods have provided state-of-the-art performance in the last few years. More specifically, these techniques have been successfully applied to medical image classification, segmentation, and detection tasks. One deep learning technique, U-Net, has become one of the most popular for these applications. In this paper, we propose a Recurrent Convolutional Neural Network (RCNN) based on U-Net as well as a Recurrent Residual Convolutional Neural Network (RRCNN) based on U-Net, which are named RU-Net and R2U-Net respectively. The proposed models utilize the power of U-Net, Residual Network, and RCNN. These architectures have several advantages for segmentation tasks. First, a residual unit helps when training deep architectures. Second, feature accumulation with recurrent residual convolutional layers ensures better feature representation for segmentation tasks. Third, it allows us to design a better U-Net architecture that has the same number of network parameters but better performance for medical image segmentation. The proposed models are tested on three benchmark datasets: blood vessel segmentation in retina images, skin cancer segmentation, and lung lesion segmentation. The experimental results show superior performance on segmentation tasks compared to equivalent models including U-Net and residual U-Net (ResU-Net).

While existing machine learning models have achieved great success in sentiment classification, they typically do not explicitly capture sentiment-oriented word interaction, which can lead to poor results for fine-grained analysis at the snippet level (a phrase or sentence). Factorization Machines provide a possible approach to learning element-wise interaction for recommender systems, but they are not directly applicable to our task because they cannot model contexts and word sequences. In this work, we develop two Position-aware Factorization Machines which consider word interaction, context, and position information. Such information is jointly encoded in a set of sentiment-oriented word interaction (SWI) vectors. Compared to traditional word embeddings, SWI vectors explicitly capture sentiment-oriented word interaction and simplify parameter learning. Experimental results show that while the proposed models perform comparably to state-of-the-art methods for document-level classification, they benefit snippet/sentence-level sentiment analysis.