In this study, we address the challenge of using energy-based models to produce high-quality, label-specific data in complex structured datasets, such as population genetics, RNA, or protein sequence data. Traditional training methods encounter difficulties due to inefficient Markov chain Monte Carlo mixing, which reduces the diversity of synthetic data and increases generation times. To address these issues, we use a novel training algorithm that exploits non-equilibrium effects. This approach, applied to the Restricted Boltzmann Machine, improves the model's ability to correctly classify samples and generate high-quality synthetic data in only a few sampling steps. The effectiveness of this method is demonstrated by its successful application to four different types of data: handwritten digits, mutations of human genomes classified by continental origin, functionally characterized sequences of an enzyme protein family, and homologous RNA sequences from specific taxonomies.

Related content

We study the role of information and access in capacity-constrained selection problems with fairness concerns. We develop a theoretical statistical discrimination framework, where each applicant has multiple features and is potentially strategic. The model formalizes the trade-off between the (potentially positive) informational role of a feature and its (negative) exclusionary nature when members of different social groups have unequal access to this feature. Our framework finds a natural application to recent policy debates on dropping standardized testing in college admissions. Our primary takeaway is that the decision to drop a feature (such as test scores) cannot be made without the joint context of the information provided by other features and how the requirement affects the applicant pool composition. Dropping a feature may exacerbate disparities by decreasing the amount of information available for each applicant, especially those from non-traditional backgrounds. However, in the presence of access barriers to a feature, the interaction between the informational environment and the effect of access barriers on the applicant pool size becomes highly complex. In this case, we provide a threshold characterization regarding when removing a feature improves both academic merit and diversity. Finally, using calibrated simulations in both the strategic and non-strategic settings, we demonstrate the presence of practical instances where the decision to eliminate standardized testing improves or worsens all metrics.

In this study, we propose an automatic diary generation system that uses information from past joint experiences, with the aim of increasing the favorability of robots through experiences shared between humans and robots. To verbalize the robot's memory, the system applies a large language model, a rapidly developing technology. Since this model has no memories of experiences itself, it generates a diary from the information it receives about joint experiences. As an experiment, a robot and a human went for a walk, and a diary was generated from the interaction and dialogue history. The proposed diary achieved high scores for comfort and performance in the evaluation of the robot's impression. In a survey on which diaries gave more favorable impressions, diaries with information on joint experiences were selected more often than diaries without such information, because they showed more cooperation between the robot and the human and more intimacy from the robot.

Copy-move forgery on speech (CMF), coupled with post-processing techniques, presents a great challenge to the forensic detection and localization of tampered areas. Most existing CMF detection approaches require pre-segmentation of speech to facilitate similarity calculations among the segments. However, these approaches usually suffer from uncontrollable computational complexity and are sensitive to words that are spoken multiple times within a speech recording. To address these issues, we propose a local feature tensors-based CMF detection algorithm that transforms duplicate detection and localization into a special tensor-matching procedure, supported by a complete theoretical analysis. Through extensive experimentation, we demonstrate that our method is computationally efficient and robust against post-processing techniques. Notably, it can effectively and blindly detect tampered segments, even those as short as a fraction of a second. These advantages highlight the promising potential of our approach for practical applications.

Research reproducibility, i.e., rerunning analyses on original data to replicate the results, is paramount for guaranteeing scientific validity. However, reproducibility is often very challenging, especially in research fields where multi-disciplinary teams are involved, such as child-robot interaction (CRI). This paper presents a systematic review of the last three years (2020-2022) of research in CRI under the lens of reproducibility, by analysing the field for transparency in reporting. Across a total of 325 studies, we found deficiencies in reporting demographics (e.g. age of participants), study design and implementation (e.g. length of interactions), and open data (e.g. maintaining an active code repository). From this analysis, we distill a set of guidelines and provide a checklist for systematically reporting CRI studies, to help guide researchers in improving reproducibility in CRI and beyond.

In this paper, we propose a probabilistic reduced-dimensional vector autoregressive (PredVAR) model with oblique projections. This model partitions the measurement space into a dynamic subspace and a static subspace that do not need to be orthogonal. The partition allows us to apply an oblique projection to extract dynamic latent variables (DLVs) from high-dimensional data with maximized predictability. We develop an alternating iterative PredVAR algorithm that exploits the interaction between updating the latent VAR dynamics and estimating the oblique projection, using expectation maximization (EM) and a statistical constraint. In addition, the noise covariance matrices are estimated as a natural outcome of the EM method. A simulation case study of the nonlinear Lorenz oscillation system illustrates the advantages of the proposed approach over two alternatives.
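
As a generic linear-algebra illustration of the oblique projection mentioned above (not the PredVAR algorithm itself), the sketch below builds a projector onto a "dynamic" subspace along a non-orthogonal "static" subspace. The function name `oblique_projector` and the toy bases are hypothetical, and the sketch assumes the two bases together span the whole measurement space.

```python
import numpy as np


def oblique_projector(dynamic_basis: np.ndarray, static_basis: np.ndarray) -> np.ndarray:
    """Return the projector onto span(dynamic_basis) along span(static_basis).

    Assumption for this sketch: stacking the two bases side by side gives a
    square, invertible matrix, i.e. the subspaces are complementary.
    """
    full = np.hstack([dynamic_basis, static_basis])
    if full.shape[0] != full.shape[1]:
        raise ValueError("the two bases must together span the whole space")
    k = dynamic_basis.shape[1]
    # The first k rows of the inverse map a measurement to its coordinates
    # in the dynamic subspace; these play the role of latent variables.
    to_latent = np.linalg.inv(full)[:k, :]
    return dynamic_basis @ to_latent


# Toy 2-D example: the static direction (1, 1) is not orthogonal to the
# dynamic direction (1, 0).
A = np.array([[1.0], [0.0]])
B = np.array([[1.0], [1.0]])
P = oblique_projector(A, B)
print(P)
```

Unlike an orthogonal projector, the resulting matrix is idempotent but not symmetric, which is what allows the two subspaces to be non-orthogonal.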

In this work, we propose a novel framework for achieving robotic autonomy in orchards. It consists of two key steps: perception and semantic mapping. In the perception step, we introduce a 3D detection method that accurately identifies objects directly on point cloud maps. In the semantic mapping step, we develop a mapping module that constructs a visibility graph map by incorporating object-level information and terrain analysis. By combining these two steps, our framework improves the autonomy of agricultural robots in orchard environments. The accurate detection of objects and the construction of a semantic map enable the robot to navigate autonomously, perform tasks such as fruit harvesting, and acquire actionable information for efficient agricultural production.

In this paper, we address the issue of fairness in preference-based reinforcement learning (PbRL) in the presence of multiple objectives. The main objective is to design control policies that can optimize multiple objectives while treating each objective fairly. Toward this objective, we design a new fairness-induced preference-based reinforcement learning method, FPbRL. The main idea of FPbRL is to learn vector reward functions associated with multiple objectives via new welfare-based preferences, rather than the reward-based preferences used in PbRL, coupled with policy learning via maximizing a generalized Gini welfare function. Finally, we provide experimental studies on three different environments to show that the proposed FPbRL approach can achieve both efficiency and equity when learning effective and fair policies.
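
A generalized Gini welfare function is commonly defined as a weighted sum of the per-objective values sorted in ascending order, with non-negative, non-increasing weights, so that the worst-off objective counts most. The minimal sketch below assumes that standard definition; the function name `generalized_gini` and the example weights are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def generalized_gini(values: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of values sorted ascending, with non-increasing weights."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative")
    if any(a < b for a, b in zip(weights, weights[1:])):
        raise ValueError("weights must be non-increasing")
    ordered = sorted(values)  # the smallest value is paired with the largest weight
    return sum(w * v for w, v in zip(weights, ordered))


weights = [0.5, 0.3, 0.2]  # illustrative weights only
unequal = generalized_gini([3.0, 1.0, 2.0], weights)
equal = generalized_gini([2.0, 2.0, 2.0], weights)
print(unequal, equal)
```

Both return vectors have the same total, but the evenly spread one scores higher, which is the sense in which maximizing this function favours equitable treatment of the objectives.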

With the rise of powerful pre-trained vision-language models like CLIP, it becomes essential to investigate ways to adapt these models to downstream datasets. A recently proposed method named Context Optimization (CoOp) introduces the concept of prompt learning -- a recent trend in NLP -- to the vision domain for adapting pre-trained vision-language models. Specifically, CoOp turns context words in a prompt into a set of learnable vectors and, with only a few labeled images for learning, can achieve huge improvements over intensively-tuned manual prompts. In our study we identify a critical problem of CoOp: the learned context is not generalizable to wider unseen classes within the same dataset, suggesting that CoOp overfits base classes observed during training. To address the problem, we propose Conditional Context Optimization (CoCoOp), which extends CoOp by further learning a lightweight neural network to generate for each image an input-conditional token (vector). Compared to CoOp's static prompts, our dynamic prompts adapt to each instance and are thus less sensitive to class shift. Extensive experiments show that CoCoOp generalizes much better than CoOp to unseen classes, even showing promising transferability beyond a single dataset; and yields stronger domain generalization performance as well. Code is available at //github.com/KaiyangZhou/CoOp.
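
The difference between static and input-conditional prompts can be sketched in a few lines. This is a toy NumPy illustration, not the authors' implementation: it assumes the lightweight network is a two-layer MLP and that its output token is added to every learnable context vector. All names (`meta_net`, `conditional_context`) and dimensions are hypothetical.

```python
import numpy as np


def meta_net(image_feature, w1, b1, w2, b2):
    """Tiny two-layer MLP mapping an image feature to one conditional token."""
    hidden = np.maximum(0.0, image_feature @ w1 + b1)  # ReLU
    return hidden @ w2 + b2


def conditional_context(context, image_feature, params):
    """Shift every learnable context vector by the image-conditional token."""
    token = meta_net(image_feature, *params)  # shape: (ctx_dim,)
    return context + token  # broadcasts over the context vectors


rng = np.random.default_rng(0)
feat_dim, hidden_dim, ctx_len, ctx_dim = 8, 4, 3, 6
params = (
    rng.normal(size=(feat_dim, hidden_dim)),
    np.zeros(hidden_dim),
    rng.normal(size=(hidden_dim, ctx_dim)),
    np.zeros(ctx_dim),
)
context = rng.normal(size=(ctx_len, ctx_dim))  # static, CoOp-style context
image_feature = rng.normal(size=feat_dim)
dynamic_context = conditional_context(context, image_feature, params)
print(dynamic_context.shape)
```

In a static prompt the `context` array is shared by all images; here each image yields its own shifted copy, which is the instance-level adaptation the abstract describes.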

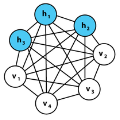

With the advances of data-driven machine learning research, a wide variety of prediction problems have been tackled. It has become critical to explore how machine learning, and specifically deep learning methods, can be exploited to analyse healthcare data. A major limitation of existing methods has been the focus on grid-like data; however, the structure of physiological recordings is often irregular and unordered, which makes it difficult to conceptualise them as a matrix. As such, graph neural networks have attracted significant attention by exploiting implicit information that resides in a biological system, with interactive nodes connected by edges whose weights can be either temporal associations or anatomical junctions. In this survey, we thoroughly review the different types of graph architectures and their applications in healthcare. We provide an overview of these methods in a systematic manner, organized by their domain of application, including functional connectivity, anatomical structure, and electrical-based analysis. We also outline the limitations of existing techniques and discuss potential directions for future research.

In this paper, we propose jointly learning attention and recurrent neural network (RNN) models for multi-label classification. While approaches based on the use of either model exist (e.g., for the task of image captioning), training such existing network architectures typically requires pre-defined label sequences. For multi-label classification, it would be desirable to have a robust inference process, so that prediction errors do not propagate and degrade performance. Our proposed model uniquely integrates attention and Long Short-Term Memory (LSTM) models, which not only addresses the above problem but also allows one to identify visual objects of interest with varying sizes without prior knowledge of a particular label ordering. More importantly, label co-occurrence information can be jointly exploited by our LSTM model. Finally, by advancing the technique of beam search, prediction of multiple labels can be efficiently achieved by our proposed network model.