Unmanned aerial vehicles (UAVs) are increasingly used in surveillance and traffic monitoring thanks to their high mobility and ability to cover areas at different altitudes and locations. One of the major challenges is to use aerial images to accurately detect and count cars in real time for traffic monitoring purposes. Several deep learning techniques based on convolutional neural networks (CNNs) were recently proposed for real-time classification and recognition in computer vision. However, their performance depends on the scenarios in which they are used. In this paper, we investigate the performance of two state-of-the-art CNN algorithms, namely Faster R-CNN and YOLOv3, in the context of car detection from aerial images. We trained and tested these two models on a large car dataset taken from UAVs. We demonstrate that YOLOv3 outperforms Faster R-CNN in sensitivity and processing time, although the two are comparable in precision.
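For readers unfamiliar with the two metrics named above, the following is a minimal sketch of how precision and sensitivity (recall) are typically computed from detection counts. The function name and the example counts are hypothetical and are not taken from the paper's experiments.

```python
from typing import Tuple


def precision_and_sensitivity(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Return (precision, sensitivity) from detection counts.

    tp: detected cars that match a ground-truth car (true positives)
    fp: detections that match no ground-truth car (false positives)
    fn: ground-truth cars that were missed (false negatives)
    """
    # Precision: fraction of detections that are correct.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    # Sensitivity (recall): fraction of real cars that were found.
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, sensitivity


# Hypothetical counts, chosen only to illustrate the arithmetic.
p, s = precision_and_sensitivity(tp=90, fp=10, fn=30)
print(f"precision={p:.2f}, sensitivity={s:.2f}")
```

Two detectors can therefore have similar precision while differing in sensitivity: the first depends on false positives, the second on missed objects.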

Related content

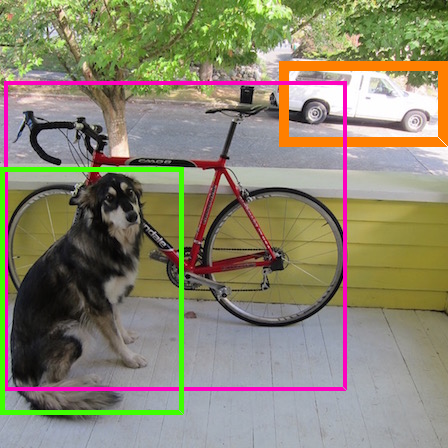

Detecting objects in aerial images is challenging for at least two reasons: (1) target objects such as pedestrians are very small in pixels, making them hard to distinguish from the surrounding background; and (2) targets are in general sparsely and non-uniformly distributed, making detection very inefficient. In this paper, we address both issues, inspired by the observation that these targets are often clustered. In particular, we propose a Clustered Detection (ClusDet) network that unifies object clustering and detection in an end-to-end framework. The key components of ClusDet include a cluster proposal sub-network (CPNet), a scale estimation sub-network (ScaleNet), and a dedicated detection network (DetecNet). Given an input image, CPNet produces object cluster regions and ScaleNet estimates object scales for these regions. Then, each scale-normalized cluster region is fed into DetecNet for object detection. ClusDet has several advantages over previous solutions: (1) it greatly reduces the number of chips for final object detection and hence achieves high running-time efficiency; (2) the cluster-based scale estimation is more accurate than previously used single-object-based ones, and hence effectively improves the detection of small objects; and (3) the final DetecNet is dedicated to clustered regions and implicitly models the prior context information so as to boost detection accuracy. The proposed method is tested on three popular aerial image datasets: VisDrone, UAVDT and DOTA. In all experiments, ClusDet achieves promising performance in comparison with state-of-the-art detectors. Code will be available at \url{//github.com/fyangneil}.

Detection and classification of objects in aerial imagery have several applications, such as urban planning, crop surveillance, and traffic surveillance. However, due to the lower resolution of the objects and the effect of noise in aerial images, extracting distinguishing features for the objects is a challenge. We evaluate CenterNet, a state-of-the-art method for real-time 2D object detection, on the VisDrone2019 dataset. We evaluate the performance of the model with different backbone networks in conjunction with varying resolutions during training and testing.

Object detection, as one of the most fundamental and challenging problems in computer vision, has received great attention in recent years. Its development over the past two decades can be regarded as an epitome of computer vision history. If we think of today's object detection as a technical aesthetics under the power of deep learning, then turning back the clock 20 years we would witness the wisdom of the cold weapon era. This paper extensively reviews 400+ papers on object detection in the light of its technical evolution, spanning over a quarter-century (from the 1990s to 2019). A number of topics are covered in this paper, including the milestone detectors in history, detection datasets, metrics, fundamental building blocks of the detection system, speed-up techniques, and the recent state-of-the-art detection methods. This paper also reviews some important detection applications, such as pedestrian detection, face detection, text detection, etc., and makes an in-depth analysis of their challenges as well as technical improvements in recent years.

It is a common paradigm in object detection frameworks to treat all samples equally and aim at maximizing the performance on average. In this work, we revisit this paradigm through a careful study of how different samples contribute to the overall performance measured in terms of mAP. Our study suggests that the samples in each mini-batch are neither independent nor equally important, and therefore a better classifier on average does not necessarily mean higher mAP. Motivated by this study, we propose the notion of Prime Samples, those that play a key role in driving the detection performance. We further develop a simple yet effective sampling and learning strategy called PrIme Sample Attention (PISA) that directs the focus of the training process towards such samples. Our experiments demonstrate that it is often more effective to focus on prime samples than on hard samples when training a detector. In particular, on the MSCOCO dataset, PISA outperforms the random sampling baseline and hard mining schemes, e.g. OHEM and Focal Loss, consistently by more than 1% on both single-stage and two-stage detectors, with a strong backbone ResNeXt-101.

Compared with object detection in static images, object detection in videos is more challenging due to degraded image quality. An effective way to address this problem is to exploit temporal context by linking the same object across the video to form tubelets and aggregating classification scores in the tubelets. In this paper, we focus on obtaining high-quality object linking results for better classification. Unlike previous methods that link objects by checking boxes between neighboring frames, we propose to link in the same frame. To achieve this goal, we extend prior methods in the following aspects: (1) a cuboid proposal network that extracts spatio-temporal candidate cuboids which bound the movement of objects; (2) a short tubelet detection network that detects short tubelets in short video segments; (3) a short tubelet linking algorithm that links temporally overlapping short tubelets to form long tubelets. Experiments on the ImageNet VID dataset show that our method outperforms both the static image detector and the previous state of the art. In particular, our method improves results by 8.8% over the static image detector for fast-moving objects.
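The linking step in (3) can be illustrated with a small sketch. This is not the authors' algorithm: it assumes a tubelet is a mapping from frame index to a box `(x1, y1, x2, y2)`, scores two tubelets by their mean IoU on the frames they share, and merges greedily. The names `iou`, `overlap_score`, `link_tubelets` and the threshold of 0.5 are all hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Tubelet = Dict[int, Box]                 # frame index -> box


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def overlap_score(t1: Tubelet, t2: Tubelet) -> float:
    """Mean IoU over frames present in both tubelets (0.0 if none are shared)."""
    shared = t1.keys() & t2.keys()
    if not shared:
        return 0.0
    return sum(iou(t1[f], t2[f]) for f in shared) / len(shared)


def link_tubelets(short_tubelets: List[Tubelet], threshold: float = 0.5) -> List[Tubelet]:
    """Greedily merge temporally overlapping short tubelets into long ones."""
    long_tubelets: List[Tubelet] = []
    # Process tubelets in order of their first frame.
    for t in sorted(short_tubelets, key=lambda t: min(t)):
        for lt in long_tubelets:
            if overlap_score(lt, t) >= threshold:
                # Extend the long tubelet with frames it does not cover yet.
                for frame, box in t.items():
                    lt.setdefault(frame, box)
                break
        else:
            long_tubelets.append(dict(t))
    return long_tubelets


# Two short tubelets of the same object share frame 1; a third object is far away.
t1 = {0: (0, 0, 10, 10), 1: (1, 0, 11, 10)}
t2 = {1: (1, 0, 11, 10), 2: (2, 0, 12, 10)}
t3 = {1: (50, 50, 60, 60), 2: (51, 50, 61, 60)}
linked = link_tubelets([t1, t2, t3])
print([sorted(t) for t in linked])
```

Because the comparison happens on frames that both tubelets contain, the boxes being matched come from the same frame rather than from neighboring frames, which is the property the abstract emphasizes.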

The ever-growing interest over the past few years in the acquisition and development of unmanned aerial vehicles (UAVs), commonly known as drones, has produced a very promising and effective technology. Because of their small size and fast deployment, UAVs have shown their effectiveness in collecting data over unreachable areas and restricted coverage zones. Moreover, their flexibly defined capacity enables them to collect information with a very high level of detail, leading to high-resolution images. UAVs have mainly served in military scenarios. However, in the last decade, they have been broadly adopted in civilian applications as well. The task of aerial surveillance and situation awareness is usually completed by integrating intelligence, surveillance, observation, and navigation systems, all interacting in the same operational framework. To build this capability, UAVs are well-suited tools that can be equipped with a wide variety of sensors, such as cameras or radars. Deep learning has been widely recognized as a prominent approach in different computer vision applications. Specifically, one-stage and two-stage object detectors are regarded as the two most important groups of convolutional neural network based object detection methods. One-stage object detectors usually outperform two-stage object detectors in speed; however, they normally trail two-stage object detectors in detection accuracy. In this study, the focal-loss-based RetinaNet, which works as a one-stage object detector, is used to match the speed of regular one-stage detectors while also surpassing two-stage detectors in accuracy for UAV-based object detection. State-of-the-art performance is shown on a UAV-captured image dataset, the Stanford Drone Dataset (SDD).
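As background on the focal loss that RetinaNet is built around, here is a minimal sketch of the binary form, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t). The defaults `alpha=0.25` and `gamma=2.0` are the commonly cited values from the focal loss literature and are an assumption here; the abstract does not state which settings were used in this study.

```python
import math


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for one prediction.

    p: predicted probability of the positive class, in [0, 1]
    y: ground-truth label, 1 (object) or 0 (background)
    """
    # p_t is the probability assigned to the true class.
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # Clamp to avoid log(0).
    p_t = min(max(p_t, 1e-12), 1.0)
    # The (1 - p_t)^gamma factor shrinks the loss of well-classified examples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = focal_loss(p=0.9, y=1)  # confident and correct: small loss
hard = focal_loss(p=0.1, y=1)  # confident and wrong: large loss
print(f"easy={easy:.6f}, hard={hard:.6f}")
```

With `gamma=0` the expression reduces to an alpha-weighted cross-entropy, so the modulating factor is what shifts training effort away from the many easy background examples a one-stage detector sees.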

Vision-based vehicle detection approaches have achieved incredible success in recent years with the development of deep convolutional neural networks (CNNs). However, existing CNN-based algorithms suffer from the problem that convolutional features are scale-sensitive in the object detection task, while traffic images and videos commonly contain vehicles with a large variance of scales. In this paper, we delve into the source of scale sensitivity and reveal two key issues: 1) existing RoI pooling destroys the structure of small-scale objects, and 2) the large intra-class distance for a large variance of scales exceeds the representation capability of a single network. Based on these findings, we present a scale-insensitive convolutional neural network (SINet) for fast detection of vehicles with a large variance of scales. First, we present a context-aware RoI pooling to maintain the contextual information and original structure of small-scale objects. Second, we present a multi-branch decision network to minimize the intra-class distance of features. These lightweight techniques bring zero extra time complexity but a prominent improvement in detection accuracy. The proposed techniques can be applied to any deep network architecture while keeping it trainable end-to-end. Our SINet achieves state-of-the-art performance in terms of accuracy and speed (up to 37 FPS) on the KITTI benchmark and a new highway dataset, which contains a large variance of scales and extremely small objects.

Object detection is a major challenge in computer vision, involving both object classification and object localization within a scene. While deep neural networks have been shown in recent years to yield very powerful techniques for tackling the challenge of object detection, one of the biggest obstacles to deploying such object detection networks widely on embedded devices is their high computational and memory requirements. Recently, there has been an increasing focus on exploring small deep neural network architectures for object detection that are more suitable for embedded devices, such as Tiny YOLO and SqueezeDet. Inspired by the efficiency of the Fire microarchitecture introduced in SqueezeNet and the object detection performance of the single-shot detection macroarchitecture introduced in SSD, this paper introduces Tiny SSD, a single-shot detection deep convolutional neural network for real-time embedded object detection that is composed of a highly optimized, non-uniform Fire sub-network stack and a non-uniform sub-network stack of highly optimized SSD-based auxiliary convolutional feature layers designed specifically to minimize model size while maintaining object detection performance. The resulting Tiny SSD possesses a model size of 2.3MB (~26X smaller than Tiny YOLO) while still achieving an mAP of 61.3% on VOC 2007 (~4.2% higher than Tiny YOLO). These experimental results show that very small deep neural network architectures can be designed for real-time object detection that are well-suited for embedded scenarios.

Detecting objects and estimating their pose remains one of the major challenges of the computer vision research community. There is a trade-off between localizing the objects and estimating their viewpoints. The detector ideally needs to be view-invariant, while the pose estimation process should be able to generalize at the category level. This work explores the use of deep learning models to solve both problems simultaneously. To do so, we propose three novel deep learning architectures that perform joint detection and pose estimation, in which we gradually decouple the two tasks. We also investigate whether the pose estimation problem should be solved as a classification or a regression problem, which is still an open question in the computer vision community. We detail a comparative analysis of all our solutions and the methods that currently define the state of the art for this problem. We use the PASCAL3D+ and ObjectNet3D datasets to present a thorough experimental evaluation and the main results. With the proposed models we achieve state-of-the-art performance on both datasets.

Object detection is considered one of the most challenging problems in the field of computer vision, as it involves the combination of object classification and object localization within a scene. Recently, deep neural networks (DNNs) have been demonstrated to achieve superior object detection performance compared to other approaches, with YOLOv2 (an improved You Only Look Once model) being one of the state-of-the-art DNN-based object detection methods in terms of both speed and accuracy. Although YOLOv2 can achieve real-time performance on a powerful GPU, it remains very challenging to leverage this approach for real-time object detection in video on embedded computing devices with limited computational power and limited memory. In this paper, we propose a new framework called Fast YOLO, a fast You Only Look Once framework which accelerates YOLOv2 so that it can perform object detection in video on embedded devices in real time. First, we leverage the evolutionary deep intelligence framework to evolve the YOLOv2 network architecture and produce an optimized architecture (referred to as O-YOLOv2 here) that has 2.8X fewer parameters with just a ~2% IOU drop. To further reduce power consumption on embedded devices while maintaining performance, a motion-adaptive inference method is introduced into the proposed Fast YOLO framework to reduce the frequency of deep inference with O-YOLOv2 based on temporal motion characteristics. Experimental results show that the proposed Fast YOLO framework can reduce the number of deep inferences by an average of 38.13% and achieve an average speedup of ~3.3X for object detection in video compared to the original YOLOv2, leading Fast YOLO to run at an average of ~18FPS on an Nvidia Jetson TX1 embedded system.
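The idea of motion-adaptive inference can be sketched as follows. This is an illustrative stand-in, not the Fast YOLO method: it assumes frames are flat sequences of pixel intensities, uses mean absolute difference against the last processed frame as the motion measure, and takes a hypothetical `detector` callable and `threshold`. The abstract only says that inference frequency is reduced based on temporal motion characteristics.

```python
from typing import Any, Callable, List, Optional, Sequence, Tuple

Frame = Sequence[float]  # flattened pixel intensities (assumed representation)


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def motion_adaptive_inference(
    frames: List[Frame],
    detector: Callable[[Frame], Any],
    threshold: float = 5.0,
) -> Tuple[List[Any], int]:
    """Run the expensive detector only when enough motion is observed.

    Returns the per-frame results and the number of deep inferences performed.
    """
    results: List[Any] = []
    reference: Optional[Frame] = None  # last frame that went through the detector
    cached: Any = None                 # detections reused for low-motion frames
    deep_calls = 0
    for frame in frames:
        if reference is None or mean_abs_diff(frame, reference) > threshold:
            cached = detector(frame)
            reference = frame
            deep_calls += 1
        results.append(cached)
    return results, deep_calls


# Toy 2-pixel "frames" and a stand-in detector that just sums the pixels.
frames = [[0, 0], [1, 1], [20, 20], [21, 21]]
results, deep_calls = motion_adaptive_inference(frames, detector=sum)
print(results, deep_calls)
```

In this toy run the detector is invoked for only two of the four frames; the other two reuse cached results, which is the kind of saving the reported reduction in deep inferences refers to.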