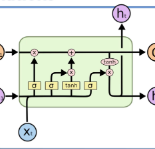

Sequence labeling tasks require the computation of sentence representations for each word within a given sentence. A prevalent method incorporates a bidirectional Long Short-Term Memory (BiLSTM) layer to enhance the sequence structure information. However, empirical evidence (Li, 2020) suggests that the capacity of BiLSTM to produce sentence representations for sequence labeling tasks is inherently limited. This limitation primarily results from the integration of fragments from past and future sentence representations to formulate a complete sentence representation. In this study, we observed that the entire sentence representation, found in both the first and last cells of BiLSTM, can supplement the individual sentence representation of each cell. Accordingly, we devised a global context mechanism to integrate entire future and past sentence representations into each cell's sentence representation within the BiLSTM framework. Incorporating the BERT model within BiLSTM as a demonstration, we conducted exhaustive experiments on nine datasets for sequence labeling tasks, including named entity recognition (NER), part-of-speech (POS) tagging, and end-to-end aspect-based sentiment analysis (E2E-ABSA). We noted significant improvements in F1 scores and accuracy across all examined datasets.
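The idea of supplementing each cell with whole-sentence states can be illustrated with a minimal sketch. The abstract does not say how the representations are combined, so the fixed scalar `gate`, the additive mixing, and the function name `add_global_context` are all assumptions made for illustration, not the authors' formulation. The sketch relies only on the fact that the forward LSTM's state at the last position and the backward LSTM's state at the first position have each read the entire sentence.

```python
from typing import List

Vector = List[float]


def add_global_context(fwd: List[Vector], bwd: List[Vector], gate: float = 0.5) -> List[Vector]:
    """Mix whole-sentence states into every token's BiLSTM output.

    fwd[t] summarizes tokens 0..t and bwd[t] summarizes tokens t..T-1,
    so fwd[-1] and bwd[0] each summarize the whole sentence.
    The scalar gate is a hypothetical stand-in for a learned mechanism.
    """
    if not fwd or len(fwd) != len(bwd):
        raise ValueError("fwd and bwd must be non-empty and equally long")
    # Concatenate the two whole-sentence states into one global vector.
    global_vec = fwd[-1] + bwd[0]
    mixed = []
    for f_t, b_t in zip(fwd, bwd):
        local = f_t + b_t  # the usual per-token BiLSTM output [fwd; bwd]
        mixed.append([l + gate * g for l, g in zip(local, global_vec)])
    return mixed


# Toy hidden states with one dimension per direction, three tokens.
fwd = [[1.0], [2.0], [3.0]]
bwd = [[6.0], [5.0], [4.0]]
mixed = add_global_context(fwd, bwd, gate=0.5)
```

In a trained model the gate would presumably be learned per dimension and per token; the constant here only shows where the global states enter.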

Related content

Optimal packing of objects in containers is a critical problem in various real-life and industrial applications. This paper investigates the two-dimensional packing of convex polygons without rotations, where only translations are allowed. We study different settings depending on the type of containers used, including minimizing the number of containers or the size of the container based on an objective function. Building on prior research in the field, we develop polynomial-time algorithms whose approximation guarantees improve upon the best-known results by Alt, de Berg and Knauer, as well as Aamand, Abrahamsen, Beretta and Kleist, for problems such as Polygon Area Minimization, Polygon Perimeter Minimization, Polygon Strip Packing, and Polygon Bin Packing. Our approach utilizes a sequence of object transformations that allows sorting by height and orientation, thus enhancing the effectiveness of shelf packing algorithms for polygon packing problems. In addition, we present efficient approximation algorithms for special cases of the Polygon Bin Packing problem, progressing toward solving an open question concerning an O(1)-approximation algorithm for arbitrary polygons.
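For readers unfamiliar with shelf packing, the following is a minimal sketch of the classic Next-Fit Decreasing Height heuristic on axis-aligned rectangles, which can be thought of as bounding boxes of the polygons. It does not reproduce the paper's object transformations or its sorting by orientation; the function name `shelf_pack` and the rectangle simplification are assumptions for illustration.

```python
from typing import List, Tuple

Rect = Tuple[float, float]               # (width, height)
Placement = Tuple[float, float, Rect]    # (x, y of lower-left corner, rect)


def shelf_pack(rects: List[Rect], strip_width: float) -> Tuple[float, List[Placement]]:
    """Next-Fit Decreasing Height: returns (used strip height, placements)."""
    placements: List[Placement] = []
    shelf_y = 0.0   # bottom edge of the current shelf
    shelf_h = 0.0   # height of the current shelf
    x = 0.0         # next free horizontal position on the current shelf
    # Sorting by decreasing height means the first item on a shelf is its tallest.
    for w, h in sorted(rects, key=lambda r: r[1], reverse=True):
        if w > strip_width:
            raise ValueError("item is wider than the strip")
        if x + w > strip_width:
            # The item does not fit: close this shelf and open one above it.
            shelf_y += shelf_h
            shelf_h = 0.0
            x = 0.0
        shelf_h = max(shelf_h, h)
        placements.append((x, shelf_y, (w, h)))
        x += w
    return shelf_y + shelf_h, placements


height, placements = shelf_pack([(4, 2), (3, 5), (5, 3), (2, 1)], strip_width=8)
```

Only translations are used, which matches the no-rotation setting described above; the wasted area above shorter items on each shelf is what better sorting aims to reduce.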

RF fingerprinting is emerging as a physical layer security scheme to identify illegitimate and/or unauthorized emitters sharing the RF spectrum. However, due to the lack of publicly accessible real-world datasets, most research focuses on generating synthetic waveforms with software-defined radios (SDRs), which are not suited for practical deployment settings. On the other hand, the limited datasets that are available focus only on chipsets that generate only one kind of waveform. Commercial off-the-shelf (COTS) combo chipsets that support two wireless standards (for example, WiFi and Bluetooth) over a shared dual-band antenna, such as those found in laptops, adapters, wireless chargers, and Raspberry Pis, among others, are becoming ubiquitous in the IoT realm. Hence, to keep up with the modern IoT environment, there is a pressing need for real-world open datasets capturing emissions from these combo chipsets transmitting heterogeneous communication protocols. To this end, we capture the first known emissions from the COTS IoT chipsets transmitting WiFi and Bluetooth under two different time frames. The different time frames are essential to rigorously evaluate the generalization capability of the models. To ensure widespread use, each capture within the comprehensive 72 GB dataset is long enough (40 MSamples) to support diverse input tensor lengths and formats. Finally, the dataset also comprises emissions at varying signal powers to account for the feeble to high signal strength emissions as encountered in a real-world setting.

We propose a supervised principal component regression method for relating functional responses with high dimensional predictors. Unlike the conventional principal component analysis, the proposed method builds on a newly defined expected integrated residual sum of squares, which directly makes use of the association between the functional response and the predictors. Minimizing the integrated residual sum of squares gives the supervised principal components, which is equivalent to solving a sequence of nonconvex generalized Rayleigh quotient optimization problems. We reformulate the nonconvex optimization problems into a simultaneous linear regression with a sparse penalty to deal with high dimensional predictors. Theoretically, we show that the reformulated regression problem can recover the same supervised principal subspace under certain conditions. Statistically, we establish non-asymptotic error bounds for the proposed estimators when the covariate covariance is bandable. We demonstrate the advantages of the proposed method through numerical experiments and an application to the Human Connectome Project fMRI data.

We present an evaluation of text simplification (TS) in Spanish for a production system, by means of two corpora focused on both complex-sentence and complex-word identification. We compare the most prevalent Spanish-specific readability scores with neural networks, and show that the latter are consistently better at predicting user preferences regarding TS. As part of our analysis, we find that multilingual models underperform against equivalent Spanish-only models on the same task, yet all models focus too often on spurious statistical features, such as sentence length. We release the corpora in our evaluation to the broader community in the hope of advancing the state of the art in Spanish natural language processing.

Electronic exams (e-exams) have the potential to substantially reduce the effort required for conducting an exam through automation. Yet, care must be taken to sacrifice neither task complexity nor constructive alignment nor grading fairness in favor of automation. To advance automation in the design and fair grading of (functional programming) e-exams, we introduce the following: a novel algorithm to check Proof Puzzles based on finding correct sequences of proof lines that improves fairness compared to an existing, edit-distance-based algorithm; an open-source static analysis tool to check source code for task-relevant features by traversing the abstract syntax tree; and a higher-level language and open-source tool to specify regular expressions that makes creating complex regular expressions less error-prone. Our findings are embedded in a complete experience report on transforming a paper exam to an e-exam. We evaluated the resulting e-exam by analyzing the degree of automation in the grading process, asking students for their opinion, and critically reviewing our own experiences. Almost all tasks can be graded automatically at least in part (correct solutions can almost always be detected as such). The students agree that an e-exam is a fitting examination format for the course but are split on how well they can express their thoughts compared to a paper exam. Examiners enjoy a more time-efficient grading process, while the point distribution in the exam results was almost exactly the same as in a paper exam.
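Checking source code for task-relevant features by walking the abstract syntax tree can be sketched as follows. The abstract does not name the tool's target language or API, so Python's standard `ast` module is used here as a stand-in, and the function `check_features` together with its three reported features are hypothetical choices for illustration.

```python
import ast


def check_features(source: str, func_name: str) -> dict:
    """Report whether func_name is defined, calls itself, and avoids loops."""
    tree = ast.parse(source)
    report = {"defined": False, "recursive": False, "uses_loop": False}
    for node in ast.walk(tree):
        if isinstance(node, ast.FunctionDef) and node.name == func_name:
            report["defined"] = True
            # Inspect every node inside the function body.
            for inner in ast.walk(node):
                if isinstance(inner, (ast.For, ast.While)):
                    report["uses_loop"] = True
                if (isinstance(inner, ast.Call)
                        and isinstance(inner.func, ast.Name)
                        and inner.func.id == func_name):
                    report["recursive"] = True
    return report


# A hypothetical student submission for "compute the length recursively".
submission = """
def length(xs):
    if not xs:
        return 0
    return 1 + length(xs[1:])
"""
report = check_features(submission, "length")
```

A grader could award structural points when `recursive` is true and `uses_loop` is false, independently of whether the tests pass.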

As soon as abstract mathematical computations were adapted to computation on digital computers, the problem of efficient representation, manipulation, and communication of the numerical values in those computations arose. Strongly related to the problem of numerical representation is the problem of quantization: in what manner should a set of continuous real-valued numbers be distributed over a fixed discrete set of numbers to minimize the number of bits required and also to maximize the accuracy of the attendant computations? This perennial problem of quantization is particularly relevant whenever memory and/or computational resources are severely restricted, and it has come to the forefront in recent years due to the remarkable performance of Neural Network models in computer vision, natural language processing, and related areas. Moving from floating-point representations to low-precision fixed integer values represented in four bits or less holds the potential to reduce the memory footprint and latency by a factor of 16x; and, in fact, reductions of 4x to 8x are often realized in practice in these applications. Thus, it is not surprising that quantization has emerged recently as an important and very active sub-area of research in the efficient implementation of computations associated with Neural Networks. In this article, we survey approaches to the problem of quantizing the numerical values in deep Neural Network computations, covering the advantages/disadvantages of current methods. With this survey and its organization, we hope to have presented a useful snapshot of the current research in quantization for Neural Networks and to have given an intelligent organization to ease the evaluation of future research in this area.
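As a concrete instance of mapping real values onto a small discrete set, here is a minimal sketch of symmetric uniform quantization to signed 4-bit integers. It is one of the simplest schemes in this family and is not claimed to be the survey's recommended method; the function names `quantize` and `dequantize` and the max-absolute-value choice of scale are assumptions for illustration.

```python
from typing import List, Tuple


def quantize(values: List[float], bits: int = 4) -> Tuple[List[int], float]:
    """Symmetric uniform quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1      # 7 for 4 bits
    qmin = -(2 ** (bits - 1))       # -8 for 4 bits
    max_abs = max(abs(v) for v in values)
    # One real-valued scale is stored alongside the integers.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [min(qmax, max(qmin, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate real values."""
    return [qi * scale for qi in q]


weights = [-0.7, -0.2, 0.0, 0.3, 0.7]
q, scale = quantize(weights, bits=4)
restored = dequantize(q, scale)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy cost traded for the smaller representation.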

Answering questions that require reading texts in an image is challenging for current models. One key difficulty of this task is that rare, polysemous, and ambiguous words frequently appear in images, e.g., names of places, products, and sports teams. To overcome this difficulty, resorting only to pre-trained word embedding models is far from enough. A desired model should utilize the rich information in multiple modalities of the image to help understand the meaning of scene texts, e.g., the prominent text on a bottle is most likely to be the brand. Following this idea, we propose a novel VQA approach, Multi-Modal Graph Neural Network (MM-GNN). It first represents an image as a graph consisting of three sub-graphs, depicting visual, semantic, and numeric modalities respectively. Then, we introduce three aggregators which guide the message passing from one graph to another to utilize the contexts in various modalities, so as to refine the features of nodes. The updated nodes have better features for the downstream question answering module. Experimental evaluations show that our MM-GNN represents the scene texts better and clearly improves performance on two VQA tasks that require reading scene texts.

Knowledge graph completion aims to predict missing relations between entities in a knowledge graph. While many different methods have been proposed, there is a lack of a unifying framework that would lead to state-of-the-art results. Here we develop PathCon, a knowledge graph completion method that harnesses four novel insights to outperform existing methods. PathCon predicts relations between a pair of entities by: (1) considering the Relational Context of each entity by capturing the relation types adjacent to the entity, modeled through a novel edge-based message passing scheme; (2) considering the Relational Paths capturing all paths between the two entities; and (3) adaptively integrating the Relational Context and Relational Path through a learnable attention mechanism. Importantly, (4) in contrast to conventional node-based representations, PathCon represents context and path only using the relation types, which makes it applicable in an inductive setting. Experimental results on knowledge graph benchmarks as well as our newly proposed dataset show that PathCon outperforms state-of-the-art knowledge graph completion methods by a large margin. Finally, PathCon is able to provide interpretable explanations by identifying relations that provide the context and paths that are important for a given predicted relation.
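The notion of a relational path that keeps only relation types can be made concrete with a small sketch. It enumerates simple paths between two entities up to a length bound and records just the sequence of relation types, dropping entity identities. This is not PathCon's implementation: the function name `relation_paths`, the `max_len` cutoff, the toy triples, and following edges only from head to tail are all assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation type, tail entity)


def relation_paths(triples: List[Triple], source: str, target: str,
                   max_len: int = 3) -> Set[Tuple[str, ...]]:
    """Collect relation-type sequences of simple paths from source to target."""
    adj: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for head, rel, tail in triples:
        adj[head].append((rel, tail))

    found: Set[Tuple[str, ...]] = set()

    def dfs(node: str, visited: Set[str], rels: List[str]) -> None:
        if node == target and rels:
            found.add(tuple(rels))  # keep relation types only
            return
        if len(rels) == max_len:
            return
        for rel, nxt in adj.get(node, []):
            if nxt not in visited:  # simple paths: never revisit an entity
                dfs(nxt, visited | {nxt}, rels + [rel])

    dfs(source, {source}, [])
    return found


triples = [
    ("alice", "works_at", "acme"),
    ("acme", "located_in", "berlin"),
    ("alice", "lives_in", "berlin"),
    ("berlin", "capital_of", "germany"),
]
paths = relation_paths(triples, "alice", "berlin", max_len=2)
```

Because the result contains no entity names, the same path features can be computed for entities never seen during training, which is the property the inductive setting relies on.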

Aspect-level sentiment classification aims to identify the sentiment expressed towards an aspect given a context sentence. Previous neural-network-based methods largely ignore the syntactic structure of the sentence. In this paper, we propose a novel target-dependent graph attention network (TD-GAT) for aspect-level sentiment classification, which explicitly utilizes the dependency relationship among words. Using the dependency graph, it propagates sentiment features directly from the syntactic context of an aspect target. In our experiments, we show our method outperforms multiple baselines with GloVe embeddings. We also demonstrate that using BERT representations further substantially boosts the performance.

Image segmentation is an important component of many image understanding systems. It aims to group pixels in a spatially and perceptually coherent manner. Typically, these algorithms have a collection of parameters that control the degree of over-segmentation produced. It still remains a challenge to properly select such parameters for human-like perceptual grouping. In this work, we exploit the diversity of segments produced by different choices of parameters. We scan the segmentation parameter space and generate a collection of image segmentation hypotheses (from highly over-segmented to under-segmented). These are fed into a cost minimization framework that produces the final segmentation by selecting segments that: (1) better describe the natural contours of the image, and (2) are more stable and persistent among all the segmentation hypotheses. We compare our algorithm's performance with state-of-the-art algorithms, showing that we can achieve improved results. We also show that our framework is robust to the choice of segmentation kernel that produces the initial set of hypotheses.