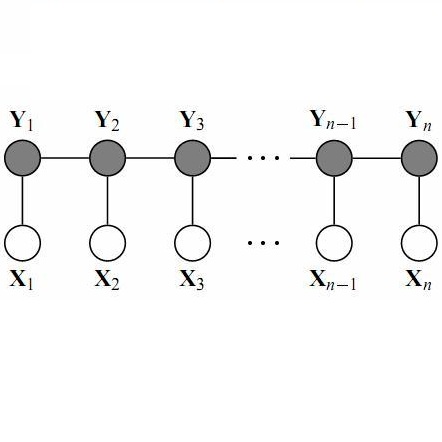

Online technical forums (e.g., StackOverflow) are popular platforms for developers to discuss technical problems, such as how to use a specific Application Programming Interface (API), how to solve programming tasks, or how to fix bugs in their code. These discussions often provide auxiliary knowledge about using software that is not covered by the official documentation. Automatically extracting such knowledge can support downstream tasks such as API search and indexing. However, unlike official documentation written by experts, discussions in open forums are written by ordinary developers in short, informal text that often contains spelling errors and abbreviations. Accurately recognizing API mentions in unstructured natural-language documents and linking them to entries in an API repository poses three major challenges: (1) distinguishing API mentions from common words; (2) identifying API mentions that lack a fully qualified name; and (3) disambiguating API mentions that share similar method names but belong to different libraries. In this paper, to tackle these challenges, we propose ARCLIN, a tool that effectively recognizes and links API mentions without using human annotations. Specifically, we first design an API recognizer that automatically extracts API mentions from natural-language sentences using a Conditional Random Field (CRF) on top of a Bi-directional Long Short-Term Memory (Bi-LSTM) module; we then apply a context-aware scoring mechanism to compute the mention-entry similarity for each entry in an API repository. Without any manual inspection, ARCLIN outperforms previous approaches based on heuristic rules by 8% on Py-mention, a high-quality dataset containing 558 mentions and 2,830 sentences from five popular Python libraries.
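To make the linking step concrete, here is a toy illustration of scoring repository entries against a recognized mention and its sentence context. It is a minimal sketch under stated assumptions, not ARCLIN's actual scoring mechanism: the repository entries, the weight `alpha`, and the functions `score` and `link` are all hypothetical. The score combines an exact short-name match with a bag-of-words (Jaccard) overlap between the sentence and each entry's qualified name and description, which is enough to separate two APIs that share the method name `concat`.

```python
import re

def tokens(text: str) -> set[str]:
    # Lowercase alphanumeric tokens; "pandas.concat" becomes {"pandas", "concat"}
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    # Overlap between two token sets, in [0, 1]
    return len(a & b) / len(a | b) if a | b else 0.0

def score(mention: str, context: str, api_name: str, description: str, alpha: float = 0.5) -> float:
    # Name evidence: does the mention equal the last component of the qualified name?
    short_name = api_name.rsplit(".", 1)[-1].lower()
    name_score = 1.0 if mention.lower().rstrip("()") == short_name else 0.0
    # Context evidence: overlap between the sentence and the entry's name plus description
    ctx_score = jaccard(tokens(context), tokens(api_name) | tokens(description))
    return alpha * name_score + (1 - alpha) * ctx_score

def link(mention: str, context: str, repository: dict[str, str]) -> str:
    # Return the repository entry with the highest combined score
    return max(repository, key=lambda name: score(mention, context, name, repository[name]))

# Hypothetical repository: fully qualified name -> short description
repository = {
    "pandas.concat": "concatenate pandas objects along a particular axis",
    "tensorflow.concat": "concatenates tensors along one dimension",
    "numpy.concatenate": "join a sequence of arrays along an existing axis",
}

print(link("concat", "how do I concat two tensors in tensorflow", repository))  # prints "tensorflow.concat"
```

Both `concat` entries receive the same name score here, so the sentence context alone decides between them; a learned, context-aware scorer plays that role far more robustly than word overlap.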

Related content

One of the challenges of language teaching is how to organize the rules of a language's syntax, semantics, or phonology in a meaningful manner. This requires not only pedagogical skill but also a deep understanding of the language. While comprehensive materials for developing such curricula are available in English and some widely spoken languages, for many other languages teachers need to create them manually in response to their students' needs. This process is challenging because i) it requires that such experts be accessible and have the necessary resources, and ii) even when such experts exist, describing all the intricacies of a language is time-consuming and prone to omission. In this article, we present a framework that aims to facilitate this process by automatically discovering and visualizing descriptions of different aspects of grammar. Specifically, we extract descriptions from a natural text corpus that answer questions about morphosyntax (learning of word order, agreement, case marking, or word formation) and semantics (learning of vocabulary), and we show illustrative examples. We apply this method to teaching two Indian languages, Kannada and Marathi, which, unlike English, do not have well-developed pedagogical resources and are therefore likely to benefit from this exercise. To assess the perceived utility of the extracted material, we enlist language educators from schools in North America who teach these languages to perform a manual evaluation. Overall, teachers find the materials interesting as reference material for their own lesson preparation or even for learner evaluation.
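One simple kind of word-order description is the dominant order of two word classes in a corpus. The hypothetical sketch below illustrates that idea and is not the article's extraction method. It assumes the corpus has already been parsed into dependency arcs (the `Arc` record, its fields, and the toy arcs are illustrative assumptions) and reports whether adjectival modifiers mostly precede or follow their nouns, together with the share of arcs supporting that order.

```python
from collections import Counter
from typing import NamedTuple

class Arc(NamedTuple):
    dep_pos: str     # part of speech of the dependent, e.g. "ADJ"
    head_pos: str    # part of speech of the head, e.g. "NOUN"
    relation: str    # dependency label, e.g. "amod"
    dep_index: int   # position of the dependent in its sentence
    head_index: int  # position of the head in its sentence

def dominant_order(arcs, dep_pos="ADJ", head_pos="NOUN", relation="amod"):
    # Count how often the dependent appears before vs. after its head
    counts = Counter()
    for arc in arcs:
        if (arc.dep_pos, arc.head_pos, arc.relation) == (dep_pos, head_pos, relation):
            counts["before" if arc.dep_index < arc.head_index else "after"] += 1
    total = sum(counts.values())
    if total == 0:
        return None
    order, n = counts.most_common(1)[0]
    return order, n / total  # dominant order and the fraction of arcs supporting it

# Toy arcs, not real corpus data
arcs = [
    Arc("ADJ", "NOUN", "amod", 0, 1),
    Arc("ADJ", "NOUN", "amod", 2, 3),
    Arc("ADJ", "NOUN", "amod", 5, 4),
    Arc("DET", "NOUN", "det", 0, 1),
]
print(dominant_order(arcs))  # ('before', 0.6666666666666666)
```

Reporting the supporting fraction, rather than only the majority label, lets a teacher see whether a rule is near-categorical or merely a tendency with many exceptions worth illustrating.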

The human cultural repertoire relies on innovation: our ability to continuously and hierarchically explore how existing elements can be combined to create new ones. Innovation is not solitary; it relies on the collective accumulation and merging of previous solutions. Machine learning approaches commonly assume that fully connected multi-agent networks are best suited for innovation. However, human laboratory and field studies have shown that hierarchical innovation is more robustly achieved by dynamic communication topologies. In dynamic topologies, humans oscillate between innovating individually or in small clusters and then sharing outcomes with others. To our knowledge, the role of multi-agent topology in innovation has not been systematically studied in machine learning. It remains unclear a) which communication topologies are optimal for which innovation tasks, and b) which properties of experience sharing improve multi-level innovation. Here we use a multi-level hierarchical problem setting (WordCraft) with three different innovation tasks. We systematically design networks of DQNs that share experiences from their replay buffers in varying topologies (fully connected, small world, dynamic, ring). Comparing the level of innovation achieved by different experience-sharing topologies across tasks shows that, first, consistent with human findings, experience sharing within a dynamic topology achieves the highest level of innovation across tasks. Second, experience sharing is less helpful when there is a single clear path to innovation. Third, two metrics we propose, conformity and diversity of shared experience, can explain the success of different topologies on different tasks. These contributions can advance our understanding of optimal AI-AI, human-human, and human-AI collaborative networks, inspiring future tools for fostering collective innovation in large organizations.
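The topologies differ only in who copies replay-buffer transitions from whom. The sketch below is a minimal illustration under stated assumptions, not the paper's implementation: topologies are plain neighbor lists, the "dynamic" schedule (share within fixed clusters, then briefly connect everyone every `period` steps) is a hypothetical stand-in for the oscillation described above, small-world graphs are omitted for brevity, and the function names and the parameter `k` are illustrative.

```python
import random

def fully_connected(n):
    # Every agent shares with every other agent
    return {i: [j for j in range(n) if j != i] for i in range(n)}

def ring(n):
    # Each agent shares only with its left and right neighbor (assumes n >= 3)
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}

def dynamic(n, cluster_size, step, period=10):
    # Hypothetical schedule: agents share only inside fixed clusters, except on
    # every `period`-th step, when the whole population is fully connected.
    if step % period == period - 1:
        return fully_connected(n)
    return {
        i: [j for j in range(n) if j != i and j // cluster_size == i // cluster_size]
        for i in range(n)
    }

def share_experience(buffers, topology, k, rng):
    # Each agent copies up to k random transitions from each neighbor's replay buffer.
    # Incoming transitions are collected first so nothing is re-shared in the same round.
    incoming = {i: [] for i in buffers}
    for i, neighbors in topology.items():
        for j in neighbors:
            if buffers[j]:
                incoming[i].extend(rng.sample(buffers[j], min(k, len(buffers[j]))))
    for i, transitions in incoming.items():
        buffers[i].extend(transitions)
```

Collecting transitions before appending them keeps one sharing round equivalent to a single hop along the topology, so the graph structure, rather than iteration order, determines how quickly a discovery spreads.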

Topic-controllable summarization is an emerging research area with a wide range of potential applications. However, existing approaches suffer from significant limitations. First, there is currently no established evaluation metric for this task. Furthermore, existing methods are built upon recurrent architectures, which can significantly limit their performance compared to more recent Transformer-based architectures, and they also require modifications to the model's architecture to control the topic. In this work, we propose a new topic-oriented evaluation measure that automatically evaluates generated summaries based on the topic affinity between the generated summary and the desired topic. We also conduct a user study that validates the reliability of this measure. Finally, we propose simple yet powerful methods for topic-controllable summarization that either incorporate topic embeddings into the model's architecture or employ control tokens to guide summary generation. Experimental results show that control tokens can achieve better performance than more complicated embedding-based approaches while also being significantly faster.
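The control-token approach needs no architectural change: the desired topic is written into the input text, and the summarizer is fine-tuned on such inputs. The sketch below shows only that input formatting, plus a toy stand-in for a topic-affinity score (the fraction of summary words that appear in a topic's keyword list). The token format `<topic:...>`, the keyword lists in `TOPIC_KEYWORDS`, and both functions are hypothetical; the paper's token scheme and evaluation measure may differ.

```python
import re

# Hypothetical topic vocabularies
TOPIC_KEYWORDS = {
    "sports": {"match", "team", "goal", "season", "coach"},
    "business": {"market", "shares", "profit", "company", "investors"},
}

def with_control_token(document: str, topic: str) -> str:
    # Prepend a topic token; a summarizer fine-tuned on such inputs learns to condition on it
    return f"<topic:{topic}> {document}"

def topic_affinity(summary: str, topic: str) -> float:
    # Toy affinity: share of summary words that belong to the topic's keyword list
    words = re.findall(r"[a-z]+", summary.lower())
    if not words:
        return 0.0
    hits = sum(word in TOPIC_KEYWORDS[topic] for word in words)
    return hits / len(words)

print(with_control_token("The company reported record profit as the team won.", "business"))
print(topic_affinity("The team scored a late goal", "sports"))
```

Because the topic lives in the input rather than in extra model parameters, any pretrained Transformer summarizer can be used unchanged, which is consistent with the speed advantage reported above.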

Hyperparameter optimization (HPO) is crucial for machine learning algorithms to achieve satisfactory performance, and its progress has been boosted by related benchmarks. Nonetheless, existing benchmarking efforts all focus on HPO for traditional centralized learning while ignoring federated learning (FL), a promising paradigm for collaboratively learning models from dispersed data. In this paper, we first identify several aspects in which HPO for FL algorithms is unique. Because of these differences, existing HPO benchmarks no longer meet the need to compare HPO methods in the FL setting. To facilitate research on HPO in the FL setting, we propose and implement FedHPO-B, a benchmark suite that incorporates comprehensive FL tasks, enables efficient function evaluations, and eases continuing extensions. We also conduct extensive experiments based on FedHPO-B to benchmark several HPO methods. We open-source FedHPO-B at //github.com/alibaba/FederatedScope/tree/master/benchmark/FedHPOB and will maintain it actively.
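A common way for HPO benchmarks to make function evaluations cheap is to precompute results for a grid of configurations once and then answer every query with a table lookup. The sketch below illustrates that general idea with random search over a hypothetical grid of FL hyperparameters (client learning rate, local epochs, clients sampled per round). It does not use FedHPO-B's API, and `synthetic_loss` is a made-up formula standing in for "run federated training and report validation loss"; it is not benchmark data.

```python
import itertools
import math
import random

# Hypothetical FL search space
GRID = {
    "lr": [0.001, 0.01, 0.1],
    "local_epochs": [1, 2, 4],
    "clients_per_round": [5, 10, 20],
}

def synthetic_loss(lr, local_epochs, clients_per_round):
    # Made-up stand-in for an expensive federated training run
    return (math.log10(lr) + 2) ** 2 + 0.1 * abs(local_epochs - 2) + 1 / clients_per_round

# Precompute once; afterwards every "evaluation" is a dictionary lookup
TABLE = {config: synthetic_loss(*config) for config in itertools.product(*GRID.values())}

def random_search(budget, seed=0):
    # Sample `budget` distinct configurations and keep the one with the lowest tabulated loss
    rng = random.Random(seed)
    sampled = rng.sample(list(TABLE), budget)
    best = min(sampled, key=TABLE.__getitem__)
    return dict(zip(GRID, best)), TABLE[best]

print(random_search(budget=10))
```

The trade-off of a lookup table is that optimizers can only query configurations on the precomputed grid; surrogate models or live training relax that restriction at higher cost.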

Modern web services routinely provide REST APIs for clients to access their functionality. These APIs present unique challenges and opportunities for automated testing, driving the recent development of many techniques and tools that generate test cases for API endpoints using various strategies. Understanding how these techniques compare to one another is difficult, as they have been evaluated on different benchmarks and with different metrics. To fill this gap, we performed an empirical study aimed at understanding the landscape of automated REST API testing and guiding future research in this area. We first identified, through a systematic selection process, a set of 10 state-of-the-art REST API testing tools, including tools developed by both researchers and practitioners. We then applied these tools to a benchmark of 20 real-world open-source RESTful services and analyzed their performance in terms of code coverage achieved and unique failures triggered. This analysis allowed us to identify strengths, weaknesses, and limitations of the tools considered and of their underlying strategies, as well as implications of our findings for future research in this area.

Spatial-temporal data contains rich information and has been widely studied in recent years due to the rapid development of relevant applications in many fields. For instance, medical institutions often use electrodes attached to different parts of a patient to analyse electroencephalography (EEG) data, which is rich in spatial and temporal features, for health assessment and disease diagnosis. Existing research has mainly used deep learning techniques such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs) to extract hidden spatial-temporal features. Yet it is challenging to capture both spatial inter-dependencies and dynamic temporal changes simultaneously. In practice, a model that leverages these spatial-temporal features to fulfil complex prediction tasks often requires a large amount of training data to obtain satisfactory performance. Considering these challenges, we propose FedRel, an adaptive federated relevance framework for spatial-temporal graph learning. After transforming the raw spatial-temporal data into high-quality features, the framework's core Dynamic Inter-Intra Graph (DIIG) module uses these features to generate spatial-temporal graphs capable of capturing the hidden topological and long-term temporal correlation information in these graphs. To improve model generalization and performance while preserving local data privacy, we also design a relevance-driven federated learning module that leverages the diverse data distributions of different participants through attentive aggregation of their models.
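The abstract does not spell out the aggregation rule, so the following is a generic, hypothetical scheme in the spirit of attention-weighted federated aggregation, not FedRel's actual module. Each client's flattened parameter vector gets a softmax weight based on its negative Euclidean distance to the current global model, and the new global model is the weighted average. The flat-list parameter representation and the `temperature` parameter are assumptions.

```python
import math

def softmax(scores):
    # Numerically stable softmax over a list of floats
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attentive_aggregate(global_params, client_params, temperature=1.0):
    # Attention score: clients closer to the current global model receive larger weights
    scores = [-math.dist(global_params, c) / temperature for c in client_params]
    weights = softmax(scores)
    # New global model: attention-weighted average of client parameters, coordinate by coordinate
    new_global = [
        sum(w * c[k] for w, c in zip(weights, client_params))
        for k in range(len(global_params))
    ]
    return new_global, weights

new_global, weights = attentive_aggregate([0.0, 0.0], [[0.5, 0.0], [2.0, 1.0], [0.0, -0.5]])
print(weights)
```

Compared with plain averaging, distance-based weights down-weight clients whose updates drift far from the consensus; a larger `temperature` flattens the weights back toward uniform averaging.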

Automated driving systems (ADS) are expected to be reliable and robust against a wide range of driving scenarios. Their decisions, first and foremost, must be well understood. Understanding a decision made by an ADS is a great challenge, because it is not straightforward to tell whether the decision is correct and how to verify it systematically. In this paper, we propose a Sequential MetAmoRphic Testing (Smart) framework based on metamorphic testing, a mainstream software testing approach. In metamorphic testing, metamorphic groups are constructed by selecting multiple inputs according to so-called metamorphic relations, which are essentially necessary properties of the system; if a metamorphic group violates its corresponding relation, erroneous system behavior has been detected. The proposed framework uses sequences of metamorphic groups to understand ADS behaviors and is applicable without the need for ground-truth datasets. To demonstrate its effectiveness, we apply the framework to test three ADS models that steer an autonomous car in different scenarios, with another car either leading in front or approaching from the opposite direction. The experiments reveal a large number of undesirable behaviors in these top-ranked deep learning models in these scenarios. These counter-intuitive behaviors are associated with how the core models of ADS respond to different positions, directions, and properties of the other car in their proximity. Further analysis of the results helps identify critical factors affecting ADS decisions and thus demonstrates that the framework can be used to provide a comprehensive understanding of ADS before their deployment.
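A metamorphic relation lets us test without ground truth: we do not know the correct steering angle for a scene, but we know how the angle should change when the scene is transformed. As an illustration only (not necessarily one of the paper's relations), the sketch uses mirror symmetry: flipping a scene left-to-right should flip the sign of the steering angle. The 1-D "scene" (obstacle intensities from left to right), `toy_steering_model`, and the helper functions are hypothetical; a real harness would run the ADS under test on rendered camera frames.

```python
def toy_steering_model(scene):
    # Hypothetical model: steer away from the side with more obstacle mass.
    # Negative = steer left, positive = steer right.
    half = len(scene) // 2
    left = sum(scene[:half])
    right = sum(scene[len(scene) - half:])
    return 0.1 * (left - right)

def mirror(scene):
    # Follow-up input: the same scene flipped left-to-right
    return scene[::-1]

def check_mirror_relation(model, scene, tol=1e-6):
    # Source input and its mirrored follow-up form one metamorphic group;
    # the relation requires the two steering angles to cancel out.
    return abs(model(scene) + model(mirror(scene))) <= tol

def violations(model, scenes):
    # Check the relation along a sequence of scenes and report violating indices
    return [i for i, scene in enumerate(scenes) if not check_mirror_relation(model, scene)]

scenes = [[0.0, 1.0, 0.0, 0.5], [2.0, 0.0, 0.0]]
biased_model = lambda scene: toy_steering_model(scene) + 0.05  # constant bias to the right
print(violations(toy_steering_model, scenes), violations(biased_model, scenes))
```

The biased model's outputs may look plausible frame by frame, but the relation exposes the bias immediately, which is the kind of counter-intuitive behavior metamorphic testing is designed to surface.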

Its dynamic attention mechanism and global modeling ability give the Transformer strong feature learning capability. In recent years, Transformers have become comparable to CNN-based methods in computer vision. This review investigates the current research progress of Transformers in image and video applications, providing a comprehensive overview of Transformers for visual learning and understanding. First, the attention mechanism, which plays an essential part in the Transformer, is reviewed. Second, the visual Transformer model and the principle of each module are introduced. Third, existing Transformer-based models are investigated, and their performance is compared in visual learning and understanding applications. Three image tasks and two video tasks of computer vision are investigated: the former include image classification, object detection, and image segmentation, and the latter include object tracking and video classification. The performance of different models on these tasks is compared on several public benchmark datasets. Finally, ten general problems are summarized, and the future prospects of visual Transformers are outlined.
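The attention mechanism at the core of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V: each query is compared with every key, the scaled scores are normalized with a softmax, and the result weights a sum over the value vectors. The dependency-free sketch below implements that formula for small inputs given as lists of row vectors; it deliberately omits masking, multiple heads, and the learned query/key/value projections.

```python
import math

def softmax(row):
    # Numerically stable softmax over one row of scores
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value vectors
        output.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return output

print(scaled_dot_product_attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]))
```

Because every query attends to every key, each output row can draw on the whole input; this is the "global modeling ability" that distinguishes Transformers from the local receptive fields of CNNs, at a cost quadratic in the number of tokens (or image patches).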

Graph machine learning has been extensively studied in both academia and industry. However, as the literature on graph learning booms with a vast number of emerging methods and techniques, it becomes increasingly difficult to manually design the optimal machine learning algorithm for different graph-related tasks. To tackle this challenge, automated graph machine learning, which aims to discover the best hyper-parameter and neural architecture configuration for different graph tasks and data without manual design, is attracting increasing attention from the research community. In this paper, we extensively discuss automated graph machine learning approaches, covering hyper-parameter optimization (HPO) and neural architecture search (NAS) for graph machine learning. We briefly overview existing libraries designed for either graph machine learning or automated machine learning, and then introduce in depth AutoGL, our dedicated library and the world's first open-source library for automated graph machine learning. Last but not least, we share our insights on future research directions for automated graph machine learning. This paper is the first systematic and comprehensive discussion of approaches, libraries, and directions for automated graph machine learning.
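Automated graph machine learning searches jointly over architecture choices (NAS) and training hyperparameters (HPO). The library-agnostic sketch below illustrates that idea with successive halving, one common multi-fidelity search strategy, chosen here only as a concrete example; it is not AutoGL's API or its specific algorithms. The search space is hypothetical, and `synthetic_accuracy` is a made-up formula standing in for "train this GNN for `epochs` epochs and return validation accuracy".

```python
import math
import random

# Hypothetical joint search space
SEARCH_SPACE = {
    "layer_type": ["gcn", "gat", "sage"],  # architecture choice (NAS)
    "num_layers": [1, 2, 3],               # architecture choice (NAS)
    "hidden_dim": [16, 64, 256],           # hyperparameter (HPO)
    "lr": [0.001, 0.01, 0.1],              # hyperparameter (HPO)
}

def sample_config(rng):
    return {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}

def synthetic_accuracy(config, epochs):
    # Made-up stand-in for training a GNN; accuracy rises with the epoch budget
    base = {"gcn": 0.70, "gat": 0.74, "sage": 0.72}[config["layer_type"]]
    base -= 0.02 * abs(config["num_layers"] - 2)
    base -= 0.03 * abs(math.log10(config["lr"]) + 2)
    base += 0.01 * math.log2(config["hidden_dim"] / 16) / 4
    return base * (1 - math.exp(-epochs / 10))

def successive_halving(n_configs=27, min_epochs=5, eta=3, seed=0):
    # Evaluate many configs cheaply, keep the top 1/eta, multiply the budget by eta, repeat
    rng = random.Random(seed)
    candidates = [sample_config(rng) for _ in range(n_configs)]
    epochs = min_epochs
    while len(candidates) > 1:
        ranked = sorted(candidates, key=lambda c: synthetic_accuracy(c, epochs), reverse=True)
        candidates = ranked[: max(1, len(ranked) // eta)]
        epochs *= eta
    return candidates[0]

print(successive_halving())
```

Most of the training budget goes to the few configurations that survive the early, short runs. The design assumes that low-budget rankings are informative, which holds in this synthetic example by construction but only approximately in real graph tasks.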

In multi-turn dialog, utterances do not always take the full form of sentences \cite{Carbonell1983DiscoursePA}, which naturally makes understanding the dialog context more difficult. However, fully grasping the dialog context is essential for generating a reasonable response. Hence, in this paper, we propose to improve response generation performance by examining the model's ability to answer a reading comprehension question that focuses on the information omitted in the dialog. Inspired by the multi-task learning scheme, we propose a joint framework that unifies these two tasks: they share the same encoder, which extracts common, task-invariant features, while separate decoders learn task-specific features. To better fuse information from the question and the dialog history in the encoder, we augment the Transformer architecture with a memory updater, which is designed to selectively store and update the dialog history so as to support downstream tasks. For our experiments, we employ human annotators to write and examine a large-scale dialog reading comprehension dataset. Extensive experiments on this dataset show that the proposed model brings substantial improvements over several strong baselines on both tasks. In this way, we demonstrate that reasoning can indeed help response generation, and vice versa. We release our large-scale dataset for further research.
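The memory updater is described only at a high level. As a hypothetical illustration of "selectively store and update", the sketch uses a common gated-update form: a gate g = σ(w·[m; h] + b), computed from the concatenation of the old memory m and the new utterance representation h, interpolates between keeping and overwriting the memory, m' = g·m + (1 − g)·h. The single scalar gate, the weight vector `w`, and the bias `b` are assumptions, not the paper's parameterization; a real model would learn these weights and typically use per-dimension gates.

```python
import math

def sigmoid(x):
    # Numerically stable logistic function
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def update_memory(memory, utterance_repr, w, b):
    # Gate computed from the concatenation [memory; utterance_repr]
    concat = memory + utterance_repr
    g = sigmoid(sum(wi * xi for wi, xi in zip(w, concat)) + b)
    # g near 1 keeps the old memory; g near 0 overwrites it with the new utterance
    new_memory = [g * m + (1 - g) * h for m, h in zip(memory, utterance_repr)]
    return new_memory, g

print(update_memory([1.0, 0.0], [0.0, 1.0], w=[0.0, 0.0, 0.0, 0.0], b=0.0))
```

The interpolation keeps the memory on the same scale as its inputs, so repeated updates across many dialog turns neither explode nor vanish; the gate alone decides how much of the older history survives each turn.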