We consider the degree-Rips construction from topological data analysis, which provides a density-sensitive, multiparameter hierarchical clustering algorithm. We analyze its stability to perturbations of the input data using the correspondence-interleaving distance, a metric for hierarchical clusterings that we introduce. Taking certain one-parameter slices of degree-Rips recovers well-known methods for density-based clustering, but we show that these methods are unstable. However, we prove that degree-Rips, as a multiparameter object, is stable, and we propose an alternative approach for taking slices of degree-Rips, which yields a one-parameter hierarchical clustering algorithm with better stability properties. We prove that this algorithm is consistent, using the correspondence-interleaving distance. We provide an algorithm for extracting a single clustering from one-parameter hierarchical clusterings, which is stable with respect to the correspondence-interleaving distance. We also integrate these methods into a pipeline for density-based clustering, which we call Persistable. Adapting tools from multiparameter persistent homology, we propose visualization tools that guide the selection of all parameters of the pipeline. We demonstrate Persistable on benchmark datasets, showing that it identifies multi-scale cluster structure in data.

Related content

We construct a family of Markov decision processes for which the policy iteration algorithm needs an exponential number of improving switches with Dantzig's rule, with Bland's rule, and with the Largest Increase pivot rule. This immediately translates to a family of linear programs for which the simplex algorithm needs an exponential number of pivot steps with the same three pivot rules. Our results yield a unified construction that simultaneously reproduces well-known lower bounds for these classical pivot rules, and we are able to infer that any (deterministic or randomized) combination of them cannot avoid exponential worst-case behavior. Regarding the policy iteration algorithm, pivot rules typically switch multiple edges simultaneously, and our lower bounds for Dantzig's rule and the Largest Increase rule, which perform only single switches, seem novel. Regarding the simplex algorithm, the individual lower bounds were previously obtained separately via deformed hypercube constructions. In contrast to previous bounds for the simplex algorithm via Markov decision processes, our rigorous analysis is reasonably concise.

This paper investigates the problem of estimating the larger location parameter of two general location families from a decision-theoretic perspective. In this estimation problem, we use the criteria of minimizing the risk function and the Pitman closeness under a general bowl-shaped loss function. We establish inadmissibility results for general location equivariant estimators. We prove that a natural estimator (an analogue of the BLEE of unordered location parameters) is inadmissible, under certain conditions on the underlying densities, and propose a dominating estimator. We also derive a class of improved estimators using Kubokawa's IERD approach and observe that the boundary estimator of this class is the Brewster-Zidek type estimator. Additionally, under the generalized Pitman criterion, we show that the natural estimator is inadmissible and obtain improved estimators. The results are implemented for different loss functions, and explicit expressions for the dominating estimators are provided. We explore the applications of these results to exponential and normal distributions under specified loss functions. A simulation is also conducted to compare the risk performance of the proposed estimators. Finally, we present a real-life data analysis to illustrate the practical applications of the paper's findings.

For both public and private firms, comparable companies' analysis is widely used as a method for company valuation. In particular, the method is of great value for valuation of private equity companies. Most approaches to the comparable companies' method identify similar peer companies qualitatively, using established industry classification schemes and/or analyst intuition and knowledge. However, more quantitative methods have started being used in the literature and in the private equity industry, in particular machine learning clustering and natural language processing (NLP). For NLP methods, the process consists of extracting product entities from, e.g., the company's website or company descriptions in a financial database system, and then performing similarity analysis. Here, using companies' descriptions/summaries from publicly available Wikipedia pages, we show that using large language models (LLMs), such as GPT from OpenAI, has a much higher precision and success rate than using the standard named entity recognition (NER) methods, which use manual annotation. We demonstrate quantitatively a higher precision rate, and show that, qualitatively, it can be used to create appropriate comparable companies peer groups which could then be used for equity valuation.

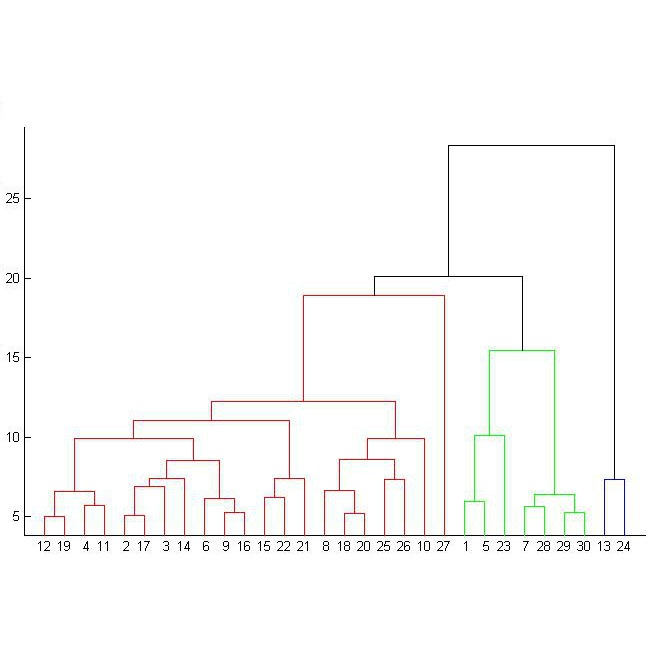

We propose a new class of models for variable clustering called Asymptotic Independent block (AI-block) models, which defines population-level clusters based on the independence of the maxima of a multivariate stationary mixing random process among clusters. This class of models is identifiable, meaning that there exists a maximal element with respect to a partial order between partitions, allowing for statistical inference. We also present an algorithm for recovering the clusters of variables without specifying the number of clusters a priori. Our work provides some theoretical insights into the consistency of our algorithm, demonstrating that under certain conditions it can effectively identify clusters in the data with a computational complexity that is polynomial in the dimension. This implies that groups can be learned nonparametrically in which block maxima of a dependent process are only sub-asymptotic. To further illustrate the significance of our work, we applied our method to real datasets from neuroscience and environmental science. These applications highlight the potential and versatility of the proposed approach.

Discovering causal relationships from observational data is a fundamental yet challenging task. In some applications, it may suffice to learn the causal features of a given response variable, instead of learning the entire underlying causal structure. Invariant causal prediction (ICP, Peters et al., 2016) is a method for causal feature selection which requires data from heterogeneous settings. ICP assumes that the mechanism for generating the response from its direct causes is the same in all settings and exploits this invariance to output a subset of the causal features. The framework of ICP has been extended to general additive noise models and to nonparametric settings using conditional independence testing. However, nonparametric conditional independence testing often suffers from low power (or poor type I error control) and the aforementioned parametric models are not suitable for applications in which the response is not measured on a continuous scale, but rather reflects categories or counts. To bridge this gap, we develop ICP in the context of transformation models (TRAMs), allowing for continuous, categorical, count-type, and uninformatively censored responses (we show that, in general, these model classes do not allow for identifiability when there is no exogenous heterogeneity). We propose TRAM-GCM, a test for invariance of a subset of covariates, based on the expected conditional covariance between environments and score residuals which satisfies uniform asymptotic level guarantees. For the special case of linear shift TRAMs, we propose an additional invariance test, TRAM-Wald, based on the Wald statistic. We implement both proposed methods in the open-source R package "tramicp" and show in simulations that under the correct model specification, our approach empirically yields higher power than nonparametric ICP based on conditional independence testing.

In the linear regression model, the minimum $\ell_2$-norm interpolant estimator has received much attention since it was proved to be consistent, even though it fits noisy data perfectly, under some condition on the covariance matrix $\Sigma$ of the input vector; this is known as benign overfitting. Motivated by this phenomenon, we study the generalization property of this estimator from a geometrical viewpoint. Our main results extend and improve the convergence rates as well as the deviation probability from [Tsigler and Bartlett]. Our proof differs from the classical bias/variance analysis and is based on the self-induced regularization property introduced in [Bartlett, Montanari and Rakhlin]: the minimum $\ell_2$-norm interpolant estimator can be written as a sum of a ridge estimator and an overfitting component. The two geometrical properties of random Gaussian matrices at the heart of our analysis are the Dvoretzky-Milman theorem and isomorphic and restricted isomorphic properties. In particular, the Dvoretzky dimension, appearing naturally in our geometrical viewpoint, coincides with the effective rank and is the key tool for handling the behavior of the design matrix restricted to the subspace where overfitting happens. We extend these results to heavy-tailed scenarios, proving the universality of this phenomenon beyond exponential moment assumptions. This universality was previously unknown and is widely believed to be a significant challenge. It follows from an anisotropic version of the probabilistic Dvoretzky-Milman theorem that holds for heavy-tailed vectors, which is of independent interest.
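For orientation, the estimator discussed above can be written in closed form. This is only the standard textbook expression, not a formula taken from the abstract; the symbols $X \in \mathbb{R}^{n \times p}$ (design matrix), $y \in \mathbb{R}^n$ (responses) and $\hat\beta$ are notation assumed here, and the formula assumes $n \le p$ with $X$ of full row rank so that $X X^\top$ is invertible.

```latex
\hat\beta
  \;=\; \operatorname*{argmin}_{\beta \in \mathbb{R}^p}
        \bigl\{ \|\beta\|_2 \;:\; X\beta = y \bigr\}
  \;=\; X^\top \bigl( X X^\top \bigr)^{-1} y .
```

By construction $X\hat\beta = y$, so the estimator fits the (possibly noisy) training data exactly, which is the interpolation property the abstract refers to.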

A rigidity circuit (in 2D) is a minimal dependent set in the rigidity matroid, i.e. a minimal graph supporting a non-trivial stress in any generic placement of its vertices in $\mathbb R^2$. Any rigidity circuit on $n\geq 5$ vertices can be obtained from rigidity circuits on fewer vertices by applying the combinatorial resultant (CR) operation. The inverse operation is called a combinatorial resultant decomposition (CR-decomp). Any rigidity circuit on $n\geq 5$ vertices can be successively decomposed into smaller circuits, until the complete graphs $K_4$ are reached. This sequence of CR-decomps has the structure of a rooted binary tree called the combinatorial resultant tree (CR-tree). A CR-tree encodes an elimination strategy for computing circuit polynomials via Sylvester resultants. Different CR-trees lead to elimination strategies that can vary greatly in time and memory consumption. It is an open problem to establish criteria for optimal CR-trees, or at least to characterize those CR-trees that lead to good elimination strategies. In [12] we presented an algorithm for enumerating CR-trees, together with algorithms for decomposing 3-connected rigidity circuits in polynomial time. In this paper we focus on those circuits that are not 3-connected, which we simply call 2-connected. In order to enumerate CR-decomps of 2-connected circuits $G$, a brute-force exponential-time search has to be performed among the subgraphs induced by the subsets of $V(G)$. This exponential-time bottleneck is not present in the 3-connected case. In this paper we argue that we do not have to account for all possible CR-decomps of 2-connected rigidity circuits to find a good elimination strategy; we only have to account for those CR-decomps that are a 2-split, all of which can be enumerated in polynomial time. We present algorithms and computational evidence in support of this heuristic.

Recently established directed dependence measures for pairs $(X,Y)$ of random variables build upon the natural idea of comparing the conditional distributions of $Y$ given $X=x$ with the marginal distribution of $Y$. They assign pairs $(X,Y)$ values in $[0,1]$; the value is $0$ if and only if $X,Y$ are independent, and it is $1$ exclusively for $Y$ being a function of $X$. Here we show that comparing randomly drawn conditional distributions with each other instead or, equivalently, analyzing how sensitive the conditional distribution of $Y$ given $X=x$ is to $x$, opens the door to constructing novel families of dependence measures $\Lambda_\varphi$ induced by general convex functions $\varphi: \mathbb{R} \rightarrow \mathbb{R}$, containing, e.g., Chatterjee's coefficient of correlation as a special case. After establishing additional useful properties of $\Lambda_\varphi$ we focus on continuous $(X,Y)$, translate $\Lambda_\varphi$ to the copula setting, consider the $L^p$-version and establish an estimator which is strongly consistent in full generality. A real data example and a simulation study illustrate the chosen approach and the performance of the estimator. Complementing the aforementioned results, we show how a slight modification of the construction underlying $\Lambda_\varphi$ can be used to define new measures of explainability generalizing the fraction of explained variance.
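To make the special case mentioned above concrete, here is a minimal sketch of the well-known rank-based sample version of Chatterjee's coefficient of correlation. It is not the estimator of $\Lambda_\varphi$ proposed in the paper; the function name `chatterjee_xi` is hypothetical, and the sketch assumes the sample has no ties in either coordinate.

```python
def chatterjee_xi(x, y):
    """Rank-based sample version of Chatterjee's coefficient (no-ties case).

    Illustrative sketch only: the function name is hypothetical and the
    formula below is the standard one for samples without ties.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    if len(set(x)) != n or len(set(y)) != n:
        raise ValueError("this sketch assumes no ties in x or y")

    # Reorder the y-values by increasing x.
    order = sorted(range(n), key=lambda i: x[i])
    y_by_x = [y[i] for i in order]

    # Rank of each y-value among all y-values (1 = smallest).
    rank_of = {value: r for r, value in enumerate(sorted(y), start=1)}
    ranks = [rank_of[value] for value in y_by_x]

    # Sum of absolute rank jumps between x-neighbours.
    jumps = sum(abs(ranks[i + 1] - ranks[i]) for i in range(n - 1))
    return 1.0 - 3.0 * jumps / (n * n - 1)


if __name__ == "__main__":
    xs = list(range(10))
    ys = [v * v for v in xs]  # y is an increasing function of x on this range
    print(chatterjee_xi(xs, ys))
```

For a strictly monotone relationship the rank jumps are all equal to 1, so the value is $1 - 3/(n+1)$, which approaches 1 as the sample grows; this matches the property that the population value is 1 when $Y$ is a function of $X$.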

We prove axiomatic characterizations of several important multiwinner rules within the class of approval-based committee choice rules. These are voting rules that return a set of (fixed-size) committees. In particular, we provide axiomatic characterizations of Proportional Approval Voting, the Chamberlin--Courant rule, and other Thiele methods. These rules share the important property that they satisfy an axiom called consistency, which is crucial in our characterizations.
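As an illustration of the rules named above, the following is a minimal brute-force sketch of a Thiele method using the standard textbook definition: a committee $W$ scores $\sum_v \sum_{j=1}^{|A_v \cap W|} w_j$, where $A_v$ is voter $v$'s approval set. Proportional Approval Voting uses $w_j = 1/j$ and the Chamberlin--Courant rule uses $w_1 = 1$, $w_j = 0$ for $j > 1$. The function names and the data layout are assumptions made for this example, not taken from the paper, and exhaustive enumeration is only practical for small instances.

```python
from fractions import Fraction
from itertools import combinations


def pav_weight(j):
    """Marginal weight of the j-th approved committee member under PAV."""
    return Fraction(1, j)


def cc_weight(j):
    """Marginal weight under Chamberlin-Courant: only the first member counts."""
    return Fraction(1) if j == 1 else Fraction(0)


def thiele_winners(ballots, candidates, k, weight):
    """Return (winning committees, best score) by exhaustive search.

    ballots: list of sets of approved candidates (hypothetical layout).
    The result is a list because the rule returns a set of tied committees.
    """
    best_score, winners = None, []
    for committee in combinations(sorted(candidates), k):
        members = set(committee)
        score = sum(
            sum(weight(j) for j in range(1, len(members & set(ballot)) + 1))
            for ballot in ballots
        )
        if best_score is None or score > best_score:
            best_score, winners = score, [committee]
        elif score == best_score:
            winners.append(committee)
    return winners, best_score


if __name__ == "__main__":
    ballots = [{"a", "b"}, {"a", "b"}, {"a", "b"}, {"c"}]
    print(thiele_winners(ballots, {"a", "b", "c"}, 2, pav_weight))
    print(thiele_winners(ballots, {"a", "b", "c"}, 2, cc_weight))
```

On this small profile the two rules disagree: the PAV weights favor the committee approved by the large group, while the Chamberlin--Courant weights favor committees that give every voter at least one approved member.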

We propose an approach to compute inner- and outer-approximations of the sets of values satisfying constraints expressed as arbitrarily quantified formulas. Such formulas arise, for instance, when specifying important problems in control such as robustness, motion planning or controller comparison. We propose an interval-based method which allows for tractable but tight approximations. We demonstrate its applicability through a series of examples and benchmarks using a prototype implementation.