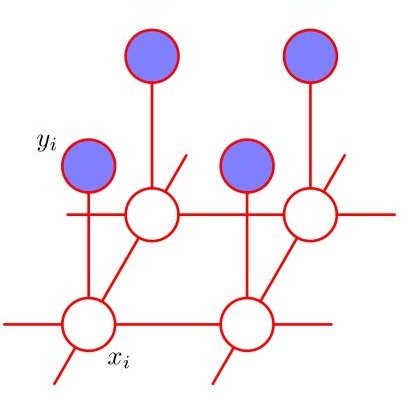

We introduce a Gibbs Markov random field for spatial data on Cartesian grids which is based on the generalized planar rotator (GPR) model. The GPR model generalizes the recently proposed modified planar rotator (MPR) model by including in the Hamiltonian additional terms that better capture realistic features of spatial data, such as smoothness, non-Gaussianity, and geometric anisotropy. In particular, the GPR model includes up to an infinite number of higher-order harmonics with exponentially vanishing interaction strength, directional dependence of the bilinear interaction term between nearest grid neighbors, longer-distance neighbor interactions, and two types of external bias field. Hence, in contrast with the single-parameter MPR model, the GPR model features five additional parameters: the number $n$ of higher-order terms and the parameter $\alpha$ controlling their decay rate, the exchange anisotropy parameter $J^{nn}$, the further-neighbor interaction coupling $J^{fn}$, and the external field (bias) parameters $K$ (or $K'$). We present numerical tests on various synthetic data which demonstrate the effects of the respective terms on the model's prediction performance, and we discuss these results in connection with the data properties.

Related content

Anomaly detection among a large number of processes arises in many applications ranging from dynamic spectrum access to cybersecurity. In such problems one can often obtain noisy observations aggregated from a chosen subset of processes that conforms to a tree structure. The distribution of these observations, based on which the presence of anomalies is detected, may be only partially known. This gives rise to the need for a search strategy designed to account for both the sample complexity and the detection accuracy, as well as cope with statistical models that are known only up to some missing parameters. In this work we propose a sequential search strategy using two variations of the Generalized Local Likelihood Ratio statistic. Our proposed Hierarchical Dynamic Search (HDS) strategy is shown to be order-optimal with respect to the size of the search space and asymptotically optimal with respect to the detection accuracy. An explicit upper bound on the error probability of HDS is established for the finite sample regime. Extensive experiments are conducted, demonstrating the performance gains of HDS over existing methods.

We introduce and analyze various Regularized Combined Field Integral Equations (CFIER) formulations of time-harmonic Navier equations in media with piecewise constant material properties. These formulations can be derived systematically starting from suitable coercive approximations of Dirichlet-to-Neumann operators (DtN), and we present a periodic pseudodifferential calculus framework within which the well-posedness of CFIER formulations can be established. We also use the DtN approximations to derive and analyze Optimized Schwarz (OS) methods for the solution of elastodynamics transmission problems. The pseudodifferential calculus we develop in this paper relies on careful singularity splittings of the kernels of Navier boundary integral operators, which are also the basis of high-order Nyström quadratures for their discretizations. Based on these high-order discretizations we investigate the rate of convergence of iterative solvers applied to CFIER and OS formulations of scattering and transmission problems. We present a variety of numerical results that illustrate that the CFIER methodology leads to important computational savings over the classical CFIE one, whenever iterative solvers are used for the solution of the ensuing discretized boundary integral equations. Finally, we show that the OS methods are competitive in the high-frequency high-contrast regime.

Graph Convolutional Networks (GCNs) are one of the most popular architectures that are used to solve classification problems accompanied by graphical information. We present a rigorous theoretical understanding of the effects of graph convolutions in multi-layer networks. We study these effects through the node classification problem of a non-linearly separable Gaussian mixture model coupled with a stochastic block model. First, we show that a single graph convolution expands the regime of the distance between the means where multi-layer networks can classify the data by a factor of at least $1/\sqrt[4]{\mathbb{E}{\rm deg}}$, where $\mathbb{E}{\rm deg}$ denotes the expected degree of a node. Second, we show that with a slightly stronger graph density, two graph convolutions improve this factor to at least $1/\sqrt[4]{n}$, where $n$ is the number of nodes in the graph. Finally, we provide both theoretical and empirical insights into the performance of graph convolutions placed in different combinations among the layers of a network, concluding that the performance is mutually similar for all combinations of the placement. We present extensive experiments on both synthetic and real-world data that illustrate our results.
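To make the notion of a single graph convolution concrete, here is a minimal sketch in plain Python. It assumes the common mean-aggregation form with self-loops (each node's feature is replaced by the average over itself and its neighbours); the abstract does not state which normalization the authors analyze, and the function name `graph_convolution` is hypothetical.

```python
def graph_convolution(adjacency, features):
    """Replace each node feature by the mean over the node and its neighbours.

    Assumption: mean aggregation with a self-loop; other normalizations exist.
    """
    n = len(features)
    smoothed = []
    for i in range(n):
        # Node i itself (self-loop) plus every node j adjacent to i.
        neighbourhood = [i] + [j for j in range(n) if adjacency[i][j] and j != i]
        smoothed.append(sum(features[j] for j in neighbourhood) / len(neighbourhood))
    return smoothed


# Path graph 0 - 1 - 2 with one scalar feature per node.
adjacency = [
    [0, 1, 0],
    [1, 0, 1],
    [0, 1, 0],
]
features = [0.0, 3.0, 6.0]
smoothed = graph_convolution(adjacency, features)
print(smoothed)
```

Averaging over neighbours reduces the noise in each node's feature when neighbours tend to share a class, which is the intuition behind the improved classification threshold described above.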

In this paper we present a deep learning method to predict the temporal evolution of dissipative dynamic systems. We propose using both geometric and thermodynamic inductive biases to improve accuracy and generalization of the resulting integration scheme. The first is achieved with Graph Neural Networks, which induce a non-Euclidean geometrical prior with permutation-invariant node and edge update functions. The second bias is enforced by learning the GENERIC structure of the problem, an extension of the Hamiltonian formalism, to model more general non-conservative dynamics. Several examples are provided in both Eulerian and Lagrangian descriptions in the context of fluid and solid mechanics respectively, achieving relative mean errors of less than 3% in all the tested examples. Two ablation studies are provided based on recent works in both physics-informed and geometric deep learning.
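For reference, the GENERIC structure mentioned above is usually written in the following form. The symbols $z$, $E$, $S$, $L$, and $M$ are standard notation from the GENERIC literature rather than notation taken from this abstract, so this is a sketch of the general structure and not necessarily the paper's exact parametrization.

$$
\frac{dz}{dt} = L(z)\,\frac{\partial E}{\partial z} + M(z)\,\frac{\partial S}{\partial z},
\qquad
L(z)\,\frac{\partial S}{\partial z} = 0,
\qquad
M(z)\,\frac{\partial E}{\partial z} = 0 .
$$

Here $z$ is the state, $E$ the energy, and $S$ the entropy; $L$ is skew-symmetric and $M$ is symmetric positive semidefinite. Together with the two degeneracy conditions, these properties give $dE/dt = 0$ and $dS/dt \geq 0$, which is the thermodynamic bias a learned model can be constrained to respect.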

While algorithms for planar graphs have received a lot of attention, few papers have focused on the additional power that one gets from assuming an embedding of the graph is available. While in the classic sequential setting, this assumption gives no additional power (as a planar graph can be embedded in linear time), we show that this is far from being the case in other settings. We assume that the embedding is straight-line, but our methods also generalize to non-straight-line embeddings. Specifically, we focus on sublinear-time computation and massively parallel computation (MPC). Our main technical contribution is a sublinear-time algorithm for computing a relaxed version of an $r$-division. We then show how this can be used to estimate Lipschitz additive graph parameters. This includes, for example, the maximum matching, maximum independent set, or the minimum dominating set. We also show how this can be used to solve some property testing problems with respect to the vertex edit distance. In the second part of our paper, we show an MPC algorithm that computes an $r$-division of the input graph. We show how this can be used to solve various classical graph problems with space per machine of $O(n^{2/3+\epsilon})$ for some $\epsilon>0$, and while performing $O(1)$ rounds. This includes for example approximate shortest paths or the minimum spanning tree. Our results also imply an improved MPC algorithm for Euclidean minimum spanning tree.

Music Structure Analysis (MSA) consists in segmenting a music piece into several distinct sections. We approach MSA within a compression framework, under the hypothesis that the structure is more easily revealed by a simplified representation of the original content of the song. More specifically, under the hypothesis that MSA is correlated with similarities occurring at the bar scale, this article introduces the use of linear and non-linear compression schemes on barwise audio signals. Compressed representations capture the most salient components of the different bars in the song and are then used to infer the song structure using a dynamic programming algorithm. This work explores both low-rank approximation models such as Principal Component Analysis or Nonnegative Matrix Factorization and "piece-specific" Auto-Encoding Neural Networks, with the objective of learning latent representations specific to a given song. Such approaches rely on neither supervision nor annotations, which are well known to be tedious to collect and possibly ambiguous in MSA description. In our experiments, several unsupervised compression schemes achieve a level of performance comparable to that of state-of-the-art supervised methods (for 3s tolerance) on the RWC-Pop dataset, showcasing the importance of the barwise compression processing for MSA.

Latent Gaussian models and boosting are widely used techniques in statistics and machine learning. Tree-boosting shows excellent prediction accuracy on many data sets, but potential drawbacks are that it assumes conditional independence of samples, produces discontinuous predictions for, e.g., spatial data, and it can have difficulty with high-cardinality categorical variables. Latent Gaussian models, such as Gaussian process and grouped random effects models, are flexible prior models which explicitly model dependence among samples and which allow for efficient learning of predictor functions and for making probabilistic predictions. However, existing latent Gaussian models usually assume either a zero or a linear prior mean function which can be an unrealistic assumption. This article introduces a novel approach that combines boosting and latent Gaussian models to remedy the above-mentioned drawbacks and to leverage the advantages of both techniques. We obtain increased prediction accuracy compared to existing approaches in both simulated and real-world data experiments.

In the pooled data problem we are given a set of $n$ agents, each of which holds a hidden state bit, either $0$ or $1$. A querying procedure returns for a query set the sum of the states of the queried agents. The goal is to reconstruct the states using as few queries as possible. In this paper we consider two noise models for the pooled data problem. In the noisy channel model, the result for each agent flips with a certain probability. In the noisy query model, each query result is subject to random Gaussian noise. Our results are twofold. First, we present and analyze for both error models a simple and efficient distributed algorithm that reconstructs the initial states in a greedy fashion. Our novel analysis pins down the range of error probabilities and distributions for which our algorithm reconstructs the exact initial states with high probability. Secondly, we present simulation results of our algorithm and compare its performance with approximate message passing (AMP) algorithms that are conjectured to be optimal in a number of related problems.
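The query model and a greedy reconstruction can be sketched as follows. This is a minimal illustration and not necessarily the algorithm analyzed in the paper: it assumes the number `k` of agents in state 1 is known, scores each agent by the average result of the queries it took part in, and declares the `k` highest-scoring agents to be in state 1. The function names `run_queries` and `greedy_reconstruct` are hypothetical.

```python
import random


def run_queries(states, queries, noise_std=0.0, rng=None):
    """Return the sum of hidden states for each query set.

    With noise_std > 0, Gaussian noise is added to every query result
    (the noisy query model).
    """
    rng = rng or random.Random(0)
    results = []
    for query in queries:
        total = float(sum(states[i] for i in query))
        if noise_std > 0:
            total += rng.gauss(0.0, noise_std)
        results.append(total)
    return results


def greedy_reconstruct(queries, results, n, k):
    """Mark the k agents with the highest average query result as state 1.

    Assumption: k, the number of agents in state 1, is known in advance.
    """
    totals = [0.0] * n
    counts = [0] * n
    for query, result in zip(queries, results):
        for i in query:
            totals[i] += result
            counts[i] += 1
    scores = [totals[i] / counts[i] if counts[i] else 0.0 for i in range(n)]
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    estimate = [0] * n
    for i in top:
        estimate[i] = 1
    return estimate


# Four agents, two of them in state 1, four noiseless pooled queries.
states = [1, 0, 1, 0]
queries = [[0, 1], [0, 2], [1, 3], [2, 3]]
results = run_queries(states, queries)
estimate = greedy_reconstruct(queries, results, n=len(states), k=2)
print(results, estimate)
```

In this small noiseless example the agents in state 1 receive the highest scores, so the estimate matches the hidden states; with noise, more queries are needed before the scores separate reliably.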

The conjoining of dynamical systems and deep learning has become a topic of great interest. In particular, neural differential equations (NDEs) demonstrate that neural networks and differential equations are two sides of the same coin. Traditional parameterised differential equations are a special case. Many popular neural network architectures, such as residual networks and recurrent networks, are discretisations. NDEs are suitable for tackling generative problems, dynamical systems, and time series (particularly in physics, finance, ...) and are thus of interest to both modern machine learning and traditional mathematical modelling. NDEs offer high-capacity function approximation, strong priors on model space, the ability to handle irregular data, memory efficiency, and a wealth of available theory on both sides. This doctoral thesis provides an in-depth survey of the field. Topics include: neural ordinary differential equations (e.g. for hybrid neural/mechanistic modelling of physical systems); neural controlled differential equations (e.g. for learning functions of irregular time series); and neural stochastic differential equations (e.g. to produce generative models capable of representing complex stochastic dynamics, or sampling from complex high-dimensional distributions). Further topics include: numerical methods for NDEs (e.g. reversible differential equation solvers, backpropagation through differential equations, Brownian reconstruction); symbolic regression for dynamical systems (e.g. via regularised evolution); and deep implicit models (e.g. deep equilibrium models, differentiable optimisation). We anticipate this thesis will be of interest to anyone interested in the marriage of deep learning with dynamical systems, and hope it will provide a useful reference for the current state of the art.
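The remark that residual networks are discretisations can be illustrated with a short sketch. A residual update of the form `y + step * f(y)` is exactly one explicit Euler step of the ODE $dy/dt = f(y)$. In the code below, `vector_field` is a fixed function standing in for a learned network (an assumption made purely for illustration), chosen so that the exact solution is known.

```python
import math


def vector_field(y):
    # Stand-in for a learned network f_theta(y); here the fixed field dy/dt = -y.
    return -y


def residual_block(y, step):
    # One residual update, which is one explicit Euler step of dy/dt = f(y).
    return y + step * vector_field(y)


def integrate(y0, t_end, num_blocks):
    # Stacking residual blocks integrates the ODE from t = 0 to t = t_end.
    step = t_end / num_blocks
    y = y0
    for _ in range(num_blocks):
        y = residual_block(y, step)
    return y


approx = integrate(1.0, 1.0, num_blocks=1000)
exact = math.exp(-1.0)  # Exact solution of dy/dt = -y, y(0) = 1, at t = 1.
print(approx, exact)
```

Increasing `num_blocks` shrinks the step size and brings the stacked residual updates closer to the continuous-time solution, which is the limit a neural ODE works with directly.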

Multimodal sentiment analysis is a very actively growing field of research. A promising area of opportunity in this field is to improve the multimodal fusion mechanism. We present a novel feature fusion strategy that proceeds in a hierarchical fashion, first fusing the modalities two at a time and only then fusing all three modalities. On multimodal sentiment analysis of individual utterances, our strategy outperforms conventional concatenation of features by 1%, which amounts to a 5% reduction in error rate. On utterance-level multimodal sentiment analysis of multi-utterance video clips, for which current state-of-the-art techniques incorporate contextual information from other utterances of the same clip, our hierarchical fusion gives up to 2.4% (almost 10% error rate reduction) over currently used concatenation. The implementation of our method is publicly available in the form of open-source code.
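The relation between the accuracy gains and the error-rate reductions quoted above can be made explicit. Assuming the 1% and 2.4% gains are absolute accuracy points (an interpretation, not something the abstract states), and writing $e$ for the baseline error rate, $\Delta$ for the accuracy gain, and $r$ for the relative error reduction:

$$
r = \frac{\Delta}{e}
\quad\Longrightarrow\quad
e = \frac{\Delta}{r},
\qquad
\frac{0.01}{0.05} = 0.20,
\qquad
\frac{0.024}{0.10} = 0.24 .
$$

Under that assumption, the concatenation baseline has an error rate of roughly 20% in the single-utterance setting and slightly above 24% in the contextual setting, since the reported reduction there is "almost" 10%.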