Langevin algorithms are frequently used to sample posterior distributions in Bayesian inference. In many practical problems, however, the posterior distribution contains non-differentiable components, which poses challenges for standard Langevin algorithms because they require evaluating the gradient of the energy function in each iteration. A popular remedy is to use the proximity operator, which requires solving a proximity subproblem in each iteration. The conventional practice is to solve these subproblems accurately, which can be exceedingly expensive. We propose an approximate primal-dual fixed-point algorithm that seeks only an approximate solution of the subproblem and therefore reduces the computational cost considerably. We provide a theoretical analysis of the proposed method and demonstrate its performance with numerical examples.
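To make the role of the proximity operator concrete, here is a minimal sketch of one common proximal Langevin scheme (a Moreau-Yosida regularized unadjusted Langevin step) on a one-dimensional toy target proportional to $\exp(-\tfrac{1}{2}(x-y)^2 - \lambda|x|)$. This is not the approximate primal-dual fixed-point method described above: the toy nonsmooth term has a closed-form proximity operator (soft-thresholding), so no inner subproblem solver is needed. The function names, the toy target, and all parameter values are assumptions chosen for illustration.

```python
import math
import random


def soft_threshold(x, t):
    """Closed-form proximity operator of the function t * |x|."""
    return math.copysign(max(abs(x) - t, 0.0), x)


def proximal_langevin_step(x, y, lam, step, gamma, rng):
    """One Moreau-Yosida regularized Langevin step (illustrative sketch)."""
    grad_smooth = x - y  # gradient of the smooth part 0.5 * (x - y)^2
    # Gradient of the Moreau envelope of lam * |x| with parameter gamma.
    grad_envelope = (x - soft_threshold(x, gamma * lam)) / gamma
    noise = rng.gauss(0.0, 1.0)
    return x - step * (grad_smooth + grad_envelope) + math.sqrt(2.0 * step) * noise


def run_chain(n_steps=20000, y=2.0, lam=1.0, step=0.01, gamma=0.05, seed=0):
    """Run the chain from x = 0 and return all visited states."""
    rng = random.Random(seed)
    x = 0.0
    samples = []
    for _ in range(n_steps):
        x = proximal_langevin_step(x, y, lam, step, gamma, rng)
        samples.append(x)
    return samples
```

When the nonsmooth term has no closed-form proximity operator, the call to `soft_threshold` is where an inner iterative solver would be needed in every iteration, which is the cost that the abstract aims to reduce.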

Related content

Distributed stochastic optimization has drawn great attention recently due to its effectiveness in solving large-scale machine learning problems. Though numerous algorithms have been proposed and successfully applied to general practical problems, their theoretical guarantees mainly rely on certain boundedness conditions on the stochastic gradients, ranging from uniform boundedness to the relaxed growth condition. In addition, characterizing the data heterogeneity among the agents and its impact on algorithmic performance remains challenging. Motivated by these issues, we revisit the classical Federated Averaging (FedAvg) algorithm for solving the distributed stochastic optimization problem and establish convergence results under only a mild variance condition on the stochastic gradients for smooth nonconvex objective functions. Almost sure convergence to a stationary point is also established under this condition. Moreover, we discuss a more informative measurement for data heterogeneity as well as its implications.
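As a rough illustration of the FedAvg loop referred to above, the following sketch runs local SGD on each agent and then averages the local models at the server. The scalar quadratic losses $f_i(w) = \tfrac{1}{2}(w - c_i)^2$, the additive Gaussian gradient noise, and every name and default value are assumptions made for this example, not the setting analyzed in the abstract (which covers smooth nonconvex objectives).

```python
import random


def local_sgd(w, center, lr, local_steps, noise_std, rng):
    """Run local SGD on the toy loss 0.5 * (w - center)^2 with noisy gradients."""
    for _ in range(local_steps):
        grad = (w - center) + rng.gauss(0.0, noise_std)
        w -= lr * grad
    return w


def fedavg(centers, rounds=50, local_steps=5, lr=0.1, noise_std=0.0, seed=0):
    """Federated Averaging: broadcast, local updates, then server averaging."""
    rng = random.Random(seed)
    w = 0.0  # global model
    for _ in range(rounds):
        # Every agent starts its local run from the current global model.
        local_models = [
            local_sgd(w, c, lr, local_steps, noise_std, rng) for c in centers
        ]
        # The server replaces the global model with the plain average.
        w = sum(local_models) / len(local_models)
    return w
```

With `noise_std=0.0` and identical curvature across agents, the global model contracts toward the average of the `centers`, which is the minimizer of the averaged loss. The spread of the `centers` is one simple stand-in for data heterogeneity in this toy setting.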

This paper studies the online node classification problem under a transductive learning setting. Current methods either invert a graph kernel matrix with $\mathcal{O}(n^3)$ runtime and $\mathcal{O}(n^2)$ space complexity or sample a large volume of random spanning trees, and are therefore difficult to scale to large graphs. In this work, we propose an improvement based on the \textit{online relaxation} technique introduced by a series of works (Rakhlin et al., 2012; Rakhlin and Sridharan, 2015; 2017). We first prove an effective regret of $\mathcal{O}(\sqrt{n^{1+\gamma}})$ when suitably parameterized graph kernels are chosen, then propose an approximate algorithm, FastONL, enjoying $\mathcal{O}(k\sqrt{n^{1+\gamma}})$ regret based on this relaxation. The key to FastONL is a \textit{generalized local push} method that effectively approximates inverse matrix columns and applies to a series of popular kernels. Furthermore, the per-prediction cost is $\mathcal{O}(\text{vol}({\mathcal{S}})\log 1/\epsilon)$, locally dependent on the graph, with linear memory cost. Experiments show that our scalable method enjoys a better tradeoff between local and global consistency.

This paper studies delayed stochastic algorithms for weakly convex optimization in a distributed network with workers connected to a master node. More specifically, we consider a structured stochastic weakly convex objective function which is the composition of a convex function and a smooth nonconvex function. Recently, Xu et al. (2022) showed that an inertial stochastic subgradient method converges at a rate of $\mathcal{O}(\tau/\sqrt{K})$, which suffers a significant penalty from the maximum information delay $\tau$. To alleviate this issue, we propose a new delayed stochastic prox-linear ($\texttt{DSPL}$) method in which the master performs the proximal update of the parameters and the workers only need to linearly approximate the inner smooth function. Somewhat surprisingly, we show that the delays only affect the high-order term in the complexity rate and hence are negligible after a certain number of $\texttt{DSPL}$ iterations. Moreover, to further improve the empirical performance, we propose a delayed extrapolated prox-linear ($\texttt{DSEPL}$) method which employs Polyak-type momentum to speed up convergence. Building on the tools for analyzing $\texttt{DSPL}$, we also develop an improved analysis of the delayed stochastic subgradient method ($\texttt{DSGD}$). In particular, for general weakly convex problems, we show that the convergence of $\texttt{DSGD}$ only depends on the expected delay.

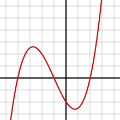

We study the problem of approximate sampling from non-log-concave distributions, e.g., Gaussian mixtures, which is often challenging even in low dimensions due to their multimodality. We focus on performing this task via Markov chain Monte Carlo (MCMC) methods derived from discretizations of the overdamped Langevin diffusion, which are commonly known as Langevin Monte Carlo algorithms. Furthermore, we are also interested in two nonsmooth cases for which a large class of proximal MCMC methods has been developed: (i) a nonsmooth prior with a Gaussian mixture likelihood; (ii) a Laplacian mixture distribution. Such nonsmooth and non-log-concave sampling tasks arise in a wide range of applications in Bayesian inference and imaging inverse problems such as image deconvolution. We perform numerical simulations to compare the performance of the most commonly used Langevin Monte Carlo algorithms.

Sampling-based planning algorithms are powerful tools for solving planning problems in high-dimensional state spaces. In this article, we present a novel approach to sampling in the most promising regions, which significantly reduces planning time. The RRT# algorithm defines the Relevant Region based on the cost-to-come provided by the optimal forward-searching tree. However, it uses the cumulative cost of a direct connection between the current state and the goal state as the cost-to-go. To improve path planning efficiency, we propose a batch sampling method that samples in a refined Relevant Region with a direct sampling strategy. The refined region is defined according to the optimal cost-to-come and an adaptive cost-to-go, taking advantage of various sources of heuristic information. The proposed sampling approach allows the algorithm to build the search tree in the direction of the most promising area, resulting in superior initial solution quality and reduced overall computation time compared to related work. To validate the effectiveness of our method, we conducted several simulations in both $SE(2)$ and $SE(3)$ state spaces. The simulation results demonstrate the superiority of the proposed algorithm.

While distributional reinforcement learning (RL) has demonstrated empirical success, the question of when and why it is beneficial has remained unanswered. In this work, we provide one explanation for the benefits of distributional RL through the lens of small-loss bounds, which scale with the instance-dependent optimal cost. If the optimal cost is small, our bounds are stronger than those from non-distributional approaches. As a warm-up, we show that learning the cost distribution leads to small-loss regret bounds in contextual bandits (CB), and we find that distributional CB empirically outperforms the state of the art on three challenging tasks. For online RL, we propose a distributional version-space algorithm that constructs confidence sets using maximum likelihood estimation, and we prove that it achieves small-loss regret in tabular MDPs and enjoys small-loss PAC bounds in latent variable models. Building on similar insights, we propose a distributional offline RL algorithm based on the pessimism principle and prove that it enjoys small-loss PAC bounds, which exhibit a novel robustness property. For both online and offline RL, our results provide the first theoretical benefits of learning distributions even when we only need the mean for making decisions.

We propose a new approach to learned optimization where we represent the computation of an optimizer's update step using a neural network. The parameters of the optimizer are then learned by training on a set of optimization tasks with the objective of performing minimization efficiently. Our innovation is a new neural network architecture, Optimus, for the learned optimizer, inspired by the classic BFGS algorithm. As in BFGS, we estimate a preconditioning matrix as a sum of rank-one updates but use a Transformer-based neural network to predict these updates jointly with the step length and direction. In contrast to several recent learned optimization-based approaches, our formulation allows for conditioning across the dimensions of the parameter space of the target problem while remaining applicable to optimization tasks of variable dimensionality without retraining. We demonstrate the advantages of our approach on a benchmark composed of objective functions traditionally used for the evaluation of optimization algorithms, as well as on the real-world task of physics-based visual reconstruction of articulated 3D human motion.

We consider the adversarial linear contextual bandit setting, which allows for the loss functions associated with each of $K$ arms to change over time without restriction. Assuming the $d$-dimensional contexts are drawn from a fixed known distribution, the worst-case expected regret over the course of $T$ rounds is known to scale as $\tilde O(\sqrt{Kd T})$. Under the additional assumption that the density of the contexts is log-concave, we obtain a second-order bound of order $\tilde O(K\sqrt{d V_T})$ in terms of the cumulative second moment of the learner's losses $V_T$, and a closely related first-order bound of order $\tilde O(K\sqrt{d L_T^*})$ in terms of the cumulative loss of the best policy $L_T^*$. Since $V_T$ or $L_T^*$ may be significantly smaller than $T$, these improve over the worst-case regret whenever the environment is relatively benign. Our results are obtained using a truncated version of the continuous exponential weights algorithm over the probability simplex, which we analyse by exploiting a novel connection to the linear bandit setting without contexts.

This paper proposes a statistically optimal approach for learning a function value using a confidence interval in a wide range of models, including general non-parametric estimation of an expected loss described as a stochastic programming problem or various SDE models. More precisely, we develop a systematic construction of highly accurate confidence intervals by using a moderate deviation principle-based approach. It is shown that the proposed confidence intervals are statistically optimal in the sense that they satisfy criteria regarding exponential accuracy, minimality, consistency, mischaracterization probability, and eventual uniformly most accurate (UMA) property. The confidence intervals suggested by this approach are expressed as solutions to robust optimization problems, where the uncertainty is expressed via the underlying moderate deviation rate function induced by the data-generating process. We demonstrate that for many models these optimization problems admit tractable reformulations as finite convex programs even when they are infinite-dimensional.

We define an optimal preconditioning for the Langevin diffusion by analytically maximizing the expected squared jumped distance. This yields as the optimal preconditioning an inverse Fisher information covariance matrix, where the covariance matrix is computed as the outer product of log target gradients averaged under the target. We apply this result to the Metropolis adjusted Langevin algorithm (MALA) and derive a computationally efficient adaptive MCMC scheme that learns the preconditioning from the history of gradients produced as the algorithm runs. We show in several experiments that the proposed algorithm is very robust in high dimensions and significantly outperforms other methods, including a closely related adaptive MALA scheme that learns the preconditioning with standard adaptive MCMC as well as the position-dependent Riemannian manifold MALA sampler.
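The preconditioner described above can be illustrated with a small sketch: average the outer products of log-target gradients over samples from the target, then invert the result. The example below assumes a two-dimensional Gaussian target with independent coordinates, for which the inverse of this matrix should recover the target covariance. It draws exact samples instead of using the gradient history of a running MALA chain, so it is not the adaptive scheme from the abstract, and all function names are hypothetical.

```python
import random


def score_diag_gaussian(x, variances):
    """Gradient of the log density of a zero-mean Gaussian with diagonal covariance."""
    return [-xi / vi for xi, vi in zip(x, variances)]


def fisher_from_gradients(grads):
    """Average the 2x2 outer products of the given gradient vectors."""
    n = len(grads)
    fisher = [[0.0, 0.0], [0.0, 0.0]]
    for g in grads:
        for i in range(2):
            for j in range(2):
                fisher[i][j] += g[i] * g[j] / n
    return fisher


def invert_2x2(m):
    """Invert a 2x2 matrix with the closed-form adjugate formula."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [
        [m[1][1] / det, -m[0][1] / det],
        [-m[1][0] / det, m[0][0] / det],
    ]


def estimate_preconditioner(variances=(1.0, 4.0), n_samples=20000, seed=0):
    """Estimate the inverse Fisher matrix from exact samples of the toy target."""
    rng = random.Random(seed)
    grads = []
    for _ in range(n_samples):
        x = [rng.gauss(0.0, v ** 0.5) for v in variances]
        grads.append(score_diag_gaussian(x, variances))
    return invert_2x2(fisher_from_gradients(grads))
```

For this toy target, the diagonal of the returned matrix should be close to the chosen `variances`, so the preconditioner rescales each coordinate according to the scale of the target in that direction.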