Text clustering remains valuable in real-world applications where manual labeling is cost-prohibitive. It facilitates efficient organization and analysis of information by grouping similar texts based on their representations. However, implementing this approach requires embedders fine-tuned for the downstream data and sophisticated similarity metrics. To address this issue, this study presents a novel framework for text clustering that leverages the in-context learning capacity of Large Language Models (LLMs). Instead of fine-tuning embedders, we propose to transform text clustering into a classification task via an LLM. First, we prompt the LLM to generate potential labels for a given dataset. Second, after merging similar labels generated by the LLM, we prompt the LLM to assign the most appropriate label to each sample in the dataset. Experiments show that our framework achieves comparable or superior performance to state-of-the-art clustering methods that employ embeddings, without requiring complex fine-tuning or clustering algorithms. Our code is publicly available at //anonymous.4open.science/r/Text-Clustering-via-LLM-E500.

Related content

In the circuit model of quantum computing, amplitude amplification techniques can be used to find solutions to NP-hard problems defined on $n$-bits in time $\text{poly}(n) 2^{n/2}$. In this work, we investigate whether such general statements can be made for adiabatic quantum optimization, as provable results regarding its performance are mostly unknown. Although a lower bound of $\Omega(2^{n/2})$ has existed in such a setting for over a decade, a purely adiabatic algorithm with this running time has been absent. We show that adiabatic quantum optimization using an unstructured search approach results in a running time that matches this lower bound (up to a polylogarithmic factor) for a broad class of classical local spin Hamiltonians. For this, it is necessary to bound the spectral gap throughout the adiabatic evolution and compute beforehand the position of the avoided crossing with sufficient precision so as to adapt the adiabatic schedule accordingly. However, we show that the position of the avoided crossing is approximately given by a quantity that depends on the degeneracies and inverse gaps of the problem Hamiltonian and is NP-hard to compute even within a low additive precision. Furthermore, computing it exactly (or nearly exactly) is \#P-hard. Our work indicates a possible limitation of adiabatic quantum optimization algorithms, leaving open the question of whether provable Grover-like speed-ups can be obtained for any optimization problem using this approach.

Trajectory prediction and generation are crucial for autonomous robots in dynamic environments. While prior research has typically focused on either prediction or generation, our approach unifies these tasks to provide a versatile framework and achieve state-of-the-art performance. While diffusion models excel in trajectory generation, their iterative sampling process is computationally intensive, hindering robotic systems' dynamic capabilities. We introduce Trajectory Conditional Flow Matching (T-CFM), a novel approach using flow matching techniques to learn a solver time-varying vector field for efficient, fast trajectory generation. T-CFM demonstrates effectiveness in adversarial tracking, real-world aircraft trajectory forecasting, and long-horizon planning, outperforming state-of-the-art baselines with 35% higher predictive accuracy and 142% improved planning performance. Crucially, T-CFM achieves up to 100$\times$ speed-up compared to diffusion models without sacrificing accuracy, enabling real-time decision making in robotics. Codebase: //github.com/CORE-Robotics-Lab/TCFM

Classic stochastic volatility models assume volatility is unobservable. We instead treat it as observable, using the Volatility Index: S\&P 500 VIX. This index was designed to measure the volatility of the S\&P 500. We apply it to a different segment: corporate bond markets. We fit time series models for spreads between corporate and 10-year Treasury bonds. Next, we divide the residuals by VIX. Our main idea is that such division makes the residuals closer to the ideal case of Gaussian white noise. This is remarkable, since these residuals and VIX come from separate market segments. We also discuss total returns of Bank of America corporate bonds. We conclude with an analysis of the long-term behavior of these models.
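The residual-normalization step can be sketched as follows. This is a minimal illustration, not the authors' code: the abstract says only "time series models", so the AR(1) form, the function names `fit_ar1` and `vix_normalized_residuals`, and the same-period alignment of VIX with each residual are all assumptions.

```python
import numpy as np

def fit_ar1(x):
    """Least-squares fit of x[t] = a + b * x[t-1] + residual[t] (assumed model)."""
    X = np.column_stack([np.ones(len(x) - 1), x[:-1]])
    coef, *_ = np.linalg.lstsq(X, x[1:], rcond=None)
    residuals = x[1:] - X @ coef
    return coef, residuals

def vix_normalized_residuals(spread, vix):
    """Fit AR(1) to the spread series, then divide each residual by the
    VIX value of the same period (alignment is an assumption)."""
    _, residuals = fit_ar1(spread)
    return residuals / vix[1:]
```

The normalized residuals could then be checked for closeness to Gaussian white noise, for example by inspecting their autocorrelation and kurtosis.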

Offline reinforcement learning (RL) learns effective policies from a static target dataset. Although state-of-the-art (SOTA) offline RL algorithms are promising, they rely heavily on the quality of the target dataset. The performance of SOTA algorithms can degrade in scenarios with limited samples in the target dataset, which is often the case in real-world applications. To address this issue, domain adaptation that leverages auxiliary samples from related source datasets (such as simulators) can be beneficial. In this context, determining the optimal way to trade off the source and target datasets remains a critical challenge in offline RL. To the best of our knowledge, this paper proposes the first framework that theoretically and experimentally explores how the weight assigned to each dataset affects the performance of offline RL. We establish the performance bounds and convergence neighborhood of our framework, both of which depend on the selection of the weight. Furthermore, we identify the existence of an optimal weight for balancing the two datasets. All theoretical guarantees and the optimal weight depend on the quality of the source dataset and the size of the target dataset. Our empirical results on the well-known Procgen Benchmark substantiate our theoretical contributions.

Classic algorithms and machine learning systems like neural networks are both abundant in everyday life. While classic computer science algorithms are suitable for precise execution of exactly defined tasks such as finding the shortest path in a large graph, neural networks allow learning from data to predict the most likely answer in more complex tasks such as image classification, which cannot be reduced to an exact algorithm. To get the best of both worlds, this thesis explores combining both concepts leading to more robust, better performing, more interpretable, more computationally efficient, and more data efficient architectures. The thesis formalizes the idea of algorithmic supervision, which allows a neural network to learn from or in conjunction with an algorithm. When integrating an algorithm into a neural architecture, it is important that the algorithm is differentiable such that the architecture can be trained end-to-end and gradients can be propagated back through the algorithm in a meaningful way. To make algorithms differentiable, this thesis proposes a general method for continuously relaxing algorithms by perturbing variables and approximating the expectation value in closed form, i.e., without sampling. In addition, this thesis proposes differentiable algorithms, such as differentiable sorting networks, differentiable renderers, and differentiable logic gate networks. Finally, this thesis presents alternative training strategies for learning with algorithms.
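As a hedged, one-variable illustration of relaxing an algorithm by perturbation with a closed-form expectation: take the hard step $\mathbb{1}[x > 0]$ and perturb $x$ with noise $\epsilon \sim \mathrm{Logistic}(0, \beta)$. The logistic noise and the scale $\beta$ are assumptions chosen here for illustration; the abstract does not name a distribution.

$$\mathbb{E}_{\epsilon}\big[\mathbb{1}[x + \epsilon > 0]\big] = P(\epsilon > -x) = 1 - \frac{1}{1 + e^{x/\beta}} = \frac{1}{1 + e^{-x/\beta}} = \sigma(x/\beta)$$

The expectation is a sigmoid, which is differentiable in $x$, needs no sampling, and recovers the hard step as $\beta \to 0$.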

Graph Neural Networks (GNNs) have shown promising results on a broad spectrum of applications. Most empirical studies of GNNs directly take the observed graph as input, assuming the observed structure perfectly depicts the accurate and complete relations between nodes. However, graphs in the real world are inevitably noisy or incomplete, which can degrade the quality of graph representations. In this work, we propose a novel Variational Information Bottleneck guided Graph Structure Learning framework, namely VIB-GSL, from the perspective of information theory. VIB-GSL advances the Information Bottleneck (IB) principle for graph structure learning, providing a more elegant and universal framework for mining underlying task-relevant relations. VIB-GSL learns an informative and compressive graph structure to distill the actionable information for specific downstream tasks. VIB-GSL deduces a variational approximation for irregular graph data to form a tractable IB objective function, which facilitates training stability. Extensive experimental results demonstrate the superior effectiveness and robustness of VIB-GSL.

Graph neural networks (GNNs) are widely used to learn powerful representations of graph-structured data. Recent work demonstrates that transferring knowledge from self-supervised tasks to downstream tasks could further improve graph representation. However, there is an inherent gap between self-supervised tasks and downstream tasks in terms of optimization objective and training data. Conventional pre-training methods may not be effective enough at knowledge transfer since they do not make any adaptation for downstream tasks. To solve such problems, we propose a new transfer learning paradigm on GNNs which could effectively leverage self-supervised tasks as auxiliary tasks to help the target task. Our methods adaptively select and combine different auxiliary tasks with the target task in the fine-tuning stage. We design an adaptive auxiliary loss weighting model to learn the weights of auxiliary tasks by quantifying the consistency between auxiliary tasks and the target task. In addition, we learn the weighting model through meta-learning. Our methods can be applied to various transfer learning approaches; they perform well not only in multi-task learning but also in pre-training and fine-tuning. Comprehensive experiments on multiple downstream tasks demonstrate that the proposed methods can effectively combine auxiliary tasks with the target task and significantly improve the performance compared to state-of-the-art methods.

It is important to detect anomalous inputs when deploying machine learning systems. The use of larger and more complex inputs in deep learning magnifies the difficulty of distinguishing between anomalous and in-distribution examples. At the same time, diverse image and text data are available in enormous quantities. We propose leveraging these data to improve deep anomaly detection by training anomaly detectors against an auxiliary dataset of outliers, an approach we call Outlier Exposure (OE). This enables anomaly detectors to generalize and detect unseen anomalies. In extensive experiments on natural language processing and small- and large-scale vision tasks, we find that Outlier Exposure significantly improves detection performance. We also observe that cutting-edge generative models trained on CIFAR-10 may assign higher likelihoods to SVHN images than to CIFAR-10 images; we use OE to mitigate this issue. We also analyze the flexibility and robustness of Outlier Exposure, and identify characteristics of the auxiliary dataset that improve performance.
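For a classifier, training against auxiliary outliers can be sketched as a two-term loss: the usual cross-entropy on in-distribution data, plus a term that pushes predictions on outliers toward the uniform distribution. The abstract does not spell out the objective, so the uniform-target form, the function names, and the weight `lam=0.5` below are assumptions for illustration.

```python
import numpy as np

def log_softmax(logits):
    # Numerically stable log-softmax over the last axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def outlier_exposure_loss(logits_in, labels_in, logits_out, lam=0.5):
    """In-distribution cross-entropy plus a weighted penalty on outliers."""
    logp_in = log_softmax(logits_in)
    ce_in = -logp_in[np.arange(len(labels_in)), labels_in].mean()
    # Cross-entropy from the uniform distribution to the softmax output:
    # -(1/K) * sum_k log p_k, averaged over the outlier batch.
    ce_uniform = -log_softmax(logits_out).mean(axis=-1).mean()
    return ce_in + lam * ce_uniform
```

The outlier term is smallest when the model's softmax output on an outlier is flat, so confident predictions on auxiliary outliers are penalized.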

Deep neural networks (DNNs) have been found to be vulnerable to adversarial examples resulting from adding small-magnitude perturbations to inputs. Such adversarial examples can mislead DNNs to produce adversary-selected results. Different attack strategies have been proposed to generate adversarial examples, but how to produce them more efficiently and with high perceptual quality requires further research. In this paper, we propose AdvGAN to generate adversarial examples with generative adversarial networks (GANs), which can learn and approximate the distribution of original instances. For AdvGAN, once the generator is trained, it can generate adversarial perturbations efficiently for any instance, potentially accelerating adversarial training as a defense. We apply AdvGAN in both semi-whitebox and black-box attack settings. In semi-whitebox attacks, there is no need to access the original target model after the generator is trained, in contrast to traditional white-box attacks. In black-box attacks, we dynamically train a distilled model for the black-box model and optimize the generator accordingly. Adversarial examples generated by AdvGAN on different target models have a high attack success rate under state-of-the-art defenses compared to other attacks. Our attack placed first with 92.76% accuracy on a public MNIST black-box attack challenge.

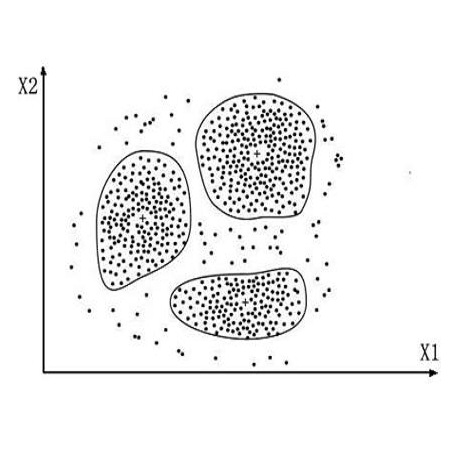

Spectral clustering is a leading and popular technique in unsupervised data analysis. Two of its major limitations are scalability and generalization of the spectral embedding (i.e., out-of-sample extension). In this paper we introduce a deep learning approach to spectral clustering that overcomes the above shortcomings. Our network, which we call SpectralNet, learns a map that embeds input data points into the eigenspace of their associated graph Laplacian matrix and subsequently clusters them. We train SpectralNet using a procedure that involves constrained stochastic optimization. Stochastic optimization allows it to scale to large datasets, while the constraints, which are implemented using a special-purpose output layer, allow us to keep the network output orthogonal. Moreover, the map learned by SpectralNet naturally generalizes the spectral embedding to unseen data points. To further improve the quality of the clustering, we replace the standard pairwise Gaussian affinities with affinities learned from unlabeled data using a Siamese network. Additional improvement can be achieved by applying the network to code representations produced, e.g., by standard autoencoders. Our end-to-end learning procedure is fully unsupervised. In addition, we apply VC dimension theory to derive a lower bound on the size of SpectralNet. State-of-the-art clustering results are reported on the Reuters dataset. Our implementation is publicly available at //github.com/kstant0725/SpectralNet.
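One way to keep a batch of network outputs orthogonal is a linear map derived from a Cholesky factorization. The abstract does not say how the special-purpose output layer works, so the Cholesky-based construction and the name `orthonormalize_batch` below are assumptions, offered only as a sketch of the constraint.

```python
import numpy as np

def orthonormalize_batch(Y):
    """Map batch outputs Y (m x k, full column rank) to Y_tilde such that
    Y_tilde.T @ Y_tilde / m equals the k x k identity matrix."""
    m = Y.shape[0]
    # Factor Y^T Y = L L^T with L lower triangular.
    L = np.linalg.cholesky(Y.T @ Y)
    # Solving L X = Y^T gives X = L^{-1} Y^T, so X^T = Y L^{-T}.
    return np.sqrt(m) * np.linalg.solve(L, Y.T).T
```

Because the map is linear in `Y`, it could in principle be applied as the final layer of a network, with the factor recomputed per batch.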