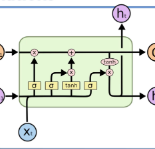

To reduce the complexity of the hardware implementation of neural network-based optical channel equalizers, we demonstrate that the performance of the biLSTM equalizer with approximated activation functions is close to that of the original model.
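The abstract does not say which approximations are used. As a minimal sketch, assuming the common piecewise-linear "hard" variants of the sigmoid and tanh gates found in an LSTM cell, the snippet below measures how far each approximation deviates from the exact function. The names `hard_sigmoid` and `hard_tanh`, the slope 0.25, and the evaluation grid are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def sigmoid(x):
    """Exact logistic sigmoid."""
    return 1.0 / (1.0 + np.exp(-x))

def hard_sigmoid(x):
    """Piecewise-linear sigmoid approximation (assumed form): clip(0.25*x + 0.5, 0, 1)."""
    return np.clip(0.25 * x + 0.5, 0.0, 1.0)

def hard_tanh(x):
    """Piecewise-linear tanh approximation (assumed form): clip(x, -1, 1)."""
    return np.clip(x, -1.0, 1.0)

# Evaluate the worst-case deviation on a grid (grid range is an arbitrary choice).
grid = np.linspace(-8.0, 8.0, 1601)
max_err_sigmoid = np.max(np.abs(hard_sigmoid(grid) - sigmoid(grid)))
max_err_tanh = np.max(np.abs(hard_tanh(grid) - np.tanh(grid)))
print(f"max |hard_sigmoid - sigmoid| = {max_err_sigmoid:.4f}")
print(f"max |hard_tanh - tanh|       = {max_err_tanh:.4f}")
```

Both approximations need only a multiply, an add, and a clamp, which is why variants of this kind are attractive in hardware; whether the equalizer tolerates the resulting error is the empirical question the abstract addresses.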

Related content

Online computation is a concept to model uncertainty where not all information on a problem instance is known in advance. An online algorithm receives requests which reveal the instance piecewise and has to respond with irrevocable decisions. Often, an adversary is assumed that constructs the instance knowing the deterministic behavior of the algorithm. Thus, the adversary is able to tailor the input to any online algorithm. From a game theoretical point of view, the adversary and the online algorithm are players in an asymmetric two-player game. To overcome this asymmetry, the online algorithm is equipped with an isomorphic copy of the graph, which is referred to as an unlabeled map. By applying the game theoretical perspective on online graph problems, where the solution is a subset of the vertices, we analyze the complexity of these online vertex subset games. For this, we introduce a framework for reducing online vertex subset games from TQBF. This framework is based on gadget reductions from 3-SATISFIABILITY to the corresponding offline problem. We further identify a set of rules for extending the 3-SATISFIABILITY-reduction and provide schemes for additional gadgets which assure that these rules are fulfilled. By extending the gadget reduction of the vertex subset problem with these additional gadgets, we obtain a reduction for the corresponding online vertex subset game. Finally, we provide example reductions for online vertex subset games based on VERTEX COVER, INDEPENDENT SET, and DOMINATING SET, proving that they are PSPACE-complete. Thus, this paper establishes that the online versions with a map of NP-complete vertex subset problems form a large class of PSPACE-complete problems.

Motivated by classical work on the numerical integration of ordinary differential equations we present a ResNet-styled neural network architecture that encodes non-expansive (1-Lipschitz) operators, as long as the spectral norms of the weights are appropriately constrained. This is to be contrasted with the ordinary ResNet architecture which, even if the spectral norms of the weights are constrained, has a Lipschitz constant that, in the worst case, grows exponentially with the depth of the network. Further analysis of the proposed architecture shows that the spectral norms of the weights can be further constrained to ensure that the network is an averaged operator, making it a natural candidate for a learned denoiser in Plug-and-Play algorithms. Using a novel adaptive way of enforcing the spectral norm constraints, we show that, even with these constraints, it is possible to train performant networks. The proposed architecture is applied to the problem of adversarially robust image classification, to image denoising, and finally to the inverse problem of deblurring.
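The abstract does not spell out the layer itself. As a hedged illustration, one standard way to obtain a non-expansive residual block is a gradient step on a convex potential, $x \mapsto x - \tau W^\top \sigma(Wx + b)$ with $\sigma = \mathrm{ReLU}$ and $0 \le \tau \le 2 / \|W\|_2^2$. That this matches the paper's architecture is an assumption. The function names below are hypothetical.

```python
import numpy as np

def spectral_norm(W):
    """Largest singular value of a 2-D weight matrix."""
    return np.linalg.norm(W, 2)

def nonexpansive_block(x, W, b, tau=None):
    """Residual step x - tau * W^T relu(W x + b) (assumed layer form).

    This is a gradient step on a convex function whose gradient is
    ||W||_2^2-Lipschitz, so it is 1-Lipschitz whenever 0 <= tau <= 2 / ||W||_2^2.
    """
    if tau is None:
        tau = 2.0 / spectral_norm(W) ** 2  # largest admissible step size
    return x - tau * (W.T @ np.maximum(W @ x + b, 0.0))

# Empirical check on random inputs: output distances never exceed input distances.
rng = np.random.default_rng(0)
W = rng.standard_normal((16, 8))
b = rng.standard_normal(16)
ratios = []
for _ in range(200):
    x, y = rng.standard_normal(8), rng.standard_normal(8)
    out_dist = np.linalg.norm(nonexpansive_block(x, W, b) - nonexpansive_block(y, W, b))
    ratios.append(out_dist / np.linalg.norm(x - y))
worst_ratio = max(ratios)
print(f"largest observed Lipschitz ratio: {worst_ratio:.4f}")
```

Composing such blocks keeps the whole network 1-Lipschitz, in contrast to the worst-case exponential growth with depth that the abstract describes for ordinary residual blocks.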

We propose Dual-Feedback Generalized Proximal Gradient Descent (DFGPGD) as a new, hardware-friendly, operator splitting algorithm. We then establish convergence guarantees under approximate computational errors and we derive theoretical criteria for the numerical stability of DFGPGD based on absolute stability of dynamical systems. We also propose a new generalized proximal ADMM that can be used to instantiate most of existing proximal-based composite optimization solvers. We implement DFGPGD and ADMM on FPGA ZCU106 board and compare them in light of FPGA's timing as well as resource utilization and power efficiency. We also perform a full-stack, application-to-hardware, comparison between approximate versions of DFGPGD and ADMM based on dynamic power/error rate trade-off, which is a new hardware-application combined metric.

Singularly perturbed problems present inherent difficulty due to the presence of a thin boundary layer in their solutions. To overcome this difficulty, we propose using deep operator networks (DeepONets), a method previously shown to be effective in approximating nonlinear operators between infinite-dimensional Banach spaces. In this paper, we demonstrate for the first time the application of DeepONets to one-dimensional singularly perturbed problems, achieving promising results that suggest their potential as a robust tool for solving this class of problems. We consider the convergence rate of the approximation error incurred by the operator networks in approximating the solution operator, and examine the generalization gap and empirical risk, all of which are shown to converge uniformly with respect to the perturbation parameter. By utilizing Shishkin mesh points as locations of the loss function, we conduct several numerical experiments that provide further support for the effectiveness of operator networks in capturing the singular boundary layer behavior.
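For readers unfamiliar with Shishkin meshes, the following is a minimal sketch of the usual piecewise-uniform construction on $[0, 1]$ with transition point $\tau = \min\{1/2,\ (\sigma \varepsilon / \beta) \ln N\}$. It assumes a single boundary layer at $x = 1$; the layer location and the default values of `sigma` and `beta` are illustrative assumptions, since the abstract does not state the problem setup.

```python
import numpy as np

def shishkin_mesh(N, eps, sigma=2.0, beta=1.0):
    """Piecewise-uniform Shishkin mesh on [0, 1] with N intervals (N even).

    Half of the intervals cover [0, 1 - tau] and half cover [1 - tau, 1],
    where tau = min(1/2, sigma * eps / beta * ln N). The layer is assumed
    to sit at x = 1; sigma and beta are problem-dependent constants.
    """
    if N % 2 != 0 or N < 2:
        raise ValueError("N must be a positive even integer")
    tau = min(0.5, sigma * eps / beta * np.log(N))
    coarse = np.linspace(0.0, 1.0 - tau, N // 2 + 1)
    fine = np.linspace(1.0 - tau, 1.0, N // 2 + 1)
    return np.concatenate([coarse, fine[1:]])  # drop the duplicated transition point

x = shishkin_mesh(N=64, eps=1e-3)
print("coarse spacing:", x[1] - x[0])
print("fine spacing:  ", x[-1] - x[-2])
```

Evaluating the loss at these points places half of the collocation locations inside the thin layer regardless of how small $\varepsilon$ is, which a uniform grid would not do.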

We prove the convergence of an incremental projection numerical scheme for the time-dependent incompressible Navier--Stokes equations, without any regularity assumption on the weak solution. The velocity and the pressure are discretised in conforming spaces, whose compatibility is ensured by the existence of an interpolator for regular functions which preserves approximate divergence-free properties. Owing to a priori estimates, we get the existence and uniqueness of the discrete approximation. Compactness properties are then proved, relying on a Lions-like lemma for time-translate estimates. It is then possible to show the convergence of the approximate solution to a weak solution of the problem. The construction of the interpolator is detailed in the case of the lowest degree Taylor-Hood finite element.

A dynamical system produces a dependent multivariate sequence, called a dynamical time series, generated by an evolution function. As the variables in the dynamical time series at the current time point usually depend on all the variables at the previous time point, existing studies forecast the variables at a future time point by estimating the evolution function. However, some variables in the dynamical time series are missing in some practical situations. In this study, we propose an autoregressive with slack time series (ARS) model. The ARS model involves the simultaneous estimation of the evolution function and the underlying missing variables as a slack time series, with the aid of the time-invariance and linearity of the dynamical system. This study empirically demonstrates the effectiveness of the proposed ARS model.

This article shows how to develop an efficient solver for a stabilized numerical space-time formulation of the advection-dominated diffusion transient equation. At the discrete space-time level, we approximate the solution by using higher-order continuous B-spline basis functions in its spatial and temporal dimensions. This problem is very difficult to solve numerically using the standard Galerkin finite element method due to artificial oscillations present when the advection term dominates the diffusion term. However, a first-order constraint least-square formulation allows us to obtain numerical solutions avoiding oscillations. The advantages of space-time formulations are the use of high-order methods and the feasibility of developing space-time mesh adaptive techniques on well-defined discrete problems. We develop a solver for a least-square formulation to obtain a stabilized and symmetric problem on finite element meshes. The computational cost of our solver is bounded by the cost of the inversion of the space-time mass and stiffness (with one value fixed at a point) matrices and the cost of the GMRES solver applied for the symmetric and positive definite problem. We illustrate our findings on an advection-dominated diffusion space-time model problem and present two numerical examples: one with isogeometric analysis discretizations and the second one with an adaptive space-time finite element method.

Hierarchical structures are popular in recent vision transformers; however, they require sophisticated designs and massive datasets to work well. In this paper, we explore the idea of nesting basic local transformers on non-overlapping image blocks and aggregating them in a hierarchical way. We find that the block aggregation function plays a critical role in enabling cross-block non-local information communication. This observation leads us to design a simplified architecture that requires minor code changes upon the original vision transformer. The benefits of the proposed judiciously-selected design are threefold: (1) NesT converges faster and requires much less training data to achieve good generalization on both ImageNet and small datasets like CIFAR; (2) when extending our key ideas to image generation, NesT leads to a strong decoder that is 8$\times$ faster than previous transformer-based generators; and (3) we show that decoupling the feature learning and abstraction processes via this nested hierarchy in our design enables constructing a novel method (named GradCAT) for visually interpreting the learned model. Source code is available at https://github.com/google-research/nested-transformer.

With the rapid increase of large-scale, real-world datasets, it becomes critical to address the problem of long-tailed data distribution (i.e., a few classes account for most of the data, while most classes are under-represented). Existing solutions typically adopt class re-balancing strategies such as re-sampling and re-weighting based on the number of observations for each class. In this work, we argue that as the number of samples increases, the additional benefit of a newly added data point will diminish. We introduce a novel theoretical framework to measure data overlap by associating with each sample a small neighboring region rather than a single point. The effective number of samples is defined as the volume of samples and can be calculated by a simple formula $(1-\beta^{n})/(1-\beta)$, where $n$ is the number of samples and $\beta \in [0,1)$ is a hyperparameter. We design a re-weighting scheme that uses the effective number of samples for each class to re-balance the loss, thereby yielding a class-balanced loss. Comprehensive experiments are conducted on artificially induced long-tailed CIFAR datasets and large-scale datasets including ImageNet and iNaturalist. Our results show that when trained with the proposed class-balanced loss, the network is able to achieve significant performance gains on long-tailed datasets.
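The formula above can be tried directly. The sketch below computes the effective number $(1-\beta^{n})/(1-\beta)$ per class and turns it into loss weights proportional to its inverse. Normalising the weights to sum to the number of classes, the function names, and the example class counts are assumptions made for illustration.

```python
import numpy as np

def effective_number(n, beta):
    """Effective number of samples (1 - beta**n) / (1 - beta), for beta in [0, 1)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    n = np.asarray(n, dtype=float)
    return (1.0 - np.power(beta, n)) / (1.0 - beta)

def class_balanced_weights(samples_per_class, beta=0.999):
    """Per-class loss weights proportional to 1 / effective_number.

    The weights are rescaled to sum to the number of classes
    (an assumed normalisation, chosen so that a balanced dataset gives all ones).
    """
    inv = 1.0 / effective_number(samples_per_class, beta)
    return inv * len(inv) / inv.sum()

# Hypothetical long-tailed class counts.
counts = [5000, 500, 50]
weights = class_balanced_weights(counts, beta=0.999)
print("effective numbers:", effective_number(counts, 0.999))
print("class weights:    ", weights)
```

With $\beta = 0$ every class has effective number 1, so there is no re-weighting, while as $\beta \to 1$ the effective number approaches $n$ and the scheme approaches inverse-frequency weighting.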

Generative Adversarial Networks (GANs) have recently achieved impressive results for many real-world applications, and many GAN variants have emerged with improvements in sample quality and training stability. However, they have not been well visualized or understood. How does a GAN represent our visual world internally? What causes the artifacts in GAN results? How do architectural choices affect GAN learning? Answering such questions could enable us to develop new insights and better models. In this work, we present an analytic framework to visualize and understand GANs at the unit-, object-, and scene-level. We first identify a group of interpretable units that are closely related to object concepts using a segmentation-based network dissection method. Then, we quantify the causal effect of interpretable units by measuring the ability of interventions to control objects in the output. We examine the contextual relationship between these units and their surroundings by inserting the discovered object concepts into new images. We show several practical applications enabled by our framework, from comparing internal representations across different layers, models, and datasets, to improving GANs by locating and removing artifact-causing units, to interactively manipulating objects in a scene. We provide open source interpretation tools to help researchers and practitioners better understand their GAN models.