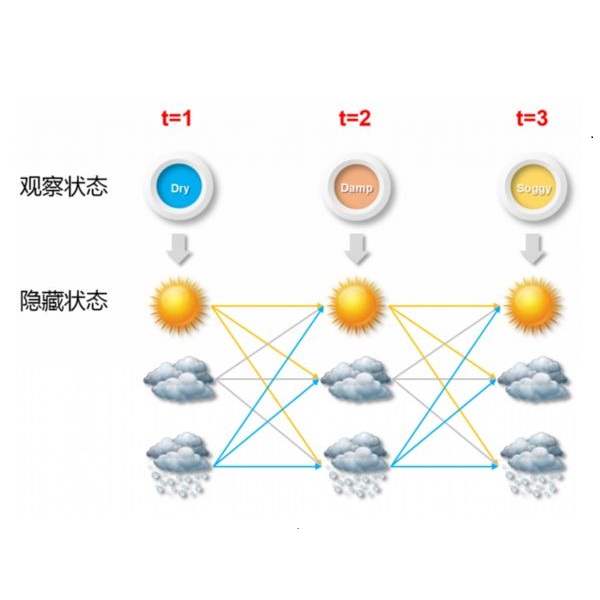

Many studies in the field of education analytics have identified student grade point average (GPA) as an important indicator and predictor of students' final academic outcomes (graduate or halt). While semester-to-semester fluctuations in GPA are considered normal, significant changes in academic performance may warrant more thorough investigation, particularly with regard to final academic outcomes. However, such an investigation is challenging because complex academic trajectories over an academic career are difficult to represent. In this study, we apply a Hidden Markov Model (HMM) to provide a standard and intuitive classification of students' academic-performance levels, which leads to a compact representation of academic-performance trajectories. Next, we explore the relationship between different academic-performance trajectories and final academic success. Our proposed HMM is trained on student transcript data from the University of Central Florida, using sequences of students' course grades for each semester. Through the HMM, our analysis confirms the expected finding that higher academic-performance levels correlate with lower halt rates. However, we also identify many scenarios in which both improving and worsening academic-performance trajectories correlate with higher graduation rates. This counter-intuitive finding is made possible by the proposed HMM.
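As a rough illustration of how an HMM can turn a sequence of per-semester grades into a compact sequence of performance levels, the sketch below runs Viterbi decoding in plain Python. The three state names, the discretized grade bands, and every probability are made-up assumptions for illustration; they are not the parameters learned from the transcript data, and the abstract does not say which decoding procedure the authors use.

```python
import math

# Hypothetical hidden performance levels and observed grade bands (assumptions).
STATES = ["low", "medium", "high"]
OBS = {"C_or_below": 0, "B": 1, "A": 2}

# Illustrative parameters only; a real model would be fitted to transcript data.
START = [0.3, 0.4, 0.3]
TRANS = [  # TRANS[p][s] = probability of moving from state p to state s
    [0.7, 0.2, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.2, 0.7],
]
EMIT = [  # EMIT[s][o] = probability of observing grade band o in state s
    [0.7, 0.2, 0.1],
    [0.2, 0.6, 0.2],
    [0.1, 0.2, 0.7],
]


def viterbi(observations):
    """Return the most likely hidden-state path for a list of grade bands."""
    obs = [OBS[o] for o in observations]
    n = len(STATES)
    # score[s] = best log-probability of any path that ends in state s
    score = [math.log(START[s]) + math.log(EMIT[s][obs[0]]) for s in range(n)]
    back = []
    for o in obs[1:]:
        prev = score
        pointers, score = [], []
        for s in range(n):
            best = max(range(n), key=lambda p: prev[p] + math.log(TRANS[p][s]))
            pointers.append(best)
            score.append(
                prev[best] + math.log(TRANS[best][s]) + math.log(EMIT[s][o])
            )
        back.append(pointers)
    # Trace back from the best final state.
    state = max(range(n), key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return [STATES[s] for s in path]


# One student's grade bands over five semesters (hypothetical data).
trajectory = viterbi(["A", "A", "B", "C_or_below", "C_or_below"])
```

The decoded `trajectory` is the kind of compact, per-semester label sequence that can then be grouped (for example, improving versus worsening) and compared against graduation outcomes.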

Related content

Runtime verification or runtime monitoring equips safety-critical cyber-physical systems to augment design assurance measures and ensure operational safety and security. Cyber-physical systems have interaction failures, attack surfaces, and attack vectors resulting in unanticipated hazards and loss scenarios. These interaction failures pose challenges to runtime verification regarding monitoring specifications and monitoring placements for in-time detection of hazards. We develop a well-formed workflow model that connects system theoretic process analysis, commonly referred to as STPA, hazard causation information to lower-level runtime monitoring to detect hazards at the operational phase. Specifically, our model follows the DepDevOps paradigm to provide evidence and insights to runtime monitoring on what to monitor, where to monitor, and the monitoring context. We demonstrate and evaluate the value of multilevel monitors by injecting hazards on an autonomous emergency braking system model.

Automated vehicles require the ability to cooperate with humans for smooth integration into today's traffic. While the concept of cooperation is well known, developing a robust and efficient cooperative trajectory planning method is still a challenge. One aspect of this challenge is the uncertainty surrounding the state of the environment due to limited sensor accuracy. This uncertainty can be represented by a Partially Observable Markov Decision Process. Our work addresses this problem by extending an existing cooperative trajectory planning approach based on Monte Carlo Tree Search for continuous action spaces. It does so by explicitly modeling uncertainties in the form of a root belief state, from which start states for trees are sampled. After the trees have been constructed with Monte Carlo Tree Search, their results are aggregated into return distributions using kernel regression. We apply two risk metrics for the final selection, namely a Lower Confidence Bound and a Conditional Value at Risk. We demonstrate that integrating risk metrics into the final selection policy consistently outperforms a baseline in uncertain environments, generating considerably safer trajectories.
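To make the two risk metrics concrete, the sketch below computes a Lower Confidence Bound and a Conditional Value at Risk from sampled returns and uses each to pick an action. It is a minimal illustration under stated assumptions: the sample values, the weight `k`, and the tail fraction `alpha` are hypothetical, the returns are plain lists rather than the kernel-regression estimates described above, and the exact definitions used in the paper may differ.

```python
import statistics


def lower_confidence_bound(returns, k=1.0):
    """Mean minus k standard deviations; k is a hypothetical risk-aversion weight."""
    return statistics.fmean(returns) - k * statistics.pstdev(returns)


def conditional_value_at_risk(returns, alpha=0.2):
    """Average of the worst alpha-fraction of the sampled returns (lower tail)."""
    ordered = sorted(returns)
    tail = max(1, int(alpha * len(ordered)))
    return statistics.fmean(ordered[:tail])


# Hypothetical sampled returns for two candidate actions.
candidates = {
    "steady": [1.0, 1.1, 0.9, 1.0, 1.0],
    "risky": [2.0, 2.2, 1.9, 2.1, -3.0],
}

# Risk-neutral selection looks only at the mean return.
best_by_mean = max(candidates, key=lambda a: statistics.fmean(candidates[a]))

# Risk-aware selection penalizes spread (LCB) or bad tails (CVaR).
best_by_lcb = max(candidates, key=lambda a: lower_confidence_bound(candidates[a]))
best_by_cvar = max(candidates, key=lambda a: conditional_value_at_risk(candidates[a]))
```

With these made-up samples, the mean favors the action with the occasional very bad outcome, while both risk metrics prefer the steadier one, which is the intuition behind a risk-aware final selection.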

Semantic place annotation can provide individual semantics, which can be of great help in the field of trajectory data mining. Most existing methods rely on annotated or external data and require retraining following a change of region, which prevents their large-scale application. Herein, we propose an unsupervised method, denoted UPAPP, for the semantic place annotation of trajectories using spatiotemporal information. The Bayesian Criterion is employed to decompose the spatiotemporal probability of the candidate place into spatial probability, duration probability, and visiting time probability. Spatial information in ROI and POI data is then used to calculate the spatial probability. For the temporal probabilities, the Term Frequency Inverse Document Frequency weighting algorithm is used to count the potential visits to different place types in the trajectories and to generate the prior probabilities of the visiting time and duration. The spatiotemporal probability of the candidate place is then combined with the importance of the place category to annotate the visited places. Validation with a trajectory dataset collected by 709 volunteers in Beijing showed that our method achieved an overall and average accuracy of 0.712 and 0.720, respectively, indicating that the visited places can be annotated accurately without any external data.

Photonic accelerators have been intensively studied to provide enhanced information processing capability by exploiting the unique attributes of physical processes. Recently, it has been reported that chaotically oscillating ultrafast time series from a laser, called laser chaos, can solve multi-armed bandit (MAB) problems, or decision-making problems, at GHz order. Furthermore, it has been confirmed that the negatively correlated time-domain structure of laser chaos contributes to the acceleration of decision-making. However, the underlying mechanism by which correlated time series accelerate decision-making is unknown. In this paper, we present a theoretical model that accounts for the acceleration of decision-making by correlated time sequences. We first confirm the effectiveness of the negative autocorrelation inherent in time series for solving two-armed bandit problems using Fourier transform surrogate methods. We then propose a theoretical model, inspired by correlated random walks, that treats the correlated time series fed to the decision-making system and the internal status of that system in a unified manner. We demonstrate that the performance derived analytically from the theory agrees well with numerical simulations, which confirms the validity of the proposed model and leads to optimal system design. The present study paves a new way for exploiting correlated time series in decision-making, impacting artificial intelligence and other applications.

Implicit bias may perpetuate healthcare disparities for marginalized patient populations. Such bias is expressed in communication between patients and their providers. We design an ecosystem with guidance from providers to make this bias explicit in patient-provider communication. Our end users are providers seeking to improve their quality of care for patients who are Black, Indigenous, People of Color (BIPOC) and/or Lesbian, Gay, Bisexual, Transgender, and Queer (LGBTQ). We present wireframes displaying communication metrics that negatively impact patient-centered care divided into the following categories: digital nudge, dashboard, and guided reflection. Our wireframes provide quantitative, real-time, and conversational feedback promoting provider reflection on their interactions with patients. This is the first design iteration toward the development of a tool to raise providers' awareness of their own implicit biases.

Context: Forgetting is defined as a gradual process of losing information. Even though there are many studies demonstrating the effect of forgetting in software development, to the best of our knowledge, no study explores the impact of forgetting in software development using a controlled experiment approach. Objective: We would like to provide insights on the impact of forgetting in software development projects. We want to examine whether the recency and frequency of interaction impact forgetting in software development. Methods: We will conduct an experiment that examines the impact of forgetting in software development. Participants will first do an initial task. According to their initial task performance, they will be assigned to either the experiment or the control group. The experiment group will then do two additional tasks to enhance their exposure to the code. Both groups will then do a final task to see if additional exposure to the code benefits the experiment group's performance in the final task. Finally, we will conduct a survey and a recall task with the same participants to collect data about their perceptions of forgetting and quantify their memory performance, respectively.

Autoscaling is a critical component for efficient resource utilization with satisfactory quality of service (QoS) in cloud computing. This paper investigates proactive autoscaling for widely used scaling-per-query applications, where scaling is required for each query, such as container registry and function-as-a-service (FaaS). In these scenarios, the workload often exhibits high uncertainty with complex temporal patterns such as periodicity, noise, and outliers. Conservative strategies that scale out unnecessarily many instances lead to high resource costs, whereas aggressive strategies may result in poor QoS. We present RobustScaler to achieve a superior trade-off between cost and QoS. Specifically, we design a novel autoscaling framework based on non-homogeneous Poisson process (NHPP) modeling and stochastically constrained optimization. Furthermore, we develop a specialized alternating direction method of multipliers (ADMM) to efficiently train the NHPP model, and rigorously prove the QoS guarantees delivered by our optimization-based proactive strategies. Extensive experiments show that RobustScaler outperforms common baseline autoscaling strategies on various real-world traces, with large margins for complex workload patterns.
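For readers unfamiliar with non-homogeneous Poisson processes, the sketch below samples query arrival times from an NHPP using the standard thinning method. It only illustrates the workload model: the periodic `intensity` function, its constants, and the helper name `sample_nhpp` are hypothetical, and the code does not reproduce RobustScaler's ADMM-based training or its constrained optimization.

```python
import math
import random


def intensity(t):
    """Hypothetical periodic arrival rate in queries per second (60 s period)."""
    return 2.0 + 1.5 * math.sin(2 * math.pi * t / 60.0)


def sample_nhpp(rate, rate_max, horizon, rng):
    """Sample arrival times on [0, horizon) by thinning a homogeneous process.

    rate_max must be an upper bound on rate(t) over the whole horizon.
    """
    arrivals = []
    t = 0.0
    while True:
        # Candidate arrivals come from a homogeneous process with rate rate_max.
        t += rng.expovariate(rate_max)
        if t >= horizon:
            return arrivals
        # Keep each candidate with probability rate(t) / rate_max.
        if rng.random() < rate(t) / rate_max:
            arrivals.append(t)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
arrivals = sample_nhpp(intensity, rate_max=3.5, horizon=120.0, rng=rng)
```

A proactive autoscaler built on such a model would use the fitted intensity to decide how many instances to start ahead of the expected arrivals, trading idle cost against the risk of queries waiting.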

Alerts are crucial for requesting prompt human intervention upon cloud anomalies. The quality of alerts significantly affects cloud reliability and the cloud provider's business revenue. In practice, we observe on-call engineers being hindered from quickly locating and fixing faulty cloud services because of the large number of misleading, non-informative, non-actionable alerts. We call the ineffectiveness of alerts "anti-patterns of alerts". To better understand the anti-patterns of alerts and provide actionable measures to mitigate them, in this paper, we conduct the first empirical study on the practices of mitigating anti-patterns of alerts in an industrial cloud system. We study the alert strategies and the alert processing procedure at Huawei Cloud, a leading cloud provider. Our study combines a quantitative analysis of millions of alerts over two years with a survey of eighteen experienced engineers. As a result, we summarize four individual anti-patterns and two collective anti-patterns of alerts. We also summarize four current reactions to mitigate the anti-patterns of alerts, and general preventative guidelines for the configuration of alert strategies. Lastly, we propose to explore the automatic evaluation of the Quality of Alerts (QoA), including the indicativeness, precision, and handleability of alerts, as a future research direction that assists in the automatic detection of alerts' anti-patterns. The findings of our study are valuable for optimizing cloud monitoring systems and improving the reliability of cloud services.

Reinforcement learning is one of the core components in designing an artificially intelligent system that emphasizes real-time response. Reinforcement learning drives the system to take actions within an arbitrary environment, with or without previous knowledge of the environment model. In this paper, we present a comprehensive study of reinforcement learning focusing on various dimensions, including challenges, the recent development of different state-of-the-art techniques, and future directions. The fundamental objective of this paper is to provide a framework for presenting the available methods of reinforcement learning that is informative enough and simple to follow for new researchers and academics in this domain, considering the latest concerns. First, we illustrate the core techniques of reinforcement learning in an easily understandable and comparable way. Finally, we analyze and depict the recent developments in reinforcement learning approaches. Our analysis points out that most of the models focus on tuning policy values rather than tuning other things in a particular state of reasoning.

Driven by the visions of the Internet of Things and 5G communications, edge computing systems integrate computing, storage, and network resources at the edge of the network to provide computing infrastructure, enabling developers to quickly develop and deploy edge applications. Edge computing systems have received widespread attention in both industry and academia. To explore new research opportunities and assist users in selecting suitable edge computing systems for specific applications, this survey paper provides a comprehensive overview of the existing edge computing systems and introduces representative projects. A comparison of open source tools is presented according to their applicability. Finally, we highlight energy efficiency and deep learning optimization of edge computing systems. Open issues for analyzing and designing an edge computing system are also studied in this survey.