Unmanned Aerial Vehicles (UAVs) have attracted interest from people in all walks of life because of their pervasive computing capabilities. A UAV equipped with vision techniques can use them to achieve autonomous navigation and control. In addition, object detection from UAVs can broaden the use of drones to provide ubiquitous surveillance and monitoring services for military operations, urban administration, and agricultural management. As data-driven technologies have evolved, machine learning algorithms, especially deep learning approaches, have been used intensively to solve traditional computer vision research problems. Modern Convolutional Neural Network (CNN) based object detectors can be divided into two major categories: one-stage and two-stage object detectors. In this study, we apply several representative CNN-based object detectors to the Stanford Drone Dataset (SDD). The RetinaNet-based approach, a dense detector trained with focal loss, achieves state-of-the-art performance for fast and accurate object detection from UAVs.
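
The focal loss mentioned above can be illustrated with a minimal sketch. It assumes the standard binary form, FL(p_t) = -alpha_t (1 - p_t)^gamma log(p_t). The function names and the default values gamma = 2 and alpha = 0.25 are illustrative assumptions and are not taken from this abstract.

```python
import math


def cross_entropy(p, y):
    """Plain binary cross-entropy for one prediction.

    p: predicted probability of the positive class (0 < p < 1)
    y: ground-truth label, 1 (object) or 0 (background)
    """
    p_t = p if y == 1 else 1.0 - p
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one prediction (illustrative defaults).

    The factor (1 - p_t) ** gamma shrinks the loss of well-classified
    examples, so the many easy background anchors of a dense detector
    contribute less to training than the hard examples.
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    # An easy positive (p = 0.9) is down-weighted far more than a hard one (p = 0.1).
    for p in (0.9, 0.1):
        print(p, cross_entropy(p, 1), focal_loss(p, 1))
```

With gamma set to 0 the modulating factor disappears and the expression reduces to alpha-weighted cross-entropy, which is a convenient sanity check for any implementation.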

Related content

Deep learning has been successfully applied to solve various complex problems ranging from big data analytics to computer vision and human-level control. Advances in deep learning, however, have also been used to create software that can threaten privacy, democracy, and national security. One such deep learning-powered application that has recently emerged is "deepfake". Deepfake algorithms can create fake images and videos that humans cannot distinguish from authentic ones. Technologies that can automatically detect and assess the integrity of digital visual media are therefore indispensable. This paper presents a survey of algorithms used to create deepfakes and, more importantly, of methods proposed in the literature to date to detect them. We present extensive discussions of challenges, research trends, and directions related to deepfake technologies. By reviewing the background of deepfakes and state-of-the-art deepfake detection methods, this study provides a comprehensive overview of deepfake techniques and facilitates the development of new and more robust methods to deal with increasingly challenging deepfakes.

The task of detecting 3D objects in point clouds plays a pivotal role in many real-world applications. However, 3D object detection performance lags behind that of 2D object detection because of the lack of powerful 3D feature extraction methods. To address this issue, we propose a 3D backbone network that learns rich 3D feature maps by using sparse 3D CNN operations for 3D object detection in point clouds. The 3D backbone network can inherently learn 3D features from almost raw data, without compressing the point cloud into multiple 2D images, and generate rich feature maps for object detection. The sparse 3D CNN takes full advantage of the sparsity of the 3D point cloud to accelerate computation and save memory, which makes the 3D backbone network feasible. Experiments on the KITTI benchmark show that the proposed method achieves state-of-the-art performance for 3D object detection.

Unmanned Aerial Vehicles are increasingly being used in surveillance and traffic monitoring thanks to their high mobility and ability to cover areas at different altitudes and locations. One of the major challenges is to use aerial images to accurately detect and count cars in real time for traffic monitoring purposes. Several deep learning techniques based on convolutional neural networks (CNNs) were recently proposed for real-time classification and recognition in computer vision. However, their performance depends on the scenarios in which they are used. In this paper, we investigate the performance of two state-of-the-art CNN algorithms, namely Faster R-CNN and YOLOv3, in the context of car detection from aerial images. We trained and tested these two models on a large car dataset taken from UAVs. We demonstrate that YOLOv3 outperforms Faster R-CNN in sensitivity and processing time, although the two are comparable in precision.
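
The two metrics compared above, sensitivity (also called recall) and precision, can be computed from detection counts as in the sketch below. The function name and the counts in the usage lines are hypothetical and are not results from the paper.

```python
def precision_and_sensitivity(true_pos, false_pos, false_neg):
    """Return (precision, sensitivity) from detection counts.

    precision   = TP / (TP + FP): share of reported cars that are real cars
    sensitivity = TP / (TP + FN): share of real cars that were detected
    A zero denominator yields 0.0 to avoid division by zero.
    """
    detected = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / detected if detected else 0.0
    sensitivity = true_pos / actual if actual else 0.0
    return precision, sensitivity


if __name__ == "__main__":
    # Hypothetical counts: two detectors with equal precision but different sensitivity.
    print(precision_and_sensitivity(90, 10, 30))
    print(precision_and_sensitivity(108, 12, 12))
```

Two detectors can therefore share the same precision while differing in sensitivity, which is the kind of situation the abstract describes.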

To optimize fruit production, a portion of the flowers and fruitlets of apple trees must be removed early in the growing season. The proportion to be removed is determined by the bloom intensity, i.e., the number of flowers present in the orchard. Several automated computer vision systems have been proposed to estimate bloom intensity, but their overall performance is still far from satisfactory even in relatively controlled environments. With the goal of devising a flower identification technique that is robust to clutter and to changes in illumination, this paper presents a method in which a pre-trained convolutional neural network is fine-tuned to become especially sensitive to flowers. Experimental results on a challenging dataset demonstrate that our method significantly outperforms three approaches that represent the state of the art in flower detection, with recall and precision rates higher than $90\%$. Moreover, a performance assessment on three additional datasets previously unseen by the network, which consist of different flower species and were acquired under different conditions, reveals that the proposed method far surpasses baseline approaches in terms of generalization capability.

Object detection is a fundamental and challenging problem in aerial and satellite image analysis. Recently, the two-stage detector Faster R-CNN was proposed and shown to be a promising tool for object detection in optical remote sensing images. However, objects in remote sensing images range from sparse to dense, which makes detection complex. It is unreasonable to treat all images with the same region proposal strategy, and doing so limits the performance of two-stage detectors. In this paper, we propose a novel and effective approach, named deep adaptive proposal network (DAPNet), that addresses this complexity by learning a new category prior network (CPN) on the basis of the existing Faster R-CNN architecture. Moreover, the candidate regions produced by the DAPNet model differ from those of the traditional region proposal network (RPN): DAPNet predicts the detailed category of each candidate region. These candidate regions are combined with the object number generated by the category prior network to obtain a suitable number of candidate boxes for each image. These candidate boxes can satisfy detection tasks in both sparse and dense scenes. The performance of the proposed framework has been evaluated on the challenging NWPU VHR-10 data set. Experimental results demonstrate the superiority of the proposed framework over the state of the art.

Although it has long been believed that modeling relations between objects would help object recognition, there has been no evidence that the idea works in the deep learning era. All state-of-the-art object detection systems still rely on recognizing object instances individually, without exploiting their relations during learning. This work proposes an object relation module. It processes a set of objects simultaneously through interaction between their appearance features and geometry, thus allowing their relations to be modeled. It is lightweight and in-place. It does not require additional supervision and is easy to embed in existing networks. It is shown to be effective in improving the object recognition and duplicate removal steps in the modern object detection pipeline. It verifies the efficacy of modeling object relations in CNN-based detection. It gives rise to the first fully end-to-end object detector.
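
For context on the duplicate removal step, the sketch below shows conventional greedy non-maximum suppression (NMS), the hand-crafted procedure that detection pipelines typically use for this step. It is not the relation module proposed in this abstract. The box format `(x1, y1, x2, y2)`, the function names, and the 0.5 threshold are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes kept after greedy NMS.

    Boxes are visited from highest to lowest score; a box is dropped
    when it overlaps an already kept box by more than iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))
```

Because each box is judged only by overlap and score, this step is independent of the rest of the network, which is one reason a learned replacement is needed for a fully end-to-end detector.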

Vision-based vehicle detection approaches have achieved remarkable success in recent years with the development of deep convolutional neural networks (CNNs). However, existing CNN-based algorithms suffer from the problem that convolutional features are scale-sensitive in object detection, while traffic images and videos commonly contain vehicles with a large variance of scales. In this paper, we delve into the source of scale sensitivity and reveal two key issues: 1) existing RoI pooling destroys the structure of small-scale objects, and 2) the large intra-class distance caused by a large variance of scales exceeds the representation capability of a single network. Based on these findings, we present a scale-insensitive convolutional neural network (SINet) for fast detection of vehicles with a large variance of scales. First, we present a context-aware RoI pooling to maintain the contextual information and original structure of small-scale objects. Second, we present a multi-branch decision network to minimize the intra-class distance of features. These lightweight techniques add no extra time complexity yet markedly improve detection accuracy. The proposed techniques can be integrated into any deep network architecture while keeping it trainable end-to-end. Our SINet achieves state-of-the-art performance in terms of accuracy and speed (up to 37 FPS) on the KITTI benchmark and on a new highway dataset, which contains a large variance of scales and extremely small objects.

Recent CNN-based object detectors, whether one-stage methods like YOLO, SSD, and RetinaNet or two-stage detectors like Faster R-CNN, R-FCN, and FPN, are usually fine-tuned directly from ImageNet pre-trained models designed for image classification. There has been little work on backbone feature extractors designed specifically for object detection. More importantly, there are several differences between the tasks of image classification and object detection. First, recent object detectors like FPN and RetinaNet usually involve extra stages, compared with image classification networks, to handle objects of various scales. Second, object detection needs not only to recognize the category of object instances but also to locate them spatially. A large downsampling factor brings a large valid receptive field, which is good for image classification but compromises the ability to localize objects. Because of this gap between image classification and object detection, we propose DetNet in this paper, a novel backbone network specifically designed for object detection. DetNet includes the extra stages missing from traditional backbone networks for image classification while maintaining high spatial resolution in deeper layers. Without any bells and whistles, state-of-the-art results have been obtained for both object detection and instance segmentation on the MSCOCO benchmark based on our DetNet (4.8G FLOPs) backbone. The code will be released for reproduction.
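
The trade-off between downsampling factor and localization can be made concrete with a small arithmetic sketch. The 512x512 input, the 24-pixel object, and the strides of 16 and 32 are hypothetical values chosen for illustration; the abstract does not state them, and the function names are likewise assumptions.

```python
def feature_map_size(height, width, stride):
    """Spatial size of a feature map downsampled by `stride` (floor division)."""
    return height // stride, width // stride


def cells_spanned(object_size_px, stride):
    """Number of feature-map cells covered along one side of an object."""
    return object_size_px / stride


if __name__ == "__main__":
    # Hypothetical 512x512 input containing a 24-pixel-wide object.
    for stride in (16, 32):
        size = feature_map_size(512, 512, stride)
        print(stride, size, cells_spanned(24, stride))
```

At the larger stride the object covers less than one feature-map cell along each side, so its position is represented only coarsely, whereas the smaller stride keeps it spread over more than one cell.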

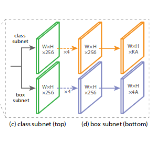

Object detection is a major challenge in computer vision, involving both object classification and object localization within a scene. While deep neural networks have been shown in recent years to yield very powerful techniques for object detection, one of the biggest obstacles to deploying such networks widely on embedded devices is their high computational and memory requirements. Recently, there has been an increasing focus on exploring small deep neural network architectures for object detection that are more suitable for embedded devices, such as Tiny YOLO and SqueezeDet. Inspired by the efficiency of the Fire microarchitecture introduced in SqueezeNet and the object detection performance of the single-shot detection macroarchitecture introduced in SSD, this paper introduces Tiny SSD, a single-shot detection deep convolutional neural network for real-time embedded object detection. Tiny SSD is composed of a highly optimized, non-uniform Fire sub-network stack and a non-uniform sub-network stack of highly optimized SSD-based auxiliary convolutional feature layers, designed specifically to minimize model size while maintaining object detection performance. The resulting Tiny SSD possesses a model size of 2.3MB (~26X smaller than Tiny YOLO) while still achieving an mAP of 61.3% on VOC 2007 (~4.2% higher than Tiny YOLO). These experimental results show that very small deep neural network architectures can be designed for real-time object detection that are well suited to embedded scenarios.

Faster RCNN has achieved great success in generic object detection, including PASCAL object detection and MS COCO object detection. In this report, we propose a carefully designed Faster RCNN method named FDNet1.0 for face detection. Several techniques are employed, including multi-scale training, multi-scale testing, a light-designed RCNN, some tricks for inference, and a vote-based ensemble method. Our method achieves two 1st places and one 2nd place in three tasks on the WIDER FACE validation dataset (easy set, medium set, hard set).