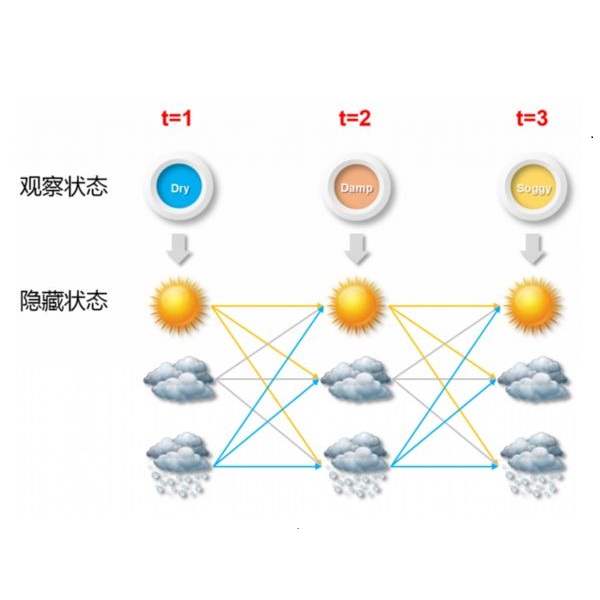

Spurred in part by the ever-growing number of sensors and web-based methods of collecting data, the use of Intensive Longitudinal Data (ILD) is becoming more common in the social and behavioural sciences. The ILD collected in this field are often hypothesised to be the result of latent states (e.g. behaviour, emotions), and the promise of ILD lies in its ability to capture the dynamics of these states as they unfold in time. In particular, by collecting data for multiple subjects, researchers can observe how such dynamics differ between subjects. The Bayesian Multilevel Hidden Markov Model (mHMM) is a relatively novel model that is suited to modelling ILD of this kind while taking into account heterogeneity between subjects. While the mHMM has been applied in a variety of settings, large-scale studies that examine the required sample size for this model are lacking. In this paper, we address this research gap by conducting a simulation study to evaluate the effect of changing (1) the number of subjects, (2) the number of occasions, and (3) the between-subject variability on parameter estimates obtained by the mHMM. We frame this simulation study in the context of sleep research, where the data are multivariate and continuous and display considerable overlap in the state-dependent component distributions. In addition, we generate a set of baseline scenarios with more general data properties. Overall, the number of subjects has the largest effect on model performance. However, the number of occasions is important to adequately model latent state transitions. We discuss how the characteristics of the data influence parameter estimation and provide recommendations to researchers seeking to apply the mHMM to their own data.
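
The three design factors varied in the simulation study (number of subjects, number of occasions, between-subject variability) can be illustrated with a minimal data-generating sketch. This is not the authors' simulation design: the two-state structure, the group-level means, the transition matrix, the unit emission variance, and the function name `simulate_mhmm` are all hypothetical choices made for illustration. As a further simplification, only the state-dependent means vary between subjects here, while the transition matrix is shared.

```python
import random


def simulate_mhmm(n_subjects, n_occasions, between_sd, seed=0):
    """Simulate toy two-state multilevel HMM data (illustrative assumptions only)."""
    rng = random.Random(seed)
    group_means = [0.0, 3.0]            # hypothetical group-level state means
    trans = [[0.9, 0.1], [0.2, 0.8]]    # hypothetical transition matrix (shared)
    data = []
    for _ in range(n_subjects):
        # Subject-specific means scatter around the group-level means;
        # between_sd controls the between-subject variability.
        means = [rng.gauss(m, between_sd) for m in group_means]
        state = rng.randrange(2)        # uniform initial state
        states, obs = [], []
        for _ in range(n_occasions):
            states.append(state)
            # Gaussian emission with unit variance around the current state's mean.
            obs.append(rng.gauss(means[state], 1.0))
            # Move to the next latent state according to the transition matrix.
            state = 0 if rng.random() < trans[state][0] else 1
        data.append({"means": means, "states": states, "obs": obs})
    return data


if __name__ == "__main__":
    sample = simulate_mhmm(n_subjects=5, n_occasions=20, between_sd=0.5)
    print(len(sample), len(sample[0]["obs"]))
```

Increasing `n_subjects` adds more draws from the group-level distribution, whereas increasing `n_occasions` adds more observed transitions per subject, which is one way to see why the two factors can affect different parameters.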

Related content

The approximate uniform sampling of graph realizations with a given degree sequence is an everyday task in many social science, computer science, and engineering projects. One approach is to use Markov chains. The best currently available result about the well-studied switch Markov chain is that it is rapidly mixing on P-stable degree sequences (see DOI:10.1016/j.ejc.2021.103421). The switch Markov chain does not change the degree sequence. However, there are cases where degree intervals are specified rather than a single degree sequence. (A natural scenario where this problem arises is in hypothesis testing on social networks that are only partially observed.) In 2018, Rechner, Strowick, and Müller-Hannemann introduced the notion of the degree interval Markov chain, which uses three (separately well-studied) local operations (switch, hinge-flip and toggle) and operates on degree sequence realizations where any two sequences under scrutiny have very small coordinate-wise distance. Recently Amanatidis and Kleer published a beautiful paper (arXiv:2110.09068), showing that the degree interval Markov chain is rapidly mixing if the sequences come from a system of very thin intervals which are centered not far from a regular degree sequence. In this paper we substantially extend their result, showing that the degree interval Markov chain is rapidly mixing if the intervals are centered at P-stable degree sequences.
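
To make the switch operation concrete, the following is a minimal sketch of one step of a switch chain on a simple undirected graph, using the standard textbook description of a switch: two edges are chosen and their endpoints re-paired, and the move is rejected if it would create a loop or a parallel edge. The edge representation (sorted vertex pairs) and the function names `switch_step` and `degrees` are assumptions for illustration. The hinge-flip and toggle operations of the degree interval chain are not shown.

```python
import random
from collections import Counter


def degrees(edges):
    """Return the degree of every vertex as a dict."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return dict(deg)


def switch_step(edges, rng):
    """Attempt one switch; return the new edge set, or the old one if rejected."""
    e1, e2 = rng.sample(sorted(edges), 2)
    (a, b), (c, d) = e1, e2
    if rng.random() < 0.5:
        c, d = d, c  # pick one of the two possible re-pairings of endpoints
    if len({a, b, c, d}) < 4:
        return edges  # the edges share a vertex: reject
    new1, new2 = tuple(sorted((a, d))), tuple(sorted((c, b)))
    if new1 in edges or new2 in edges:
        return edges  # would create a parallel edge: reject
    # Replace {a,b}, {c,d} by {a,d}, {c,b}; every vertex keeps its degree.
    return (edges - {e1, e2}) | {new1, new2}


if __name__ == "__main__":
    rng = random.Random(0)
    graph = frozenset({(0, 1), (1, 2), (2, 3), (3, 4), (4, 5), (0, 5)})
    for _ in range(100):
        graph = switch_step(graph, rng)
    print(sorted(graph), degrees(graph))
```

Because each accepted move removes one edge at each of the four vertices and adds one back, the degree sequence is invariant, which is exactly why a different set of moves is needed when degrees are allowed to vary within intervals.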

Truncated densities are probability density functions defined on truncated domains. They share the same parametric form with their non-truncated counterparts up to a normalizing constant. Since the computation of their normalizing constants is usually infeasible, Maximum Likelihood Estimation cannot be easily applied to estimate truncated density models. Score Matching (SM) is a powerful tool for fitting parameters using only unnormalized models. However, it cannot be directly applied here, as the boundary conditions used to derive a tractable SM objective are not satisfied by truncated densities. In this paper, we study parameter estimation for truncated probability densities using SM. The estimator minimizes a weighted Fisher divergence. The weight function is simply the shortest distance from a data point to the boundary of the domain. We show that this choice of weight function arises naturally from minimizing the Stein discrepancy as well as from upper-bounding the finite-sample estimation error. The usefulness of our method is demonstrated by numerical experiments and a study on the Chicago crime data set. We also show that the proposed density estimation can correct the outlier-trimming bias caused by aggressive outlier detection methods.
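
As a small illustration of the weight function, the sketch below computes the shortest distance from a point to the boundary of the domain in the special case of an axis-aligned box. The box-shaped domain and the function name `distance_to_box_boundary` are assumptions made here; the abstract does not restrict the domain to this shape, and the estimator itself is not reproduced.

```python
def distance_to_box_boundary(x, lower, upper):
    """Shortest Euclidean distance from an interior point x to the boundary of a box.

    The box is the product of the intervals [lower[i], upper[i]].
    """
    if not all(lo <= xi <= hi for xi, lo, hi in zip(x, lower, upper)):
        raise ValueError("x must lie inside the box")
    # For a point inside a box, the nearest boundary point lies on the
    # closest face, so the distance is the smallest per-coordinate margin.
    return min(min(xi - lo, hi - xi) for xi, lo, hi in zip(x, lower, upper))


if __name__ == "__main__":
    print(distance_to_box_boundary([0.5, 0.5], [0.0, 0.0], [1.0, 1.0]))
```

The weight vanishes on the boundary itself, so points near the truncation edge contribute little to the weighted objective.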

The variational autoencoder (VAE) is a popular deep latent variable model used to analyse high-dimensional datasets by learning a low-dimensional latent representation of the data. It simultaneously learns a generative model and an inference network to perform approximate posterior inference. Recently proposed extensions to VAEs that can handle temporal and longitudinal data have applications in healthcare, behavioural modelling, and predictive maintenance. However, these extensions do not account for heterogeneous data (i.e., data comprising continuous and discrete attributes), which is common in many real-life applications. In this work, we propose the heterogeneous longitudinal VAE (HL-VAE) that extends the existing temporal and longitudinal VAEs to heterogeneous data. HL-VAE provides efficient inference for high-dimensional datasets and includes likelihood models for continuous, count, categorical, and ordinal data while accounting for missing observations. We demonstrate our model's efficacy through simulated as well as clinical datasets, and show that our proposed model achieves competitive performance in missing value imputation and predictive accuracy.

Common tasks encountered in epidemiology, including disease incidence estimation and causal inference, rely on predictive modeling. Constructing a predictive model can be thought of as learning a prediction function, i.e., a function that takes as input covariate data and outputs a predicted value. Many strategies for learning these functions from data are available, from parametric regressions to machine learning algorithms. It can be challenging to choose an approach, as it is impossible to know in advance which one is the most suitable for a particular dataset and prediction task at hand. The super learner (SL) is an algorithm that alleviates concerns over selecting the one "right" strategy while providing the freedom to consider many of them, such as those recommended by collaborators, used in related research, or specified by subject-matter experts. It is an entirely pre-specified and data-adaptive strategy for predictive modeling. To ensure the SL is well-specified for learning the prediction function, the analyst does need to make a few important choices. In this Education Corner article, we provide step-by-step guidelines for making these choices, walking the reader through each of them and providing intuition along the way. In doing so, we aim to empower the analyst to tailor the SL specification to their prediction task, thereby ensuring their SL performs as well as possible. A flowchart provides a concise, easy-to-follow summary of key suggestions and heuristics, based on our accumulated experience, and guided by theory.

Data collection and research methodology represent a critical part of the research pipeline. On the one hand, it is important that we collect data in a way that maximises the validity of what we are measuring, which may involve the use of long scales with many items. On the other hand, collecting a large number of items across multiple scales results in participant fatigue and in expensive, time-consuming data collection. It is therefore important that we use the available resources optimally. In this work, we consider how attention to theory and the associated causal/structural model can help us to streamline data collection procedures by not wasting time collecting data for variables which are not causally critical for subsequent analysis. This not only saves time and enables us to redirect resources to other variables which are more important, but also increases research transparency and the reliability of theory testing. In order to achieve this streamlined data collection, we leverage structural models and the Markov conditional independence structures implicit in these models to identify the substructures which are critical for answering a particular research question. In this work, we review the relevant concepts and present a number of didactic examples with the hope that psychologists can use these techniques to streamline their data collection process without invalidating the subsequent analysis. We provide a number of simulation results to demonstrate the limited analytical impact of this streamlining.

In this work, we study the transfer learning problem under high-dimensional generalized linear models (GLMs), which aims to improve the fit on target data by borrowing information from useful source data. When the sources to transfer are known, we propose a transfer learning algorithm on GLM, and derive its $\ell_1/\ell_2$-estimation error bounds as well as a bound for a prediction error measure. The theoretical analysis shows that when the target and source are sufficiently close to each other, these bounds could be improved over those of the classical penalized estimator using only target data under mild conditions. When we do not know which sources to transfer, an algorithm-free transferable source detection approach is introduced to detect informative sources. The detection consistency is proved under the high-dimensional GLM transfer learning setting. We also propose an algorithm to construct confidence intervals of each coefficient component, and the corresponding theories are provided. Extensive simulations and a real-data experiment verify the effectiveness of our algorithms. We implement the proposed GLM transfer learning algorithms in a new R package glmtrans, which is available on CRAN.

Dynamic Linear Models (DLMs) are commonly employed for time series analysis due to their versatile structure, simple recursive updating, ability to handle missing data, and probabilistic forecasting. However, the options for count time series are limited: Gaussian DLMs require continuous data, while Poisson-based alternatives often lack sufficient modeling flexibility. We introduce a novel semiparametric methodology for count time series by warping a Gaussian DLM. The warping function has two components: a (nonparametric) transformation operator that provides distributional flexibility and a rounding operator that ensures the correct support for the discrete data-generating process. We develop conjugate inference for the warped DLM, which enables analytic and recursive updates for the state space filtering and smoothing distributions. We leverage these results to produce customized and efficient algorithms for inference and forecasting, including Monte Carlo simulation for offline analysis and an optimal particle filter for online inference. This framework unifies and extends a variety of discrete time series models and is valid for natural counts, rounded values, and multivariate observations. Simulation studies illustrate the excellent forecasting capabilities of the warped DLM. The proposed approach is applied to a multivariate time series of daily overdose counts and demonstrates both modeling and computational successes.

In this paper we propose a Bayesian nonparametric approach to modelling sparse time-varying networks. A positive parameter is associated with each node of a network, which models the sociability of that node. Sociabilities are assumed to evolve over time, and are modelled via a dynamic point process model. The model is able to capture long-term evolution of the sociabilities. Moreover, it yields sparse graphs, where the number of edges grows subquadratically with the number of nodes. The evolution of the sociabilities is described by a tractable time-varying generalised gamma process. We provide some theoretical insights into the model and apply it to three datasets: a simulated network, a network of hyperlinks between communities on Reddit, and a network of co-occurrences of words in Reuters news articles after the September 11th attacks.

Modeling multivariate time series has long been a subject that has attracted researchers from a diverse range of fields including economics, finance, and traffic. A basic assumption behind multivariate time series forecasting is that its variables depend on one another but, upon looking closely, it is fair to say that existing methods fail to fully exploit latent spatial dependencies between pairs of variables. In recent years, meanwhile, graph neural networks (GNNs) have shown high capability in handling relational dependencies. GNNs require well-defined graph structures for information propagation which means they cannot be applied directly for multivariate time series where the dependencies are not known in advance. In this paper, we propose a general graph neural network framework designed specifically for multivariate time series data. Our approach automatically extracts the uni-directed relations among variables through a graph learning module, into which external knowledge like variable attributes can be easily integrated. A novel mix-hop propagation layer and a dilated inception layer are further proposed to capture the spatial and temporal dependencies within the time series. The graph learning, graph convolution, and temporal convolution modules are jointly learned in an end-to-end framework. Experimental results show that our proposed model outperforms the state-of-the-art baseline methods on 3 of 4 benchmark datasets and achieves on-par performance with other approaches on two traffic datasets which provide extra structural information.

With the rapid increase of large-scale, real-world datasets, it becomes critical to address the problem of long-tailed data distribution (i.e., a few classes account for most of the data, while most classes are under-represented). Existing solutions typically adopt class re-balancing strategies such as re-sampling and re-weighting based on the number of observations for each class. In this work, we argue that as the number of samples increases, the additional benefit of a newly added data point will diminish. We introduce a novel theoretical framework to measure data overlap by associating with each sample a small neighboring region rather than a single point. The effective number of samples is defined as the volume of samples and can be calculated by a simple formula $(1-\beta^{n})/(1-\beta)$, where $n$ is the number of samples and $\beta \in [0,1)$ is a hyperparameter. We design a re-weighting scheme that uses the effective number of samples for each class to re-balance the loss, thereby yielding a class-balanced loss. Comprehensive experiments are conducted on artificially induced long-tailed CIFAR datasets and large-scale datasets including ImageNet and iNaturalist. Our results show that when trained with the proposed class-balanced loss, the network is able to achieve significant performance gains on long-tailed datasets.
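
The effective-number formula can be evaluated directly. The sketch below computes $(1-\beta^{n})/(1-\beta)$ per class and turns it into loss weights inversely proportional to the effective number. Rescaling the weights to sum to the number of classes is an assumption made here for readability, and the function names `effective_number` and `class_balanced_weights` are hypothetical.

```python
def effective_number(n, beta):
    """Effective number of samples (1 - beta**n) / (1 - beta), for beta in [0, 1)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    return (1.0 - beta ** n) / (1.0 - beta)


def class_balanced_weights(counts, beta):
    """Per-class weights inversely proportional to the effective number.

    Assumption: weights are rescaled to sum to the number of classes.
    """
    raw = [1.0 / effective_number(n, beta) for n in counts]
    scale = len(counts) / sum(raw)
    return [w * scale for w in raw]


if __name__ == "__main__":
    # A long-tailed toy example: one frequent class and one rare class.
    print(class_balanced_weights([1000, 10], beta=0.99))
```

With $\beta = 0$ every non-empty class has effective number 1, so no re-weighting occurs; as $\beta$ approaches 1 the effective number approaches $n$ and the scheme approaches inverse-frequency weighting.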