In collaborative learning, multiple parties contribute their datasets to jointly deduce global machine learning models for numerous predictive tasks. Despite its efficacy, this learning paradigm fails to encompass critical application domains that involve highly sensitive data, such as healthcare and security analytics, where privacy risks limit entities to training models individually on only their own datasets. In this work, we target privacy-preserving collaborative hierarchical clustering. We introduce a formal security definition that aims to balance utility and privacy, and we present a two-party protocol that provably satisfies it. We then extend our protocol with (i) an optimized version for single-linkage clustering and (ii) scalable approximation variants. We implement all our schemes and experimentally evaluate their performance and accuracy on synthetic and real datasets, obtaining very encouraging results. For example, end-to-end execution of our secure approximate protocol for over 1M 10-dimensional data samples requires 35 sec of computation and achieves 97.09% accuracy.

Related content

We study the hierarchy of communities in real-world networks under a generic stochastic block model, in which the connection probabilities are structured in a binary tree. Under such a model, a standard recursive bi-partitioning algorithm divides the network into two communities based on the Fiedler vector of the unnormalized graph Laplacian and repeats the split until a stopping rule indicates no further community structure. We prove the strong consistency of this method under a wide range of model parameters, which include sparse networks with node degrees as small as $O(\log n)$. In addition, unlike most existing work, our theory covers multiscale networks where the connection probabilities may differ by orders of magnitude, which comprise an important class of models that are practically relevant but technically challenging to deal with. Finally, we demonstrate the performance of our algorithm on synthetic data and real-world examples.
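The recursive bi-partitioning described in this abstract can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the abstract does not specify the stopping rule, so the `max_depth` and `min_size` parameters below are placeholder assumptions, and the helper names (`fiedler_split`, `recursive_bipartition`, `two_cliques`) are hypothetical.

```python
import numpy as np

def fiedler_split(adj):
    """Return a boolean mask splitting nodes by the sign of the Fiedler vector."""
    degrees = adj.sum(axis=1)
    laplacian = np.diag(degrees) - adj          # unnormalized Laplacian L = D - A
    _, eigvecs = np.linalg.eigh(laplacian)      # eigenvalues come back in ascending order
    fiedler = eigvecs[:, 1]                     # eigenvector of the second-smallest eigenvalue
    return fiedler >= 0

def recursive_bipartition(adj, nodes=None, depth=0, max_depth=1, min_size=2):
    """Recursively split the node set; returns a list of communities (lists of node ids)."""
    if nodes is None:
        nodes = np.arange(adj.shape[0])
    # Placeholder stopping rule (an assumption, not the rule from the paper):
    # stop at a fixed depth or when the block is too small to split.
    if depth >= max_depth or len(nodes) < 2 * min_size:
        return [nodes.tolist()]
    mask = fiedler_split(adj[np.ix_(nodes, nodes)])
    left, right = nodes[mask], nodes[~mask]
    if len(left) == 0 or len(right) == 0:       # degenerate split: keep the block whole
        return [nodes.tolist()]
    return (recursive_bipartition(adj, left, depth + 1, max_depth, min_size)
            + recursive_bipartition(adj, right, depth + 1, max_depth, min_size))

def two_cliques(size=4):
    """Toy graph: two cliques of `size` nodes joined by a single bridge edge."""
    n = 2 * size
    adj = np.zeros((n, n))
    adj[:size, :size] = 1
    adj[size:, size:] = 1
    np.fill_diagonal(adj, 0)
    adj[size - 1, size] = adj[size, size - 1] = 1
    return adj

communities = recursive_bipartition(two_cliques())
print(sorted(communities))
```

On the toy graph the single split separates the two cliques. The sign of an eigenvector is arbitrary, so the order of the two communities may vary, which is why the result is sorted before printing.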

We propose a novel hierarchical approach for multiple rotation averaging, dubbed HARA. Our method incrementally initializes the rotation graph based on a hierarchy of triplet support. The key idea is to build a spanning tree by prioritizing the edges with many strong triplet supports and gradually adding those with weaker and fewer supports. This reduces the risk of adding outliers to the spanning tree. As a result, we obtain a robust initial solution that enables us to filter outliers prior to nonlinear optimization. With minimal modification, our approach can also integrate the knowledge of the number of valid 2D-2D correspondences. We perform extensive evaluations on both synthetic and real datasets, demonstrating state-of-the-art results.

Utility-Based Shortfall Risk (UBSR) is a risk metric that is increasingly popular in financial applications, owing to certain desirable properties that it enjoys. We consider the problem of estimating UBSR in a recursive setting, where samples from the underlying loss distribution are available one at a time. We cast the UBSR estimation problem as a root-finding problem and propose stochastic approximation-based estimation schemes. We derive non-asymptotic bounds on the estimation error in the number of samples. We also consider the problem of UBSR optimization within a parameterized class of random variables. We propose a stochastic gradient descent-based algorithm for UBSR optimization and derive non-asymptotic bounds on its convergence.
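To make the root-finding view concrete, here is a minimal sketch under stated assumptions. It takes the convention that $X$ is a loss, $\ell$ is an increasing loss function, and the UBSR is the root $t^*$ of $E[\ell(X - t)] - \lambda = 0$. The exponential loss, the uniform sample distribution, the $1/n$ step size, and the function name `estimate_ubsr` are all illustrative choices, not details taken from the paper.

```python
import numpy as np

def estimate_ubsr(samples, loss, lam, t0=0.0, step_scale=1.0):
    """Stochastic approximation for the root of E[loss(X - t)] - lam = 0.

    Samples are consumed one at a time; g(t) = E[loss(X - t)] - lam is
    decreasing in t, so t moves up when the noisy estimate of g is positive.
    """
    t = t0
    for n, x in enumerate(samples, start=1):
        t += (step_scale / n) * (loss(x - t) - lam)   # step size a_n = step_scale / n
    return t

# Illustrative setup (assumed): X ~ Uniform(0, 1), loss(x) = exp(x), lam = 1.
# Then E[exp(X - t)] = (e - 1) * exp(-t), so the exact root is log(e - 1).
rng = np.random.default_rng(0)
samples = rng.uniform(0.0, 1.0, size=20000)
estimate = estimate_ubsr(samples, np.exp, lam=1.0)
exact = np.log(np.e - 1.0)
print(estimate, exact)
```

The bounded sample distribution keeps the exponential loss well behaved in this toy case. With heavy-tailed losses, a practical scheme would likely need safeguards such as projecting the iterate onto a bounded interval.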

Differential privacy is a rigorous definition for privacy that guarantees that any analysis performed on a sensitive dataset leaks no information about the individuals whose data are contained therein. In this work, we develop new differentially private algorithms to analyze streaming data. Specifically, we consider the problem of estimating the density of a stream of users (or, more generally, elements), which expresses the fraction of all users that actually appear in the stream. We focus on one of the strongest privacy guarantees for the streaming model, namely user-level pan-privacy, which ensures that the privacy of any user is protected, even against an adversary that observes, on rare occasions, the internal state of the algorithm. Our proposed algorithms make optimal use of the entire allocated privacy budget, are specially tailored for the streaming model, and hence outperform the conventional sampling-based approach both theoretically and experimentally.

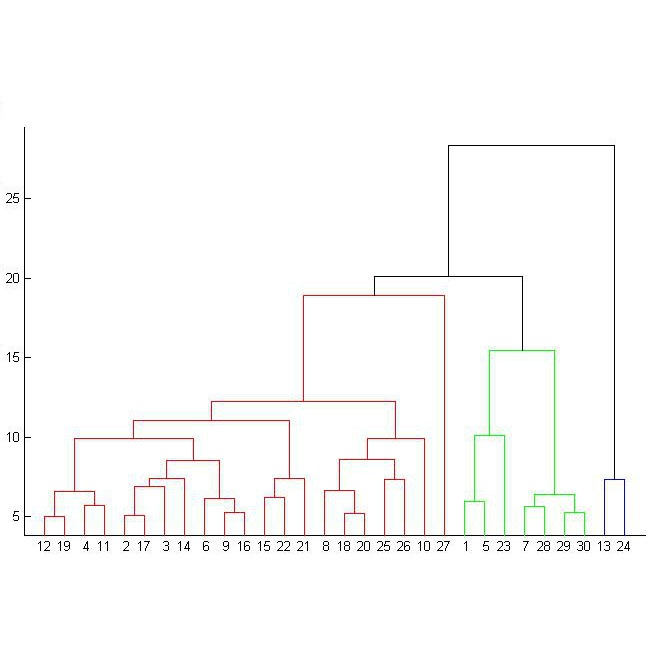

Animal pose estimation has recently come into the limelight due to its application in biology, zoology, and aquaculture. Deep learning methods have effectively been applied to human pose estimation. However, the major bottleneck to the application of these methods to animal pose estimation is the unavailability of sufficient quantities of labeled data. Though there are ample quantities of unlabelled data publicly available, it is economically impractical to label large quantities of data for each animal. In addition, due to the wide variety of body shapes in the animal kingdom, the transfer of knowledge across domains is ineffective. Given that the human brain is able to recognize animal pose without requiring large amounts of labeled data, it is only reasonable that we exploit unsupervised learning to tackle the problem of animal pose recognition from the available unlabelled data. In this paper, we introduce a novel architecture that is able to recognize the pose of multiple animals from unlabelled data. We do this by (1) removing background information from each image and employing an edge detection algorithm on the body of the animal, (2) tracking the motion of the edge pixels and performing agglomerative clustering to segment body parts, and (3) employing contrastive learning to discourage grouping of distant body parts together. Hence, we are able to distinguish between body parts of the animal based on their visual behavior instead of the underlying anatomy. Thus, we are able to achieve a more effective classification of the data than their human-labeled counterparts. We test our model on the TigDog and WLD (WildLife Documentary) datasets, where we outperform state-of-the-art approaches by a significant margin. We also study the performance of our model on other public data to demonstrate its generalization ability.

Recently, many unsupervised deep learning methods have been proposed to learn clustering with unlabelled data. By introducing data augmentation, most of the latest methods look into deep clustering from the perspective that the original image and its transformation should share a similar semantic clustering assignment. However, the representation features before the softmax activation function could be quite different even when the assignment probabilities are very similar, since softmax is only sensitive to the maximum value. This may result in high intra-class diversities in the representation feature space, which will lead to unstable local optima and thus harm the clustering performance. By investigating the internal relationship between mutual information and contrastive learning, we summarize a general framework that can turn any mutual information maximization into contrastive loss minimization. We apply it to both the semantic clustering assignment and the representation feature, and propose a novel method named Deep Robust Clustering by Contrastive Learning (DRC). Unlike existing methods, DRC aims to increase inter-class diversities and decrease intra-class diversities simultaneously and thereby achieve more robust clustering results. Extensive experiments on six widely adopted deep clustering benchmarks demonstrate the superiority of DRC in both stability and accuracy, e.g., attaining 71.6% mean accuracy on CIFAR-10, which is 7.1% higher than state-of-the-art results.
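The observation about softmax in this abstract can be illustrated with a small numerical example. The sketch below treats the pre-softmax representation features as logit vectors, and the two vectors are made-up values chosen only to show the effect: they are far apart in feature space, yet their softmax outputs are nearly identical because one entry dominates in both.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)   # subtract the max to avoid overflow
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical pre-softmax features for two views of the same image.
logits_a = np.array([10.0, 0.0, 0.0])
logits_b = np.array([20.0, 5.0, -5.0])

probs_a, probs_b = softmax(logits_a), softmax(logits_b)

prob_gap = np.abs(probs_a - probs_b).max()          # largest difference in assignment probability
feature_gap = np.linalg.norm(logits_a - logits_b)   # Euclidean distance between the features

print(prob_gap, feature_gap)
```

Both inputs are assigned to the first cluster with probability close to 1, so a loss defined only on the assignment probabilities would see them as almost the same, even though the underlying features differ substantially.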

Neural networks of ads systems usually take input from multiple resources, e.g., query-ad relevance, ad features and user portraits. These inputs are encoded into one-hot or multi-hot binary features, with typically only a tiny fraction of nonzero feature values per example. Deep learning models in online advertising industries can have terabyte-scale parameters that fit in neither the GPU memory nor the CPU main memory of a computing node. For example, a sponsored online advertising system can contain more than $10^{11}$ sparse features, making the neural network a massive model with around 10 TB of parameters. In this paper, we introduce a distributed GPU hierarchical parameter server for massive-scale deep learning ads systems. We propose a hierarchical workflow that utilizes GPU High-Bandwidth Memory, CPU main memory and SSD as 3-layer hierarchical storage. All the neural network training computations are contained in GPUs. Extensive experiments on real-world data confirm the effectiveness and the scalability of the proposed system. A 4-node hierarchical GPU parameter server can train a model more than 2X faster than a 150-node in-memory distributed parameter server in an MPI cluster. In addition, the price-performance ratio of our proposed system is 4-9 times better than that of an MPI-cluster solution.

Alternating Direction Method of Multipliers (ADMM) is a widely used tool for machine learning in distributed settings, where a machine learning model is trained over distributed data sources through an interactive process of local computation and message passing. Such an iterative process could raise privacy concerns for data owners. The goal of this paper is to provide differential privacy for ADMM-based distributed machine learning. Prior approaches to differentially private ADMM exhibit low utility under a high privacy guarantee and often assume the objective functions of the learning problems to be smooth and strongly convex. To address these concerns, we propose a novel differentially private ADMM-based distributed learning algorithm called DP-ADMM, which combines an approximate augmented Lagrangian function with time-varying Gaussian noise addition in the iterative process to achieve higher utility for general objective functions under the same differential privacy guarantee. We also apply the moments accountant method to bound the end-to-end privacy loss. The theoretical analysis shows that DP-ADMM can be applied to a wider class of distributed learning problems, is provably convergent, and offers an explicit utility-privacy tradeoff. To our knowledge, this is the first paper to provide explicit convergence and utility properties for differentially private ADMM-based distributed learning algorithms. The evaluation results demonstrate that our approach can achieve good convergence and model accuracy under a high end-to-end differential privacy guarantee.

The availability of large microarray data has led to a growing interest in biclustering methods in the past decade. Several algorithms have been proposed to identify subsets of genes and conditions according to different similarity measures and under varying constraints. In this paper, we focus on the exclusive row biclustering problem for gene expression data sets, in which each row can only be a member of a single bicluster while columns can participate in multiple ones. This type of biclustering may be adequate, for example, for clustering groups of cancer patients where each patient (row) is expected to be carrying only a single type of cancer, while each cancer type is associated with multiple (and possibly overlapping) genes (columns). We present a novel method to identify these exclusive row biclusters through a combination of existing biclustering algorithms and combinatorial auction techniques. We devise an approach for tuning the threshold for our algorithm based on comparison to a null model in the spirit of the Gap statistic approach. We demonstrate our approach on both synthetic and real-world gene expression data and show its power in identifying large-span submatrices with non-overlapping rows, while considering their unique nature. The Gap statistic approach succeeds in identifying appropriate thresholds in all our examples.

We introduce an algorithmic method for population anomaly detection based on gaussianization through an adversarial autoencoder. This method is applicable to detection of 'soft' anomalies in arbitrarily distributed high-dimensional data. A soft, or population, anomaly is characterized by a shift in the distribution of the data set, where certain elements appear with higher probability than anticipated. Such anomalies must be detected by considering a sufficiently large sample set rather than a single sample. Applications include, but are not limited to, payment fraud trends, data exfiltration, disease clusters and epidemics, and social unrest. We evaluate the method on several domains and obtain both quantitative results and qualitative insights.