This paper studies the consistency and statistical inference of simulated Markov random fields (MRFs) in a high-dimensional setting. Our estimators are based on the Markov chain Monte Carlo maximum likelihood estimation (MCMC-MLE) method, penalized by the Elastic-net. Under mild conditions that ensure a specific convergence rate of the MCMC method, the $\ell_{1}$ consistency of the Elastic-net-penalized MCMC-MLE is obtained. We further propose a decorrelated score test based on the decorrelated score function and prove the asymptotic normality of the score function without the influence of many nuisance parameters, under an assumption that accelerates the convergence of the MCMC method. The one-step estimator for a single parameter of interest is constructed by linearizing the decorrelated score function to solve for its root, and its asymptotic normality and a confidence interval for the true value are established. We use different algorithms to control the false discovery rate (FDR) for multiple testing problems via classic p-values and novel e-values. Finally, we empirically validate the asymptotic theories and demonstrate that both FDR control procedures in our article perform well.
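As a rough illustration of FDR control from p-values and from e-values, the sketch below implements two standard step-up procedures, Benjamini-Hochberg (BH) and its e-value analogue (e-BH). The abstract does not name the algorithms it uses, so the choice of BH and e-BH is an assumption. The function names `benjamini_hochberg` and `e_bh` are hypothetical.

```python
import numpy as np


def benjamini_hochberg(p_values, alpha=0.05):
    """BH step-up procedure (assumed example; not named in the abstract).

    Rejects the k smallest p-values, where k is the largest index with
    p_(k) <= alpha * k / m.
    """
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)                          # ascending p-values
    thresholds = alpha * np.arange(1, m + 1) / m
    passed = np.nonzero(p[order] <= thresholds)[0]
    reject = np.zeros(m, dtype=bool)
    if passed.size > 0:
        k = passed[-1] + 1                         # largest passing rank
        reject[order[:k]] = True
    return reject


def e_bh(e_values, alpha=0.05):
    """e-BH step-up procedure (assumed example; not named in the abstract).

    Rejects the k largest e-values, where k is the largest index with
    e_[k] >= m / (alpha * k), e_[k] being the k-th largest e-value.
    """
    e = np.asarray(e_values, dtype=float)
    m = e.size
    order = np.argsort(-e)                         # descending e-values
    thresholds = m / (alpha * np.arange(1, m + 1))
    passed = np.nonzero(e[order] >= thresholds)[0]
    reject = np.zeros(m, dtype=bool)
    if passed.size > 0:
        k = passed[-1] + 1
        reject[order[:k]] = True
    return reject
```

Both functions return a boolean mask over the hypotheses in their original order, so the same downstream code can consume either procedure.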

Related content

Motivated by a recent literature on the double-descent phenomenon in machine learning, we consider highly over-parametrized models in causal inference, including synthetic control with many control units. In such models, there may be so many free parameters that the model fits the training data perfectly. As a motivating example, we first investigate high-dimensional linear regression for imputing wage data, where we find that models with many more covariates than sample size can outperform simple ones. As our main contribution, we document the performance of high-dimensional synthetic control estimators with many control units. We find that adding control units can help improve imputation performance even beyond the point where the pre-treatment fit is perfect. We then provide a unified theoretical perspective on the performance of these high-dimensional models. Specifically, we show that more complex models can be interpreted as model-averaging estimators over simpler ones, which we link to an improvement in average performance. This perspective yields concrete insights into the use of synthetic control when control units are many relative to the number of pre-treatment periods.

Significance testing based on p-values has been implicated in the reproducibility crisis in scientific research, with one of the proposals being to eliminate them in favor of Bayesian analyses. Defenders of p-values have countered that it is improper use and errors in interpretation, rather than the p-values themselves, that are to blame. Similar exchanges about the role of p-values have occurred with some regularity every 10 to 15 years since their formal introduction in statistical practice. The apparent contradiction between the repeated failures in interpretation and the continued use of p-values suggests that there is inferential value in the computation of these values. In this work we propose to attach a radical Bayesian interpretation to the number computed and reported as a p-value for the Generalized Linear Model, which has been the workhorse of applied statistical work. We introduce a decision analytic framework for thresholding posterior tail areas (pi-values) which for any given Bayesian analysis will have a direct correspondence to p-values in non-Bayesian approaches. Pi-values are non-controversial posterior probability summaries of treatment effects. A predictive probability argument is made to justify the exploration of the stochastic variation (replication probability) of p- and pi-values and culminates in a concrete proposal for the synthesis of Likelihood and Bayesian approaches to data analyses that aim for reproducibility. We illustrate these concepts using the results of recent randomized controlled trials in cardiometabolic and kidney diseases and provide R code for the implementation of the proposed methodology.

We propose a posterior for Bayesian Likelihood-Free Inference (LFI) based on generalized Bayesian inference. To define the posterior, we use Scoring Rules (SRs), which evaluate probabilistic models given an observation. In LFI, we can sample from the model but not evaluate the likelihood; hence, we employ SRs which admit unbiased empirical estimates. We use the Energy and Kernel SRs, for which our posterior enjoys consistency in a well-specified setting and outlier robustness. We perform inference with pseudo-marginal (PM) Markov Chain Monte Carlo (MCMC) or stochastic-gradient (SG) MCMC. While PM-MCMC works satisfactorily for simple setups, it mixes poorly for concentrated targets. Conversely, SG-MCMC requires differentiating the simulator model but improves performance over PM-MCMC when both work and scales to higher-dimensional setups as it is rejection-free. Although both techniques target the SR posterior approximately, the error diminishes as the number of model simulations at each MCMC step increases. In our simulations, we employ automatic differentiation to effortlessly differentiate the simulator model. We compare our posterior with related approaches on standard benchmarks and a chaotic dynamical system from meteorology, for which SG-MCMC allows inferring the parameters of a neural network used to parametrize a part of the update equations of the dynamical system.

Recent years have seen tremendous advances in the theory and application of sequential experiments. While these experiments are not always designed with hypothesis testing in mind, researchers may still be interested in performing tests after the experiment is completed. The purpose of this paper is to aid in the development of optimal tests for sequential experiments by analyzing their asymptotic properties. Our key finding is that the asymptotic power function of any test can be matched by a test in a limit experiment where a Gaussian process is observed for each treatment, and inference is made for the drifts of these processes. This result has important implications, including a powerful sufficiency result: any candidate test only needs to rely on a fixed set of statistics, regardless of the type of sequential experiment. These statistics are the number of times each treatment has been sampled by the end of the experiment, along with the final value of the score (for parametric models) or efficient influence function (for non-parametric models) process for each treatment. We then characterize asymptotically optimal tests under various restrictions such as unbiasedness and $\alpha$-spending constraints. Finally, we apply our results to three key classes of sequential experiments: costly sampling, group sequential trials, and bandit experiments, and show how optimal inference can be conducted in these scenarios.

Score-based diffusion models (SBDMs) have recently emerged as state-of-the-art approaches for image generation. Existing SBDMs are typically formulated in a finite-dimensional setting, where images are considered as tensors of a finite size. This paper develops SBDMs in the infinite-dimensional setting, that is, we model the training data as functions supported on a rectangular domain. Besides the quest for generating images at ever higher resolution, our primary motivation is to create a well-posed infinite-dimensional learning problem so that we can discretize it consistently on multiple resolution levels. We thereby hope to obtain diffusion models that generalize across different resolution levels and improve the efficiency of the training process. We demonstrate how to overcome two shortcomings of current SBDM approaches in the infinite-dimensional setting. First, we modify the forward process to ensure that the latent distribution is well-defined in the infinite-dimensional setting using the notion of trace class operators. Second, we illustrate that approximating the score function with an operator network, in our case Fourier neural operators (FNOs), is beneficial for multilevel training. After deriving the forward process in the infinite-dimensional setting and reverse processes for finite approximations, we show their well-posedness, derive adequate discretizations, and investigate the role of the latent distributions. We provide first promising numerical results on two datasets, MNIST and material structures. In particular, we show that multilevel training is feasible within this framework.

In this paper, we find a sample complexity bound for learning a simplex from noisy samples. Assume a dataset of size $n$ is given which includes i.i.d. samples drawn from a uniform distribution over an unknown simplex in $\mathbb{R}^K$, where samples are assumed to be corrupted by multivariate additive Gaussian noise of arbitrary magnitude. We prove the existence of an algorithm that with high probability outputs a simplex having an $\ell_2$ distance of at most $\varepsilon$ from the true simplex (for any $\varepsilon>0$). Also, we theoretically show that in order to achieve this bound, it is sufficient to have $n\ge\left(K^2/\varepsilon^2\right)e^{\Omega\left(K/\mathrm{SNR}^2\right)}$ samples, where $\mathrm{SNR}$ stands for the signal-to-noise ratio. This result solves an important open problem and shows that, as long as $\mathrm{SNR}\ge\Omega\left(K^{1/2}\right)$, the sample complexity of the noisy regime has the same order as that of the noiseless case. Our proofs are a combination of the so-called sample compression technique in \citep{ashtiani2018nearly}, mathematical tools from high-dimensional geometry, and Fourier analysis. In particular, we have proposed a general Fourier-based technique for recovery of a more general class of distribution families from additive Gaussian noise, which can be further used in a variety of other related problems.

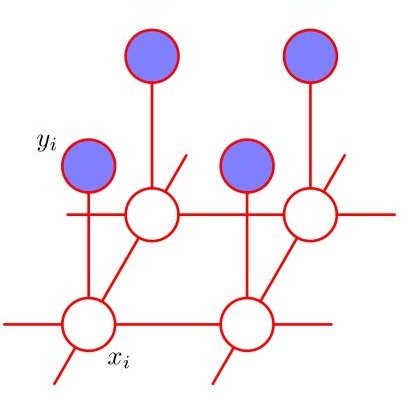

In this paper we study the type IV Knorr-Held space-time models. Such models typically apply intrinsic Markov random fields, and constraints are imposed for identifiability. INLA is an efficient inference tool for such models, where constraints are dealt with through a conditioning by kriging approach. When the number of spatial and/or temporal points becomes large, it becomes computationally expensive to fit such models, partly due to the number of constraints involved. We propose a new approach, HyMiK, dividing constraints into two separate sets, where one part is treated through a mixed effect approach while the other is handled by the standard conditioning by kriging method, resulting in a more efficient procedure for dealing with constraints. The new approach is easy to apply based on existing implementations of INLA. We run the model on simulated data, on a real data set containing dengue fever cases in Brazil, and on another real data set of confirmed positive test cases of Covid-19 in the counties of Norway. In all cases we get very similar results when comparing the new approach with the traditional one, while at the same time obtaining a significant increase in computational speed, by a factor of 2 to 4, depending on the sizes of the data sets.

We study the classical problem of predicting an outcome variable, $Y$, using a linear combination of a $d$-dimensional covariate vector, $\mathbf{X}$. We are interested in linear predictors whose coefficients solve: \begin{align*} \inf_{\boldsymbol{\beta} \in \mathbb{R}^d} \left( \mathbb{E}_{\mathbb{P}_n} \left[ \left(Y-\mathbf{X}^{\top}\boldsymbol{\beta} \right)^r \right] \right)^{1/r} +\delta \, \rho\left(\boldsymbol{\beta}\right), \end{align*} where $\delta>0$ is a regularization parameter, $\rho:\mathbb{R}^d\to \mathbb{R}_+$ is a convex penalty function, $\mathbb{P}_n$ is the empirical distribution of the data, and $r\geq 1$. We present three sets of new results. First, we provide conditions under which linear predictors based on these estimators solve a \emph{distributionally robust optimization} problem: they minimize the worst-case prediction error over distributions that are close to each other in a type of \emph{max-sliced Wasserstein metric}. Second, we provide a detailed finite-sample and asymptotic analysis of the statistical properties of the balls of distributions over which the worst-case prediction error is analyzed. Third, we use the distributionally robust optimality and our statistical analysis to present i) an oracle recommendation for the choice of regularization parameter, $\delta$, that guarantees good out-of-sample prediction error; and ii) a test-statistic to rank the out-of-sample performance of two different linear estimators. None of our results rely on sparsity assumptions about the true data generating process; thus, they broaden the scope of use of the square-root lasso and related estimators in prediction problems.
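To make the objective above concrete, here is a minimal sketch that evaluates it on a finite sample. It makes three assumptions that go beyond the abstract: the residual enters through its absolute value (so that the $r$-th root is defined for non-even $r$), the default penalty $\rho$ is the $\ell_1$ norm, and the function name `regularized_objective` is hypothetical. With $r=2$ and the $\ell_1$ penalty, the expression is the square-root lasso objective mentioned at the end of the abstract.

```python
import numpy as np


def regularized_objective(beta, X, y, delta, r=2.0, penalty=None):
    """Empirical L_r prediction loss plus delta * rho(beta).

    Assumptions (not stated in the abstract): residuals are taken in
    absolute value, and rho defaults to the l1 norm.
    """
    beta = np.asarray(beta, dtype=float)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if penalty is None:
        penalty = lambda b: np.sum(np.abs(b))     # l1 norm as default rho
    residuals = y - X @ beta
    # (E_{P_n} |Y - X^T beta|^r)^(1/r), with E_{P_n} the sample average
    loss = np.mean(np.abs(residuals) ** r) ** (1.0 / r)
    return loss + delta * penalty(beta)
```

Passing a different convex function as `penalty` (for example the Euclidean norm) evaluates other members of the same family of estimators.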

Likelihood-free inference for simulator-based statistical models has developed rapidly from its infancy to a useful tool for practitioners. However, models with more than a handful of parameters still generally remain a challenge for Approximate Bayesian Computation (ABC) based inference. To advance the possibilities for performing likelihood-free inference in higher-dimensional parameter spaces, we introduce an extension of the popular Bayesian optimisation based approach to approximate discrepancy functions in a probabilistic manner, which lends itself to an efficient exploration of the parameter space. Our approach achieves computational scalability for higher-dimensional parameter spaces by using separate acquisition functions and discrepancies for each parameter. The efficient additive acquisition structure is combined with an exponentiated loss-likelihood to provide a misspecification-robust characterisation of the marginal posterior distribution for all model parameters. The method successfully performs computationally efficient inference in a 100-dimensional space on canonical examples and compares favourably to existing modularised ABC methods. We further illustrate the potential of this approach by fitting a bacterial transmission dynamics model to a real data set, which provides biologically coherent results on strain competition in a 30-dimensional parameter space.

To be deployed in production, a deep learning model needs to be both accurate and compact to meet latency and memory constraints. This usually results in a network that is deep (to ensure performance) and yet thin (to improve computational efficiency). In this paper, we propose an efficient method to train a deep thin network with a theoretical guarantee. Our method is motivated by model compression. It consists of three stages. In the first stage, we sufficiently widen the deep thin network and train it until convergence. In the second stage, we use this well-trained deep wide network to warm up (or initialize) the original deep thin network. This is achieved by letting the thin network imitate the immediate outputs of the wide network from layer to layer. In the last stage, we further fine-tune this well-initialized deep thin network. The theoretical guarantee is established by using mean field analysis, which shows the advantage of layerwise imitation over conventionally training deep thin networks from scratch by backpropagation. We also conduct large-scale empirical experiments to validate our approach. By training with our method, ResNet50 can outperform ResNet101, and BERT_BASE can be comparable with BERT_LARGE, where both of the latter models are trained via the standard training procedures as in the literature.