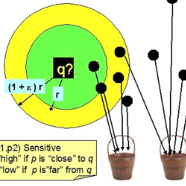

Vaccination passports are being issued by governments around the world in order to open up their travel and hospitality sectors. Civil liberty campaigners, on the other hand, argue that such mandatory instruments encroach upon our fundamental rights to anonymity and freedom of movement, and are a backdoor for governments to issue "identity documents" to their citizens. We present a privacy-preserving framework that uses two-factor authentication to create a unique identifier that can be used to locate a person's vaccination record on a blockchain, but does not store any personal information about them. Our main contribution is the application of a locality-sensitive hashing algorithm to an iris extraction technique, which can be used to authenticate users and anonymously locate vaccination records on the blockchain without leaking any personally identifiable information to it. Our proposed system allows for the safe reopening of society, while maintaining the privacy of citizens.
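To make the locality-sensitive hashing idea concrete, here is a minimal sketch. The abstract does not say which hashing scheme is used, so bit sampling for Hamming distance is swapped in as an assumption, and the binary iris code, the function names, and the parameters are all hypothetical.

```python
import random

def make_bit_sampler(code_length, num_bits, seed):
    """Pick a fixed, reproducible subset of bit positions (assumed scheme)."""
    rng = random.Random(seed)
    return rng.sample(range(code_length), num_bits)

def lsh_signature(iris_code, positions):
    """Project a binary iris code onto the sampled positions."""
    return "".join(str(iris_code[p]) for p in positions)

def hamming(a, b):
    """Number of positions at which two equal-length codes differ."""
    return sum(x != y for x, y in zip(a, b))

# Hypothetical 64-bit iris code and a noisy re-scan differing in one bit.
rng = random.Random(0)
code = [rng.randint(0, 1) for _ in range(64)]
positions = make_bit_sampler(64, 16, seed=42)
unsampled = next(i for i in range(64) if i not in positions)
noisy = list(code)
noisy[unsampled] ^= 1  # flip a bit the sampler does not look at
```

Two scans that differ only in unsampled bits produce the same signature, so the signature could act as a lookup key without the raw iris code being stored. A real system would need several samplers and a tolerance analysis, which this sketch omits.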

Related content

The process industry is one of the leading sectors of the world economy, characterized by difficult environmental conditions, low innovation and very high consumption of electricity. There is a strong push at the worldwide level towards the dual objective of improving the efficiency of plants and products while significantly reducing the consumption of electricity and CO2 emissions. Digital transformation can be the enabling driver to achieve these goals, while ensuring better working conditions for workers. Currently, digital sensors in plants produce a large amount of data whose potential value is not exploited. Digital technologies, with process design using digital twins, can combine the physical and the virtual worlds, bringing innovation with great efficiency and drastic reduction of waste. In accordance with the guidelines of Industrie 4.0, the H2020-funded CAPRI project aims to innovate the process industry with a modular and scalable Reference Architecture, based on open source software, which can be implemented in both brownfield and greenfield scenarios. The ability to distribute processing between the edge, where the data is created, and the cloud, where the greatest computational resources are available, facilitates the development of integrated digital solutions with cognitive capabilities. The reference architecture will finally be validated in the asphalt, steel and pharma pilot plants, allowing the development of integrated planning solutions, with scheduling and control of the plants, optimizing the efficiency and reliability of the supply chain, and balancing energy efficiency.

GitHub Copilot, trained on billions of lines of public code, has recently become the buzzword in the computer science research and practice community. Although it is designed to help developers implement safe and effective code with powerful intelligence, practitioners and researchers raise concerns about its ethical and security problems, e.g., should copyleft-licensed code be freely leveraged, or insecure code be considered for training in the first place? These problems have a significant impact on Copilot and other similar products that aim to learn knowledge from large-scale open-source code through deep learning models, which are inevitably on the rise with the fast development of artificial intelligence. To mitigate such impacts, we argue that there is a need to invent effective mechanisms for protecting open-source code from being exploited by deep learning models. Here, we design and implement a prototype, CoProtector, which utilizes data poisoning techniques to arm source code repositories for defending against such exploits. Our large-scale experiments empirically show that CoProtector is effective in achieving its purpose, significantly reducing the performance of Copilot-like deep learning models while being able to stably reveal the secretly embedded watermark backdoors.

The classic paper of Shapley and Shubik \cite{Shapley1971assignment} characterized the core of the assignment game using ideas from matching theory and LP-duality theory and their highly non-trivial interplay. The worth of this game is given by an optimal solution to the primal LP and core imputations correspond to optimal solutions to the dual LP. This fact naturally raises the question of viewing core imputations through the lens of complementarity. Our exploration along these lines yields new insights: we obtain a relationship between the competitiveness of individuals, and teams of agents, and the amount of profit they accrue. It also sheds light on the phenomenon of degeneracy, i.e., when the optimal assignment is not unique. Shapley and Shubik's suggestion for dealing with degeneracy was to perturb the edge weights of the underlying graph to make the optimal assignment unique; however, this destroys crucial information contained in the original instance and the outcome becomes a function of the vagaries of the randomness imposed on the instance. The core is a quintessential solution concept in cooperative game theory. It contains all ways of distributing the total worth of a game among agents in such a way that no sub-coalition has incentive to secede from the grand coalition.
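The primal/dual correspondence described above can be checked by brute force on a tiny instance. The sketch below assumes a square profit matrix `w` with equally many agents on each side; the function names and the example numbers are illustrative and do not come from the paper.

```python
from itertools import permutations

def worth(w):
    """Worth of the game: the value of a maximum-weight perfect assignment."""
    n = len(w)
    return max(sum(w[i][p[i]] for i in range(n)) for p in permutations(range(n)))

def is_core_imputation(w, u, v):
    """Check dual feasibility (u_i + v_j >= w_ij, u, v >= 0) and that the
    profits sum to the worth, i.e. that (u, v) is dual-optimal."""
    n = len(w)
    nonnegative = all(x >= 0 for x in u) and all(y >= 0 for y in v)
    covers = all(u[i] + v[j] >= w[i][j] for i in range(n) for j in range(n))
    return nonnegative and covers and sum(u) + sum(v) == worth(w)

# Hypothetical 2x2 instance: pairing (0,0) and (1,1) is optimal, worth 9.
w = [[5, 3],
     [2, 4]]
in_core = is_core_imputation(w, u=[3, 2], v=[2, 2])
not_in_core = is_core_imputation(w, u=[0, 4], v=[1, 4])
```

In the second profit split, agents 0 and 0 together receive 1 but could earn 5 as a team, so that pair has an incentive to secede and the split is not in the core.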

Build verifiability refers to the property that the build of a software system can be verified by independent third parties, and it is crucial for the trustworthiness of a software system. Various efforts towards build verifiability have been made for C/C++-based systems, yet the techniques for Java-based systems are not systematic and are often specific to a particular build tool (e.g., Maven). In this study, we present a systematic approach towards build verifiability for Java-based systems. Our approach consists of three parts: a unified build process, a tool that dynamically controls non-determinism during the build process, and another tool that eliminates non-equivalences by post-processing the build artifacts. We apply our approach to 46 unverified open source projects from Reproducible Central and 13 open source projects that are widely used by Huawei commercial products. As a result, 91% of the unverified Reproducible Central projects and 100% of the commercially adopted OSS projects are successfully verified with our approach. In addition, based on our experience in analyzing thousands of builds for both commercial and open source Java-based systems, we present 14 patterns that introduce non-equivalences in generated build artifacts and their respective mitigation strategies. Among these patterns, 11 (78%) are unique to Java-based systems, whereas the remaining 3 (22%) are shared with C/C++-based systems. The approach and the findings of this paper are useful for both practitioners and researchers who are interested in build verifiability.

Today's distributed tracing frameworks only trace a small fraction of all requests. For application developers troubleshooting rare edge-cases, the tracing framework is unlikely to capture a relevant trace at all, because it cannot know which requests will be problematic until after-the-fact. Application developers thus heavily depend on luck. In this paper, we remove the dependence on luck for any edge-case where symptoms can be programmatically detected, such as high tail latency, errors, and bottlenecked queues. We propose a lightweight and always-on distributed tracing system, Hindsight, where each constituent node acts analogously to a car dash-cam that, upon detecting a sudden jolt in momentum, persists the last hour of footage. Hindsight implements a retroactive sampling abstraction: when the symptoms of a problem are detected, Hindsight retrieves and persists coherent trace data from all relevant nodes that serviced the request. Developers using Hindsight receive the exact edge-case traces they desire; by comparison existing sampling-based tracing systems depend wholly on serendipity. Our experimental evaluation shows that Hindsight successfully collects edge-case symptomatic requests in real-world use cases. Hindsight adds only nanosecond-level overhead to generate trace data, can handle GB/s of data per node, transparently integrates with existing distributed tracing systems, and persists full, detailed traces when an edge-case problem is detected.
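The dash-cam analogy can be illustrated with a bounded buffer per node. This is a simplified sketch of retroactive sampling under stated assumptions, not Hindsight's implementation: the `NodeBuffer` class, its methods, and the node names are hypothetical.

```python
from collections import deque

class NodeBuffer:
    """Keeps only the most recent trace events; older ones are overwritten."""

    def __init__(self, capacity):
        self.recent = deque(maxlen=capacity)  # always-on, bounded memory
        self.persisted = {}                   # traces kept after a trigger

    def record(self, trace_id, event):
        self.recent.append((trace_id, event))

    def trigger(self, trace_id):
        """Persist whatever is still buffered for this trace."""
        events = [e for t, e in self.recent if t == trace_id]
        self.persisted[trace_id] = events
        return events

def retroactive_sample(nodes, trace_id):
    """On a detected symptom, collect the trace from every node."""
    return {name: node.trigger(trace_id) for name, node in nodes.items()}

nodes = {"frontend": NodeBuffer(4), "backend": NodeBuffer(4)}
nodes["frontend"].record("req-1", "received")
nodes["backend"].record("req-1", "db query slow")
nodes["backend"].record("req-2", "db query ok")
collected = retroactive_sample(nodes, "req-1")  # e.g. after a latency alarm
```

Every request is recorded cheaply, but only requests that later show a symptom are persisted. Events that have already been evicted from a buffer cannot be recovered, so the capacity bounds how late a trigger may arrive.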

Mobile devices often distribute measurements from a single physical sensor to multiple applications using software-based multiplexing. On Android devices, the highest requested sampling frequency is returned to all applications even if other applications request measurements at lower frequencies. In this paper, we comprehensively demonstrate that this design choice exposes practically exploitable side-channels based on frequency-key shifting. By carefully modulating sensor sampling frequencies in software, we show that unprivileged malicious applications can construct reliable spectral covert channels that bypass existing security mechanisms. Moreover, we present a novel variant that allows an unprivileged malicious observer app to fingerprint other victim applications at a coarse-grained level. Neither technique imposes any special assumptions beyond accessing standard mobile services from unprivileged applications. As such, our work reports side-channel vulnerabilities that exploit subtle yet insecure design choices in mobile sensor stacks.
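The multiplexing rule described above is enough to see why a channel exists. The following is a toy simulation only: it calls no Android API, and the rates, threshold, and function names are assumptions chosen for illustration.

```python
def delivered_rate(requests_hz):
    """Multiplexing rule from the abstract: every app sees the highest request."""
    return max(requests_hz)

def sender_requests(bits, low_hz=50, high_hz=200):
    """Sender encodes each bit as a low or high requested sampling rate."""
    return [high_hz if b else low_hz for b in bits]

def receiver_decode(observed_hz, threshold_hz=100):
    """Receiver infers bits from the rate at which samples reach it."""
    return [1 if rate > threshold_hz else 0 for rate in observed_hz]

message = [1, 0, 1, 1, 0]
receiver_request_hz = 10  # the receiver itself only asks for a slow rate
observed = [delivered_rate([receiver_request_hz, s])
            for s in sender_requests(message)]
decoded = receiver_decode(observed)
```

Because the receiver's own request is lower than both sender rates, the rate it observes is determined entirely by the sender, which is what lets the two apps communicate without any shared permission.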

Technology has evolved over the years, making our lives easier. It has impacted the healthcare sector, increasing the average life expectancy of human beings. Still, there are gaps that remain unaddressed. There is a lack of transparency in the healthcare system, which results in inherent trust problems between patients and hospitals. In the present day, a patient does not know whether he or she will get the proper treatment from the hospital for the fee charged. A patient can claim reimbursement of the medical bill from any insurance company. However, today there is minimal scope for the insurance company to verify the validity of such bills or medical records. A patient can provide fake details to get financial benefits from the insurance company. Again, there are trust issues between the patient (i.e., the insurance claimant) and the insurance company. Blockchain integrated with smart contracts is a well-known disruptive technology that builds trust by providing transparency to the system. In this paper, we propose a blockchain-enabled Secure and Smart HealthCare System. Fairness for all entities involved in the system (patient, hospital, and insurance company) is guaranteed without any party having to trust another. Privacy and security of patients' medical data are ensured as well. We also propose a method for privacy-preserving sharing of aggregated data with the research community for their own purposes. Shared data must not be personally identifiable, i.e., no one can link the acquired data to the identity of any patient or their medical history. We have implemented the prototype on the Ethereum platform and the Ropsten test network, and have included the analysis as well.

There is a growing body of work that proposes methods for mitigating bias in machine learning systems. These methods typically rely on access to protected attributes such as race, gender, or age. However, this raises two significant challenges: (1) protected attributes may not be available or it may not be legal to use them, and (2) it is often desirable to simultaneously consider multiple protected attributes, as well as their intersections. In the context of mitigating bias in occupation classification, we propose a method for discouraging correlation between the predicted probability of an individual's true occupation and a word embedding of their name. This method leverages the societal biases that are encoded in word embeddings, eliminating the need for access to protected attributes. Crucially, it only requires access to individuals' names at training time and not at deployment time. We evaluate two variations of our proposed method using a large-scale dataset of online biographies. We find that both variations simultaneously reduce race and gender biases, with almost no reduction in the classifier's overall true positive rate.
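One way to picture "discouraging correlation" is as a penalty added to the training loss. The sketch below uses the norm of the covariance between the true-class probability and the name embedding, which is an assumed form of the penalty; the abstract does not give the exact formula or the two variations, and the function name and inputs are hypothetical.

```python
import math

def covariance_penalty(true_class_probs, name_embeddings):
    """Norm of the covariance between p(true class) and each embedding
    dimension, averaged over the batch (assumed penalty form)."""
    n = len(true_class_probs)
    dim = len(name_embeddings[0])
    mean_p = sum(true_class_probs) / n
    mean_e = [sum(e[d] for e in name_embeddings) / n for d in range(dim)]
    cov = [
        sum((p - mean_p) * (e[d] - mean_e[d])
            for p, e in zip(true_class_probs, name_embeddings)) / n
        for d in range(dim)
    ]
    return math.sqrt(sum(c * c for c in cov))

# Hypothetical batch: two people with 1-d name embeddings 0.0 and 1.0.
embeddings = [[0.0], [1.0]]
name_dependent = covariance_penalty([0.0, 1.0], embeddings)
name_independent = covariance_penalty([0.7, 0.7], embeddings)
```

The penalty is zero when the classifier's confidence does not vary with the name embedding and grows as the two move together. Since it is computed only during training, names are not needed at deployment time, which matches the property stated above.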

Recent advancements in deep neural networks for graph-structured data have led to state-of-the-art performance on recommender system benchmarks. However, making these methods practical and scalable to web-scale recommendation tasks with billions of items and hundreds of millions of users remains a challenge. Here we describe a large-scale deep recommendation engine that we developed and deployed at Pinterest. We develop a data-efficient Graph Convolutional Network (GCN) algorithm PinSage, which combines efficient random walks and graph convolutions to generate embeddings of nodes (i.e., items) that incorporate both graph structure as well as node feature information. Compared to prior GCN approaches, we develop a novel method based on highly efficient random walks to structure the convolutions and design a novel training strategy that relies on harder-and-harder training examples to improve robustness and convergence of the model. We also develop an efficient MapReduce model inference algorithm to generate embeddings using a trained model. We deploy PinSage at Pinterest and train it on 7.5 billion examples on a graph with 3 billion nodes representing pins and boards, and 18 billion edges. According to offline metrics, user studies and A/B tests, PinSage generates higher-quality recommendations than comparable deep learning and graph-based alternatives. To our knowledge, this is the largest application of deep graph embeddings to date and paves the way for a new generation of web-scale recommender systems based on graph convolutional architectures.
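The role of random walks in structuring the convolutions can be sketched as follows: simulate short walks from a node and keep the most frequently visited nodes as its neighborhood. This is a simplified illustration, not the deployed PinSage code; the function name, parameters, and toy graph are hypothetical, and every node is assumed to have at least one neighbor.

```python
import random
from collections import Counter

def top_neighbors(graph, start, num_walks, walk_length, k, seed=0):
    """Rank nodes by visit count over short random walks from `start`."""
    rng = random.Random(seed)
    visits = Counter()
    for _ in range(num_walks):
        node = start
        for _ in range(walk_length):
            node = rng.choice(graph[node])  # step to a uniformly random neighbor
            if node != start:
                visits[node] += 1
    return [n for n, _ in visits.most_common(k)]

# Toy path graph a - b - c given as adjacency lists.
graph = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
neighborhood = top_neighbors(graph, "a", num_walks=10, walk_length=3, k=1)
```

Selecting neighbors by visit count keeps the neighborhood size fixed at `k` regardless of a node's degree, and the counts can also serve as importance weights when aggregating neighbor features.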

Content-based video retrieval is an approach for facilitating the searching and browsing of large image collections over the World Wide Web. In this approach, video analysis is conducted on low-level visual properties extracted from video frames. We believed that in order to create an effective video retrieval system, visual perception must be taken into account. We conjectured that a technique which employs multiple features for indexing and retrieval would be more effective in the discrimination and search tasks of videos. In order to validate this claim, content-based indexing and retrieval systems were implemented using color histograms, various texture features and other approaches. Videos were stored in an Oracle 9i database, and a user study measured the correctness of responses.