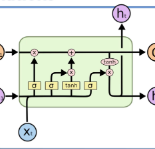

Modular soft robots have shown greater potential for sophisticated tasks than single-module robots. However, the modular structure complicates accurate control and calls for a control strategy designed specifically for modular robots. In this paper, we introduce a data collection strategy and a novel, accurate bidirectional LSTM configuration controller for modular soft robots that adapts to the number of modules. Such a controller can control module configurations in robots with different module numbers. Experiments on simulated cable-driven robots and real pneumatic robots validate the proposed approaches and show that the controller remains usable when the number of modules increases or decreases. This is the first paper that draws inspiration from the physical structure of modular robots and uses a bidirectional LSTM for module number adaptability. Future work may include a planning method that bridges the task and configuration spaces and the integration of an online controller.

Related content

To enable large-scale and efficient deployment of artificial intelligence (AI), the combination of AI and edge computing has given rise to Edge Intelligence, which leverages the computing and communication capabilities of end devices and edge servers to process data closer to where it is generated. A key technology for edge intelligence is the privacy-preserving machine learning paradigm known as Federated Learning (FL), which enables data owners to train models without transferring raw data to third-party servers. However, FL networks are expected to involve thousands of heterogeneous distributed devices, so communication efficiency remains a key bottleneck. To reduce node failures and device exits, a Hierarchical Federated Learning (HFL) framework is proposed, in which a designated cluster leader supports the data owners through intermediate model aggregation. By improving edge server resource utilization, the approach in this paper can effectively compensate for limited cache capacity. To mitigate the impact of soft clicks on the quality of user experience (QoE), the authors model the user QoE as a comprehensive system cost. To solve the formulated problem, the authors propose a decentralized caching algorithm with federated deep reinforcement learning (DRL) and federated learning (FL), in which multiple agents learn and make decisions independently.
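The intermediate aggregation step in HFL can be illustrated with a minimal sketch. The abstract does not specify the aggregation rule, so the sample-count weighting used here (in the style of federated averaging), the function names, and the toy data are all assumptions made for illustration.

```python
import numpy as np

def weighted_average(models, weights):
    """Average parameter vectors, weighting each by its sample count."""
    models = np.asarray(models, dtype=float)
    weights = np.asarray(weights, dtype=float)
    return (weights[:, None] * models).sum(axis=0) / weights.sum()

def hierarchical_aggregate(clusters):
    """clusters: list of (models, sample_counts) pairs, one per cluster leader."""
    leader_models, leader_counts = [], []
    for models, counts in clusters:
        # Intermediate aggregation performed at the cluster leader.
        leader_models.append(weighted_average(models, counts))
        leader_counts.append(float(np.sum(counts)))
    # Global aggregation over the leaders' intermediate models.
    return weighted_average(leader_models, leader_counts)

# Toy data (hypothetical): two clusters of 2-parameter models.
clusters = [
    ([[1.0, 2.0], [3.0, 4.0]], [10, 30]),
    ([[5.0, 6.0]], [60]),
]
global_model = hierarchical_aggregate(clusters)

# Flat aggregation over all devices, for comparison.
flat_model = weighted_average(
    [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [10, 30, 60]
)
```

With sample-count weights, the two-level average matches the flat average over all devices, so under this assumption the leaders reduce communication to the server without changing the aggregated model.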

To support extremely high data rates, reconfigurable intelligent surface (RIS)-assisted terahertz (THz) communication is considered a promising technology for future sixth-generation networks. However, because THz systems typically employ a hybrid beamforming architecture and the passive RIS cannot process pilot signals, obtaining channel state information (CSI) poses significant challenges. To accurately estimate the cascaded channel, we propose a novel low-complexity channel estimation scheme that consists of three steps. Specifically, we first estimate the full CSI within a small subset of subcarriers (SCs). Then, we acquire angular information at the base station and the RIS based on the full CSI. Finally, we derive spatial directions and recover the full CSI for the remaining SCs. Theoretical analysis and simulation results demonstrate that the proposed scheme achieves superior performance in terms of normalized mean-square error and exhibits lower computational complexity than existing algorithms.

Guided trajectory planning involves a leader robot strategically directing a follower robot to collaboratively reach a designated destination. However, this task becomes notably challenging when the leader lacks complete knowledge of the follower's decision-making model. There is a need for learning-based methods to effectively design the cooperative plan. To this end, we develop a Stackelberg game-theoretic approach based on the Koopman operator to address the challenge. We first formulate the guided trajectory planning problem through the lens of a dynamic Stackelberg game. We then leverage Koopman operator theory to acquire a learning-based linear system model that approximates the follower's feedback dynamics. Based on this learned model, the leader devises a collision-free trajectory to guide the follower using receding horizon planning. We use simulations to elaborate on the effectiveness of our approach in generating learning models that accurately predict the follower's multi-step behavior when compared to alternative learning techniques. Moreover, our approach successfully accomplishes the guidance task and notably reduces the leader's planning time to nearly half when contrasted with the model-based baseline method.
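The idea of learning a linear model of the follower's dynamics from data can be sketched as a least-squares fit over snapshot pairs. This is only an illustration under strong assumptions: it uses identity observables (so the fit reduces to dynamic mode decomposition), whereas a Koopman model would first lift the state through nonlinear observable functions. The matrix `A_true` and all function names are hypothetical and are not taken from the paper.

```python
import numpy as np

def fit_linear_model(X, Y):
    """Least-squares fit of K such that Y ~ K @ X (columns are snapshots)."""
    return Y @ np.linalg.pinv(X)

def rollout(K, z0, steps):
    """Multi-step prediction by repeatedly applying the learned operator."""
    z, out = z0, []
    for _ in range(steps):
        z = K @ z
        out.append(z)
    return np.stack(out)

# Hypothetical follower dynamics, used only to generate toy data.
A_true = np.array([[0.9, 0.1], [-0.1, 0.9]])
rng = np.random.default_rng(0)
X = rng.normal(size=(2, 50))   # states at time k
Y = A_true @ X                 # states at time k + 1

K = fit_linear_model(X, Y)
pred = rollout(K, np.array([1.0, 0.0]), steps=3)
```

Because the learned model is linear, multi-step prediction is a sequence of matrix-vector products, which suits repeated evaluation inside a receding horizon planner.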

Generative modeling of 3D LiDAR data is an emerging task with promising applications for autonomous mobile robots, such as scalable simulation, scene manipulation, and sparse-to-dense completion of LiDAR point clouds. While existing approaches have demonstrated the feasibility of image-based LiDAR data generation using deep generative models, they still struggle with fidelity and training stability. In this work, we present R2DM, a novel generative model for LiDAR data that can generate diverse and high-fidelity 3D scene point clouds based on the image representation of range and reflectance intensity. Our method is built upon denoising diffusion probabilistic models (DDPMs), which have shown impressive results among generative model frameworks in recent years. To effectively train DDPMs in the LiDAR domain, we first conduct an in-depth analysis of data representation, loss functions, and spatial inductive biases. Leveraging our R2DM model, we also introduce a flexible LiDAR completion pipeline based on the powerful capabilities of DDPMs. We demonstrate that our method surpasses existing methods in generating tasks on the KITTI-360 and KITTI-Raw datasets, as well as in the completion task on the KITTI-360 dataset. Our project page can be found at //kazuto1011.github.io/r2dm.

Contact-rich manipulation tasks often exhibit a large sim-to-real gap. For instance, industrial assembly tasks frequently involve tight insertions where the clearance is less than 0.1 mm and can even be negative when dealing with a deformable receptacle. This narrow clearance leads to complex contact dynamics that are difficult to model accurately in simulation, making it challenging to transfer simulation-learned policies to real-world robots. In this paper, we propose a novel framework for robustly learning manipulation skills for real-world tasks using simulated data only. Our framework consists of two main components: the "Force Planner" and the "Gain Tuner". The Force Planner plans both the robot motion and desired contact force, while the Gain Tuner dynamically adjusts the compliance control gains to track the desired contact force during task execution. The key insight is that by dynamically adjusting the robot's compliance control gains during task execution, we can modulate contact force in the new environment, thereby generating trajectories similar to those trained in simulation and narrowing the sim-to-real gap. Experimental results show that our method, trained in simulation on a generic square peg-and-hole task, can generalize to a variety of real-world insertion tasks involving narrow and negative clearances, all without requiring any fine-tuning. Videos are available at //dynamic-compliance.github.io.

Deep neural networks have been shown to provide accurate function approximations in high dimensions. However, fitting network parameters requires informative training data that are often challenging to collect in science and engineering applications. This work proposes Neural Galerkin schemes based on deep learning that generate training data with active learning for numerically solving high-dimensional partial differential equations. Neural Galerkin schemes build on the Dirac-Frenkel variational principle to train networks by minimizing the residual sequentially over time, which enables adaptively collecting new training data in a self-informed manner that is guided by the dynamics described by the partial differential equations. This is in contrast to other machine learning methods that aim to fit network parameters globally in time without taking into account training data acquisition. Our finding is that the active form of gathering training data of the proposed Neural Galerkin schemes is key for numerically realizing the expressive power of networks in high dimensions. Numerical experiments demonstrate that Neural Galerkin schemes have the potential to enable simulating phenomena and processes with many variables for which traditional and other deep-learning-based solvers fail, especially when features of the solutions evolve locally such as in high-dimensional wave propagation problems and interacting particle systems described by Fokker-Planck and kinetic equations.

Existing knowledge graph (KG) embedding models have primarily focused on static KGs. However, real-world KGs do not remain static, but rather evolve and grow in tandem with the development of KG applications. Consequently, new facts and previously unseen entities and relations continually emerge, necessitating an embedding model that can quickly learn and transfer new knowledge through growth. Motivated by this, we delve into an expanding field of KG embedding in this paper, i.e., lifelong KG embedding. We consider knowledge transfer and retention of the learning on growing snapshots of a KG without having to learn embeddings from scratch. The proposed model includes a masked KG autoencoder for embedding learning and update, with an embedding transfer strategy to inject the learned knowledge into the new entity and relation embeddings, and an embedding regularization method to avoid catastrophic forgetting. To investigate the impacts of different aspects of KG growth, we construct four datasets to evaluate the performance of lifelong KG embedding. Experimental results show that the proposed model outperforms the state-of-the-art inductive and lifelong embedding baselines.

Translational distance-based knowledge graph embedding has shown progressive improvements on the link prediction task, from TransE to the latest state-of-the-art RotatE. However, N-1, 1-N and N-N predictions remain challenging. In this work, we propose a novel translational distance-based approach for knowledge graph link prediction. The proposed method is two-fold. First, we extend RotatE from the 2D complex domain to a high-dimensional space with orthogonal transforms to model relations with greater capacity. Second, the graph context is explicitly modeled via two directed context representations. These context representations are used as part of the distance scoring function to measure the plausibility of triples during training and inference. The proposed approach effectively improves prediction accuracy on the difficult N-1, 1-N and N-N cases of the knowledge graph link prediction task. Experimental results show that it achieves better performance than the baseline RotatE on two benchmark data sets, especially on the data set (FB15k-237) with many high in-degree nodes.
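For orientation, the baseline RotatE scoring function that the abstract builds on can be sketched as follows. This shows only the baseline, in which each relation is an element-wise rotation in the complex plane. The orthogonal-transform extension and the context representations described above are not reproduced here, the margin term is omitted, and the variable names are illustrative.

```python
import numpy as np

def rotate_score(h, phase, t):
    """Baseline RotatE score: negative distance between rotated head and tail."""
    r = np.exp(1j * phase)  # unit-modulus complex rotation per dimension
    return -np.linalg.norm(h * r - t, ord=1)

# Toy embeddings (hypothetical values).
h = np.array([1 + 0j, 0 + 1j])
phase = np.array([np.pi / 2, np.pi])
t_true = h * np.exp(1j * phase)       # tail that exactly satisfies the relation
t_false = np.array([1 + 0j, 1 + 0j])  # an unrelated tail

score_true = rotate_score(h, phase, t_true)
score_false = rotate_score(h, phase, t_false)
```

A triple that fits the relation scores close to zero, while a mismatched tail scores lower. Since a single rotation maps each head to exactly one tail, this also hints at why N-1, 1-N and N-N relations are hard for the baseline.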

Deep neural networks (DNNs) are successful in many computer vision tasks. However, the most accurate DNNs require millions of parameters and operations, making them energy-, computation-, and memory-intensive. This impedes the deployment of large DNNs on low-power devices with limited compute resources. Recent research improves DNN models by reducing the memory requirement, energy consumption, and number of operations without significantly decreasing accuracy. This paper surveys the progress of low-power deep learning and computer vision, specifically with regard to inference, and discusses methods for compacting and accelerating DNN models. The techniques can be divided into four major categories: (1) parameter quantization and pruning, (2) compressed convolutional filters and matrix factorization, (3) network architecture search, and (4) knowledge distillation. We analyze the accuracy, advantages, disadvantages, and potential solutions to the problems of the techniques in each category. We also discuss new evaluation metrics as a guideline for future research.
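The first category, parameter quantization and pruning, can be illustrated with a minimal sketch. The survey covers many variants, so the two choices shown here (symmetric uniform quantization and global magnitude pruning) are assumptions picked for simplicity, and the function names are hypothetical. The quantizer assumes the weights are not all zero.

```python
import numpy as np

def quantize_uniform(w, bits=8):
    """Symmetric uniform quantization: round to integers, then dequantize."""
    qmax = 2 ** (bits - 1) - 1
    scale = np.max(np.abs(w)) / qmax  # assumes w is not all zeros
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int32)
    return q * scale, scale

def prune_by_magnitude(w, sparsity=0.5):
    """Zero out the fraction `sparsity` of weights with smallest magnitude."""
    k = int(sparsity * w.size)
    if k == 0:
        return w.copy()
    threshold = np.sort(np.abs(w), axis=None)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w)

rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 4))  # toy weight matrix

dequantized, scale = quantize_uniform(weights, bits=8)
pruned = prune_by_magnitude(weights, sparsity=0.5)
```

Quantization bounds the per-weight error by half the step size `scale`, while pruning trades exactness for sparsity that suitable kernels or hardware can exploit.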

Recently, deep learning has achieved very promising results in visual object tracking. Deep neural networks in existing tracking methods require a lot of training data to learn a large number of parameters. However, training data is scarce in visual object tracking, as annotations of a target object are only available in the first frame of a test sequence. In this paper, we propose to learn hierarchical features for visual object tracking by using tree-structure-based Recursive Neural Networks (RNN), which have fewer parameters than other deep neural networks, e.g. Convolutional Neural Networks (CNN). First, we learn RNN parameters to discriminate between the target object and the background in the first frame of a test sequence. A tree structure over local patches of an exemplar region is randomly generated by using a bottom-up greedy search strategy. Given the learned RNN parameters, we create two dictionaries, for target regions and the corresponding local patches, based on the hierarchical features learned from both the top and leaf nodes of multiple random trees. In each subsequent frame, we conduct sparse dictionary coding on all candidates to select the best candidate as the new target location. In addition, we update the two dictionaries online to handle appearance changes of target objects. Experimental results demonstrate that our feature learning algorithm can significantly improve tracking performance on benchmark datasets.