This article presents an approach for developing an efficient reduced-order model to study the dispersion of urban air pollutants. Real-time air quality monitoring has become increasingly important, given the rise in pollutant emissions due to urbanization and its adverse effects on human health. The proposed methodology involves solving the linear advection-diffusion problem, in which the convective field is given by the solution of the Reynolds-averaged Navier-Stokes equations and the source term is an empirical time series. However, the computational requirements of this approach, including microscale spatial resolution, repeated evaluation, and small time scales, necessitate the use of high-performance computing facilities, which can be a bottleneck for real-time monitoring. To address this challenge, a problem-specific methodology was developed that leverages a data-driven approach based on Proper Orthogonal Decomposition with regression (POD-R) coupled with Galerkin projection (POD-G) and augmented with the discrete empirical interpolation method (DEIM). The proposed method employs a feedforward neural network to non-intrusively retrieve the reduced-order convective operator required for online evaluation. The numerical framework was validated on synthetic emissions and real wind measurements. The results demonstrate that the proposed approach significantly reduces the computational burden of the traditional approach and is suitable for real-time air quality monitoring. Overall, the study advances the field of reduced-order modeling and highlights the potential of data-driven approaches in environmental modeling and large-scale simulations.
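As a rough illustration of the Proper Orthogonal Decomposition step mentioned above, the following sketch extracts the leading POD mode from a small set of snapshots and projects a snapshot onto it. It is a toy under stated assumptions, not the article's implementation: the function names `leading_pod_mode` and `project` are hypothetical, power iteration stands in for the SVD normally used, the snapshot mean is not subtracted, and the regression, Galerkin and DEIM stages are not shown.

```python
import math

def leading_pod_mode(snapshots, iters=200):
    """Leading POD mode of a list of snapshot vectors (hypothetical helper).

    Uses power iteration on the spatial correlation matrix C = sum_k s_k s_k^T.
    Assumes the snapshots are not all zero and that the start vector is not
    orthogonal to the leading mode.
    """
    n = len(snapshots[0])
    corr = [[sum(s[i] * s[j] for s in snapshots) for j in range(n)]
            for i in range(n)]
    v = [1.0] * n  # arbitrary start vector
    for _ in range(iters):
        w = [sum(corr[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v

def project(snapshot, mode):
    """Rank-1 reconstruction: (snapshot . mode) * mode."""
    coeff = sum(a * b for a, b in zip(snapshot, mode))
    return [coeff * m for m in mode]

# Synthetic rank-1 data: every snapshot is a multiple of the same spatial shape.
shape = [1.0, 2.0, 2.0]
snapshots = [[a * x for x in shape] for a in (0.5, 1.0, -2.0)]
mode = leading_pod_mode(snapshots)
reconstruction = project(snapshots[1], mode)
```

Because the synthetic snapshots share one spatial shape, a single mode reproduces them; real concentration fields would need several modes, chosen from the decay of the singular values.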

Related content

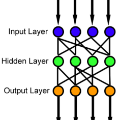

We present a simple linear regression based approach for learning the weights and biases of a neural network, as an alternative to standard gradient based backpropagation. The present work is exploratory in nature, and we restrict the description and experiments to (i) simple feedforward neural networks, (ii) scalar (single output) regression problems, and (iii) invertible activation functions. However, the approach is intended to be extensible to larger, more complex architectures. The key idea is the observation that the input to every neuron in a neural network is a linear combination of the activations of neurons in the previous layer, as well as the parameters (weights and biases) of the layer. If we are able to compute the ideal total input values to every neuron by working backwards from the output, we can formulate the learning problem as a linear least squares problem which iterates between updating the parameters and the activation values. We present an explicit algorithm that implements this idea, and we show that (at least for small problems) the approach is more stable and faster than gradient-based methods.
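The key idea, inverting the activation to obtain the ideal total input and then solving a linear least-squares problem, can be sketched for the simplest possible case: a single neuron with a tanh activation. This is only an assumed illustration; `fit_neuron` is a hypothetical name, and the multi-layer alternation between parameters and activations described in the abstract is not reproduced.

```python
import math

def fit_neuron(xs, ys):
    """Fit y = tanh(w * x + b) without gradients (hypothetical helper).

    Step 1: invert the activation to get the ideal pre-activations z = atanh(y).
    Step 2: solve the ordinary least-squares problem z ~ w * x + b in closed form.
    Requires every target y to lie strictly inside (-1, 1).
    """
    zs = [math.atanh(y) for y in ys]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_z = sum(zs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxz = sum((x - mean_x) * (z - mean_z) for x, z in zip(xs, zs))
    w = sxz / sxx
    b = mean_z - w * mean_x
    return w, b

# Noise-free data generated by a neuron with w = 0.8 and b = -0.3.
xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
ys = [math.tanh(0.8 * x - 0.3) for x in xs]
w, b = fit_neuron(xs, ys)
```

On noise-free data the parameters are recovered in one solve, which hints at why the requirement of an invertible activation matters for this family of methods.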

Incorporating unstructured data into physical models is a challenging problem that is emerging in data assimilation. Traditional approaches focus on well-defined observation operators whose functional forms are typically assumed to be known. This prevents these methods from achieving a consistent model-data synthesis in configurations where the mapping from data-space to model-space is unknown. To address these shortcomings, in this paper we develop a physics-informed dynamical variational autoencoder ($\Phi$-DVAE) to embed diverse data streams into time-evolving physical systems described by differential equations. Our approach combines a standard, possibly nonlinear, filter for the latent state-space model and a VAE, to assimilate the unstructured data into the latent dynamical system. Unstructured data, in our example systems, comes in the form of video data and velocity field measurements, however the methodology is suitably generic to allow for arbitrary unknown observation operators. A variational Bayesian framework is used for the joint estimation of the encoding, latent states, and unknown system parameters. To demonstrate the method, we provide case studies with the Lorenz-63 ordinary differential equation, and the advection and Korteweg-de Vries partial differential equations. Our results, with synthetic data, show that $\Phi$-DVAE provides a data efficient dynamics encoding methodology which is competitive with standard approaches. Unknown parameters are recovered with uncertainty quantification, and unseen data are accurately predicted.

Online polarization research currently focuses on studying single-issue opinion distributions or computing distance metrics of interaction network structures. Limited data availability often restricts studies to positive interaction data, which can misrepresent the reality of a discussion. We introduce a novel framework that aims at combining these three aspects, content and interactions, as well as their nature (positive or negative), while challenging the prevailing notion of polarization as an umbrella term for all forms of online conflict or opposing opinions. In our approach, built on the concepts of cleavage structures and structural balance of signed social networks, we factorize polarization into two distinct metrics: Antagonism and Alignment. Antagonism quantifies hostility in online discussions, based on the reactions of users to content. Alignment uses signed structural information encoded in long-term user-user relations on the platform to describe how well user interactions fit the global and/or traditional sides of discussion. We can analyse how these metrics change over time, localizing both relevant trends and sudden changes that can be mapped to specific contexts or events. We apply our methods to two distinct platforms: Birdwatch, a US crowd-based fact-checking extension of Twitter, and DerStandard, an Austrian online newspaper with discussion forums. In these two use cases, we find that our framework is capable of describing the global status of the groups of users (identification of cleavages) while also providing relevant findings on specific issues or in specific time frames. Furthermore, we show that our four metrics describe distinct phenomena, emphasizing their independent consideration for unpacking polarization complexities.

Physics-informed neural networks (PINNs) have emerged as promising surrogate models for solving partial differential equations (PDEs). Their effectiveness lies in the ability to capture solution-related features through neural networks. However, original PINNs often suffer from bottlenecks, such as low accuracy and non-convergence, limiting their applicability in complex physical contexts. To alleviate these issues, we propose auxiliary-task learning-based physics-informed neural networks (ATL-PINNs), which provide four different auxiliary-task learning modes, and we investigate their performance compared with original PINNs. We also employ the gradient cosine similarity algorithm to integrate the auxiliary problem loss with the primary problem loss in ATL-PINNs, which aims to enhance the effectiveness of the auxiliary-task learning modes. To the best of our knowledge, this is the first study to introduce auxiliary-task learning modes in the context of physics-informed learning. We conduct experiments on three PDE problems across different fields and scenarios. Our findings demonstrate that the proposed auxiliary-task learning modes can significantly improve solution accuracy, achieving a maximum performance boost of 96.62% (averaging 28.23%) compared to the original single-task PINNs. The code and dataset are open source at //github.com/junjun-yan/ATL-PINN.
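The abstract does not spell out how gradient cosine similarity combines the two losses. One common form of the idea, assumed here purely for illustration, is to add the auxiliary gradient to the primary gradient only when the two point in a similar direction. The names `cosine` and `combine_gradients` are hypothetical and are not taken from the ATL-PINN code.

```python
import math

def cosine(u, v):
    """Cosine similarity of two gradient vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)

def combine_gradients(g_main, g_aux):
    """Assumed gating rule: keep the auxiliary gradient only if it agrees
    in direction with the primary gradient (positive cosine similarity)."""
    if cosine(g_main, g_aux) > 0.0:
        return [a + b for a, b in zip(g_main, g_aux)]
    return list(g_main)

helpful = combine_gradients([1.0, 0.0], [0.5, 0.5])       # aligned: summed
conflicting = combine_gradients([1.0, 0.0], [-1.0, 0.2])  # opposed: dropped
```

Under this rule an auxiliary task can speed up the primary task when the gradients agree, and is ignored when it would pull the shared parameters the other way.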

Conversion rate (CVR) prediction plays an important role in advertising systems. Recently, supervised deep neural network-based models have shown promising performance in CVR prediction. However, they are data hungry and require an enormous amount of training data. In online advertising systems, although there are millions to billions of ads, users tend to click only a small set of them and to convert on an even smaller set. This data sparsity issue restricts the power of these deep models. In this paper, we propose the Contrastive Learning for CVR prediction (CL4CVR) framework. It associates the supervised CVR prediction task with a contrastive learning task, which can learn better data representations exploiting abundant unlabeled data and improve the CVR prediction performance. To tailor the contrastive learning task to the CVR prediction problem, we propose embedding masking (EM), rather than feature masking, to create two views of augmented samples. We also propose a false negative elimination (FNE) component to eliminate samples with the same feature as the anchor sample, to account for the natural property in user behavior data. We further propose a supervised positive inclusion (SPI) component to include additional positive samples for each anchor sample, in order to make full use of sparse but precious user conversion events. Experimental results on two real-world conversion datasets demonstrate the superior performance of CL4CVR. The source code is available at //github.com/DongRuiHust/CL4CVR.

This paper presents a scalable solution with adjustable computation time for the joint problem of scheduling and assigning machines and transporters for missions that must be completed in a fixed order of operations across multiple stages. A battery-operated multi-robot system with a maximum travel range is employed as the transporter between stages, and charging the robots is treated as an operation. Robots are assigned to a single job until its completion. Additionally, the operation completion time is assumed to be dependent on the machine and the type of operation, but independent of the job. This work aims to minimize a weighted multi-objective goal that includes both the required time and the energy consumed by the transporters. This problem is a variation of the flexible flow shop with transports, which is proven to be NP-complete. To provide a solution, time is discretized, the solution space is divided temporally, and jobs are clustered into diverse groups. Finally, an integer linear programming solver is applied within a sliding time window to determine assignments and create a schedule that minimizes the objective. The computation time can be reduced depending on the number of jobs selected at each segment, with a trade-off on optimality. The proposed algorithm finds its application in a water sampling project, where water sampling jobs are assigned to robots, sample deliveries at laboratories are scheduled, and the robots are routed to charging stations.

We introduce DeepNash, an autonomous agent capable of learning to play the imperfect information game Stratego from scratch, up to a human expert level. Stratego is one of the few iconic board games that Artificial Intelligence (AI) has not yet mastered. This popular game has an enormous game tree on the order of $10^{535}$ nodes, i.e., $10^{175}$ times larger than that of Go. It has the additional complexity of requiring decision-making under imperfect information, similar to Texas hold'em poker, which has a significantly smaller game tree (on the order of $10^{164}$ nodes). Decisions in Stratego are made over a large number of discrete actions with no obvious link between action and outcome. Episodes are long, with often hundreds of moves before a player wins, and situations in Stratego can not easily be broken down into manageably-sized sub-problems as in poker. For these reasons, Stratego has been a grand challenge for the field of AI for decades, and existing AI methods barely reach an amateur level of play. DeepNash uses a game-theoretic, model-free deep reinforcement learning method, without search, that learns to master Stratego via self-play. The Regularised Nash Dynamics (R-NaD) algorithm, a key component of DeepNash, converges to an approximate Nash equilibrium, instead of 'cycling' around it, by directly modifying the underlying multi-agent learning dynamics. DeepNash beats existing state-of-the-art AI methods in Stratego and achieved a yearly (2022) and all-time top-3 rank on the Gravon games platform, competing with human expert players.
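The size comparisons quoted above can be checked with simple exponent arithmetic. Taking the stated orders of magnitude at face value, the figures imply a Go game tree on the order of $10^{360}$ nodes and a Stratego tree roughly $10^{371}$ times larger than that of Texas hold'em:

$$
\frac{10^{535}}{10^{175}} = 10^{535-175} = 10^{360},
\qquad
\frac{10^{535}}{10^{164}} = 10^{535-164} = 10^{371}.
$$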

We describe ACE0, a lightweight platform for evaluating the suitability and viability of AI methods for behaviour discovery in multiagent simulations. Specifically, ACE0 was designed to explore AI methods for multi-agent simulations used in operations research studies related to new technologies such as autonomous aircraft. Simulation environments used in production are often high-fidelity, complex, require significant domain knowledge and as a result have high R&D costs. Minimal and lightweight simulation environments can help researchers and engineers evaluate the viability of new AI technologies for behaviour discovery in a more agile and potentially cost-effective manner. In this paper we describe the motivation for the development of ACE0. We provide a technical overview of the system architecture, describe a case study of behaviour discovery in the aerospace domain, and provide a qualitative evaluation of the system. The evaluation includes a brief description of collaborative research projects with academic partners, exploring different AI behaviour discovery methods.

Recent contrastive representation learning methods rely on estimating mutual information (MI) between multiple views of an underlying context. E.g., we can derive multiple views of a given image by applying data augmentation, or we can split a sequence into views comprising the past and future of some step in the sequence. Contrastive lower bounds on MI are easy to optimize, but have a strong underestimation bias when estimating large amounts of MI. We propose decomposing the full MI estimation problem into a sum of smaller estimation problems by splitting one of the views into progressively more informed subviews and by applying the chain rule on MI between the decomposed views. This expression contains a sum of unconditional and conditional MI terms, each measuring modest chunks of the total MI, which facilitates approximation via contrastive bounds. To maximize the sum, we formulate a contrastive lower bound on the conditional MI which can be approximated efficiently. We refer to our general approach as Decomposed Estimation of Mutual Information (DEMI). We show that DEMI can capture a larger amount of MI than standard non-decomposed contrastive bounds in a synthetic setting, and learns better representations in a vision domain and for dialogue generation.
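The decomposition described above rests on the chain rule for mutual information. As a sketch with assumed notation (the symbols are not taken from the abstract): let $x$ and $y$ be the two views, and let $y^{1}, \dots, y^{N} = y$ be progressively more informed subviews, each $y^{i-1}$ being a deterministic function of $y^{i}$. Then

$$
I(x; y) = I(x; y^{1}) + \sum_{i=2}^{N} I\left(x; y^{i} \mid y^{i-1}\right).
$$

For $N = 2$ this reduces to $I(x; y) = I(x; y^{1}) + I(x; y \mid y^{1})$. Each term on the right carries only part of the total information, which is the regime where contrastive bounds are less affected by underestimation bias.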

Graph Convolutional Network (GCN) has been widely applied in transportation demand prediction due to its excellent ability to capture non-Euclidean spatial dependence among station-level or regional transportation demands. However, in most of the existing research, the graph convolution was implemented on a heuristically generated adjacency matrix, which could neither reflect the real spatial relationships of stations accurately, nor capture the multi-level spatial dependence of demands adaptively. To cope with the above problems, this paper provides a novel graph convolutional network for transportation demand prediction. Firstly, a novel graph convolution architecture is proposed, which has different adjacency matrices in different layers and all the adjacency matrices are self-learned during the training process. Secondly, a layer-wise coupling mechanism is provided, which associates the upper-level adjacency matrix with the lower-level one. It also reduces the scale of parameters in our model. Lastly, a unitary network is constructed to give the final prediction result by integrating the hidden spatial states with gated recurrent unit, which could capture the multi-level spatial dependence and temporal dynamics simultaneously. Experiments have been conducted on two real-world datasets, NYC Citi Bike and NYC Taxi, and the results demonstrate the superiority of our model over the state-of-the-art ones.
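To make the notion of a self-learned adjacency matrix concrete, here is a minimal sketch of one graph convolution layer whose adjacency comes from free parameters rather than a heuristic. The parameterisation is an assumption, since the abstract does not specify it: the layer keeps a matrix of trainable logits, normalises each row with a softmax, and computes `A @ H @ W`. The names `row_softmax`, `matmul` and `graph_conv` are hypothetical, and the layer-wise coupling and the gated recurrent unit are not shown.

```python
import math

def row_softmax(logits):
    """Turn each row of trainable logits into non-negative weights summing to 1."""
    out = []
    for row in logits:
        top = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(x - top) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

def matmul(a, b):
    """Plain dense matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def graph_conv(adj_logits, features, weight):
    """One linear graph convolution: softmax(adj_logits) @ features @ weight."""
    adjacency = row_softmax(adj_logits)
    return matmul(matmul(adjacency, features), weight)

# Three stations, one demand feature each. Uniform logits mean every station
# averages over all stations before the weight is applied.
adj_logits = [[0.0, 0.0, 0.0] for _ in range(3)]
features = [[1.0], [2.0], [3.0]]
weight = [[2.0]]
output = graph_conv(adj_logits, features, weight)
```

In training, the logits would be updated by backpropagation together with the weights, so the effective neighbourhood of each station is learned from demand data instead of being fixed in advance.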