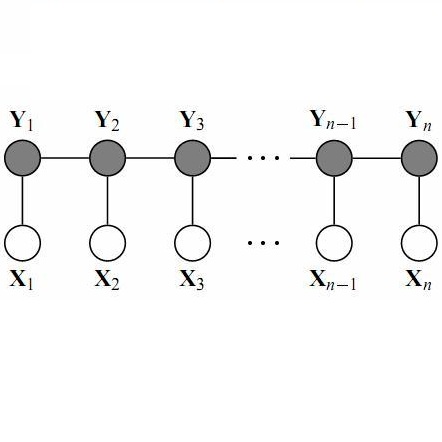

Sparse Conditional Random Field (CRF) is a powerful technique in computer vision and natural language processing for structured prediction. However, solving sparse CRFs in large-scale applications remains challenging. In this paper, we propose a novel safe dynamic screening method that exploits an accurate dual optimum estimation to identify and remove irrelevant features during training. The problem size can thus be reduced continuously, leading to great savings in computational cost without sacrificing any accuracy of the final learned model. To the best of our knowledge, this is the first screening method that brings the dual optimum estimation technique of static screening methods to dynamic screening, by carefully exploring and exploiting the strong convexity and the complex structure of the dual problem. In this way, we absorb the advantages of both static and dynamic screening methods and avoid their drawbacks. Our estimate is much more accurate than those based on the duality gap, which yields a much stronger screening rule. Moreover, our method is also the first screening method for sparse CRFs and even for structured prediction models. Experimental results on both synthetic and real-world datasets demonstrate that the speedup gained by our method is significant.
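For readers unfamiliar with dynamic screening, the sketch below shows the duality-gap-based sphere test that the abstract compares against, written for the lasso rather than for sparse CRFs. It is not the method proposed in the paper: the objective `0.5*||y - X b||^2 + lam*||b||_1`, the function name `gap_safe_screen`, and the column-list data layout are all assumptions made for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def gap_safe_screen(X_cols, y, beta, lam):
    """Gap-based safe sphere test for the lasso (illustrative baseline only).

    X_cols: list of feature columns, y: targets, beta: current iterate.
    Returns the indices of features that can be safely discarded, and the gap.
    """
    # Residual r = y - X beta at the current iterate.
    r = list(y)
    for col, b in zip(X_cols, beta):
        if b != 0.0:
            r = [ri - b * xi for ri, xi in zip(r, col)]
    corr = [dot(col, r) for col in X_cols]
    # Rescale the residual so that it is dual feasible: |x_j^T theta| <= 1.
    scale = max(lam, max(abs(c) for c in corr))
    theta = [ri / scale for ri in r]
    primal = 0.5 * dot(r, r) + lam * sum(abs(b) for b in beta)
    diff = [t - yi / lam for t, yi in zip(theta, y)]
    dual = 0.5 * dot(y, y) - 0.5 * lam * lam * dot(diff, diff)
    gap = max(primal - dual, 0.0)  # guard against tiny negative round-off
    radius = math.sqrt(2.0 * gap) / lam
    # Feature j is provably inactive if the test value stays below 1.
    screened = [
        j for j, col in enumerate(X_cols)
        if abs(corr[j]) / scale + radius * math.sqrt(dot(col, col)) < 1.0
    ]
    return screened, gap

X_cols = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
y = [2.0, 1.0]
screened, gap = gap_safe_screen(X_cols, y, [0.0, 0.0, 0.0], lam=1.5)
```

Because the radius shrinks with the duality gap, calling such a test repeatedly during optimization removes more features as the solver converges. A tighter estimate of the dual optimum, which is what the abstract claims, would shrink the safe region faster than this gap-based radius does.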

Related content

We present an adaptive stochastic variance reduced method with an implicit approach for adaptivity. As a variant of SARAH, our method employs the stochastic recursive gradient yet adjusts the step-size based on local geometry. We provide convergence guarantees for finite-sum minimization problems and show that faster convergence than SARAH can be achieved if the local geometry permits. Furthermore, we propose a practical, fully adaptive variant, which requires neither knowledge of the local geometry nor any effort in tuning hyper-parameters. This algorithm implicitly computes the step-size and efficiently estimates the local Lipschitz smoothness of stochastic functions. Numerical experiments demonstrate the algorithm's strong performance compared to its classical counterparts and other state-of-the-art first-order methods.
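The stochastic recursive gradient mentioned above can be illustrated with a minimal sketch of the plain, fixed-step SARAH loop on a toy least-squares problem. It does not implement the adaptive step-size that the abstract proposes; the function names, the step size, and the loop counts are illustrative assumptions.

```python
import random

def grad_i(w, x, y):
    """Gradient of the single-sample loss 0.5 * (x.w - y)^2 with respect to w."""
    err = sum(wj * xj for wj, xj in zip(w, x)) - y
    return [err * xj for xj in x]

def full_grad(w, X, Y):
    """Average of the per-sample gradients over the whole dataset."""
    n = len(X)
    g = [0.0] * len(w)
    for x, y in zip(X, Y):
        g = [a + b / n for a, b in zip(g, grad_i(w, x, y))]
    return g

def sarah(X, Y, w0, step=0.1, outer=50, inner=10, seed=0):
    """Fixed-step SARAH: one full gradient per outer loop, recursive updates inside."""
    rng = random.Random(seed)
    w = list(w0)
    for _ in range(outer):
        v = full_grad(w, X, Y)  # v_0: exact gradient at the snapshot
        w_prev, w = w, [wj - step * vj for wj, vj in zip(w, v)]
        for _ in range(inner):
            i = rng.randrange(len(X))
            g_new = grad_i(w, X[i], Y[i])
            g_old = grad_i(w_prev, X[i], Y[i])
            # Recursive estimator: v_t = grad_i(w_t) - grad_i(w_{t-1}) + v_{t-1}
            v = [a - b + c for a, b, c in zip(g_new, g_old, v)]
            w_prev, w = w, [wj - step * vj for wj, vj in zip(w, v)]
    return w

# Toy consistent system generated from the weights [2, -3].
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
Y = [2.0, -3.0, -1.0, 5.0]
w = sarah(X, Y, [0.0, 0.0])
```

Unlike SVRG, the estimator `v` is updated from its own previous value rather than from the snapshot gradient. In this sketch `step` is a hand-picked constant, which is the quantity the adaptive variant is said to choose automatically.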

Random field models are mathematical structures used in the study of stochastic complex systems. In this paper, we compute the shape operator of Gaussian random field manifolds using the first and second fundamental forms (Fisher information matrices). Using Markov Chain Monte Carlo techniques, we simulate the dynamics of these random fields and compute the Gaussian curvature of the parametric space, analyzing how this quantity changes along phase transitions. During the simulation, we observed an unexpected phenomenon that we call the *curvature effect*: a highly asymmetric geometric deformation occurs in the underlying parametric space when there is a significant increase or decrease in the system's entropy. This asymmetric pattern relates to the emergence of hysteresis, leading to an intrinsic arrow of time along the dynamics.

In recent years, the literature on Bayesian high-dimensional variable selection has rapidly grown. It is increasingly important to understand whether these Bayesian methods can consistently estimate the model parameters. To this end, shrinkage priors are useful for identifying relevant signals in high-dimensional data. For multivariate linear regression models with Gaussian response variables, Bai and Ghosh (2018) proposed a multivariate Bayesian model with shrinkage priors (MBSP) for estimation and variable selection in high-dimensional settings. However, the proofs of posterior consistency for the MBSP method (Theorems 3 and 4 of Bai and Ghosh (2018)) were incorrect. In this paper, we provide a corrected proof of Theorems 3 and 4 of Bai and Ghosh (2018). We leverage these new proofs to extend the MBSP model to multivariate generalized linear models (GLMs). Under our proposed model (MBSP-GLM), multiple responses belonging to the exponential family are simultaneously modeled and mixed-type responses are allowed. We show that the MBSP-GLM model achieves strong posterior consistency when $p$ grows at a subexponential rate with $n$. Furthermore, we quantify the posterior contraction rate at which the posterior shrinks around the true regression coefficients and allow the dimension of the responses $q$ to grow as $n$ grows. Thus, we strengthen the previous results on posterior consistency, which did not provide rate results. This greatly expands the scope of the MBSP model to include response variables of many data types, including binary and count data. To the best of our knowledge, this is the first posterior contraction result for multivariate Bayesian GLMs.

In model-based reinforcement learning for safety-critical control systems, it is important to formally certify system properties (e.g., safety, stability) under the learned controller. However, as existing methods typically apply formal verification *after* the controller has been learned, it is sometimes difficult to obtain any certificate, even after many iterations between learning and verification. To address this challenge, we propose a framework that jointly conducts reinforcement learning and formal verification by formulating and solving a novel bilevel optimization problem, which is differentiable by the gradients from the value function and certificates. Experiments on a variety of examples demonstrate the significant advantages of our framework over the model-based stochastic value gradient (SVG) method and the model-free proximal policy optimization (PPO) method in finding feasible controllers with barrier functions and Lyapunov functions that ensure system safety and stability.

Predictor screening rules, which discard predictors from the design matrix before fitting a model, have had considerable impact on the speed with which l1-regularized regression problems, such as the lasso, can be solved. Current state-of-the-art screening rules, however, have difficulties in dealing with highly-correlated predictors, often becoming too conservative. In this paper, we present a new screening rule to deal with this issue: the Hessian Screening Rule. The rule uses second-order information from the model to provide more accurate screening as well as higher-quality warm starts. The proposed rule outperforms all studied alternatives on data sets with high correlation for both l1-regularized least-squares (the lasso) and logistic regression. It also performs best overall on the real data sets that we examine.
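As background on what a predictor screening rule looks like, here is a minimal sketch of the basic strong rule for the lasso, one widely used existing rule of the kind the abstract refers to. It is not the Hessian Screening Rule, whose details the abstract does not give. The objective `0.5*||y - X b||^2 + lam*||b||_1` without an intercept, the function name, and the column-list layout are assumptions for illustration.

```python
def basic_strong_rule(X_cols, y, lam):
    """Basic strong rule for the lasso (heuristic, not a safe rule).

    Keeps predictor j when |x_j^T y| >= 2*lam - lam_max, where lam_max is the
    smallest penalty at which every coefficient is zero.
    Returns (indices kept, lam_max).
    """
    # Absolute correlation of each predictor with the response.
    c = [abs(sum(xi * yi for xi, yi in zip(col, y))) for col in X_cols]
    lam_max = max(c)
    threshold = 2.0 * lam - lam_max
    keep = [j for j, cj in enumerate(c) if cj >= threshold]
    return keep, lam_max

# Three predictors whose correlations with y are 10, 6, and 1.
X_cols = [[10.0, 0.0], [6.0, 0.0], [1.0, 0.0]]
y = [1.0, 0.0]
keep, lam_max = basic_strong_rule(X_cols, y, lam=7.0)
```

Because the strong rule can occasionally discard an active predictor, solvers that use it check the KKT conditions on the discarded set after fitting and refit if any are violated.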

The annotation of disease severity for medical image datasets often relies on collaborative decisions from multiple human graders. The intra-observer variability derived from individual differences always persists in this process, yet its influence is often underestimated. In this paper, we cast the intra-observer variability as an uncertainty problem and incorporate the label uncertainty information as guidance into the disease screening model to improve the final decision. The main idea is to divide the images into simple and hard cases using the uncertainty information, and then to develop a multi-stream network that handles the different cases separately. In particular, for hard cases, we strengthen the network's capacity to capture the correct disease features and to resist the interference of uncertainty. Experiments on a fundus image-based glaucoma screening case study show that the proposed model outperforms several baselines, especially in screening hard cases.

3D modeling based on point clouds is an efficient way to reconstruct and create detailed 3D content. However, the geometric procedure may lose accuracy due to high redundancy and the absence of an explicit structure. In this work, we propose a human-in-the-loop sketch-based point cloud reconstruction framework to leverage users' cognitive abilities in geometry extraction. We present an interactive drawing interface for 3D model creation from point cloud data with the help of user sketches. We adopt an optimization method in which the user can continuously edit the contours extracted from the obtained 3D model and retrieve the model iteratively. Finally, we verify the proposed user interface for modeling from sparse point clouds. See the video here: //www.youtube.com/watch?v=0H19NyXDRJE

This PhD thesis contains several contributions to the field of statistical causal modeling. Statistical causal models are statistical models embedded with causal assumptions that allow for the inference and reasoning about the behavior of stochastic systems affected by external manipulation (interventions). This thesis contributes to the research areas concerning the estimation of causal effects, causal structure learning, and distributionally robust (out-of-distribution generalizing) prediction methods. We present novel and consistent linear and non-linear causal effects estimators in instrumental variable settings that employ data-dependent mean squared prediction error regularization. Our proposed estimators show, in certain settings, mean squared error improvements compared to both canonical and state-of-the-art estimators. We show that recent research on distributionally robust prediction methods has connections to well-studied estimators from econometrics. This connection leads us to prove that general K-class estimators possess distributional robustness properties. We, furthermore, propose a general framework for distributional robustness with respect to intervention-induced distributions. In this framework, we derive sufficient conditions for the identifiability of distributionally robust prediction methods and present impossibility results that show the necessity of several of these conditions. We present a new structure learning method applicable in additive noise models with directed trees as causal graphs. We prove consistency in a vanishing identifiability setup and provide a method for testing substructure hypotheses with asymptotic family-wise error control that remains valid post-selection. Finally, we present heuristic ideas for learning summary graphs of nonlinear time-series models.

This study considers the problem of 3D human pose estimation from a single RGB image by proposing a conditional random field (CRF) model over 2D poses, in which the 3D pose is obtained as a byproduct of the inference process. The unary term of the proposed CRF model is defined based on a powerful heat-map regression network, which has been proposed for 2D human pose estimation. This study also presents a regression network for lifting the 2D pose to a 3D pose and proposes a prior term based on the consistency between the estimated 3D pose and the 2D pose. To obtain an approximate solution of the proposed CRF model, the N-best strategy is adopted. The proposed inference algorithm can be viewed as a sequence of two processes: bottom-up generation of 2D and 3D pose proposals from the input 2D image based on deep networks, and top-down verification of these proposals by checking their consistency. To evaluate the proposed method, we use two large-scale datasets: Human3.6M and HumanEva. Experimental results show that the proposed method achieves state-of-the-art 3D human pose estimation performance.

We study response generation for open domain conversation in chatbots. Existing methods assume that words in responses are generated from an identical vocabulary regardless of their inputs, which not only makes them vulnerable to generic patterns and irrelevant noise, but also causes a high cost in decoding. We propose a dynamic vocabulary sequence-to-sequence (DVS2S) model which allows each input to possess its own vocabulary in decoding. In training, vocabulary construction and response generation are jointly learned by maximizing a lower bound of the true objective with a Monte Carlo sampling method. In inference, the model dynamically allocates a small vocabulary for an input with the word prediction model, and conducts decoding only with the small vocabulary. Because of the dynamic vocabulary mechanism, DVS2S eludes many generic patterns and irrelevant words in generation, and enjoys efficient decoding at the same time. Experimental results on both automatic metrics and human annotations show that DVS2S can significantly outperform state-of-the-art methods in terms of response quality, while requiring only 60% of the decoding time of the most efficient baseline.