Assessing the impact of an intervention using time-series observational data on multiple units and outcomes is a frequent problem in many fields of scientific research. In this paper, we present a novel method to estimate intervention effects in such a setting by generalising existing approaches based on the factor analysis model and developing a Bayesian algorithm for inference. Our method is one of the few that can simultaneously: deal with outcomes of mixed type (continuous, binomial, count); increase efficiency in the estimates of the causal effects by jointly modelling multiple outcomes affected by the intervention; and easily provide uncertainty quantification for all causal estimands of interest. We use the proposed approach to evaluate the impact that local tracing partnerships (LTPs) had on the effectiveness of England's Test and Trace (TT) programme for COVID-19. Our analyses suggest that, overall, LTPs had a small positive impact on TT. However, there is considerable heterogeneity in the estimates of the causal effects over units and time.

Related content

In many scientific disciplines, coarse-grained causal models are used to explain and predict the dynamics of more fine-grained systems. Naturally, such models require appropriate macrovariables. Automated procedures to detect suitable variables would be useful to leverage increasingly available high-dimensional observational datasets. This work introduces a novel algorithmic approach that is inspired by a new characterisation of causal macrovariables as information bottlenecks between microstates. Its general form can be adapted to address individual needs of different scientific goals. After a further transformation step, the causal relationships between learned variables can be investigated through additive noise models. Experiments on both simulated data and on a real climate dataset are reported. In a synthetic dataset, the algorithm robustly detects the ground-truth variables and correctly infers the causal relationships between them. In a real climate dataset, the algorithm robustly detects two variables that correspond to the two known variations of the El Niño phenomenon.

With the outbreak of the COVID-19 pandemic, a dire need has arisen to effectively identify individuals who may have come into close contact with others infected with COVID-19. This process of identifying individuals, termed 'contact tracing', has significant implications for the containment and control of the spread of the virus. However, manual tracing has proven to be ineffective, calling for automated contact tracing approaches. This research therefore presents an automated machine learning system that uses sensor data transmitted through handheld devices to identify individuals who may have come into contact with others infected with COVID-19. This paper describes the different approaches followed in arriving at an optimal solution model, which uses a gradient boosting algorithm and time series feature extraction to predict whether a person has been in close proximity to an infected individual.

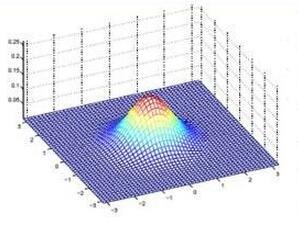

Seismic networks provide data that are used as a basis both for public safety decisions and for scientific research. Their configuration affects data completeness, which in turn critically affects several seismological scientific targets (e.g., earthquake prediction and seismic hazard assessment). In this context, a key aspect is how to map earthquake density in seismogenic areas from censored data, or even in areas that are not covered by the network. We propose to predict the spatial distribution of earthquakes from the knowledge of presence locations and geological relationships, taking into account any interactions between records. Namely, in a more general setting, we aim to estimate the intensity function of a point process, conditional on its censored realization, as in geostatistics for continuous processes. We define a predictor as the best linear unbiased combination of the observed point pattern. We show that the weight function associated with the predictor is the solution of a Fredholm equation of the second kind. Both the kernel and the source term of the Fredholm equation are related to the first- and second-order characteristics of the point process through the intensity and the pair correlation function. Results are presented and illustrated on simulated non-stationary point processes and on real data for mapping Greek Hellenic seismicity in a region with unreliable and incomplete records.
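For orientation, the generic textbook form of a Fredholm equation of the second kind is shown below. The symbols are illustrative assumptions and not the abstract's own notation: $w$ stands for the unknown weight function, $f$ for the source term, $K$ for the kernel, $\lambda$ for a scalar constant and $D$ for the spatial domain. The abstract states only that the kernel and the source term depend on the intensity and the pair correlation function; their exact expressions are not given here.

$$
w(x) = f(x) + \lambda \int_{D} K(x, y)\, w(y)\, \mathrm{d}y, \qquad x \in D.
$$

The unknown $w$ appears both outside and inside the integral, which is what distinguishes the second kind from the first kind, where $w$ appears only under the integral.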

Many applications require the collection of data on different variables or measurements over many system performance metrics. We refer to these broadly as measures or variables. Data collection along each measure often incurs a cost, so it is desirable to consider the cost of measures in modeling. This is a fairly new class of problems in the area of cost-sensitive learning. A few attempts have been made to incorporate costs in combining and selecting measures. However, existing studies either do not strictly enforce a budget constraint or are not the 'most' cost-effective. With a focus on classification problems, we propose a computationally efficient approach that can find a near-optimal model under a given budget by exploring the most 'promising' part of the solution space. Instead of outputting a single model, we produce a model schedule: a list of models sorted by model cost and expected predictive accuracy. This can be used to choose the model with the best predictive accuracy under a given budget, or to trade off between the budget and the predictive accuracy. Experiments on some benchmark datasets show that our approach compares favorably to competing methods.
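The idea of a model schedule can be illustrated with a minimal sketch. This is not the authors' algorithm for searching the solution space; it only assumes that a set of candidate models with known costs and estimated accuracies is already available, and shows how a schedule sorted by cost and accuracy supports a budget lookup. The names `ScheduledModel`, `build_schedule`, `best_under_budget` and the example numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ScheduledModel:
    """A candidate model with its total measurement cost and estimated accuracy."""
    name: str
    cost: float
    accuracy: float


def build_schedule(candidates: List[ScheduledModel]) -> List[ScheduledModel]:
    """Sort candidates by cost and drop any model that is no more accurate
    than a cheaper (or equally priced) one, so accuracy strictly increases."""
    schedule: List[ScheduledModel] = []
    best_accuracy = float("-inf")
    for model in sorted(candidates, key=lambda m: (m.cost, -m.accuracy)):
        if model.accuracy > best_accuracy:
            schedule.append(model)
            best_accuracy = model.accuracy
    return schedule


def best_under_budget(schedule: List[ScheduledModel],
                      budget: float) -> Optional[ScheduledModel]:
    """Return the most accurate scheduled model whose cost fits the budget.
    Because accuracy increases along the schedule, this is the last
    affordable entry; None means no model is affordable."""
    affordable = [m for m in schedule if m.cost <= budget]
    return affordable[-1] if affordable else None


# Hypothetical candidates (costs and accuracies are made up for illustration).
CANDIDATES = [
    ScheduledModel("A", cost=1.0, accuracy=0.70),
    ScheduledModel("B", cost=2.0, accuracy=0.68),  # dominated by A
    ScheduledModel("C", cost=3.0, accuracy=0.80),
    ScheduledModel("D", cost=5.0, accuracy=0.85),
]
SCHEDULE = build_schedule(CANDIDATES)
```

With this schedule, a budget lookup reduces to finding the last affordable entry, and scanning the list end to end shows the trade-off between budget and accuracy.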

We establish an equivalence between a family of adversarial training problems for non-parametric binary classification and a family of regularized risk minimization problems where the regularizer is a nonlocal perimeter functional. The resulting regularized risk minimization problems admit exact convex relaxations of the type $L^1+$ (nonlocal) $\operatorname{TV}$, a form frequently studied in image analysis and graph-based learning. A rich geometric structure is revealed by this reformulation which in turn allows us to establish a series of properties of optimal solutions of the original problem, including the existence of minimal and maximal solutions (interpreted in a suitable sense), and the existence of regular solutions (also interpreted in a suitable sense). In addition, we highlight how the connection between adversarial training and perimeter minimization problems provides a novel, directly interpretable, statistical motivation for a family of regularized risk minimization problems involving perimeter/total variation. The majority of our theoretical results are independent of the distance used to define adversarial attacks.

Structural equation models are commonly used to capture the relationship between sets of observed and unobservable variables. Traditionally these models are fitted using frequentist approaches, but recently researchers and practitioners have developed increasing interest in Bayesian inference. In Bayesian settings, inference for these models is typically performed via Markov chain Monte Carlo methods, which may be computationally intensive for models with a large number of manifest variables or complex structures. Variational approximations can be a fast alternative; however, they have not been adequately explored for this class of models. We develop a mean field variational Bayes approach for fitting elemental structural equation models and demonstrate how the bootstrap can considerably improve the quality of the variational approximation. We show that this variational approximation method can provide reliable inference while being significantly faster than Markov chain Monte Carlo.

Semiconductor device models are essential to understand the charge transport in thin film transistors (TFTs). Using these TFT models to draw inferences involves estimating the parameters that fit the model to experimental data. These experimental data can include extracted charge carrier mobility or measured current. Estimating these parameters helps us draw inferences about device performance. Fitting a TFT model to given experimental data currently relies on manual fine-tuning of multiple parameters by human experts. Several of these parameters may have confounding effects on the experimental data, which makes extracting their individual effects a non-intuitive process during manual tuning. To avoid this convoluted process, we propose a new method for automating model parameter extraction, resulting in an accurate model fit. In this work, model choice based approximate Bayesian computation (aBc) is used to generate the posterior distribution of the estimated parameters from observed mobility at various gate voltage values. Furthermore, it is shown that the extracted parameters can be accurately predicted from the mobility curves using gradient boosted trees. This work also provides a comparative analysis of the proposed framework against fine-tuned neural networks, in which the proposed framework is shown to perform better.

This paper investigates the impact of COVID-19 on financial markets. It focuses on the evolution of market efficiency, using two efficiency indicators: the Hurst exponent and the memory parameter of a fractional Lévy-stable motion. The second approach combines, in the same dynamic model, an alpha-stable distribution and a dependence structure between price returns. We provide a dynamic estimation method for the two efficiency indicators. This method introduces a free parameter, the discount factor, which we select so as to get the best alpha-stable density forecasts for observed price returns. The application to stock indices during the COVID-19 crisis shows a strong loss of efficiency for US indices. By contrast, Asian and Australian indices seem less affected, and the inefficiency of these markets during the COVID-19 crisis is even questionable.

This work develops a multiphase thermomechanical model of porous silica aerogel and implements an uncertainty analysis framework consisting of Sobol methods for global sensitivity analysis and Bayesian inference using a set of experimental data on silica aerogel. A notable feature of this work is the implementation of a new noise model within the Bayesian inversion to account for data uncertainty and modeling error. The hyper-parameters in the likelihood balance the contributions of data misfit and prior to the parameter posteriors and prevent biased estimation. The results indicate that the uncertainties in solid conductivity and elasticity are the most influential contributors to the model output variance. The Bayesian inference also shows that, despite the microstructural randomness in the thermal measurements, the model captures the data with 2% error. However, the model is inadequate in simulating the stress-strain measurements, resulting in significant uncertainty in the computational prediction of a building insulation component.

A key challenge of big data analytics is how to collect a large volume of (labeled) data. Crowdsourcing aims to address this challenge by aggregating and estimating high-quality data (e.g., sentiment labels for text) from pervasive clients/users. Existing studies on crowdsourcing focus on designing new methods to improve the quality of data aggregated from unreliable/noisy clients. However, the security aspects of such crowdsourcing systems remain under-explored to date. We aim to bridge this gap in this work. Specifically, we show that crowdsourcing is vulnerable to data poisoning attacks, in which malicious clients provide carefully crafted data to corrupt the aggregated data. We formulate our proposed data poisoning attacks as an optimization problem that maximizes the error of the aggregated data. Our evaluation results on one synthetic and two real-world benchmark datasets demonstrate that the proposed attacks can substantially increase the estimation errors of the aggregated data. We also propose two defenses to reduce the impact of malicious clients. Our empirical results show that the proposed defenses can substantially reduce the estimation errors caused by the data poisoning attacks.