In this work, we propose a global model selection criterion to estimate the graph of conditional dependencies of a random vector based on a finite sample. By global criterion, we mean optimizing a function over the entire set of possible graphs, eliminating the need to estimate the individual neighborhoods and subsequently combine them to estimate the graph. We prove the almost sure convergence of the graph estimator. This convergence holds provided the data is a realization of a multivariate stochastic process that satisfies a mixing condition. To the best of our knowledge, these are the first results to show the consistency of a model selection criterion for Markov random fields on graphs under non-independent data.

Related content

Automated mark localization in scatter images, which greatly helps in discovering knowledge, understanding enormous numbers of document images, and reasoning in visual question answering AI systems, is a highly challenging problem because of the ubiquity of overlapping marks. Locating overlapping marks faces many difficulties, such as no texture, little contextual information, hollow shapes, and tiny sizes. Here, we formulate it as a combinatorial optimization problem on clustering-based re-visualization from a non-training generative perspective, locating scatter marks by finding the state of multiple variables at which an objective function reaches a minimum. The objective function is constructed from the difference between binarized scatter images and the corresponding re-visualizations generated from their clustering. Fundamentally, re-visualization tries to generate a new scatter graph taking only a rasterized scatter image as input, and clustering is employed to provide the information for such re-visualization. This method can stably locate severely overlapping, variable-size, and variable-shape marks in scatter images without depending on any training dataset or reference. We also propose an adaptive variant of simulated annealing that can work on various connected regions. In addition, we built a dataset named SML2023 containing hundreds of scatter images with different markers and various levels of overlapping severity, tested the proposed method on it, and compared it to existing methods. The results show that it can accurately locate most marks in scatter images with different overlapping severity and marker types, with an absolute increase of about 0.3 on an assignment-cost-based metric in comparison with state-of-the-art methods. This work is of value to data mining on massive web pages and literature, and sheds new light on image measurement tasks such as bubble counting.

In this paper, we study the stability and convergence of a fully discrete finite difference scheme for the initial value problem associated with the Korteweg-de Vries (KdV) equation. We employ the Crank-Nicolson method for temporal discretization and establish that the scheme is $L^2$-conservative. The convergence analysis reveals that, by utilizing the inherent Kato local smoothing effect, the proposed scheme converges to a classical solution for sufficiently regular initial data $u_0 \in H^{3}(\mathbb{R})$ and to a weak solution in $L^2(0,T;L^2_{\text{loc}}(\mathbb{R}))$ for non-smooth initial data $u_0 \in L^2(\mathbb{R})$. Optimal convergence rates in both time and space for the devised scheme are derived. The theoretical results are supported by several numerical illustrations.
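
The $L^2$-conservation claim can be illustrated with a short sketch. It assumes, purely for illustration, that the spatial discretization of the KdV terms is collected in an operator $\mathcal{G}$ satisfying $\langle \mathcal{G}(w), w \rangle = 0$ in the discrete $L^2$ inner product (for example, a skew-symmetric difference operator for $u_{xxx}$ together with a conservative form of $u u_x$). The symbols $\mathcal{G}$, $u^n$, and $\Delta t$ are assumptions of this sketch and are not taken from the paper.

$$
\frac{u^{n+1} - u^{n}}{\Delta t} + \mathcal{G}\bigl(u^{n+1/2}\bigr) = 0,
\qquad
u^{n+1/2} = \tfrac{1}{2}\bigl(u^{n+1} + u^{n}\bigr).
$$

Taking the inner product with $u^{n+1/2}$ gives

$$
\frac{1}{2\Delta t}\Bigl(\|u^{n+1}\|^2 - \|u^{n}\|^2\Bigr)
= -\bigl\langle \mathcal{G}\bigl(u^{n+1/2}\bigr),\, u^{n+1/2} \bigr\rangle
= 0,
$$

so under this assumption the discrete norm is preserved from one time step to the next, $\|u^{n+1}\| = \|u^{n}\|$.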

A high-order, degree-adaptive hybridizable discontinuous Galerkin (HDG) method is presented for two-fluid incompressible Stokes flows, with boundaries and interfaces described using NURBS. The NURBS curves are embedded in a fixed Cartesian grid, yielding an unfitted HDG scheme capable of treating the exact geometry of the boundaries/interfaces, circumventing the need for fitted, high-order, curved meshes. The framework of the NURBS-enhanced finite element method (NEFEM) is employed for accurate quadrature along immersed NURBS and in elements cut by NURBS curves. Nitsche's formulation is used to enforce Dirichlet conditions on embedded surfaces, yielding unknowns only on the mesh skeleton as in standard HDG, without introducing any additional degrees of freedom on non-matching boundaries/interfaces. The resulting unfitted HDG-NEFEM method combines non-conforming meshes, exact NURBS geometry and high-order approximations to provide high-fidelity results on coarse meshes, independent of the geometric features of the domain. Numerical examples illustrate the optimal accuracy and robustness of the method, even in the presence of badly cut cells or faces, and its suitability to simulate microfluidic systems from CAD geometries.

May's Theorem [K. O. May, Econometrica 20 (1952) 680-684] characterizes majority voting on two alternatives as the unique preferential voting method satisfying several simple axioms. Here we show that by adding some desirable axioms to May's axioms, we can uniquely determine how to vote on three alternatives. In particular, we add two axioms stating that the voting method should mitigate spoiler effects and avoid the so-called strong no-show paradox. We prove a theorem stating that any preferential voting method satisfying our enlarged set of axioms, which includes some weak homogeneity and preservation axioms, agrees with Minimax voting in all three-alternative elections, except perhaps in some improbable knife-edge elections in which ties may arise and be broken in different ways.
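
For readers unfamiliar with Minimax voting, the following is a minimal sketch of the margin-based variant: the winner is the alternative whose worst pairwise defeat is smallest. The function name `minimax_winners`, the ballot format, and the example profile are assumptions made for illustration; the sketch returns all tied winners and does not model the tie-breaking discussed in the abstract.

```python
def minimax_winners(ballots, candidates):
    """Return the Minimax winner(s) for complete rankings.

    ballots: list of tuples, each ranking all candidates, most preferred first.
    """
    # margin[a][b] = (#voters ranking a above b) - (#voters ranking b above a)
    margin = {a: {b: 0 for b in candidates if b != a} for a in candidates}
    for ranking in ballots:
        position = {c: i for i, c in enumerate(ranking)}
        for a in candidates:
            for b in candidates:
                if a != b and position[a] < position[b]:
                    margin[a][b] += 1
                    margin[b][a] -= 1

    # Worst defeat of a: the largest margin by which any rival beats a.
    worst = {a: max(margin[b][a] for b in candidates if b != a)
             for a in candidates}
    best = min(worst.values())
    return sorted(a for a in candidates if worst[a] == best)


# A three-alternative majority cycle: A beats B, B beats C, C beats A.
ballots = ([("A", "B", "C")] * 4
           + [("B", "C", "A")] * 3
           + [("C", "A", "B")] * 2)
print(minimax_winners(ballots, ["A", "B", "C"]))
```

In this profile every alternative loses one pairwise contest, and A is selected because its only defeat (to C, by one vote) is the narrowest.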

The ensemble Kalman inversion (EKI), a recently introduced optimisation method for solving inverse problems, is widely employed for the efficient and derivative-free estimation of unknown parameters. Specifically in cases involving ill-posed inverse problems and high-dimensional parameter spaces, the scheme has shown promising success. However, in its general form, the EKI does not take constraints into account, which are essential and often stem from physical limitations or specific requirements. Based on a log-barrier approach, we suggest adapting the continuous-time formulation of EKI to incorporate convex inequality constraints. We underpin this adaptation with a theoretical analysis that provides lower and upper bounds on the ensemble collapse, as well as convergence to the constraint optimum for general nonlinear forward models. Finally, we showcase our results through two examples involving partial differential equations (PDEs).

Multimodal emotion recognition (MMER) is an active research field that aims to accurately recognize human emotions by fusing multiple perceptual modalities. However, inherent heterogeneity across modalities introduces distribution gaps and information redundancy, posing significant challenges for MMER. In this paper, we propose a novel fine-grained disentangled representation learning (FDRL) framework to address these challenges. Specifically, we design modality-shared and modality-private encoders to project each modality into modality-shared and modality-private subspaces, respectively. In the shared subspace, we introduce a fine-grained alignment component to learn modality-shared representations, thus capturing modal consistency. Subsequently, we tailor a fine-grained disparity component to constrain the private subspaces, thereby learning modality-private representations and enhancing their diversity. Lastly, we introduce a fine-grained predictor component to ensure that the labels of the output representations from the encoders remain unchanged. Experimental results on the IEMOCAP dataset show that FDRL outperforms the state-of-the-art methods, achieving 78.34% and 79.44% on WAR and UAR, respectively.
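
The abstract reports WAR and UAR without defining them. The sketch below assumes the convention common in emotion recognition, where WAR (weighted average recall) equals overall accuracy and UAR (unweighted average recall) is the plain mean of per-class recalls. The function name `war_uar` and the toy labels are illustrative only.

```python
from collections import Counter


def war_uar(y_true, y_pred):
    """Return (WAR, UAR) under the assumed definitions above."""
    per_class_total = Counter(y_true)
    per_class_correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    # WAR: recall weighted by class frequency, i.e. overall accuracy.
    war = sum(per_class_correct.values()) / len(y_true)
    # UAR: every class counts equally, regardless of its size.
    uar = sum(per_class_correct[c] / per_class_total[c]
              for c in per_class_total) / len(per_class_total)
    return war, uar


# Imbalanced toy data: 8 "happy" samples, 2 "sad" samples.
y_true = ["happy"] * 8 + ["sad"] * 2
y_pred = ["happy"] * 8 + ["happy", "sad"]
war, uar = war_uar(y_true, y_pred)
print(f"WAR={war:.2f}, UAR={uar:.2f}")
```

On imbalanced data the two metrics diverge: here one missed minority-class sample barely moves WAR but halves the recall of that class, which pulls UAR down.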

To achieve human-level dexterity, robots must infer spatial awareness from multimodal sensing to reason over contact interactions. During in-hand manipulation of novel objects, such spatial awareness involves estimating the object's pose and shape. The status quo for in-hand perception primarily employs vision and is restricted to tracking a priori known objects. Moreover, visual occlusion of objects in-hand is imminent during manipulation, preventing current systems from pushing beyond tasks without occlusion. We combine vision and touch sensing on a multi-fingered hand to estimate an object's pose and shape during in-hand manipulation. Our method, NeuralFeels, encodes object geometry by learning a neural field online and jointly tracks it by optimizing a pose graph problem. We study multimodal in-hand perception in simulation and the real world, interacting with different objects via a proprioception-driven policy. Our experiments show final reconstruction F-scores of $81$% and average pose drifts of $4.7\,\text{mm}$, further reduced to $2.3\,\text{mm}$ with known CAD models. Additionally, we observe that under heavy visual occlusion we can achieve up to $94$% improvements in tracking compared to vision-only methods. Our results demonstrate that touch, at the very least, refines and, at the very best, disambiguates visual estimates during in-hand manipulation. We release our evaluation dataset of 70 experiments, FeelSight, as a step towards benchmarking in this domain. Our neural representation driven by multimodal sensing can serve as a perception backbone towards advancing robot dexterity. Videos can be found on our project website //suddhu.github.io/neural-feels/
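
The abstract does not say how the reconstruction F-score is computed. A minimal sketch, assuming the definition commonly used for surface reconstruction: precision is the fraction of reconstructed points within a distance threshold of the ground truth, recall is the converse, and the F-score is their harmonic mean. The function name `f_score`, the threshold `tau`, and the point sets are illustrative assumptions.

```python
import math


def f_score(pred_points, gt_points, tau):
    """F-score between two point sets at distance threshold tau."""
    def fraction_within(src, dst):
        # Share of src points whose nearest dst point is at most tau away.
        hits = sum(1 for p in src
                   if min(math.dist(p, q) for q in dst) <= tau)
        return hits / len(src)

    precision = fraction_within(pred_points, gt_points)
    recall = fraction_within(gt_points, pred_points)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy example in metres with a 5 mm threshold.
gt = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
pred = [(0, 0, 0.001), (1, 0, 0.001), (5, 0, 0)]
print(round(f_score(pred, gt, tau=0.005), 4))
```

The brute-force nearest-neighbour search is quadratic in the number of points; a spatial index would be the usual choice for real meshes or point clouds.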

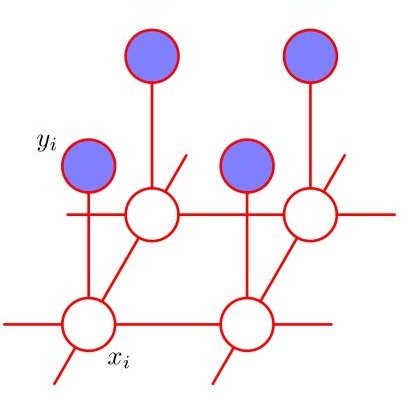

Heterogeneous graph neural networks (HGNNs), an emerging technique, have shown superior capacity for dealing with heterogeneous information networks (HINs). However, most HGNNs follow a semi-supervised learning manner, which notably limits their wide use in reality since labels are usually scarce in real applications. Recently, contrastive learning, a self-supervised method, has become one of the most exciting learning paradigms and shows great potential when there are no labels. In this paper, we study the problem of self-supervised HGNNs and propose a novel co-contrastive learning mechanism for HGNNs, named HeCo. Different from traditional contrastive learning, which only focuses on contrasting positive and negative samples, HeCo employs a cross-view contrastive mechanism. Specifically, two views of a HIN (network schema and meta-path views) are proposed to learn node embeddings, so as to capture both local and high-order structures simultaneously. Then cross-view contrastive learning, together with a view mask mechanism, is proposed, which is able to extract the positive and negative embeddings from the two views. This enables the two views to collaboratively supervise each other and finally learn high-level node embeddings. Moreover, two extensions of HeCo are designed to generate harder negative samples with high quality, which further boosts the performance of HeCo. Extensive experiments conducted on a variety of real-world networks show the superior performance of the proposed methods over the state of the art.
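
The idea of one view supervising the other can be sketched with a generic InfoNCE-style loss: an anchor embedding from one view is pulled towards its positives in the other view and pushed away from the remaining embeddings. This is an illustrative form, not necessarily HeCo's exact objective; the function names, the temperature `tau`, and the toy embeddings are assumptions.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cross_view_loss(anchor, other_view, positives, tau=0.5):
    """Contrastive loss for one anchor against embeddings of the other view.

    anchor: embedding of a node in view 1.
    other_view: list of embeddings of all nodes in view 2.
    positives: indices into other_view treated as positives for the anchor;
               every other index acts as a negative.
    """
    scores = [math.exp(cosine(anchor, z) / tau) for z in other_view]
    positive_mass = sum(scores[j] for j in positives)
    return -math.log(positive_mass / sum(scores))


anchor = (1.0, 0.0)
other_view = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
print(cross_view_loss(anchor, other_view, positives=[0]))  # aligned positive
print(cross_view_loss(anchor, other_view, positives=[2]))  # opposed positive
```

The loss is small when the positive in the other view points the same way as the anchor and large when it points the opposite way, which is the signal that drives the two views to agree.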

Recent work pre-training Transformers with self-supervised objectives on large text corpora has shown great success when fine-tuned on downstream NLP tasks including text summarization. However, pre-training objectives tailored for abstractive text summarization have not been explored. Furthermore, there is a lack of systematic evaluation across diverse domains. In this work, we propose pre-training large Transformer-based encoder-decoder models on massive text corpora with a new self-supervised objective. In PEGASUS, important sentences are removed/masked from an input document and are generated together as one output sequence from the remaining sentences, similar to an extractive summary. We evaluated our best PEGASUS model on 12 downstream summarization tasks spanning news, science, stories, instructions, emails, patents, and legislative bills. Experiments demonstrate it achieves state-of-the-art performance on all 12 downstream datasets measured by ROUGE scores. Our model also shows surprising performance on low-resource summarization, surpassing previous state-of-the-art results on 6 datasets with only 1000 examples. Finally, we validated our results using human evaluation and show that our model summaries achieve human performance on multiple datasets.
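
The masking objective described above can be sketched as a function that turns a document into a (source, target) training pair. The abstract does not say how "important" sentences are chosen; this sketch assumes a simple heuristic, unigram F1 overlap between a sentence and the rest of the document, as a stand-in. The function names and the `<mask>` token are hypothetical.

```python
import re
from collections import Counter


def unigram_f1(candidate, reference):
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def make_gap_sentence_pair(sentences, num_masked=1, mask_token="<mask>"):
    """Mask the highest-scoring sentences; return (source, target) strings."""
    scores = [unigram_f1(s, " ".join(sentences[:i] + sentences[i + 1:]))
              for i, s in enumerate(sentences)]
    ranked = sorted(range(len(sentences)), key=lambda i: -scores[i])
    chosen = sorted(ranked[:num_masked])  # keep document order in the target
    source = " ".join(mask_token if i in chosen else s
                      for i, s in enumerate(sentences))
    target = " ".join(sentences[i] for i in chosen)
    return source, target


doc = ["Solar panels cut electricity bills.",
       "Solar panels need sunlight.",
       "Electricity bills rise in winter."]
source, target = make_gap_sentence_pair(doc)
print(source)
print(target)
```

The first sentence shares vocabulary with both of the others, so it scores highest and becomes the generation target, while the encoder input keeps the remaining sentences with a mask in its place.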

In this paper, we propose jointly learning attention and recurrent neural network (RNN) models for multi-label classification. While approaches based on the use of either model exist (e.g., for the task of image captioning), training such existing network architectures typically requires pre-defined label sequences. For multi-label classification, it would be desirable to have a robust inference process, so that the prediction error would not propagate and thus affect the performance. Our proposed model uniquely integrates attention and Long Short-Term Memory (LSTM) models, which not only addresses the above problem but also allows one to identify visual objects of interest with varying sizes without prior knowledge of a particular label ordering. More importantly, label co-occurrence information can be jointly exploited by our LSTM model. Finally, by advancing the technique of beam search, prediction of multiple labels can be efficiently achieved by our proposed network model.
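
As background for the final step, here is a minimal sketch of plain beam search over label sequences, not the advanced variant the paper proposes. A hypothetical scorer `step_log_probs` stands in for the LSTM: given the labels emitted so far, it returns log-probabilities for the next label or a stop token. The toy probability table is invented for illustration.

```python
import math


def beam_search(step_log_probs, beam_width=2, max_len=4, stop="<end>"):
    """Return the highest-scoring label tuple and its log-probability."""
    beams = [((), 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = []
        for labels, score in beams:
            for label, log_p in step_log_probs(labels).items():
                if label == stop:
                    finished.append((labels, score + log_p))
                elif label not in labels:  # predict each label at most once
                    candidates.append((labels + (label,), score + log_p))
        if not candidates:
            break
        # Keep only the beam_width best partial label sequences.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    pool = finished or beams  # fall back to unfinished beams if none stopped
    return max(pool, key=lambda c: c[1])


def toy_scorer(labels):
    # Hypothetical next-label probabilities standing in for a trained model.
    table = {
        (): {"person": 0.6, "dog": 0.3, "<end>": 0.1},
        ("person",): {"dog": 0.7, "<end>": 0.3},
        ("dog",): {"person": 0.5, "<end>": 0.5},
        ("person", "dog"): {"<end>": 1.0},
        ("dog", "person"): {"<end>": 1.0},
    }
    return {k: math.log(v) for k, v in table[labels].items()}


labels, log_prob = beam_search(toy_scorer)
print(labels, math.exp(log_prob))
```

Keeping several partial hypotheses alive is what limits error propagation: an early low-confidence choice can still be overtaken by a sequence whose total score ends up higher.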