Are we using the right potential functions in the Conditional Random Field models that are popular in the Vision community? Semantic segmentation and other pixel-level labelling tasks have made significant progress recently due to the deep learning paradigm. However, most state-of-the-art structured prediction methods also include a random field model with a hand-crafted Gaussian potential to model spatial priors, label consistencies and feature-based image conditioning. In this paper, we challenge this view by developing a new inference and learning framework which can learn pairwise CRF potentials restricted only by their dependence on the image pixel values and the size of the support. Both standard spatial and high-dimensional bilateral kernels are considered. Our framework is based on the observation that CRF inference can be achieved via projected gradient descent and consequently, can easily be integrated in deep neural networks to allow for end-to-end training. It is empirically demonstrated that such learned potentials can improve segmentation accuracy and that certain label class interactions are indeed better modelled by a non-Gaussian potential. In addition, we compare our inference method to the commonly used mean-field algorithm. Our framework is evaluated on several public benchmarks for semantic segmentation with improved performance compared to previous state-of-the-art CNN+CRF models.
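The observation that CRF inference can be carried out by projected gradient descent can be illustrated with a small sketch. This is not the paper's implementation: it assumes a 1D chain of pixels, a fixed Potts pairwise term instead of learned potentials, and hypothetical names (`project_simplex`, `pgd_inference`, `weight`, `step`). Each pixel holds a label distribution that takes a gradient step on the relaxed energy and is then projected back onto the probability simplex.

```python
def project_simplex(v):
    """Euclidean projection of a vector onto the probability simplex."""
    u = sorted(v, reverse=True)
    css, theta = 0.0, 0.0
    for k, uk in enumerate(u, start=1):
        css += uk
        t = (css - 1.0) / k
        if uk - t > 0:          # the last k that satisfies this sets the threshold
            theta = t
    return [max(x - theta, 0.0) for x in v]


def pgd_inference(unary, weight=1.0, step=0.5, iters=50):
    """Projected gradient descent on a relaxed chain CRF with a Potts pairwise term.

    unary[i][l] is the cost of giving label l to pixel i (lower is better).
    """
    n, num_labels = len(unary), len(unary[0])
    q = [[1.0 / num_labels] * num_labels for _ in range(n)]
    for _ in range(iters):
        new_q = []
        for i in range(n):
            grad = list(unary[i])
            for j in (i - 1, i + 1):            # chain neighbours (assumed support)
                if 0 <= j < n:
                    for l in range(num_labels):
                        # Potts: cost of disagreeing with neighbour j on label l
                        grad[l] += weight * (1.0 - q[j][l])
            stepped = [q[i][l] - step * grad[l] for l in range(num_labels)]
            new_q.append(project_simplex(stepped))
        q = new_q
    return q


def labels_from(q):
    """Pick the most probable label per pixel."""
    return [max(range(len(row)), key=row.__getitem__) for row in q]


# Toy input: the third pixel weakly prefers label 1, its neighbours prefer label 0.
toy_unary = [[0.0, 2.0], [0.0, 2.0], [1.1, 1.0], [0.0, 2.0]]
toy_labels = labels_from(pgd_inference(toy_unary))
```

Because every step is a differentiable update followed by a projection, a fixed number of such iterations can be unrolled as layers of a network, which is what makes end-to-end training possible in principle.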

Related content

Recent studies on the image retrieval task have shown that ensembling different models and combining multiple global descriptors lead to performance improvement. However, training different models for an ensemble is not only difficult but also inefficient with respect to time and memory. In this paper, we propose a novel framework that exploits multiple global descriptors to get an ensemble-like effect while remaining trainable in an end-to-end manner. The proposed framework is flexible and extensible with respect to the global descriptor, CNN backbone, loss, and dataset. Moreover, we investigate the effectiveness of combining multiple global descriptors with quantitative and qualitative analysis. Our extensive experiments show that the combined descriptor outperforms a single global descriptor, as it can utilize different types of feature properties. In the benchmark evaluation, the proposed framework achieves state-of-the-art performance on the CARS196, CUB200-2011, In-shop Clothes and Stanford Online Products image retrieval benchmarks by a large margin compared to competing approaches. Our model implementations and pretrained models are publicly available.

Inferencing with network data necessitates the mapping of its nodes into a vector space, where the relationships are preserved. However, with multi-layered networks, where multiple types of relationships exist for the same set of nodes, it is crucial to exploit the information shared between layers, in addition to the distinct aspects of each layer. In this paper, we propose a novel approach that first obtains node embeddings in all layers jointly via DeepWalk on a \textit{supra} graph, which allows interactions between layers, and then fine-tunes the embeddings to encourage cohesive structure in the latent space. With empirical studies in node classification, link prediction and multi-layered community detection, we show that the proposed approach outperforms existing single- and multi-layered network embedding algorithms on several benchmarks. In addition to effectively scaling to a large number of layers (tested up to $37$), our approach consistently produces highly modular community structure, even when compared to methods that directly optimize for the modularity function.
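The idea of running random walks on a supra graph can be sketched as follows. The abstract does not say how layers are coupled, so this sketch assumes that each node is linked to its own copies in the other layers. The function names (`build_supra_graph`, `random_walk`) are hypothetical, and the walks would then be fed to a skip-gram model as in DeepWalk, which is omitted here.

```python
import random


def build_supra_graph(layers):
    """Build an adjacency map over (layer, node) pairs.

    layers[k] is a list of undirected edges (u, v) in layer k.
    Assumption: copies of the same node in different layers are connected.
    """
    adj = {}
    for k, edges in enumerate(layers):
        for u, v in edges:
            adj.setdefault((k, u), set()).add((k, v))
            adj.setdefault((k, v), set()).add((k, u))
    supra_nodes = list(adj)
    for k, u in supra_nodes:
        for m, w in supra_nodes:
            if w == u and m != k:
                adj[(k, u)].add((m, u))     # inter-layer edge between copies of u
    return adj


def random_walk(adj, start, length, rng):
    """Uniform random walk that may move within a layer or across layers."""
    walk = [start]
    for _ in range(length - 1):
        walk.append(rng.choice(sorted(adj[walk[-1]])))
    return walk


# Two layers over nodes a, b, c; node b appears in both layers.
toy_layers = [[("a", "b")], [("b", "c")]]
toy_adj = build_supra_graph(toy_layers)
toy_walk = random_walk(toy_adj, (0, "a"), 6, random.Random(0))
```

Walks that cross inter-layer edges place copies of the same node in shared contexts, which is one way the layers could exchange information before any fine-tuning step.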

Medical image segmentation is a primary task in many applications, and the accuracy of the segmentation is a necessity. Recently, many deep learning networks derived from U-Net have been extensively used and have achieved notable results. To further improve and refine the performance of U-Net, parallel decoders alongside the mask prediction decoder have been introduced and have shown significant improvement with additional advantages. In our work, we utilize the advantages of using a combination of contour and distance map as regularizers. In turn, we propose a novel architecture, Psi-Net, with a single encoder and three parallel decoders: one decoder to learn the mask and the other two to learn the auxiliary tasks of contour detection and distance map estimation. The learning of these auxiliary tasks helps in capturing the shape and boundary. We also propose a new joint loss function for the proposed architecture. The loss function consists of a weighted combination of negative log-likelihood and mean squared error losses. We evaluate our model on two publicly available datasets: 1) the Origa dataset for the task of optic cup and disc segmentation and 2) the Endovis segment dataset for the task of polyp segmentation. We have conducted extensive experiments to show that our model gives better results in terms of segmentation, boundary and shape metrics.
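A joint loss of this kind can be sketched as a weighted sum over the three decoder outputs. The weights, the function names (`nll`, `mse`, `joint_loss`) and the choice of a likelihood term for the mask and contour decoders and a squared-error term for the distance map are assumptions made for illustration, not values or details taken from the paper.

```python
import math


def nll(probs, targets, eps=1e-12):
    """Mean negative log-likelihood; probs[i] is a class distribution for pixel i."""
    total = 0.0
    for p, t in zip(probs, targets):
        total += -math.log(max(p[t], eps))   # clamp to avoid log(0)
    return total / len(targets)


def mse(pred, target):
    """Mean squared error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def joint_loss(mask_probs, mask_gt, contour_probs, contour_gt,
               dist_pred, dist_gt, weights=(1.0, 1.0, 1.0)):
    """Weighted combination of the three decoder losses (weights are hypothetical)."""
    w_mask, w_contour, w_dist = weights
    return (w_mask * nll(mask_probs, mask_gt)
            + w_contour * nll(contour_probs, contour_gt)
            + w_dist * mse(dist_pred, dist_gt))
```

In practice the three weights would be tuned so that the auxiliary contour and distance terms regularize the mask prediction without dominating it.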

For the challenging semantic image segmentation task, the most efficient models have traditionally combined the structured modelling capabilities of Conditional Random Fields (CRFs) with the feature extraction power of CNNs. In more recent works, however, CRF post-processing has fallen out of favour. We argue that this is mainly due to the slow training and inference speeds of CRFs, as well as the difficulty of learning the internal CRF parameters. To overcome both issues, we propose to add the assumption of conditional independence to the framework of fully-connected CRFs. This allows us to reformulate the inference in terms of convolutions, which can be implemented highly efficiently on GPUs. Doing so speeds up inference and training by a factor of more than 100. All parameters of the convolutional CRFs can easily be optimized using backpropagation. To facilitate further CRF research, we make our implementation publicly available. Please visit: //github.com/MarvinTeichmann/ConvCRF

Weak supervision, e.g., in the form of partial labels or image tags, is currently attracting significant attention in CNN segmentation as it can mitigate the lack of full and laborious pixel/voxel annotations. Enforcing high-order (global) inequality constraints on the network output, for instance, on the size of the target region, can leverage unlabeled data, guiding training with domain-specific knowledge. Inequality constraints are very flexible because they do not assume exact prior knowledge. However, constrained Lagrangian dual optimization has been largely avoided in deep networks, mainly for computational tractability reasons. To the best of our knowledge, the method of Pathak et al. is the only prior work that addresses deep CNNs with linear constraints in weakly supervised segmentation. It uses the constraints to synthesize fully-labeled training masks (proposals) from weak labels, mimicking full supervision and facilitating dual optimization. We propose to introduce a differentiable term, which enforces inequality constraints directly in the loss function, avoiding expensive Lagrangian dual iterates and proposal generation. From a constrained-optimization perspective, our simple approach is not optimal as there is no guarantee that the constraints are satisfied. However, surprisingly, it yields substantially better results than the proposal-based constrained CNNs, while reducing the computational demand for training. In the context of cardiac images, we reached a segmentation performance close to full supervision using a fraction (0.1%) of the full ground-truth labels and image-level tags. While our experiments focused on basic linear constraints such as the target-region size and image tags, our framework can be easily extended to other non-linear constraints. Therefore, it has the potential to close the gap between weakly and fully supervised learning in semantic image segmentation.
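A differentiable term that penalizes violations of a size constraint can be sketched as below. The abstract does not give the exact form of the term, so the quadratic penalty, the function names (`size_penalty`, `size_penalty_grad`) and the bounds `lower` and `upper` are assumptions for illustration. The predicted region size is taken to be the sum of per-pixel foreground probabilities.

```python
def size_penalty(fg_probs, lower, upper):
    """Quadratic penalty that is zero when lower <= predicted size <= upper."""
    size = sum(fg_probs)                    # soft size of the predicted region
    if size < lower:
        return (size - lower) ** 2
    if size > upper:
        return (size - upper) ** 2
    return 0.0


def size_penalty_grad(fg_probs, lower, upper):
    """Gradient of the penalty with respect to each foreground probability."""
    size = sum(fg_probs)
    if size < lower:
        g = 2.0 * (size - lower)
    elif size > upper:
        g = 2.0 * (size - upper)
    else:
        g = 0.0
    return [g] * len(fg_probs)              # every pixel contributes equally to the size
```

Such a term is simply added to the partial-label loss with a weighting factor. Unlike a Lagrangian scheme, it does not guarantee that the constraint holds, which matches the caveat stated in the abstract.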

In this paper, we focus on triplet-based deep binary embedding networks for the image retrieval task. The triplet loss has been shown to be highly effective for the ranking problem. However, most previous works treat the triplets equally or select the hard triplets based on the loss. Such strategies do not consider the order relations, which are important for the retrieval task. To this end, we propose an order-aware reweighting method to effectively train triplet-based deep networks, which up-weights the important triplets and down-weights the uninformative triplets. First, we present order-aware weighting factors to indicate the importance of the triplets, which depend on the rank order of the binary codes. Then, we reshape the triplet loss into the squared triplet loss such that the loss function puts more weight on the important triplets. Extensive evaluations on four benchmark datasets show that the proposed method achieves significant performance improvements over state-of-the-art baselines.

In the same vein of discriminative one-shot learning, Siamese networks allow recognizing an object from a single exemplar with the same class label. However, they do not take advantage of the underlying structure of the data and the relationship among the multitude of samples as they only rely on pairs of instances for training. In this paper, we propose a new quadruplet deep network to examine the potential connections among the training instances, aiming to achieve a more powerful representation. We design four shared networks that receive multi-tuple of instances as inputs and are connected by a novel loss function consisting of pair-loss and triplet-loss. According to the similarity metric, we select the most similar and the most dissimilar instances as the positive and negative inputs of triplet loss from each multi-tuple. We show that this scheme improves the training performance. Furthermore, we introduce a new weight layer to automatically select suitable combination weights, which will avoid the conflict between triplet and pair loss leading to worse performance. We evaluate our quadruplet framework by model-free tracking-by-detection of objects from a single initial exemplar in several Visual Object Tracking benchmarks. Our extensive experimental analysis demonstrates that our tracker achieves superior performance with a real-time processing speed of 78 frames-per-second (fps).

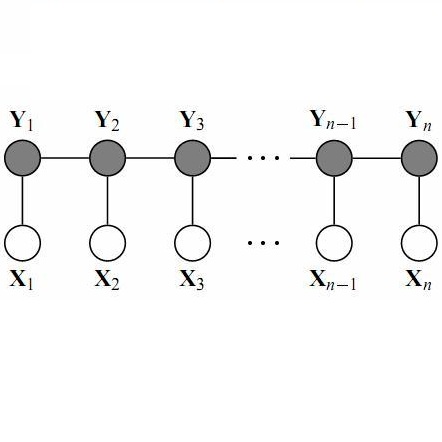

We propose a novel method for predicting image labels by fusing image content descriptors with the social media context of each image. An image uploaded to a social media site such as Flickr often has meaningful, associated information, such as comments and other images the user has uploaded, that is complementary to pixel content and helpful in predicting labels. Prediction challenges such as ImageNet~\cite{imagenet_cvpr09} and MSCOCO~\cite{LinMBHPRDZ:ECCV14} use only pixels, while other methods make predictions purely from social media context \cite{McAuleyECCV12}. Our method is based on a novel fully connected Conditional Random Field (CRF) framework, where each node is an image, and consists of two deep Convolutional Neural Networks (CNN) and one Recurrent Neural Network (RNN) that model both textual and visual node/image information. The edge weights of the CRF graph represent textual similarity and link-based metadata such as user sets and image groups. We model the CRF as an RNN for both learning and inference, and incorporate the weighted ranking loss and cross entropy loss into the CRF parameter optimization to handle the training data imbalance issue. Our proposed approach is evaluated on the MIR-9K dataset and experimentally outperforms current state-of-the-art approaches.

Image segmentation is considered to be one of the critical tasks in hyperspectral remote sensing image processing. Recently, the convolutional neural network (CNN) has established itself as a powerful model in segmentation and classification by demonstrating excellent performance. The use of a graphical model such as a conditional random field (CRF) contributes further to capturing contextual information and thus improving the segmentation performance. In this paper, we propose a method to segment hyperspectral images by considering both spectral and spatial information via a combined framework consisting of a CNN and a CRF. We use multiple spectral cubes to learn deep features using the CNN, and then formulate a deep CRF with CNN-based unary and pairwise potential functions to effectively extract the semantic correlations between patches consisting of three-dimensional data cubes. Effective piecewise training is applied in order to avoid the computationally expensive iterative CRF inference. Furthermore, we introduce a deep deconvolution network that improves the segmentation masks. We also introduce a new dataset and evaluate our proposed method on it, along with several widely adopted benchmark datasets. By comparing our results with those from several state-of-the-art models, we show the promising potential of our method.

As a basic task in computer vision, semantic segmentation can provide fundamental information for object detection and instance segmentation to help artificial intelligence better understand the real world. Since the fully convolutional neural network (FCNN) was proposed, it has been widely used in semantic segmentation because of its high accuracy in pixel-wise classification as well as its high localization precision. In this paper, we apply several well-known FCNNs to brain tumor segmentation, making comparisons and adjusting network architectures to achieve better performance measured by metrics such as precision, recall, mean intersection over union (mIoU) and Dice score coefficient (DSC). The adjustments to the classic FCNNs include adding more connections between convolutional layers, enlarging decoders after upsampling layers and changing the way information from shallower layers is reused. Besides the structural modifications, we also propose a new classifier with a hierarchical Dice loss. Inspired by the containment relationship between classes, the loss function converts multi-class classification into multiple binary classifications in order to counteract the negative effect of an imbalanced data set. Extensive experiments have been carried out on the training set and testing set in order to assess our refined fully convolutional neural networks and the new loss function. The results show that they are more effective than their predecessors.
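The metrics named above can be written down for a single binary mask as a short sketch. The function name `binary_metrics` and the flat 0/1 list representation of masks are assumptions for illustration. Averaging the IoU over classes gives mIoU.

```python
def binary_metrics(pred, gt):
    """Precision, recall, IoU and Dice for two flat 0/1 masks of equal length."""
    tp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gt) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gt) if p == 0 and g == 1)
    # Each ratio falls back to 0.0 when its denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    dice = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "iou": iou, "dice": dice}
```

Dice and IoU are monotonically related (Dice = 2·IoU / (1 + IoU)), so they rank single predictions identically but can differ once averaged over classes or images.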