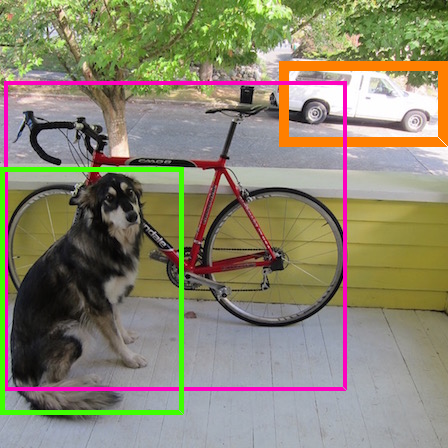

Wrong-way driving is one of the main causes of road accidents and traffic jams all over the world. Detecting wrong-way vehicles can reduce both the number of accidents and the amount of congestion. With the increasing popularity of real-time traffic management systems and the availability of cheaper cameras, surveillance video has become a major source of data. In this paper, we propose an automatic system for detecting wrong-way vehicles in on-road surveillance camera footage. Our system works in three stages: detecting vehicles in each video frame using the You Only Look Once (YOLO) algorithm, tracking each vehicle within a specified region of interest using a centroid tracking algorithm, and identifying the vehicles that are driving the wrong way. YOLO is very accurate in object detection, and the centroid tracking algorithm can track moving objects efficiently. Experiments with several traffic videos show that our proposed system can detect and identify wrong-way vehicles in different light and weather conditions. The system is simple and easy to implement.
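The tracking and wrong-way stages described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the detector (YOLO in the paper) has already produced bounding boxes as `(x1, y1, x2, y2)` tuples for each frame, and the names `CentroidTracker`, `is_wrong_way`, `max_distance`, `min_displacement`, and `allowed_direction` are hypothetical. Region-of-interest filtering and removal of vehicles that have left the scene are omitted for brevity.

```python
from dataclasses import dataclass, field
from math import hypot


@dataclass
class Track:
    track_id: int
    centroids: list = field(default_factory=list)  # history of (x, y) points


def box_centroid(box):
    """Return the center point of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


class CentroidTracker:
    """Greedy nearest-centroid tracker (illustrative, hypothetical API)."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance  # assumed matching radius in pixels
        self.tracks = {}
        self._next_id = 0

    def update(self, boxes):
        centroids = [box_centroid(b) for b in boxes]
        # All (distance, track id, detection index) pairs, closest first.
        pairs = sorted(
            (hypot(c[0] - t.centroids[-1][0], c[1] - t.centroids[-1][1]), tid, i)
            for tid, t in self.tracks.items()
            for i, c in enumerate(centroids)
        )
        used_tracks, used_dets = set(), set()
        for dist, tid, i in pairs:
            if dist > self.max_distance:
                break  # remaining pairs are even farther apart
            if tid in used_tracks or i in used_dets:
                continue
            self.tracks[tid].centroids.append(centroids[i])
            used_tracks.add(tid)
            used_dets.add(i)
        # Any detection left unmatched starts a new track.
        for i, c in enumerate(centroids):
            if i not in used_dets:
                self.tracks[self._next_id] = Track(self._next_id, [c])
                self._next_id += 1
        return self.tracks


def is_wrong_way(track, allowed_direction, min_displacement=20.0):
    """Flag a track whose net motion opposes the lane's allowed direction."""
    (x0, y0), (x1, y1) = track.centroids[0], track.centroids[-1]
    dx, dy = x1 - x0, y1 - y0
    if hypot(dx, dy) < min_displacement:
        return False  # too little motion to judge
    # A negative dot product means the vehicle moves against the lane.
    return dx * allowed_direction[0] + dy * allowed_direction[1] < 0
```

In use, `update` would be called once per frame with that frame's boxes, and `is_wrong_way` would be evaluated for each track with the lane's direction vector, for example `(0, 1)` for a lane in which traffic should move down the image. Greedy nearest-centroid matching is only one of several possible association rules; the abstract does not say which one the authors use.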

Related content

Emerging modular vehicles (MVs) can physically connect to and disconnect from each other, and can therefore travel in platoons to reduce energy consumption. Moreover, a platoon of MVs can be regarded as a new bus-like platform with expanded on-board carrying capacity that provides greater service throughput in line with demand density. This innovative concept might resolve the mismatch between fixed vehicle capacity and the temporal-spatial variations of demand in current transportation systems. To obtain the optimal assignments and routes for the operation of MVs, a mixed integer linear programming (MILP) model is formulated to minimize the weighted total of vehicle travel cost and passenger service time. The temporal and spatial synchronization of vehicle platoons and passenger en-route transfers is determined and optimized by the MILP model while constructing the paths. Heuristic algorithms based on large neighborhood search are developed to solve the modular dial-a-ride problem (MDARP) for practical scenarios. A set of small-scale synthetic numerical experiments is used to compare the optimality gap and computation time of our proposed MILP model and heuristic algorithms. Large-scale experiments are conducted on the Anaheim network with 378 candidate join/split nodes to further explore the potential of MVs and identify their ideal operation scenarios. The results show that the MV technology can save up to 52.0% in vehicle travel cost, 35.6% in passenger service time, and 29.4% in total cost compared with existing on-demand mobility services. The results also suggest that MVs benefit most from platooning when serving enclave pairs as a hub-and-spoke service.

The rapidly evolving industry demands highly accurate models without the time-consuming and computationally expensive experiments required for fine-tuning. Moreover, a model and training pipeline that was once carefully optimized for a specific dataset rarely generalizes well to training on a different dataset. This makes it unrealistic to have carefully fine-tuned models for each use case. To solve this, we propose an alternative approach that also forms a backbone of the Intel Geti platform: a dataset-agnostic template for object detection training, consisting of carefully chosen and pre-trained models together with a robust training pipeline for further training. Our solution works out-of-the-box and provides a strong baseline on a wide range of datasets. It can be used on its own or as a starting point for further fine-tuning for specific use cases when needed. We obtained dataset-agnostic templates by performing parallel training on a corpus of datasets and optimizing the choice of architectures and training tricks with respect to the average results on the whole corpus. We examined a number of architectures, taking into account the performance-accuracy trade-off. Consequently, we propose 3 finalists, VFNet, ATSS, and SSD, that can be deployed on CPU using the OpenVINO toolkit. The source code is available as a part of the OpenVINO Training Extensions (https://github.com/openvinotoolkit/training_extensions).

The popularity of unmanned aerial vehicles (UAVs) is on the rise because they enable services such as traffic monitoring, emergency communications, deliveries, and surveillance. However, the unauthorized use of UAVs (a.k.a. drones) may violate security and privacy protocols for security-sensitive national and international institutions. These challenges require fast, efficient, and precise detection of UAVs, irrespective of harsh weather conditions, the presence of other objects, and UAV size, to enable SafeSpace. Recently, there has been significant progress in using the latest deep learning models, but those models have shortcomings in terms of computational complexity, precision, and scalability. To overcome these limitations, we propose a precise and efficient multiscale and multifeature UAV detection network for SafeSpace, i.e., *MultiFeatureNet* (*MFNet*), an improved version of the popular object detection algorithm YOLOv5s. In *MFNet*, we make multiple changes in the backbone and neck of the YOLOv5s network to focus on the various small and ignored features required for accurate and fast UAV detection. To further improve the accuracy and focus on specific situations and multiscale UAVs, we classify *MFNet* into small (S), medium (M), and large (L) variants: these are combinations of filters of various sizes in the convolution and bottleneckCSP layers, which reside in the backbone and neck of the architecture. This classification helps to reduce the computational cost by training the model on a specific feature map rather than on all the features. The dataset and code are available as open source: github.com/ZeeshanKaleem/MultiFeatureNet.

Technological advancements have normalized the use of unmanned aerial vehicles (UAVs) in every sector, from military to commercial, but UAVs also pose serious security concerns due to their enhanced functionalities and easy access to private and highly secured areas. Several incidents involving UAVs have raised security concerns, leading to research on UAV detection. Visual techniques are widely adopted for UAV detection, but they perform poorly at night, against complex backgrounds, and in adverse weather conditions. Therefore, a robust night vision-based drone detection system is required that can efficiently tackle this problem. Infrared cameras are increasingly used for nighttime surveillance due to their wide applications in night vision equipment. This paper uses a deep learning-based TinyFeatureNet (TF-Net), which is an improved version of YOLOv5s, to accurately detect UAVs during the night using infrared (IR) images. In the proposed TF-Net, we introduce architectural changes in the neck and backbone of YOLOv5s. We also simulated four different YOLOv5 models (s, m, n, l) alongside the proposed TF-Net for a fair comparison. The results showed better performance for the proposed TF-Net in terms of precision, IoU, GFLOPS, model size, and FPS compared to YOLOv5s. TF-Net yielded the best results with 95.7% precision, 84% mAP, and 44.8% IoU.

Owing to their effective and flexible data acquisition, unmanned aerial vehicles (UAVs) have recently become a hotspot across the fields of computer vision (CV) and remote sensing (RS). Inspired by the recent success of deep learning (DL), many advanced object detection and tracking approaches have been widely applied to various UAV-related tasks, such as environmental monitoring, precision agriculture, and traffic management. This paper provides a comprehensive survey of the research progress and prospects of DL-based UAV object detection and tracking methods. More specifically, we first outline the challenges and statistics of existing methods, and provide solutions from the perspective of DL-based models in three research topics: object detection from images, object detection from video, and object tracking from video. Open datasets related to UAV-dominated object detection and tracking are exhaustively reviewed, and four benchmark datasets are employed for performance evaluation using some state-of-the-art methods. Finally, prospects and considerations for future work are discussed and summarized. It is expected that this survey can provide researchers from the remote sensing field with an overview of DL-based UAV object detection and tracking methods, along with some thoughts on their further development.

Conventional methods for object detection typically require a substantial amount of training data, and preparing such high-quality training data is very labor-intensive. In this paper, we propose a novel few-shot object detection network that aims at detecting objects of unseen categories with only a few annotated examples. Central to our method are our Attention-RPN, Multi-Relation Detector, and Contrastive Training strategy, which exploit the similarity between the few-shot support set and the query set to detect novel objects while suppressing false detections in the background. To train our network, we contribute a new dataset that contains 1000 categories of various objects with high-quality annotations. To the best of our knowledge, this is one of the first datasets specifically designed for few-shot object detection. Once our few-shot network is trained, it can detect objects of unseen categories without further training or fine-tuning. Our method is general and has a wide range of potential applications. We achieve new state-of-the-art performance on different datasets in the few-shot setting. The dataset link is https://github.com/fanq15/Few-Shot-Object-Detection-Dataset.

Benefiting from the rapid development of deep learning techniques, salient object detection has recently achieved remarkable progress. However, two major challenges still hinder its application in embedded devices: low-resolution output and heavy model weight. To this end, this paper presents an accurate yet compact deep network for efficient salient object detection. More specifically, given a coarse saliency prediction in the deepest layer, we first employ residual learning to learn side-output residual features for saliency refinement, which can be achieved with very few convolutional parameters while maintaining accuracy. Second, we propose reverse attention to guide this side-output residual learning in a top-down manner. By erasing the currently predicted salient regions from the side-output features, the network can eventually explore the missing object parts and details, which results in high resolution and accuracy. Experiments on six benchmark datasets demonstrate that the proposed approach compares favorably against state-of-the-art methods, with advantages in terms of simplicity, efficiency (45 FPS), and model size (81 MB).

Object tracking is the cornerstone of many visual analytics systems. While considerable progress has been made in this area in recent years, robust, efficient, and accurate tracking in real-world video remains a challenge. In this paper, we present a hybrid tracker that leverages motion information from the compressed video stream and a general-purpose semantic object detector acting on decoded frames to construct a fast and efficient tracking engine suitable for a number of visual analytics applications. The proposed approach is compared with several well-known recent trackers on the OTB tracking dataset. The results indicate advantages of the proposed method in terms of speed and/or accuracy. Another advantage of the proposed method over most existing trackers is its simplicity and deployment efficiency, which stems from the fact that it reuses and re-purposes the resources and information that may already exist in the system for other reasons.

Vision-based vehicle detection approaches have achieved great success in recent years with the development of deep convolutional neural networks (CNNs). However, existing CNN-based algorithms suffer from the problem that convolutional features are scale-sensitive in the object detection task, while traffic images and videos commonly contain vehicles with a large variance of scales. In this paper, we delve into the source of scale sensitivity and reveal two key issues: 1) existing RoI pooling destroys the structure of small-scale objects, and 2) the large intra-class distance caused by a large variance of scales exceeds the representation capability of a single network. Based on these findings, we present a scale-insensitive convolutional neural network (SINet) for fast detection of vehicles with a large variance of scales. First, we present a context-aware RoI pooling to maintain the contextual information and original structure of small-scale objects. Second, we present a multi-branch decision network to minimize the intra-class distance of features. These lightweight techniques add no extra time complexity yet bring a prominent improvement in detection accuracy. The proposed techniques can be added to any deep network architecture while keeping it trainable end-to-end. Our SINet achieves state-of-the-art performance in terms of accuracy and speed (up to 37 FPS) on the KITTI benchmark and on a new highway dataset, which contains a large variance of scales and extremely small objects.

Automatic License Plate Recognition (ALPR) has been a frequent topic of research due to its many practical applications. However, many of the current solutions are still not robust in real-world situations and commonly depend on many constraints. This paper presents a robust and efficient ALPR system based on the state-of-the-art YOLO object detector. The Convolutional Neural Networks (CNNs) are trained and fine-tuned for each ALPR stage so that they are robust under different conditions (e.g., variations in camera, lighting, and background). Specifically for character segmentation and recognition, we design a two-stage approach employing simple data augmentation tricks such as inverted License Plates (LPs) and flipped characters. The resulting ALPR approach achieved impressive results on two datasets. First, on the SSIG dataset, composed of 2,000 frames from 101 vehicle videos, our system achieved a recognition rate of 93.53% at 47 Frames Per Second (FPS), performing better than both the Sighthound and OpenALPR commercial systems (89.80% and 93.03%, respectively) and considerably outperforming previous results (81.80%). Second, targeting a more realistic scenario, we introduce a larger public dataset, called the UFPR-ALPR dataset, designed for ALPR. This dataset contains 150 videos and 4,500 frames captured while both the camera and the vehicles are moving, and it also contains different types of vehicles (cars, motorcycles, buses, and trucks). On our proposed dataset, the trial versions of the commercial systems achieved recognition rates below 70%. Our system performed better, with a recognition rate of 78.33% at 35 FPS.