We propose a principal components regression method based on maximizing a joint pseudo-likelihood for responses and predictors. Our method uses both responses and predictors to select linear combinations of the predictors relevant for the regression, thereby addressing an oft-cited deficiency of conventional principal components regression. The proposed estimator is shown to be consistent in a wide range of settings, including ones with non-normal and dependent observations; conditions on the first and second moments suffice if the number of predictors ($p$) is fixed and the number of observations ($n$) tends to infinity and dependence is weak, while stronger distributional assumptions are needed when $p \to \infty$ with $n$. We obtain the estimator's asymptotic distribution as the projection of a multivariate normal random vector onto a tangent cone of the parameter set at the true parameter, and find the estimator is asymptotically more efficient than competing ones. In simulations our method is substantially more accurate than conventional principal components regression and compares favorably to partial least squares and predictor envelopes. The method's practical usefulness is illustrated in a data example with cross-sectional prediction of stock returns.

Related content

Langevin Monte Carlo (LMC) is a popular Bayesian sampling method. For log-concave distributions, the method converges exponentially fast, up to a controllable discretization error. However, the method requires the evaluation of a full gradient in each iteration, and for a problem on $\mathbb{R}^d$, this amounts to $d$ partial derivative evaluations per iteration. The cost is high when $d\gg1$. In this paper, we investigate how to enhance computational efficiency through the application of RCD (random coordinate descent) on LMC. There are two sides of the theory: (1) By blindly applying RCD to LMC, one replaces the full gradient with a randomly selected directional derivative per iteration. Although the cost per iteration is reduced, the total number of iterations needed to achieve a preset error tolerance increases. Ultimately there is no computational gain; (2) We then incorporate variance reduction techniques, such as SAGA (stochastic average gradient) and SVRG (stochastic variance reduced gradient), into RCD-LMC. It will be proved that the cost is reduced compared with the classical LMC, and in the underdamped case, convergence is achieved with the same number of iterations, while each iteration requires merely one directional derivative. This means we obtain the best possible computational cost in the underdamped-LMC framework.
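
As a rough illustration of case (1), the sketch below contrasts a plain overdamped LMC step with a naive random-coordinate variant. It is one plausible reading of the abstract, not the authors' algorithm: the function names `lmc` and `rcd_lmc`, the unbiased single-coordinate gradient estimate $d\,\partial_r f(x)\,e_r$, and the choice to inject full-dimensional noise at every step are all assumptions made for illustration. The variance-reduced variants (SAGA, SVRG) of case (2) are not shown.

```python
import numpy as np

def lmc(grad, x0, step, n_iter, rng):
    """Overdamped LMC: one full gradient (d partial derivatives) per iteration."""
    x = np.array(x0, dtype=float)
    d = x.size
    for _ in range(n_iter):
        noise = rng.standard_normal(d)
        x = x - step * grad(x) + np.sqrt(2.0 * step) * noise
    return x

def rcd_lmc(partial, x0, step, n_iter, rng):
    """Naive RCD-LMC (assumed form): one partial derivative per iteration.

    The full gradient is replaced by d * (partial derivative along a random
    coordinate r) * e_r, which is an unbiased estimate of the gradient.
    """
    x = np.array(x0, dtype=float)
    d = x.size
    for _ in range(n_iter):
        r = rng.integers(d)               # coordinate chosen uniformly at random
        g = np.zeros(d)
        g[r] = d * partial(x, r)          # unbiased surrogate for grad f(x)
        noise = rng.standard_normal(d)
        x = x - step * g + np.sqrt(2.0 * step) * noise
    return x

if __name__ == "__main__":
    # Hypothetical target: standard Gaussian, f(x) = |x|^2 / 2.
    rng = np.random.default_rng(0)
    print(lmc(lambda x: x, np.zeros(3), 0.05, 300, rng))
    print(rcd_lmc(lambda x, r: x[r], np.zeros(3), 0.05, 300, rng))
```

Each `rcd_lmc` iteration is cheaper, but the surrogate gradient is noisier, which is consistent with the abstract's remark that more iterations are then needed for the same tolerance.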

Logistic regression is one of the most fundamental methods for modeling the probability of a binary outcome based on a collection of covariates. However, the classical formulation of logistic regression relies on the independent sampling assumption, which is often violated when the outcomes interact through an underlying network structure. This necessitates the development of models that can simultaneously handle both the network peer-effect (arising from neighborhood interactions) and the effect of high-dimensional covariates. In this paper, we develop a framework for incorporating such dependencies in a high-dimensional logistic regression model by introducing a quadratic interaction term, as in the Ising model, designed to capture pairwise interactions from the underlying network. The resulting model can also be viewed as an Ising model, where the node-dependent external fields linearly encode the high-dimensional covariates. We propose a penalized maximum pseudo-likelihood method for estimating the network peer-effect and the effect of the covariates, which, in addition to handling the high-dimensionality of the parameters, conveniently avoids the computational intractability of the maximum likelihood approach. Consequently, our method is computationally efficient and, under various standard regularity conditions, our estimate attains the classical high-dimensional rate of consistency. In particular, our results imply that even under network dependence it is possible to consistently estimate the model parameters at the same rate as in classical logistic regression, when the true parameter is sparse and the underlying network is not too dense. As a consequence of the general results, we derive the rates of consistency of our estimator for various natural graph ensembles, such as bounded degree graphs, sparse Erd\H{o}s-R\'{e}nyi random graphs, and stochastic block models.
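
To make the pseudo-likelihood idea concrete, here is a minimal sketch of an $\ell_1$-penalized negative log pseudo-likelihood for an Ising-type model with covariates. The parametrization is an assumption, not taken from the abstract: outcomes are coded as $y_i \in \{-1, +1\}$, the local field is $m_i = \beta \sum_j A_{ij} y_j + \theta^\top x_i$, and only $\theta$ is penalized. The function name and arguments are hypothetical.

```python
import numpy as np

def neg_log_pseudolikelihood(y, X, A, beta, theta, lam):
    """Penalized negative log pseudo-likelihood (illustrative parametrization).

    y     : (n,) outcomes in {-1, +1}
    X     : (n, p) covariates
    A     : (n, n) symmetric adjacency matrix with zero diagonal
    beta  : scalar peer-effect parameter
    theta : (p,) covariate coefficients
    lam   : l1 penalty weight applied to theta
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    A = np.asarray(A, dtype=float)
    theta = np.asarray(theta, dtype=float)
    # Local field at each node: neighbourhood term plus covariate term.
    m = beta * (A @ y) + X @ theta
    # log P(y_i | rest) = y_i * m_i - log(exp(m_i) + exp(-m_i)), computed stably.
    log_cond = y * m - np.logaddexp(m, -m)
    return -log_cond.mean() + lam * np.abs(theta).sum()
```

Each conditional probability involves only one node's neighbours, so the objective avoids the normalizing constant that makes the full likelihood intractable.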

Multiclass logistic regression is a fundamental task in machine learning with applications in classification and boosting. Previous work (Foster et al., 2018) has highlighted the importance of improper predictors for achieving "fast rates" in the online multiclass logistic regression problem without suffering exponentially from secondary problem parameters, such as the norm of the predictors in the comparison class. While Foster et al. (2018) introduced a statistically optimal algorithm, it is in practice computationally intractable due to its run-time complexity being a large polynomial in the time horizon and dimension of input feature vectors. In this paper, we develop a new algorithm, FOLKLORE, for the problem which runs significantly faster than the algorithm of Foster et al. (2018) -- the running time per iteration scales quadratically in the dimension -- at the cost of a linear dependence on the norm of the predictors in the regret bound. This yields the first practical algorithm for online multiclass logistic regression, resolving an open problem of Foster et al. (2018). Furthermore, we show that our algorithm can be applied to online bandit multiclass prediction and online multiclass boosting, yielding more practical algorithms for both problems compared to the ones in Foster et al. (2018) with similar performance guarantees. Finally, we also provide an online-to-batch conversion result for our algorithm.

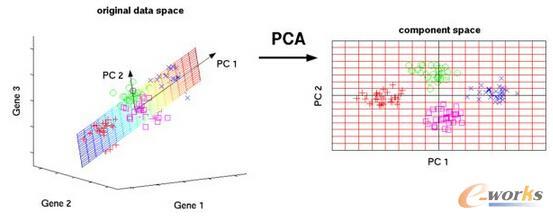

Ordinal data occur frequently in the social sciences. When applying principal components analysis (PCA), however, those data are often treated as numeric, implying linear relationships between the variables at hand, or non-linear PCA is applied where the obtained quantifications are sometimes hard to interpret. Non-linear PCA for categorical data, also called optimal scoring/scaling, constructs new variables by assigning numerical values to categories such that the proportion of variance in those new variables that is explained by a predefined number of principal components is maximized. We propose a penalized version of non-linear PCA for ordinal variables that is an intermediate between standard PCA on category labels and non-linear PCA as used so far. The new approach is by no means limited to monotonic effects, and offers both better interpretability of the non-linear transformation of the category labels as well as better performance on validation data than unpenalized non-linear PCA and/or standard linear PCA. In particular, an application of penalized non-linear PCA to ordinal data as given with the International Classification of Functioning, Disability and Health (ICF) is provided.

Modern variable selection procedures make use of penalization methods to execute simultaneous model selection and estimation. A popular method is the LASSO (least absolute shrinkage and selection operator), which contains a tuning parameter. This parameter is typically tuned by minimizing the cross-validation error or Bayesian information criterion (BIC) but this can be computationally intensive as it involves fitting an array of different models and selecting the best one. However, we have developed a procedure based on the so-called "smooth IC" (SIC) in which the tuning parameter is automatically selected in one step. We also extend this model selection procedure to the so-called "multi-parameter regression" framework, which is more flexible than classical regression modelling. Multi-parameter regression introduces flexibility by taking account of the effect of covariates through multiple distributional parameters simultaneously, e.g., mean and variance. These models are useful in the context of normal linear regression when the process under study exhibits heteroscedastic behaviour. Reformulating the multi-parameter regression estimation problem in terms of penalized likelihood enables us to take advantage of the close relationship between model selection criteria and penalization. Utilizing the SIC is computationally advantageous, as it obviates the issue of having to choose multiple tuning parameters.

We introduce Autoregressive Diffusion Models (ARDMs), a model class encompassing and generalizing order-agnostic autoregressive models (Uria et al., 2014) and absorbing discrete diffusion (Austin et al., 2021), which we show are special cases of ARDMs under mild assumptions. ARDMs are simple to implement and easy to train. Unlike standard ARMs, they do not require causal masking of model representations, and can be trained using an efficient objective similar to modern probabilistic diffusion models that scales favourably to high-dimensional data. At test time, ARDMs support parallel generation which can be adapted to fit any given generation budget. We find that ARDMs require significantly fewer steps than discrete diffusion models to attain the same performance. Finally, we apply ARDMs to lossless compression, and show that they are uniquely suited to this task. Contrary to existing approaches based on bits-back coding, ARDMs obtain compelling results not only on complete datasets, but also on compressing single data points. Moreover, this can be done using a modest number of network calls for (de)compression due to the model's adaptable parallel generation.

This paper considers inference in a linear regression model with random right censoring and outliers. The number of outliers can grow with the sample size while their proportion goes to zero. The model is semiparametric and we make only very mild assumptions on the distribution of the error term, contrary to most other existing approaches in the literature. We propose to penalize the estimator proposed by Stute for censored linear regression by the l1-norm. We derive rates of convergence and establish asymptotic normality of the estimator of the regression coefficients. Our estimator has the same asymptotic variance as Stute's estimator in the censored linear model without outliers. Hence, there is no loss of efficiency as a result of robustness. Tests and confidence sets can therefore rely on the theory developed by Stute. The outlined procedure is also computationally advantageous, since it amounts to solving a convex optimization program. We also propose a second estimator which uses the proposed penalized Stute estimator as a first step to detect outliers. It has similar theoretical properties but better performance in finite samples as assessed by simulations.

Real-world applications of machine learning tools in high-stakes domains are often regulated to be fair, in the sense that the predicted target should satisfy some quantitative notion of parity with respect to a protected attribute. However, the exact tradeoff between fairness and accuracy with a real-valued target is not entirely clear. In this paper, we characterize the inherent tradeoff between statistical parity and accuracy in the regression setting by providing a lower bound on the error of any fair regressor. Our lower bound is sharp, algorithm-independent, and admits a simple interpretation: when the moments of the target differ between groups, any fair algorithm has to make an error on at least one of the groups. We further extend this result to give a lower bound on the joint error of any (approximately) fair algorithm, using the Wasserstein distance to measure the quality of the approximation. With our novel lower bound, we also show that the price paid by a fair regressor that does not take the protected attribute as input is less than that of a fair regressor with explicit access to the protected attribute. On the upside, we establish the first connection between individual fairness, accuracy parity, and the Wasserstein distance by showing that if a regressor is individually fair, it also approximately verifies the accuracy parity, where the gap is given by the Wasserstein distance between the two groups. Inspired by our theoretical results, we develop a practical algorithm for fair regression through the lens of representation learning, and conduct experiments on a real-world dataset to corroborate our findings.

We propose a verified computation method for eigenvalues in a region and the corresponding eigenvectors of generalized Hermitian eigenvalue problems. The proposed method uses complex moments to extract the eigencomponents of interest from a random matrix and uses the Rayleigh--Ritz procedure to project a given eigenvalue problem into a reduced eigenvalue problem. The complex moment is given by a contour integral and approximated using numerical quadrature. We split the error in the complex moment into the truncation error of the quadrature and rounding errors and evaluate each. This error evaluation follows our previous Hankel matrix approach, whereas the proposed method requires only half as many quadrature points as the previous approach to reduce the truncation error to the same order. Moreover, the Rayleigh--Ritz procedure approach forms a transformation matrix that enables verification of the eigenvectors. Numerical experiments show that the proposed method is faster than previous methods while maintaining verification performance.
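
The following sketch shows the unverified core of such a scheme in ordinary floating point: a zeroth-order complex moment approximated by the trapezoidal rule on a circle, followed by a Rayleigh--Ritz projection. It does not perform any error verification (no interval arithmetic or rounding-error bounds), uses only the zeroth moment, and its function name, arguments, and default quadrature size are assumptions chosen for illustration.

```python
import numpy as np

def contour_rayleigh_ritz(A, B, center, radius, n_cols, n_quad=32, seed=0):
    """Approximate eigenpairs of A x = lambda B x with lambda inside a circle.

    A, B   : Hermitian matrices, B positive definite
    n_cols : number of random columns (at least the number of eigenvalues inside)
    """
    rng = np.random.default_rng(seed)
    n = A.shape[0]
    V = rng.standard_normal((n, n_cols))          # random source matrix
    S = np.zeros((n, n_cols), dtype=complex)
    for j in range(n_quad):
        theta = 2.0 * np.pi * (j + 0.5) / n_quad  # nodes kept off the real axis
        w = radius * np.exp(1j * theta)
        z = center + w
        # Trapezoidal rule for (1 / (2 pi i)) * contour integral of (zB - A)^{-1} B V dz
        S += w * np.linalg.solve(z * B - A, B @ V)
    S /= n_quad
    Q, _ = np.linalg.qr(S)                        # orthonormal basis of the filtered subspace
    Ar = Q.conj().T @ A @ Q                       # reduced (Rayleigh--Ritz) problem
    Br = Q.conj().T @ B @ Q
    L = np.linalg.cholesky(Br)                    # Br = L L^H
    X = np.linalg.solve(L, Ar)
    M = np.linalg.solve(L, X.conj().T).conj().T   # M = L^{-1} Ar L^{-H}
    ritz_values, Y = np.linalg.eigh(M)
    ritz_vectors = Q @ np.linalg.solve(L.conj().T, Y)
    return ritz_values, ritz_vectors
```

With `n_cols` equal to the number of eigenvalues inside the circle, the Ritz values approximate exactly those eigenvalues; a larger `n_cols` can make the reduced matrix `Br` nearly singular in this simple version.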

Graph Neural Networks (GNNs) have been shown to be effective models for different predictive tasks on graph-structured data. Recent work on their expressive power has focused on isomorphism tasks and countable feature spaces. We extend this theoretical framework to include continuous features - which occur regularly in real-world input domains and within the hidden layers of GNNs - and we demonstrate the requirement for multiple aggregation functions in this context. Accordingly, we propose Principal Neighbourhood Aggregation (PNA), a novel architecture combining multiple aggregators with degree-scalers (which generalize the sum aggregator). Finally, we compare the capacity of different models to capture and exploit the graph structure via a novel benchmark containing multiple tasks taken from classical graph theory, alongside existing benchmarks from real-world domains, all of which demonstrate the strength of our model. With this work, we hope to steer some of the GNN research towards new aggregation methods which we believe are essential in the search for powerful and robust models.
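
A minimal sketch of the idea of combining several aggregators with degree-scalers, for a single node, might look as follows. The specific aggregators (mean, max, min, standard deviation) and the logarithmic scalers (identity, amplification, attenuation) are illustrative assumptions rather than details given in the abstract, and `pna_aggregate` and `delta` are hypothetical names.

```python
import numpy as np

def pna_aggregate(neighbor_feats, delta):
    """Combine multiple aggregators with degree-based scalers for one node.

    neighbor_feats : (deg, F) array of neighbour feature vectors, deg >= 1
    delta          : normalisation constant, e.g. the average of log(deg + 1)
                     over the training graphs (assumed to be precomputed)
    Returns a vector of length 3 * 4 * F.
    """
    h = np.asarray(neighbor_feats, dtype=float)
    deg = h.shape[0]
    # Four aggregators applied feature-wise over the neighbourhood.
    agg = np.concatenate([h.mean(axis=0), h.max(axis=0), h.min(axis=0), h.std(axis=0)])
    # Degree scalers: identity, amplification, attenuation.
    s = np.log(deg + 1.0) / delta
    scalers = [1.0, s, 1.0 / s]
    return np.concatenate([c * agg for c in scalers])
```

In a full layer the concatenated vector would typically be passed through a learned transformation; that step is omitted here.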