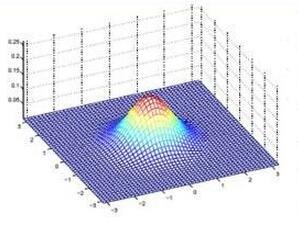

In this work, we consider a spatial-temporal multi-species competition model. The mathematical model is described by a coupled system of nonlinear diffusion-reaction equations. For the numerical solution of the model with the corresponding boundary and initial conditions, we use a finite volume approximation in space combined with a semi-implicit time discretization. To understand the effect of diffusion on the solution in one- and two-dimensional formulations, we present numerical results for several parameter sets corresponding to different survival scenarios. We investigate the effect of random initial conditions on the time needed to reach equilibrium, and we present the influence of diffusion on the survival scenarios. In real-world problems, the parameter values are usually unknown and vary within some range. To evaluate the impact of the parameters on system stability, we simulate the spatial-temporal model with random parameters and perform a factor analysis for two- and three-species competition models.
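
A minimal sketch of one semi-implicit finite volume time step in one dimension may help make the discretization concrete. It assumes a Lotka-Volterra competition reaction term $r_i u_i (1 - \sum_j a_{ij} u_j)$ and zero-flux boundaries; the abstract does not state the exact reaction terms or boundary conditions, and the names `step`, `D`, `r`, and `a` are hypothetical. Diffusion is treated implicitly and the nonlinear reaction explicitly, so each species needs only one linear solve per step.

```python
import numpy as np

def diffusion_matrix(n, h, D, tau):
    """Return I - tau*D*L, where L is the 1D finite volume Laplacian on n cells
    of width h with zero-flux (Neumann) boundaries (assumed boundary condition)."""
    A = np.eye(n)
    c = tau * D / h**2
    for i in range(n - 1):
        # Flux across the face between cells i and i+1.
        A[i, i] += c
        A[i + 1, i + 1] += c
        A[i, i + 1] -= c
        A[i + 1, i] -= c
    return A

def step(u, D, r, a, h, tau):
    """One semi-implicit step for m species on n cells; u has shape (m, n).
    The reaction (assumed Lotka-Volterra competition) is explicit, diffusion implicit."""
    reaction = r[:, None] * u * (1.0 - a @ u)
    new = np.empty_like(u)
    for i in range(u.shape[0]):
        A = diffusion_matrix(u.shape[1], h, D[i], tau)
        new[i] = np.linalg.solve(A, u[i] + tau * reaction[i])
    return new
```

Two sanity checks follow from the construction: with $r = 0$ the scheme conserves the total mass of each species, since every column of the diffusion matrix sums to one, and with weak competition ($a_{11} = a_{22} = 1$, $a_{12} = a_{21} = 0.5$, $r_i = 1$) the spatially constant coexistence state $u_1 = u_2 = 2/3$ is a fixed point of `step`. Random initial conditions can be generated with, for example, `np.random.default_rng(0).uniform(size=(2, n))`.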

Related content

The $k$-Server Problem covers many resource allocation scenarios, and several of its variants have been studied extensively for decades. We present a model that generalizes the $k$-Server Problem by adding request preferences: the servers are not identical, and a request can specify which server should serve it. In our model, a request can be answered either by any server (a general request) or only by a specific one (a specific request). If only general requests appear, the instance is an instance of the original $k$-Server Problem, and the known lower bound of $k$ on the competitive ratio applies. If only specific requests appear, achieving a competitive ratio of $1$ is trivial. We show that if both kinds of requests appear, the lower bound rises to $2k-1$. We study deterministic online algorithms and present two algorithms for uniform metrics. The competitive ratio of the first depends on the frequency of specific requests: its worst-case competitive ratio is $3k-2$, and it is optimal when only general requests appear or when specific requests dominate the input sequence. The second has a worst-case competitive ratio of $2k+14$. For the first algorithm, we show a lower bound of $3k-2$, while the second algorithm has a lower bound of $2k-1$ when only general requests appear. The two algorithms differ in only one behavioral rule, which significantly influences the competitive ratio. Based on this rule, we show a trade-off between performing well on instances of the $k$-Server Problem and performing well on mixed instances. Moreover, no deterministic online algorithm can be optimal for both kinds of instances simultaneously. For non-uniform metrics, we present an adaptation of the Double Coverage algorithm for $2$ servers on the line that achieves a competitive ratio of $6$, and an adaptation of the Work Function Algorithm that achieves a competitive ratio of $4k$.
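
To make the request model concrete, the following sketch computes the cost of serving a mixed request sequence on a uniform metric, where every server move costs 1. The rule for general requests (move the least recently used server) is a hypothetical placeholder chosen for illustration; it is not either of the two algorithms described above, and `serve` and the request encoding are assumptions.

```python
from typing import List, Optional, Tuple

# A request is (point, server): server=None marks a general request,
# an integer index marks a specific request for that server.
Request = Tuple[str, Optional[int]]

def serve(requests: List[Request], initial: List[str]) -> int:
    """Total movement cost on a uniform metric (each move costs 1)."""
    positions = list(initial)
    last_used = [-1] * len(positions)
    cost = 0
    for t, (point, server) in enumerate(requests):
        if server is None:
            # General request: free if any server already sits at the point.
            if point in positions:
                idx = positions.index(point)
            else:
                # Hypothetical rule: move the least recently used server.
                idx = min(range(len(positions)), key=lambda i: last_used[i])
                positions[idx] = point
                cost += 1
        else:
            # Specific request: only the named server may answer it.
            idx = server
            if positions[idx] != point:
                positions[idx] = point
                cost += 1
        last_used[idx] = t
    return cost
```

With only specific requests, moving the named server exactly when it is needed already pays the minimum possible cost, which mirrors the trivial competitive ratio of $1$ mentioned above; the difficulty comes from general requests, whose choice of server affects the cost of later specific requests.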

Neural ordinary differential equations (Neural ODEs) model continuous-time dynamics as differential equations parametrized by neural networks. Thanks to their modeling flexibility, they have been adopted for multiple tasks in which the continuous-time nature of the process is especially relevant, such as system identification and time series analysis. In a control setting, they can be adapted to approximate optimal nonlinear feedback policies. This formulation follows the same approach as policy gradients in reinforcement learning, covering the case where the environment consists of known deterministic dynamics given by a system of differential equations. The white-box nature of the model specification allows policy gradients to be computed directly through sensitivity analysis, avoiding inexact and inefficient sampling-based gradient estimation. In this work, we propose a neural control policy posed as a Neural ODE to solve general nonlinear optimal control problems while satisfying both state and control constraints, which are crucial in real-world scenarios. Since the state feedback policy partially modifies the model dynamics, the whole phase space of the system is reshaped during optimization. This approach is a sensible approximation to the historically intractable closed-loop solution of nonlinear control problems, and it efficiently exploits the availability of a dynamical system model.
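
The idea of computing policy gradients by sensitivity analysis instead of sampling can be illustrated on a toy problem. The sketch below is a deliberately small example under stated assumptions, not the method of the paper: the dynamics are $\dot{x} = x + u$, the policy is a single linear feedback gain $u = -\theta x$ standing in for a neural network, the running cost is $x^2 + u^2$, constraints are ignored, and time is discretized with explicit Euler. The sensitivity $s = \partial x / \partial \theta$ is propagated alongside the state, which gives the exact gradient of the discretized cost.

```python
import numpy as np

def cost_and_grad(theta, x0=1.0, T=10.0, dt=0.01):
    """Euler-discretized closed-loop cost J(theta) and its exact derivative.

    Assumed toy problem: x' = x + u, policy u = -theta * x, running cost x^2 + u^2.
    """
    x, s = x0, 0.0  # state and sensitivity s = dx/dtheta
    J, dJ = 0.0, 0.0
    for _ in range(int(round(T / dt))):
        J += dt * (1.0 + theta**2) * x**2
        dJ += dt * (2.0 * theta * x**2 + 2.0 * (1.0 + theta**2) * x * s)
        # Simultaneous update: both right-hand sides use the current x and s.
        x, s = x + dt * (1.0 - theta) * x, s + dt * ((1.0 - theta) * s - x)
    return J, dJ

def train(theta=2.0, lr=0.5, iters=200):
    """Plain gradient descent on the feedback gain."""
    for _ in range(iters):
        _, grad = cost_and_grad(theta)
        theta -= lr * grad
    return theta
```

For this linear-quadratic problem the optimal constant gain is $1 + \sqrt{2} \approx 2.414$, the root of the scalar Riccati equation $2P - P^2 + 1 = 0$, so `train()` should approach that value up to a small Euler discretization error. Because the gradient is obtained by differentiating the simulation itself, it agrees with a finite-difference estimate of the same discretized cost.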

In this paper, we use different metrics on Euclidean space and Sobolev-type metrics on function spaces to produce reliable parameters for distinguishing point distributions and dynamical systems. The main tool is the analysis of the geometric evolution of the hypergraphs generated as a radial parameter grows, for an appropriate choice of metric on the space containing the data points. Once this geometric dynamics is obtained, we use Lebesgue and Sobolev-type norms to compare the resulting geometric signals.
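
A minimal sketch of such a comparison pipeline, under simplifying assumptions: as a stand-in for the hypergraph evolution, it records the number of point pairs within distance $r$ as the radius grows (a radius graph rather than a hypergraph), using a $p$-norm metric chosen by the caller. Two such signals are then compared with a discrete $L^2$ norm and a discrete Sobolev $H^1$ norm. The functions `radius_signal`, `l2_distance`, and `h1_distance` are hypothetical names, not part of the paper.

```python
import numpy as np

def radius_signal(points, radii, ord=2):
    """Number of point pairs within distance r, for each r (p-norm metric `ord`)."""
    diff = points[:, None, :] - points[None, :, :]
    d = np.linalg.norm(diff, ord=ord, axis=-1)
    # Upper triangle without the diagonal: each unordered pair counted once.
    pair_d = d[np.triu_indices(len(points), k=1)]
    return np.array([(pair_d <= r).sum() for r in radii], dtype=float)

def l2_distance(f, g, dr):
    """Discrete L^2 distance between two signals sampled on a uniform radius grid."""
    return np.sqrt(dr * np.sum((f - g) ** 2))

def h1_distance(f, g, dr):
    """Discrete H^1 distance: L^2 part plus L^2 norm of the finite-difference derivative."""
    e = f - g
    de = np.diff(e) / dr
    return np.sqrt(dr * np.sum(e**2) + dr * np.sum(de**2))
```

Changing `ord` from `2` to `np.inf` changes the signal for the same three points `[[0, 0], [1, 0], [0, 1]]` (the diagonal pair enters at radius $\sqrt{2}$ in the Euclidean metric but at radius $1$ in the maximum metric), which illustrates why the choice of metric matters. The $H^1$ distance also penalizes differences in how quickly the signals change, not only in their values.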

This paper introduces a new modeling framework for the statistical analysis of point patterns on a manifold $\mathbb{M}_d$, defined as a connected and compact two-point homogeneous space, which includes the sphere as a special case. The approach is based on temporal Cox processes driven by an $L^2(\mathbb{M}_d)$-valued log-intensity. Different aggregation schemes on the manifold for the spatiotemporal point-referenced data are implemented in terms of the time-varying discrete Jacobi polynomial transform of the log-risk process. The evolution in time of the $n$-dimensional microscale point pattern at different spatial scales of the manifold is then characterized from this transform. The simulation study illustrates the construction of spherical point process models that display aggregation at low Legendre polynomial transform frequencies (large scales) and regularity at high frequencies (small scales). K-function analysis supports these results under short-, intermediate-, and long-range temporal dependence of the log-risk process.
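
As an illustration of how a log-intensity expanded in Legendre polynomials drives a Cox process on the sphere, the sketch below simulates one time slice by thinning a homogeneous Poisson process. It assumes a zonal (axially symmetric) log-intensity $\sum_l c_l P_l(\cos\vartheta)$ with coefficients $c_l$ supplied by the caller; the temporal dependence, the general manifold $\mathbb{M}_d$, and the paper's aggregation schemes are not modeled, and `sample_cox_sphere` is a hypothetical name.

```python
import numpy as np
from numpy.polynomial import legendre

def sample_cox_sphere(coeffs, rng):
    """One realization on the unit sphere S^2 by thinning.

    Assumed log-intensity: sum_l coeffs[l] * P_l(z), with z = cos(polar angle).
    """
    coeffs = np.asarray(coeffs, dtype=float)
    # |P_l(z)| <= 1 on [-1, 1], so exp(sum |c_l|) bounds the intensity.
    lam_max = np.exp(np.sum(np.abs(coeffs)))
    # Homogeneous dominating process: Poisson count over area 4*pi, uniform locations.
    n = rng.poisson(lam_max * 4.0 * np.pi)
    pts = rng.normal(size=(n, 3))
    pts /= np.linalg.norm(pts, axis=1, keepdims=True)
    lam = np.exp(legendre.legval(pts[:, 2], coeffs))
    # Keep each candidate with probability lam / lam_max.
    keep = rng.uniform(size=n) < lam / lam_max
    return pts[keep]
```

Drawing the coefficients at random, for example `coeffs = rng.normal(0.0, 0.5, size=6)`, makes the intensity itself random, which is what distinguishes a Cox process from an inhomogeneous Poisson process. Putting more weight on low-degree coefficients varies the intensity over large regions of the sphere, while high-degree coefficients vary it over small regions.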

Language models suffer from various degenerate behaviors. These differ between tasks: machine translation (MT) exhibits length bias, while tasks like story generation exhibit excessive repetition. Recent work has attributed the difference to task constrainedness, but evidence for this claim has always involved many confounding variables. To study this question directly, we introduce a new experimental framework that allows us to smoothly vary task constrainedness, from MT at one end to fully open-ended generation at the other, while keeping all other aspects fixed. We find that: (1) repetition decreases smoothly with constrainedness, explaining the difference in repetition across tasks; (2) length bias surprisingly also decreases with constrainedness, suggesting some other cause for the difference in length bias; (3) across the board, these problems affect the mode, not the whole distribution; (4) the differences cannot be attributed to a change in the entropy of the distribution, since another method of changing the entropy, label smoothing, does not produce the same effect.

This paper considers the estimation of, and inference on, the low-rank components in high-dimensional matrix-variate factor models, where each dimension of the $p \times q$ matrix-variates is comparable to or greater than the number of observations $T$. We propose an estimation method called $\alpha$-PCA that preserves the matrix structure and aggregates the mean and the contemporaneous covariance through a hyper-parameter $\alpha$. We develop an inferential theory, establishing consistency, rates of convergence, and limiting distributions, under general conditions that allow for correlations across time, rows, or columns of the noise. We present both theoretical and empirical methods for choosing the best $\alpha$, depending on the use-case criteria. Simulation results demonstrate that the asymptotic results approximate the finite-sample properties well. The $\alpha$-PCA compares favorably with existing methods. Finally, we illustrate its application with one real numeric data set and two real image data sets. In all applications, the proposed estimation procedure outperforms previous methods in out-of-sample explained variance under 10-fold cross-validation.
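
A compact sketch of an $\alpha$-PCA-style estimator, based on our reading of the construction described above: the row (column) loadings are the leading eigenvectors of a matrix that combines $(1+\alpha)$ times the outer product of the sample mean with the sample covariance, and the loadings are normalized so that $R^\top R = pI$ and $C^\top C = qI$. The exact scaling constants, the normalization, and the function name `alpha_pca` are assumptions; the paper should be consulted for the precise definitions.

```python
import numpy as np

def alpha_pca(X, k1, k2, alpha=0.0):
    """Sketch of alpha-PCA for X of shape (T, p, q).

    Returns row loadings R (p, k1), column loadings C (q, k2) and factors F (T, k1, k2).
    """
    T, p, q = X.shape
    Xbar = X.mean(axis=0)
    Xc = X - Xbar
    # Row side: (1 + alpha) * mean outer product + sample covariance, scaled by 1/(pq).
    MR = ((1 + alpha) * Xbar @ Xbar.T + np.einsum("tij,tkj->ik", Xc, Xc) / T) / (p * q)
    # Column side: the same construction with the matrices transposed.
    MC = ((1 + alpha) * Xbar.T @ Xbar + np.einsum("tji,tjk->ik", Xc, Xc) / T) / (p * q)
    # eigh returns eigenvalues in ascending order, so reverse to take the leading ones.
    R = np.sqrt(p) * np.linalg.eigh(MR)[1][:, ::-1][:, :k1]
    C = np.sqrt(q) * np.linalg.eigh(MC)[1][:, ::-1][:, :k2]
    # With R^T R = p I and C^T C = q I, the factors are R^T X_t C / (pq).
    F = np.einsum("ia,tij,jb->tab", R, X, C) / (p * q)
    return R, C, F
```

Under this construction, $\alpha = -1$ removes the mean term and reduces to PCA on the demeaned data, while larger values of $\alpha$ put more weight on the mean. On noise-free data of the form $X_t = R_0 F_t C_0^\top$, the estimated loadings span the same row and column spaces, so `R @ F[t] @ C.T` reproduces each `X[t]`.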

Predicting the behaviour of shoppers provides valuable information for retailers, such as the expected spend of a shopper or the total turnover of a supermarket. Predictions at the individual level are useful because they allow supermarkets to perform targeted marketing accurately. However, given the number of shoppers and their diverse behaviours, making accurate predictions at the individual level is difficult. This problem arises not only for shopper behaviour but also in various business processes, such as predicting when an invoice will be paid. In this paper, we present CAPiES, a framework that addresses the trade-off between predictive accuracy and the usefulness of predictions in an online setting. By making predictions for a larger number of entities at a time, we improve predictive accuracy, potentially at the cost of usefulness, since we can say less about the individual entities. CAPiES is developed for an online setting, in which we continuously update the prediction model and make new predictions over time. We demonstrate the existence of this trade-off experimentally in two real-world scenarios: a supermarket with over 160 000 shoppers and a paint factory with over 171 000 invoices.
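
The accuracy side of the trade-off can be illustrated with a small simulation. The sketch below is not CAPiES: it assumes a hypothetical per-entity running-mean predictor (`OnlineMean`) that is updated as new observations arrive for every entity, and it measures the relative error of predicted totals over groups of entities with a hypothetical helper `group_relative_error`. Larger groups give smaller relative errors because independent individual errors partly cancel, but each prediction then says less about any single entity.

```python
import numpy as np

class OnlineMean:
    """Hypothetical per-entity predictor: running mean updated online."""

    def __init__(self, n_entities):
        self.count = 0
        self.mean = np.zeros(n_entities)

    def update(self, values):
        # Incremental mean: no need to store past observations.
        self.count += 1
        self.mean += (values - self.mean) / self.count

    def predict(self):
        return self.mean.copy()

def group_relative_error(actual, predicted, group_size):
    """Mean absolute relative error of summed predictions over consecutive groups."""
    n = (len(actual) // group_size) * group_size
    a = actual[:n].reshape(-1, group_size).sum(axis=1)
    p = predicted[:n].reshape(-1, group_size).sum(axis=1)
    return float(np.mean(np.abs(a - p) / np.abs(a)))
```

For example, with simulated gamma-distributed spend per shopper, comparing `group_relative_error(actual, pred, 1)` with `group_relative_error(actual, pred, 100)` should show the error shrinking as the group grows, at the price of predictions about groups rather than individuals.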

This paper focuses on the expected difference in a borrower's repayment when the lender's credit decisions change. Classical estimators overlook confounding effects, so their estimation error can be substantial. We therefore propose an alternative approach to constructing estimators that greatly reduces this error. Through a combination of theoretical analysis and numerical testing, the proposed estimators are shown to be unbiased, consistent, and robust. Moreover, we compare the classical and proposed estimators in terms of how well they estimate the causal quantities. The comparison covers a wide range of models, including linear regression models, tree-based models, and neural-network-based models, on simulated datasets with different levels of causality, different degrees of nonlinearity, and different distributional properties. Most importantly, we apply our approaches to a large observational dataset provided by a global technology firm that operates in both e-commerce and lending. We find that the relative reduction in estimation error is substantial when the causal effects are correctly accounted for.
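
Why ignoring confounders inflates the error can be seen in a small simulation. The sketch below assumes a single observed confounder `x` that raises both the probability of approval `d` and the repayment `y`, with a true effect of approval equal to 1. It compares the naive difference in means with ordinary least-squares regression adjustment; regression adjustment is a standard textbook correction swapped in for illustration, not the estimator proposed in the paper, and all function names are hypothetical.

```python
import numpy as np

def simulate(n, effect=1.0, seed=0):
    """Hypothetical data: confounder x drives both approval d and repayment y."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=n)
    d = (rng.uniform(size=n) < 1.0 / (1.0 + np.exp(-2.0 * x))).astype(float)
    y = effect * d + 2.0 * x + rng.normal(size=n)
    return x, d, y

def naive_effect(y, d):
    """Difference in mean outcome between approved and rejected borrowers."""
    return y[d == 1].mean() - y[d == 0].mean()

def adjusted_effect(y, d, x):
    """Regression adjustment: OLS of y on [1, d, x]; return the coefficient of d."""
    Z = np.column_stack([np.ones_like(y), d, x])
    beta, *_ = np.linalg.lstsq(Z, y, rcond=None)
    return beta[1]
```

In this setup the naive estimate is biased well above 1, because approved borrowers also tend to have larger `x`. Regression adjustment recovers the effect here only because the confounder is observed and the outcome model is correctly specified.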

Transfer learning aims to improve the performance of target learners on target domains by transferring the knowledge contained in different but related source domains. In this way, the amount of target-domain data needed to construct target learners can be reduced. Owing to its wide range of potential applications, transfer learning has become a popular and promising area of machine learning. Although there are already some valuable and impressive surveys on transfer learning, they introduce approaches in a relatively isolated way and do not cover recent advances. Given the rapid expansion of the field, a comprehensive review of the relevant studies is both necessary and challenging. This survey attempts to connect and systematize existing transfer learning research and to summarize and interpret its mechanisms and strategies comprehensively, which may help readers better understand the current state of research and its ideas. Unlike previous surveys, this survey reviews over forty representative transfer learning approaches from the perspectives of data and models. Applications of transfer learning are also briefly introduced. To show the performance of different transfer learning models, we run experiments with twenty representative models on three datasets: Amazon Reviews, Reuters-21578, and Office-31. The experimental results demonstrate the importance of selecting appropriate transfer learning models for different applications in practice.

Many tasks in natural language processing can be viewed as multi-label classification problems. However, most existing models are trained with the standard cross-entropy loss function and use a fixed prediction policy (e.g., a threshold of 0.5) for all labels, which ignores the complexity of, and dependencies among, different labels. In this paper, we propose a meta-learning method to capture these complex label dependencies. More specifically, our method uses a meta-learner to jointly learn training policies and prediction policies for different labels. The training policies are then used to train the classifier with the cross-entropy loss function, and the prediction policies are applied at prediction time. Experimental results on fine-grained entity typing and text classification show that the proposed method yields more accurate multi-label classification results.
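
The contrast between a fixed threshold of 0.5 and label-specific prediction policies can be illustrated with a simple per-label threshold search on held-out data. This is a plain heuristic swapped in for illustration, not the meta-learning method proposed above; `tune_thresholds`, the candidate grid, and the F1 criterion are assumptions.

```python
import numpy as np

def f1(y_true, y_pred):
    """F1 score for boolean arrays; defined as 0 when there are no true positives."""
    tp = np.sum(y_true & y_pred)
    fp = np.sum(~y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    return 2 * tp / (2 * tp + fp + fn) if tp > 0 else 0.0

def tune_thresholds(scores, labels, candidates=np.linspace(0.05, 0.95, 19)):
    """Pick, per label, the candidate threshold that maximizes F1 on held-out data.

    scores: (n_examples, n_labels) probabilities; labels: boolean array, same shape.
    """
    n_labels = scores.shape[1]
    th = np.full(n_labels, 0.5)
    for j in range(n_labels):
        best = -1.0
        for t in candidates:
            s = f1(labels[:, j], scores[:, j] >= t)
            if s > best:
                best, th[j] = s, t
    return th
```

Because each label gets its own threshold, a label whose scores rarely exceed 0.5 can still be predicted. The search treats labels independently, however, so it does not capture the label dependencies that the proposed method targets.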