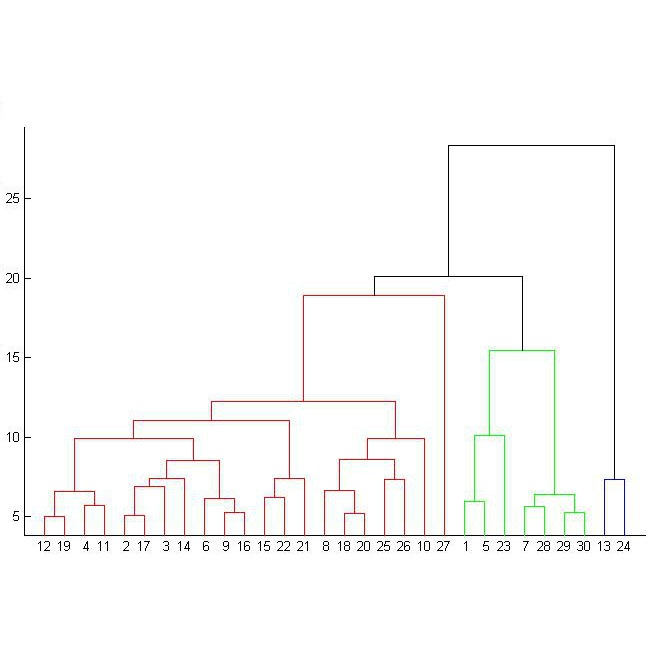

In this paper we offer a new perspective on the well-established agglomerative clustering algorithm, focusing on recovery of hierarchical structure. We recommend a simple variant of the standard algorithm, in which clusters are merged by maximum average dot product and not, for example, by minimum distance or within-cluster variance. We demonstrate that the tree output by this algorithm provides a bona fide estimate of generative hierarchical structure in data, under a generic probabilistic graphical model. The key technical innovations are to understand how hierarchical information in this model translates into tree geometry which can be recovered from data, and to characterise the benefits of simultaneously growing sample size and data dimension. We demonstrate superior tree recovery performance with real data over existing approaches such as UPGMA, Ward's method, and HDBSCAN.
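The merge rule described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names `dot_product_linkage` and `average_dot` are hypothetical, the code is a naive greedy loop in pure Python, and it assumes that "average dot product" means the mean of the pairwise dot products between members of two clusters.

```python
from itertools import combinations


def dot(u, v):
    """Plain dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def average_dot(points, a, b):
    """Mean of <x_i, x_j> over all i in cluster a and j in cluster b."""
    total = sum(dot(points[i], points[j]) for i in a for j in b)
    return total / (len(a) * len(b))


def dot_product_linkage(points):
    """Greedy agglomerative clustering that merges by maximum average dot product.

    Clusters are tuples of point indices. Returns the merge history as a list
    of (cluster_a, cluster_b, affinity) tuples, which encodes the tree.
    """
    clusters = [(i,) for i in range(len(points))]
    merges = []
    while len(clusters) > 1:
        # Pick the pair of clusters with the largest average dot product.
        a, b = max(
            combinations(clusters, 2),
            key=lambda pair: average_dot(points, pair[0], pair[1]),
        )
        affinity = average_dot(points, a, b)
        clusters = [c for c in clusters if c not in (a, b)]
        clusters.append(tuple(sorted(a + b)))
        merges.append((a, b, affinity))
    return merges
```

Calling `dot_product_linkage(points)` on a list of equal-length numeric tuples returns one merge per internal node of the tree. Unlike distance-based linkage, the affinity is maximised rather than minimised, and it is sensitive to the norms of the points as well as their directions.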

Related content

The HTTPS protocol has enforced a higher level of robustness to several attacks; however, it is not easy to set up the required certificates on intranets, nor is it effective when server confidentiality cannot be relied upon, as with cloud services, or when the server could be compromised. A simple method is proposed to encrypt the data on the client side, using Web Assembly. It never transfers data to the server as clear text. Searching fields in the server is made possible by an encoding scheme that ensures a stable prefix correspondence between ciphertext and plaintext. The method has been developed for a semantic medical database, and allows accessing personal data using an additional password while maintaining non-sensitive information in clear form. Web Assembly has been chosen to guarantee the fast and efficient execution of encrypting/decrypting operations and because it produces modules that are very robust against reverse engineering. The code is available at //github.com/mfalda/client-encdec.

Twitter, as one of the most popular social networks, offers a means for communication and online discourse, which unfortunately has been the target of bots and fake accounts, leading to the manipulation and spreading of false information. To this end, we gather a challenging, multilingual dataset of social discourse on Twitter, originating from 9M users regarding the recent Russo-Ukrainian war, in order to detect the bot accounts and the conversations involving them. We collect the ground truth for our dataset through the Twitter API suspended accounts collection, containing approximately 343K bot accounts and 8M normal users. Additionally, we use a dataset provided by Botometer-V3 with 1,777 Varol, 483 German accounts, and 1,321 US accounts. Besides the publicly available datasets, we also collect two independent datasets around popular discussion topics of the 2022 energy crisis and the 2022 conspiracy discussions. Both datasets were labeled according to the Twitter suspension mechanism. We build a novel ML model for bot detection using the state-of-the-art XGBoost model. We combine the model with a high volume of tweets labeled according to the Twitter suspension mechanism ground truth. This requires a limited set of profile features, allowing labeling of the dataset in different time periods from the collection, as it is independent of the Twitter API. In comparison with Botometer, our methodology achieves an average 11% higher ROC-AUC score over two real-case scenario datasets.

This study proposes a unified optimization-based planning framework that addresses the precise and efficient navigation of a controlled object within a constrained region, while contending with obstacles. We focus on handling two collision avoidance problems, i.e., the object not colliding with obstacles and not colliding with boundaries of the constrained region. The object or obstacle is denoted as a union of convex polytopes and ellipsoids, and the constrained region is denoted as an intersection of such convex sets. Using these representations, collision avoidance can be approached by formulating explicit constraints that separate two convex sets, or ensure that a convex set is contained in another convex set, referred to as separating constraints and containing constraints, respectively. We propose to use the hyperplane separation theorem to formulate differentiable separating constraints, and utilize the S-procedure and geometrical methods to formulate smooth containing constraints. We state that compared to the state of the art, the proposed formulations allow a considerable reduction in nonlinear program size and geometry-based initialization in auxiliary variables used to formulate collision avoidance constraints. Finally, the efficacy of the proposed unified planning framework is evaluated in two contexts, autonomous parking in tractor-trailer vehicles and overtaking on curved lanes. The results in both cases exhibit an improved computational performance compared to existing methods.
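As a rough illustration of a separating constraint based on the hyperplane separation theorem, consider two convex polytopes given by their vertices. The symbols below ($v_i$, $w_j$, $a$, $c$, $\varepsilon$) are assumptions made for this sketch and are not taken from the paper, whose exact formulation may differ.

$$
\begin{aligned}
& a^{\top} v_i \le c, && i = 1, \dots, m, \\
& a^{\top} w_j \ge c + \varepsilon, && j = 1, \dots, k, \\
& \lVert a \rVert_2^2 = 1 .
\end{aligned}
$$

Here $v_1, \dots, v_m$ are the vertices of the object, $w_1, \dots, w_k$ those of an obstacle, and $(a, c)$ are auxiliary decision variables describing the hyperplane $\{x : a^{\top} x = c\}$. If these constraints hold for some margin $\varepsilon > 0$, every convex combination of the $v_i$ lies on one side of the hyperplane and every convex combination of the $w_j$ lies strictly on the other, so the two polytopes cannot intersect. All constraints are differentiable in the vertex positions and in $(a, c)$.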

With the rise of large language models (LLMs) and concerns about potential misuse, watermarks for generative LLMs have recently attracted much attention. An important aspect of such watermarks is the trade-off between their identifiability and their impact on the quality of the generated text. This paper introduces a systematic approach to this trade-off in terms of a multi-objective optimization problem. For a large class of robust, efficient watermarks, the associated Pareto optimal solutions are identified and shown to outperform the currently default watermark.

The aim of the work presented in this paper is to develop and evaluate an integrated system that provides automated lecture style evaluation, allowing teachers to get instant feedback on the quality of their lecturing style. The proposed system aims to promote improvement of lecture quality, which could improve the overall student learning experience. The proposed application utilizes specific measurable biometric characteristics, such as facial expressions, body activity, speech rate and intonation, hand movement, and facial pose, extracted from a video showing the lecturer from the audience point of view. Measurable biometric features extracted during a lecture are combined to provide teachers with a score reflecting lecture style quality both at frame rate and by providing lecture quality metrics for the whole lecture. The acceptance of the proposed lecture style evaluation system was evaluated by chief education officers, teachers and students regarding the functionality, usefulness of the application, and possible improvements. The results indicate that participants found the application novel and useful in providing automated feedback regarding lecture quality. Furthermore, the performance of the proposed system was compared with the performance of humans in the task of lecture style evaluation. Results indicate that the proposed system not only achieves similar performance to human observers, but in some cases outperforms them.

We develop new tools to study landscapes in nonconvex optimization. Given one optimization problem, we pair it with another by smoothly parametrizing the domain. This is either for practical purposes (e.g., to use smooth optimization algorithms with good guarantees) or for theoretical purposes (e.g., to reveal that the landscape satisfies a strict saddle property). In both cases, the central question is: how do the landscapes of the two problems relate? More precisely: how do desirable points such as local minima and critical points in one problem relate to those in the other problem? A key finding in this paper is that these relations are often determined by the parametrization itself, and are almost entirely independent of the cost function. Accordingly, we introduce a general framework to study parametrizations by their effect on landscapes. The framework enables us to obtain new guarantees for an array of problems, some of which were previously treated on a case-by-case basis in the literature. Applications include: optimizing low-rank matrices and tensors through factorizations; solving semidefinite programs via the Burer-Monteiro approach; training neural networks by optimizing their weights and biases; and quotienting out symmetries.
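The Burer-Monteiro approach mentioned above is a standard instance of pairing one problem with another through a smooth parametrization. The notation below ($C$, $A_i$, $b_i$, $\varphi$, $p$) is chosen for this sketch and is not taken from the paper. A semidefinite program over symmetric $n \times n$ matrices,

$$
\min_{X \succeq 0} \; \langle C, X \rangle
\quad \text{subject to} \quad \langle A_i, X \rangle = b_i, \quad i = 1, \dots, m,
$$

is paired with a problem over factors $Y \in \mathbb{R}^{n \times p}$ through the map $\varphi(Y) = Y Y^{\top}$:

$$
\min_{Y \in \mathbb{R}^{n \times p}} \; \langle C, Y Y^{\top} \rangle
\quad \text{subject to} \quad \langle A_i, Y Y^{\top} \rangle = b_i, \quad i = 1, \dots, m .
$$

The image of $\varphi$ is the set of positive semidefinite matrices of rank at most $p$, so the second problem is smooth in $Y$ but no longer convex. The landscape question is then how local minima and critical points in $Y$ relate to those in $X = \varphi(Y)$.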

In this note, we present an abstract approach to study asymptotic orders for adaptive approximations with respect to a monotone set function $\mathfrak{J}$ defined on dyadic cubes. We determine the exact upper order in terms of the critical value of the corresponding $\mathfrak{J}$-partition function, and we are able to provide upper and lower bounds in terms of fractal-geometric quantities. With properly chosen $\mathfrak{J}$, our new approach has applications in many different areas of mathematics, including the spectral theory of Krein-Feller operators, quantization dimensions of compactly supported probability measures, and the exact asymptotic order for Kolmogorov, Gelfand and linear widths for Sobolev embeddings into $L_{\mu}^p$-spaces.

In this paper we investigate the notion of legibility in sequential decision tasks under uncertainty. Previous works that extend legibility to scenarios beyond robot motion either focus on deterministic settings or are computationally too expensive. Our proposed approach, dubbed PoL-MDP, is able to handle uncertainty while remaining computationally tractable. We establish the advantages of our approach against state-of-the-art approaches in several simulated scenarios of different complexity. We also showcase the use of our legible policies as demonstrations for an inverse reinforcement learning agent, establishing their superiority against the commonly used demonstrations based on the optimal policy. Finally, we assess the legibility of our computed policies through a user study where people are asked to infer the goal of a mobile robot following a legible policy by observing its actions.

In this paper, we introduce a joint central limit theorem (CLT) for specific bilinear forms, encompassing the resolvent of the sample covariance matrix under an elliptical distribution. Through an exhaustive exploration of our theoretical findings, we unveil a phase transition in the limiting parameters that relies on the moments of the random radius in our derived CLT. Subsequently, we employ the established CLT to address two statistical challenges under elliptical distribution. The first task involves deriving the CLT for eigenvector statistics of the sample covariance matrix. The second task aims to ascertain the limiting properties of the spiked sample eigenvalues under a general spiked model. As a byproduct, we discover that the eigenmatrix of the sample covariance matrix under a light-tailed elliptical distribution satisfies the necessary conditions for asymptotic Haar, thereby extending the Haar conjecture to broader distributions.

In this paper we develop a novel neural network model for predicting the implied volatility surface. Prior financial domain knowledge is taken into account. A new activation function that incorporates the volatility smile is proposed, which is used for the hidden nodes that process the underlying asset price. In addition, financial conditions, such as the absence of arbitrage, the boundaries and the asymptotic slope, are embedded into the loss function. This is one of the very first studies to discuss a methodological framework that incorporates prior financial domain knowledge into neural network architecture design and model training. The proposed model outperforms the benchmarked models on option data on the S&P 500 index over 20 years. More importantly, the domain knowledge is satisfied empirically, showing that the model is consistent with the existing financial theories and conditions related to the implied volatility surface.